This content originally appeared on Level Up Coding - Medium and was authored by Matt Wiater

In this article, we explore MiniSync, an automated file backup solution (ahem, a personal home project) designed to synchronize files from a Windows folder to a MinIO cluster running on Raspberry Pi SBCs. We’ll dive into the application’s purpose, its major components, and detailed implementation, including directory monitoring, file synchronization, and periodic full syncs. Full code available here.

While theoretical scenarios can be fascinating — especially when exploring programming languages — sometimes the best way to learn is by addressing a real-world need. Instead of endlessly meandering through language features and hypothetical implementations, working on a personal project with a clear, finite goal can provide a focused learning experience without the external pressures of deadlines or budgets.

When I first started as a software engineer, personal projects felt like a break from work: no project manager, no timeline, no budget (well, kind of), and no meetings. I would often jump into rapid prototyping without much thought to planning or project management. While this approach can be liberating, it also meant that I often struggled near the end, wishing I had spent more time setting clear objectives and breaking down the project into manageable milestones.

I’ve since learned that taking even an hour to define the minimum viable product (MVP) and identify key milestones can save you significant time and effort later. This approach not only makes the development process smoother but also helps you stay focused on the end goal without getting bogged down in unnecessary details.

MiniSync: Automated Backup to a Distributed Cluster

Inspired by the convenience of Google Drive folder synchronization, I wanted to create a similar solution that operates locally, providing the same level of automation and reliability without relying on third-party cloud services. I started by asking myself some critical questions:

- How do I efficiently monitor a folder for changes? Should synchronization occur on any file change, or should I implement periodic sweeps?

- Should synchronization be unidirectional (source -> destination only) or bidirectional (source <-> destination)?

- Where should the files be backed up?

- How do I create a Go application that runs as a Windows Service?

- How can I operate this Windows service with minimal dependencies, such as defining absolute paths to configuration files or environment variables?

Answering these questions led me to develop MiniSync, an application that automatically backs up files on my network to a distributed MinIO cluster, with the application running as a Windows service.

Goals for this project

Based on the initial questions, I set the following goals for this project:

- Run as a Windows Service: Ensure that the Go app can be easily installed, started, stopped, and uninstalled as a Windows service.

- Directory Monitoring: Specify a directory to monitor for changes, including renaming files and content updates. The system should also support nested files and folders.

- Redundant Synchronization: Implement a periodic timer to check for synchronization needs, handling edge cases like undetected changes or deletions in the destination location.

- Simplify MinIO Cluster Setup: Build the MinIO destination cluster with minimal fault tolerance, balancing simplicity and reliability.

- Minimize Maintenance: Design the application to be robust and require minimal ongoing maintenance.

The overall goal was to build a simple, reliable solution that could run with minimal intervention. Overengineering often sacrifices utility for complexity, so I focused on addressing the core problem directly without introducing unnecessary complications.

Caveats

Although my setup is specifically tailored to my network needs, the principles outlined here can be applied to various configurations. I had a few Raspberry Pi 4 SBCs and some SanDisk USB storage drives lying around, so I decided to repurpose them for this project. Having previously used a distributed MinIO cluster for S3-compatible backups, it felt like a natural choice for my home setup. My examples and code will reference a distributed MinIO cluster running on four networked Raspberry Pis. My setup documentation for my Pi cluster is here.

The Development Process

I considered a few packaging options for this application. The truth is, the initial concept worked perfectly for my needs: a simple command-line application to install, start, stop, and uninstall the service, all “easily” configured by a config.json file.

The later iterations involving an installer and a GUI for configuration and management were mostly driven by curiosity: What is involved in creating an application with a minimal interface that can install and manage a Windows service?

Answering this question turned out to be quite a long, but eventually fruitful, exploration. When I look back and weigh the costs and benefits, the supplementary nice-to-haves took roughly 400% longer than developing the initial app. Totally unacceptable! Except that all of the extra time I invested wasn’t random meandering and debugging; it was learning new things that I’ll always have in my pocket going forward. So, I’ll consider any extraordinary self-overages a wash.

Part 1: MiniSyncService.exe

The Spawn of MiniSync.exe? (An Aside)

It is important to note that there are two distinct but dependent applications within this codebase:

- MiniSyncService.exe: This is the core service that is installed in Windows that takes care of the actual file synchronization.

- MiniSync.exe: This is the GUI wrapper for configuration and control of the MiniSyncService.exe service.

For these two binaries to work together, you must first compile MiniSyncService.exe. Subsequently, when you compile MiniSync.exe, the compiled MiniSyncService.exe is actually embedded into the application. When you run MiniSync.exe for the first time, it actually saves out the MiniSyncService.exe binary in the same directory. This way the service binary is in a consistent location for the management binary to find.

For example, first build the MiniSyncService.exe binary:

GOOS=windows GOARCH=amd64 go build -o bin/MiniSyncService.exe minisyncService/minisyncService.go

Then build the MiniSync.exe binary, which embeds the MiniSyncService.exe binary:

wails build

The result is a single distributable binary containing both pieces of the application: MiniSync.exe
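One way to get this "embed, then save on first run" behavior in Go is the standard embed package. The sketch below is a guess at the general shape, not MiniSync's actual code: the helper name extractIfMissing and the stand-in byte slice are hypothetical, and in a real build the bytes would come from a //go:embed directive pointing at the compiled service binary.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// serviceBinary stands in for the embedded executable. In a real build it
// would be populated by a directive such as:
//
//	//go:embed bin/MiniSyncService.exe
//	var serviceBinary []byte
//
// (the path is an assumption; this also requires importing _ "embed").
var serviceBinary = []byte("stub service binary")

// extractIfMissing writes data to dir/name unless the file already exists.
// It returns the full path and whether a new file was written.
func extractIfMissing(dir, name string, data []byte) (string, bool, error) {
	target := filepath.Join(dir, name)
	if _, err := os.Stat(target); err == nil {
		return target, false, nil // already extracted on an earlier run
	} else if !os.IsNotExist(err) {
		return "", false, err
	}
	if err := os.WriteFile(target, data, 0o755); err != nil {
		return "", false, err
	}
	return target, true, nil
}

// demoExtract runs the extraction twice in a temporary directory and reports
// whether each call wrote the file.
func demoExtract() (first, second bool, err error) {
	dir, err := os.MkdirTemp("", "minisync")
	if err != nil {
		return false, false, err
	}
	defer os.RemoveAll(dir)
	if _, first, err = extractIfMissing(dir, "MiniSyncService.exe", serviceBinary); err != nil {
		return false, false, err
	}
	_, second, err = extractIfMissing(dir, "MiniSyncService.exe", serviceBinary)
	return first, second, err
}

func main() {
	first, second, err := demoExtract()
	fmt.Println(first, second, err)
}
```

In a real application the target directory would most likely come from os.Executable rather than a temporary directory, so that the service binary lands next to the management binary.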

Why a Windows Service?

I needed a solution that could run in the background, continuously monitoring specific directories and syncing files to MinIO without manual intervention. A Windows service was the perfect choice, as it can start automatically with the system, run under a dedicated user account, and operate without requiring a user session.

In the minisyncService directory, I developed the core logic for the Windows service. Here's how I approached it:

Installation and Service Management (minisyncService.go):

The minisyncService.go file is the core of the MiniSync application, responsible for managing the lifecycle of the Windows service. The service is built around the myService struct, which interacts with the Windows Service Control Manager (SCM) to handle various commands such as start, stop, pause, and shutdown.

Core Components

- Installation and Service Management: As described above, minisyncService.go manages the service lifecycle through the myService struct, handling commands like start, stop, pause, and shutdown. It uses Go's golang.org/x/sys/windows/svc package to register the service with the SCM and process its commands. The official example I used as the base for my service is here.

- Directory Monitoring: The monitor.go file is responsible for responding to file system events like create, delete, rename, etc. When these events are triggered, we update the MinIO backing service accordingly. The MonitorDirectory function specifies which directory we are monitoring for file system events. Basically, it watches for changes and creates events we can react to in the handleEvent function:

    import (
        "log"
        "os"
        "path/filepath"

        "github.com/fsnotify/fsnotify"
    )

    // MonitorDirectory monitors the specified directory for changes and synchronizes those changes
    // to MinIO. It watches for changes in both the directory and its subdirectories, responding to
    // events such as file creation, modification, deletion, and renaming.
    func MonitorDirectory(sourceFolder string, minioClient *MinioClient) {
        watcher, err := fsnotify.NewWatcher()
        if err != nil {
            log.Fatal(err)
        }
        defer watcher.Close()

        // fsnotify does not watch recursively, so register every existing subdirectory.
        err = filepath.Walk(sourceFolder, func(path string, info os.FileInfo, err error) error {
            if err != nil {
                return err
            }
            if info.IsDir() {
                err = watcher.Add(path)
                if err != nil {
                    log.Fatal(err)
                }
            }
            return nil
        })
        if err != nil {
            log.Fatal(err)
        }

        // Dispatch events until the watcher is closed.
        for {
            select {
            case event, ok := <-watcher.Events:
                if !ok {
                    return
                }
                handleEvent(sourceFolder, event, watcher, minioClient)
            case err, ok := <-watcher.Errors:
                if !ok {
                    return
                }
                log.Println("Error:", err)
            }
        }
    }

    // handleEvent processes file system events and performs the appropriate MinIO operations
    // based on the type of event. It handles file creation, modification, deletion, and renaming
    // while preserving the directory structure in MinIO.
    func handleEvent(sourceFolder string, event fsnotify.Event, watcher *fsnotify.Watcher, minioClient *MinioClient) {
        relativePath, err := filepath.Rel(sourceFolder, event.Name)
        if err != nil {
            log.Printf("Failed to get relative path for %s: %v", event.Name, err)
            return
        }

        switch {
        case event.Op&fsnotify.Create == fsnotify.Create:
            log.Println("Created file:", event.Name)
            if !isDir(event.Name) {
                err := minioClient.CreateFile(relativePath, event.Name)
                if err != nil {
                    log.Printf("Failed to upload file: %v", err)
                }
            } else {
                // New directories must be added to the watcher explicitly.
                err = watcher.Add(event.Name)
                if err != nil {
                    log.Printf("Failed to watch new directory: %v", err)
                }
            }

        case event.Op&fsnotify.Write == fsnotify.Write:
            log.Println("Modified file:", event.Name)
            if !isDir(event.Name) {
                err := minioClient.UpdateFile(relativePath, event.Name)
                if err != nil {
                    log.Printf("Failed to upload file: %v", err)
                }
            }

        case event.Op&fsnotify.Remove == fsnotify.Remove:
            log.Println("Deleted file or directory:", event.Name)
            // Caveat: the path is already gone when this event arrives. If isDir works by
            // stat-ing the path, it reports false here and the directory branch is skipped;
            // the periodic full sync then removes any leftover remote objects.
            if !isDir(event.Name) {
                err := minioClient.DeleteFile(relativePath)
                if err != nil {
                    log.Printf("Failed to delete file: %v", err)
                }
            } else {
                // Handle directory deletion by deleting all files under that directory in MinIO
                err := minioClient.DeleteDirectory(relativePath)
                if err != nil {
                    log.Printf("Failed to delete directory %s in MinIO: %v", relativePath, err)
                }
            }

        case event.Op&fsnotify.Rename == fsnotify.Rename:
            log.Println("Renamed file or directory:", event.Name)
            // The same caveat applies: event.Name is the old path, which no longer exists.
            if !isDir(event.Name) {
                err := minioClient.DeleteFile(relativePath)
                if err != nil {
                    log.Printf("Failed to delete old file after rename: %v", err)
                }
                // Note: In this simplified version, we assume the new file will be handled separately by a Create event.
            } else {
                // Handle directory rename by deleting the old directory and expecting the new one to be created
                err := minioClient.DeleteDirectory(relativePath)
                if err != nil {
                    log.Printf("Failed to delete old directory after rename: %v", err)
                }
                // Note: The new directory should trigger a Create event
            }
        }
    }
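handleEvent passes the result of filepath.Rel straight to MinIO as the object key. On Windows that result uses backslashes, while S3-style keys conventionally use forward slashes as the prefix delimiter. Whether MiniSync normalizes keys elsewhere is not shown here, so treat the following as an optional sketch; objectKey is a hypothetical helper, not part of the project.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// objectKey converts a local path under root into a bucket key that uses
// forward slashes on every platform.
func objectKey(root, path string) (string, error) {
	rel, err := filepath.Rel(root, path)
	if err != nil {
		return "", err
	}
	// ToSlash replaces the OS path separator with "/"; on Linux and macOS
	// it leaves the path unchanged.
	return filepath.ToSlash(rel), nil
}

func main() {
	root := filepath.Join("data", "backup")
	file := filepath.Join(root, "docs", "notes.txt")
	key, err := objectKey(root, file)
	fmt.Println(key, err)
}
```

Going the other way, filepath.FromSlash turns a key back into a local relative path when comparing remote objects against the file system.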

- Timer Interval for Synchronization: A critical aspect of the service’s operation is the use of a timer interval to trigger periodic tasks. The timer is set up during the service’s initialization and runs continuously while the service is active. This interval dictates how frequently the service checks the monitored directories for changes, complementing real-time monitoring by providing a fallback mechanism. If the file system monitor misses an event, this mechanism compares the local file system with the remote system and synchronizes them in a single direction: from local to remote. Bidirectional synchronization could be added, but I only needed this application to act as a mirror to a backup service.

    // Requires: context, log, os, path/filepath, time, and github.com/minio/minio-go/v7.
    ticker := time.NewTicker(time.Duration(backupFrequencySeconds) * time.Second)

    for range ticker.C {
        elog.Info(1, "Starting full sync cycle")

        // Pass 1: upload local files that are missing or different on the remote.
        err := filepath.Walk(MINISYNC_BACKUPFOLDER, func(path string, info os.FileInfo, err error) error {
            if err != nil {
                return err
            }

            relativePath, err := filepath.Rel(MINISYNC_BACKUPFOLDER, path)
            if err != nil {
                log.Printf("Failed to get relative path for %s: %v", path, err)
                return err
            }

            if info.IsDir() {
                log.Printf("Found directory: %s", relativePath)
                return nil
            }

            // Check if the file exists on MinIO
            remoteObject, err := minioClient.Client.StatObject(context.Background(), minioClient.BucketName, relativePath, minio.StatObjectOptions{})
            if err != nil {
                if minio.ToErrorResponse(err).Code == "NoSuchKey" {
                    // File does not exist on remote, upload it
                    log.Printf("Uploading new file %s to MinIO", relativePath)
                    err = minioClient.CreateFile(relativePath, path)
                    if err != nil {
                        log.Printf("Failed to upload file %s: %v", path, err)
                        return err
                    }
                } else {
                    // Some other error occurred
                    log.Printf("Failed to stat remote file %s: %v", relativePath, err)
                    return err
                }
            } else {
                // File exists on remote, compare it with the local file
                if compareFiles(path, &remoteObject) {
                    // Files are identical, do nothing
                    log.Printf("File %s is identical on local and remote, skipping", relativePath)
                } else {
                    // Files are not identical, update the remote file
                    log.Printf("Updating file %s on MinIO", relativePath)
                    err = minioClient.UpdateFile(relativePath, path)
                    if err != nil {
                        log.Printf("Failed to update file %s: %v", path, err)
                        return err
                    }
                }
            }
            return nil
        })
        if err != nil {
            log.Printf("Failed to walk directory: %v", err)
            elog.Error(1, "Failed to walk directory")
        } else {
            log.Println("Full sync cycle completed")
            elog.Info(1, "Full sync cycle completed")
        }

        // Pass 2: check for files on the remote that don't exist locally and delete them.
        objectCh := minioClient.Client.ListObjects(context.Background(), minioClient.BucketName, minio.ListObjectsOptions{
            Prefix:    "", // Change prefix if you want to limit the scope
            Recursive: true,
        })
        for object := range objectCh {
            if object.Err != nil {
                log.Printf("Error listing objects: %v", object.Err)
                continue
            }

            localPath := filepath.Join(MINISYNC_BACKUPFOLDER, object.Key)
            if _, err := os.Stat(localPath); os.IsNotExist(err) {
                // File exists on remote but not locally, delete it
                log.Printf("Deleting remote file %s that does not exist locally", object.Key)
                err = minioClient.DeleteFile(object.Key)
                if err != nil {
                    log.Printf("Failed to delete remote file %s: %v", object.Key, err)
                }
            }
        }
    }
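The loop above relies on compareFiles, which is not shown in this article. One plausible and cheap heuristic is to treat a file as unchanged when its size matches the remote object and it has not been modified since the upload. The sketch below illustrates that idea only; the real compareFiles may work differently, and remoteInfo is a hypothetical stand-in for the Size and LastModified fields of minio.ObjectInfo.

```go
package main

import (
	"fmt"
	"time"
)

// remoteInfo is a stand-in for the two minio.ObjectInfo fields used here.
type remoteInfo struct {
	Size         int64
	LastModified time.Time
}

// unchanged reports whether a local file looks identical to the remote
// object: same size, and not modified after the object was uploaded.
func unchanged(localSize int64, localModTime time.Time, remote remoteInfo) bool {
	if localSize != remote.Size {
		return false
	}
	return !localModTime.After(remote.LastModified)
}

// demoCompare exercises the three interesting cases with fixed timestamps.
func demoCompare() (same, edited, resized bool) {
	uploaded := time.Date(2024, 8, 1, 12, 0, 0, 0, time.UTC)
	remote := remoteInfo{Size: 100, LastModified: uploaded}
	same = unchanged(100, uploaded.Add(-time.Hour), remote)    // untouched since upload
	edited = unchanged(100, uploaded.Add(time.Hour), remote)   // modified after upload
	resized = unchanged(120, uploaded.Add(-time.Hour), remote) // size differs
	return
}

func main() {
	fmt.Println(demoCompare())
}
```

This is a heuristic rather than a content check. Comparing a checksum would be stricter, though an object's ETag only equals the file's MD5 for single-part uploads, so it is not a drop-in replacement.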

- MinIO Communication: The minio.go file manages interactions with the MinIO backend, including uploading files, checking for existing objects, and managing buckets. The Go MinIO client library made this integration straightforward and reliable, acting as the bridge between the local file system and the remote MinIO server. To keep things dead simple, the client boils down to two underlying operations: UploadFile and DeleteFile. CreateFile and UpdateFile are thin wrappers around UploadFile, and RenameFile simply combines an upload with a delete. For our unidirectional sync process, we only care about adding or removing files on the remote backing service. The only oddity here is renaming files. If a file is renamed, the original file (the file before it was renamed) is merely deleted on the remote destination. Instead of creating a new rename action, the Walk in the ticker loop above will see that there is a new file on the local file system (the file after it was renamed) and simply add the file that doesn’t exist on the remote. Since the delete is triggered immediately on a file rename, and re-adding the renamed file is triggered by the periodic loop, a renamed file can be missing from the remote backup for up to backupFrequencySeconds, currently set to 30 seconds. This delay is completely fine for what I’m using this for.

    import (
        "context"
        "log"

        "github.com/minio/minio-go/v7"
        "github.com/minio/minio-go/v7/pkg/credentials"
    )

    // MinioClient wraps the MinIO client and provides additional context for operations on a specific bucket.
    // It includes the MinIO client instance and the name of the bucket being operated on.
    type MinioClient struct {
        Client     *minio.Client // Client is the MinIO client instance used to interact with MinIO.
        BucketName string        // BucketName is the name of the bucket where operations are performed.
    }

    // NewMinioClient creates a new MinioClient with the specified endpoint, access key, secret key, and bucket name.
    // If the specified bucket does not exist, it attempts to create it. If the bucket already exists, it logs the information.
    func NewMinioClient(endpoint, accessKey, secretKey, bucketName string) (*MinioClient, error) {
        log.Printf("Creating MinIO client with endpoint: %s", endpoint)

        minioClient, err := minio.New(endpoint, &minio.Options{
            Creds:  credentials.NewStaticV4(accessKey, secretKey, ""),
            Secure: false, // plain HTTP on the local network
        })
        if err != nil {
            log.Printf("Error creating MinIO client with endpoint %s: %v", endpoint, err)
            return nil, err
        }

        // Try to create the bucket; if that fails, check whether it already exists.
        err = minioClient.MakeBucket(context.Background(), bucketName, minio.MakeBucketOptions{})
        if err != nil {
            exists, errBucketExists := minioClient.BucketExists(context.Background(), bucketName)
            if errBucketExists == nil && exists {
                log.Printf("We already own %s\n", bucketName)
            } else {
                return nil, err
            }
        }

        return &MinioClient{Client: minioClient, BucketName: bucketName}, nil
    }

    // CreateFile uploads a new file to MinIO, effectively the same as uploading a file.
    func (c *MinioClient) CreateFile(relativePath, filePath string) error {
        return c.UploadFile(relativePath, filePath)
    }

    // UpdateFile updates an existing file in MinIO by re-uploading it.
    func (c *MinioClient) UpdateFile(relativePath, filePath string) error {
        return c.UploadFile(relativePath, filePath)
    }

    // RenameFile renames a file in MinIO by uploading it to the new path and deleting the old file.
    func (c *MinioClient) RenameFile(oldRelativePath, newRelativePath, newFilePath string) error {
        // Upload the file to the new path
        err := c.UploadFile(newRelativePath, newFilePath)
        if err != nil {
            return err
        }

        // Delete the file from the old path
        return c.DeleteFile(oldRelativePath)
    }

    // UploadFile uploads a file to the specified bucket in MinIO, preserving the directory structure.
    // The relativePath parameter specifies the path within the bucket, and filePath is the local file path to be uploaded.
    func (c *MinioClient) UploadFile(relativePath, filePath string) error {
        _, err := c.Client.FPutObject(context.Background(), c.BucketName, relativePath, filePath, minio.PutObjectOptions{})
        return err
    }

    // DeleteFile deletes a file from the specified bucket in MinIO.
    // The relativePath parameter specifies the path within the bucket to the file to be deleted.
    func (c *MinioClient) DeleteFile(relativePath string) error {
        return c.Client.RemoveObject(context.Background(), c.BucketName, relativePath, minio.RemoveObjectOptions{})
    }
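handleEvent also calls minioClient.DeleteDirectory, which is not included in the excerpt above. Object stores have no real directories, so deleting a "directory" means removing every key beneath a prefix. The sketch below covers only the key-selection step, under the assumption that keys use forward slashes; keysUnderDir is a hypothetical helper. In a real implementation the keys would come from ListObjects with a Prefix, and each match would be passed to RemoveObject.

```go
package main

import (
	"fmt"
	"strings"
)

// keysUnderDir returns the keys that live inside dir, treating dir as a
// folder-style prefix. The trailing slash keeps "docs" from also matching
// sibling prefixes such as "docs2/".
func keysUnderDir(keys []string, dir string) []string {
	prefix := strings.TrimSuffix(dir, "/") + "/"
	var matched []string
	for _, k := range keys {
		if strings.HasPrefix(k, prefix) {
			matched = append(matched, k)
		}
	}
	return matched
}

func main() {
	keys := []string{"docs/a.txt", "docs/sub/b.txt", "docs2/c.txt", "readme.md"}
	fmt.Println(keysUnderDir(keys, "docs"))
}
```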

- Status Reporting: The status.go file provides a mechanism to report the current status of the service back to the Wails frontend or other components. It exposes the service's state, such as "Running," "Paused," or "Stopped," which can then be displayed in the user interface. This file plays a key role in keeping the frontend updated with real-time information about the service, allowing users to make informed decisions about managing the service. The code below runs the built-in Windows command sc query MiniSync and parses its output to get the current status of the MiniSync service.

    import (
        "bytes"
        "fmt"
        "os/exec"
        "strings"
    )

    // ServiceStatus represents the status of a service as a string.
    type ServiceStatus string

    const (
        // StatusNotInstalled indicates that the service is not installed on the system.
        StatusNotInstalled ServiceStatus = "NotInstalled"
        // StatusStopped indicates that the service is installed but currently stopped.
        StatusStopped ServiceStatus = "Stopped"
        // StatusRunning indicates that the service is currently running.
        StatusRunning ServiceStatus = "Running"
        // StatusPaused indicates that the service is currently paused.
        StatusPaused ServiceStatus = "Paused"
    )

    // GetServiceStatus checks the status of a Windows service by executing the `sc query` command
    // and parsing its output. It returns a string representing the service's status, which can be
    // one of the predefined statuses (Running, Stopped, Paused, or NotInstalled).
    func GetServiceStatus(serviceName string) (string, error) {
        cmd := exec.Command("sc", "query", serviceName)
        var out bytes.Buffer
        cmd.Stdout = &out
        cmd.Stderr = &out

        err := cmd.Run()
        if err != nil {
            if strings.Contains(out.String(), "The specified service does not exist as an installed service.") {
                return string(StatusNotInstalled), nil
            }
            return "", err
        }

        // sc pads its columns with runs of spaces, so collapse all whitespace
        // to single spaces before matching the STATE line.
        output := strings.Join(strings.Fields(out.String()), " ")
        if strings.Contains(output, "STATE : 4 RUNNING") {
            return string(StatusRunning), nil
        } else if strings.Contains(output, "STATE : 1 STOPPED") {
            return string(StatusStopped), nil
        } else if strings.Contains(output, "STATE : 7 PAUSED") {
            return string(StatusPaused), nil
        }

        return "", fmt.Errorf("unable to determine service status")
    }
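GetServiceStatus recognizes three states, but a Windows service can also report transitional states while it starts, stops, pauses, or continues, and those currently fall through to the "unable to determine" error. As a sketch of how the parsing could cover them, the example below reads the numeric code from the STATE line and maps it to a label. The codes are the standard Windows service state values; parseState and the pending label names are hypothetical, and the pattern assumes the English STATE label.

```go
package main

import (
	"fmt"
	"regexp"
)

// stateLine matches the STATE row of `sc query` output and captures the code.
var stateLine = regexp.MustCompile(`STATE\s*:\s*(\d+)`)

// stateNames maps Windows service state codes to labels.
var stateNames = map[string]string{
	"1": "Stopped",
	"2": "StartPending",
	"3": "StopPending",
	"4": "Running",
	"5": "ContinuePending",
	"6": "PausePending",
	"7": "Paused",
}

// parseState extracts the service state label from raw `sc query` output.
func parseState(output string) (string, error) {
	m := stateLine.FindStringSubmatch(output)
	if m == nil {
		return "", fmt.Errorf("no STATE line found")
	}
	name, ok := stateNames[m[1]]
	if !ok {
		return "", fmt.Errorf("unknown state code %s", m[1])
	}
	return name, nil
}

func main() {
	sample := "SERVICE_NAME: MiniSync\n" +
		"        TYPE               : 10  WIN32_OWN_PROCESS\n" +
		"        STATE              : 3  STOP_PENDING\n"
	fmt.Println(parseState(sample))
}
```

Matching on the number rather than the name also tolerates any amount of column padding in the command output.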

Part 2: MinIO Cluster

The MinIO cluster serves as the destination for the backed-up files, providing a distributed and scalable object storage solution. MinIO is an open-source, high-performance object storage system compatible with Amazon S3, making it an excellent choice for building private cloud storage infrastructures. Setting up a MinIO cluster involves deploying multiple MinIO server instances across different nodes, which then work together to store and manage data redundantly. This setup ensures high availability and durability, as data is distributed and replicated across the nodes. For details on how all of the pieces work to provide this service, check out: https://min.io/product/overview

For my setup, I used a few Raspberry Pi 4 boards, each with a 256GB USB thumb drive. I already had them on hand, which made this a logical and cost-effective choice. Detailed instructions on setting up a 4-node Raspberry Pi MinIO cluster can be found in my project’s repository: MINIO_SETUP.md

Your installation will likely vary, so check out the official setup docs, which are detailed yet simple and cover Kubernetes, Docker, Linux, macOS, and Windows.

Part 3: MiniSync.exe: Wails Configuration GUI

For the front end of this application, I chose Wails, a well-maintained and documented framework that binds the front end with the Go backend. While Wails is a powerful tool, it has a learning curve. If you’re new to Wails, I recommend starting with the demo, understanding the application setup options, and adding features incrementally. Testing both the development environment (wails dev) and the build environment (wails build) is crucial, as they may produce slightly different results.

When the application is launched, it checks to see if the MiniSync service is installed. If it is not, the application brings up the following form so that you can configure the MiniSync service:

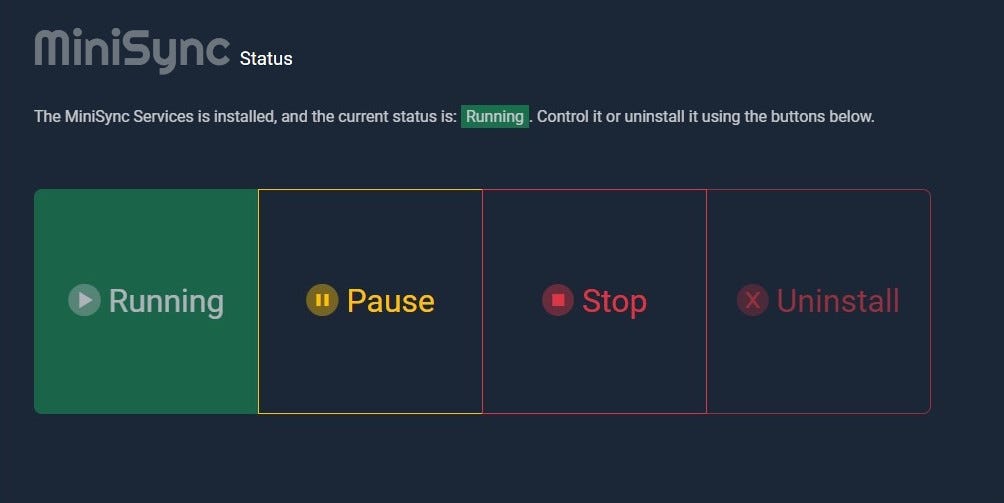

If the MiniSync service is already installed, it can be in one of the following three states:

- Stopped: From this state you can Start the service, or Uninstall the service. If you Uninstall the service, the application reverts back to the configuration screen above.

- Running: From this state you can Stop the service, or Pause the service.

- Paused: From this state you can Continue the service, or Stop the service.
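The states above amount to a small lookup table from status to permitted actions. The sketch below shows one way the backend could validate a button press before acting on it. It is illustrative only: the names allowedActions and canPerform are hypothetical, and the "Install" action for the not-installed state is an assumption based on the configuration form described earlier.

```go
package main

import "fmt"

// allowedActions lists the actions offered for each service status,
// following the states described above.
var allowedActions = map[string][]string{
	"NotInstalled": {"Install"}, // assumed: submitting the configuration form
	"Stopped":      {"Start", "Uninstall"},
	"Running":      {"Stop", "Pause"},
	"Paused":       {"Continue", "Stop"},
}

// canPerform reports whether action is permitted in the given status.
func canPerform(status, action string) bool {
	for _, a := range allowedActions[status] {
		if a == action {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(canPerform("Running", "Pause"))
	fmt.Println(canPerform("Paused", "Start"))
}
```

Keeping the rules in one table means the frontend and backend can share a single source of truth for which buttons to show.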

Future Goals: Expanding MiniSync’s Capabilities

While MiniSync currently meets my personal needs for local file synchronization and backup, there are several exciting opportunities to expand its functionality. Given that MinIO is fully compatible with Amazon S3, one of the most promising enhancements would be to enable remote backups to an S3-compatible cloud storage service.

Remote Backups to Amazon S3

By leveraging MinIO’s S3 compatibility, it would be relatively straightforward to extend MiniSync to support remote backups. This would involve setting up a replication or synchronization process that allows my local MinIO cluster to automatically mirror its data to an S3 bucket on Amazon Web Services (AWS) or another S3-compatible cloud storage provider. MinIO actually makes this trivial to set up, and you can do it directly in their web UI.

Logging

Logging is currently overly verbose. I just exited the development phase of this application, so the verbosity is there for debugging reasons. There are currently two logging pieces. The first is the application log, which I usually just store in the path I’m mirroring. The second is the Windows event log: in MiniSyncService.exe, the service part of the application contains lines like elog.Info(1, "Set: minioClient"). While logging in this space is important, I need to go back, prune, and standardize how I’m handling logs.

Conclusion

Building MiniSync was a rewarding experience that allowed me to explore and apply several important concepts in application development. From managing a Windows service with Go to setting up a MinIO backend and creating a Wails-based frontend, this project was both a learning tool and a practical solution for my personal needs.

Implementing an Automated File Backup System with Go and a MinIO Cluster: A Pragmatic Approach was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Matt Wiater | Sciencx (2024-08-18T16:51:39+00:00) Implementing an Automated File Backup System with Go and a MinIO Cluster: A Pragmatic Approach. Retrieved from https://www.scien.cx/2024/08/18/implementing-an-automated-file-backup-system-with-go-and-a-minio-cluster-a-pragmatic-approach/
