This content originally appeared on HackerNoon and was authored by hackernoon

AI image manipulation tools have come a long way in the last few years. It’s quite easy nowadays to create beautiful, stylized portraits of people and animals. But AI models are highly unpredictable, so most tools rely on the user (or some other human) to weed out bad generations and pick the best one.

This is the classic “human in the loop” problem that often plagues AI tools. It turns out that with some clever tricks and careful tuning, you can build a pipeline that works reliably for the vast majority of pets and is extremely resilient to variations in pose, lighting and so on.

In this post, I’ll dive deeper into how it works and all the neat little tricks that make it possible. Here are some examples of portraits you can generate with this pipeline.

Let’s get started!

The Key Ingredients

IPAdapter

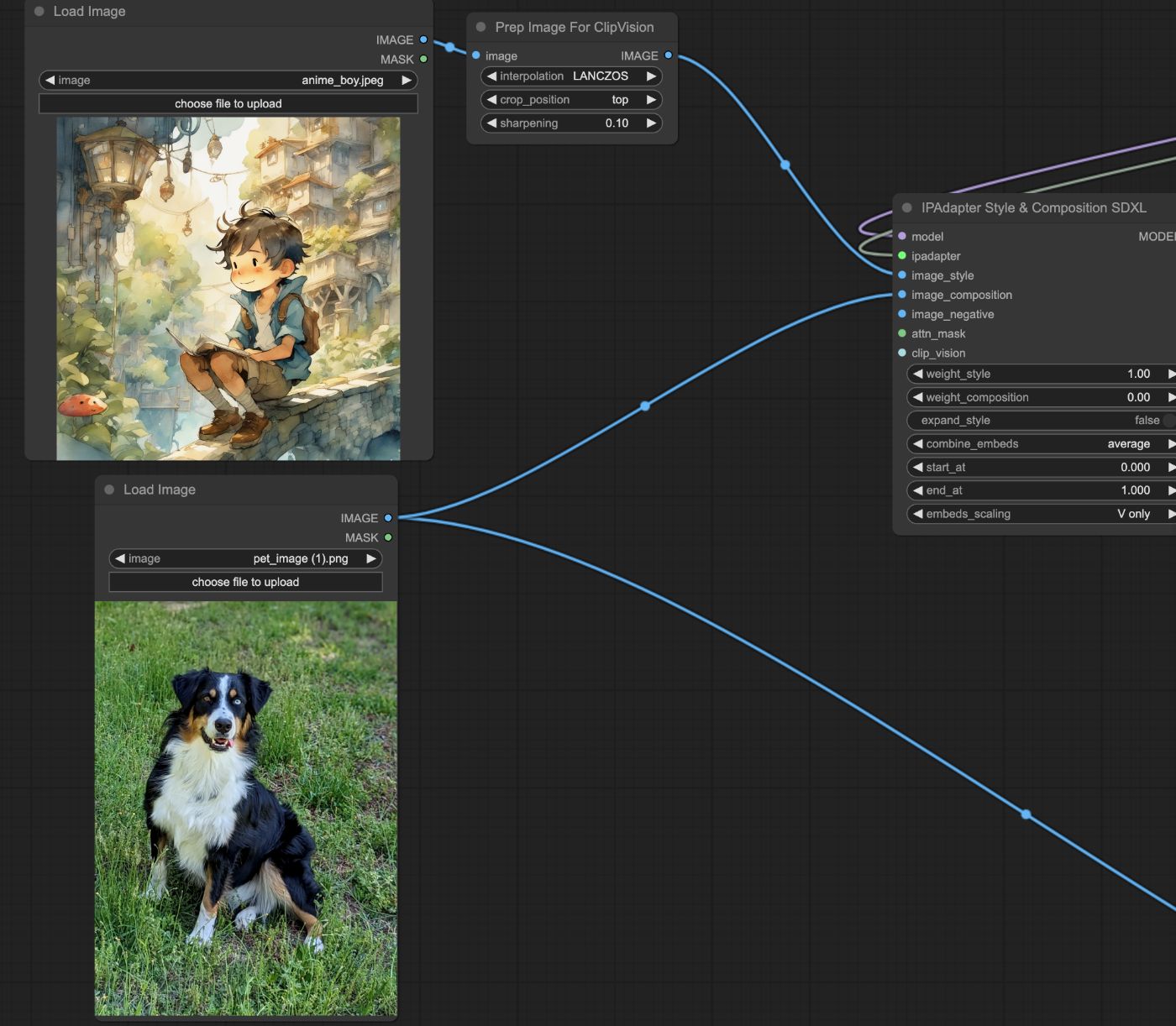

The crux of the technique is the IPAdapter. It’s essentially a way to prompt a model using an image instead of text (it literally stands for Image Prompt Adapter). So instead of taking text embeddings, it computes the embeddings from an image. This is super powerful because it can capture the style and structure of an image directly, instead of someone having to translate what they want from the image into text. Our ComfyUI IPAdapter node has two inputs, one for style and one for composition. We use a watercolor painting for style and feed in the original image for composition (since we want to keep the same composition but change the style).

ControlNets

Now that we have a way to keep the style consistent, we can turn our attention to faithfully representing the pet. IPAdapters bias heavily towards image quality, and likeness to the input suffers. So we need something that keeps the output looking like the same subject as the input.

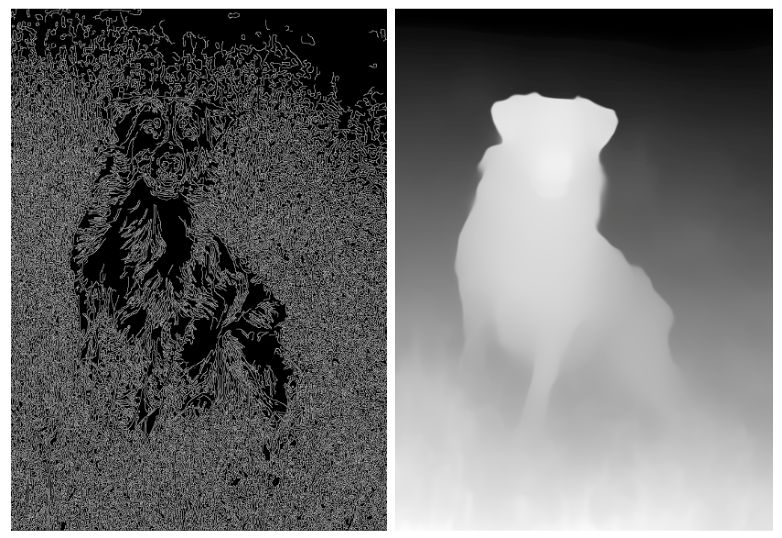

The answer to that is ControlNet. ControlNets are another cool technique for adding constraints to the image generation process. Using a ControlNet, you can specify constraints in the form of edges, depth, human pose etc. A great feature of ControlNets is that they can be stacked. So you can have an edge ControlNet that forces the output to have similar edges to the input, and also a depth ControlNet that forces the output to have a similar depth profile. And that’s exactly what I do here.

It turns out that ControlNets are not just stackable with other ControlNets; they can also work in tandem with the IPAdapter mentioned above. So those are the tools we’ll use: an IPAdapter with a source image to get the style, a ControlNet with a Canny edge detector to constrain the edges, and a ControlNet with depth to constrain the depth profile.
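To keep the moving parts straight, here is a minimal sketch of the conditioning stack described above, written as plain Python data. The class, field names and labels are made up for illustration; they are not ComfyUI node names or settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conditioning:
    """One conditioning input of the pipeline (illustrative only)."""
    name: str        # hypothetical label, not a ComfyUI node name
    source: str      # what gets fed into it
    constrains: str  # which aspect of the output it controls


# The four inputs described in the post, in no particular order.
PIPELINE = [
    Conditioning("ipadapter_style", "watercolor reference image", "style"),
    Conditioning("ipadapter_composition", "original pet photo", "composition"),
    Conditioning("controlnet_canny", "Canny edge map of the pet photo", "edges"),
    Conditioning("controlnet_depth", "depth map of the pet photo", "depth profile"),
]


def constrained_aspects(pipeline):
    """List the aspects of the output that the stack pins down."""
    return [c.constrains for c in pipeline]


if __name__ == "__main__":
    for c in PIPELINE:
        print(f"{c.name}: {c.source} -> {c.constrains}")
```

Only the style input comes from a different image; everything else is derived from the pet photo itself, which is why the output stays recognizable.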

That’s really all you need in terms of techniques, but what I’ve learnt from experimenting with machine learning for production is that a lot of the value comes from taking the time to tune all your parameters carefully. So I want to talk a little bit about that.

Finetuning

Have you ever found a model with amazing example outputs, tried it on your own images and realized the results look terrible? Often, the only reason is that the pipeline hasn’t been tuned for your images (by tuning I mean adjusting the pipeline’s parameters, not retraining the model’s weights). It can feel like a complete blocker sometimes, because where do we even start with tuning a pipeline built on a pre-trained model?! Here’s what I’ve learnt about the topic. This extends beyond this particular pipeline too, so it’s good knowledge to have generally.

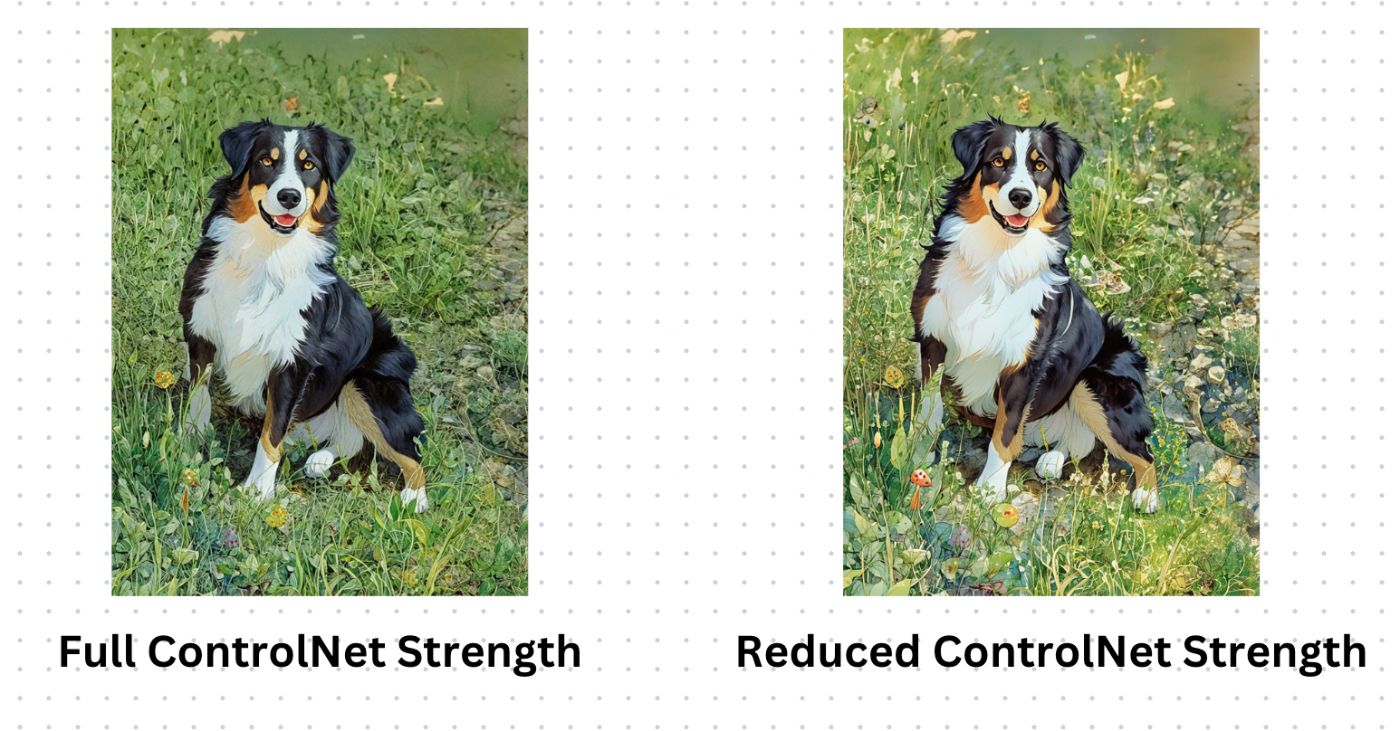

ControlNets

ControlNets are very powerful, so you have to be careful to control their effect on the output. Luckily, the custom nodes in ComfyUI let us reduce the strength of a ControlNet and also stop its effect at any point in the generation. So we set the edge ControlNet to 75% strength and make it stop influencing the generation 75% of the way through, while the depth ControlNet stops at 30%. The reason we stop them early rather than just reducing their strength is that this lets the network “clean up” any artifacts they cause in the last few steps without being constrained externally. In those final steps the model relies only on what it learned in training to make things look as nice as possible, ignoring the edges and depth. It just makes the image prettier.
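Here is a rough sketch of what “strength plus an end point” means over the course of a generation. The `strength`, `start_percent` and `end_percent` names mirror the inputs of ComfyUI’s advanced ControlNet apply node, but the class itself and the linear mapping from step index to progress are simplifications of mine, not ComfyUI’s actual scheduling. The depth strength is a placeholder, since only its end point is given above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlNetWindow:
    """Illustrative model of a ControlNet's strength and active window."""
    name: str
    strength: float
    start_percent: float
    end_percent: float

    def strength_at(self, step: int, total_steps: int) -> float:
        """Strength applied at a given step (0 outside the window)."""
        progress = step / total_steps  # simplification: linear in step index
        if self.start_percent <= progress < self.end_percent:
            return self.strength
        return 0.0


# Assumption: the depth strength is not stated in the post; 1.0 is a placeholder.
DEPTH_STRENGTH = 1.0

canny = ControlNetWindow("canny", strength=0.75, start_percent=0.0, end_percent=0.75)
depth = ControlNetWindow("depth", strength=DEPTH_STRENGTH, start_percent=0.0, end_percent=0.30)


def active_strengths(step: int, total_steps: int) -> dict:
    """Strength of each ControlNet at the given step."""
    return {c.name: c.strength_at(step, total_steps) for c in (canny, depth)}


if __name__ == "__main__":
    for step in (0, 5, 10, 16):
        print(step, active_strengths(step, total_steps=20))
```

With 20 steps, both ControlNets constrain the early steps, only the edges constrain the middle, and the last few steps run with no ControlNet at all, which is where the clean-up happens.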

The other big thing to tune is the KSampler. There are a lot of little things going on here, but I’ll just briefly touch on some of them:

KSampler - Steps

First we have the steps. This is the number of denoising iterations the sampler runs. In this pipeline, the more steps it runs, the more stylized your output gets and the further it drifts from the original image. The effects are often not that obvious, so it’s worth playing around with it.

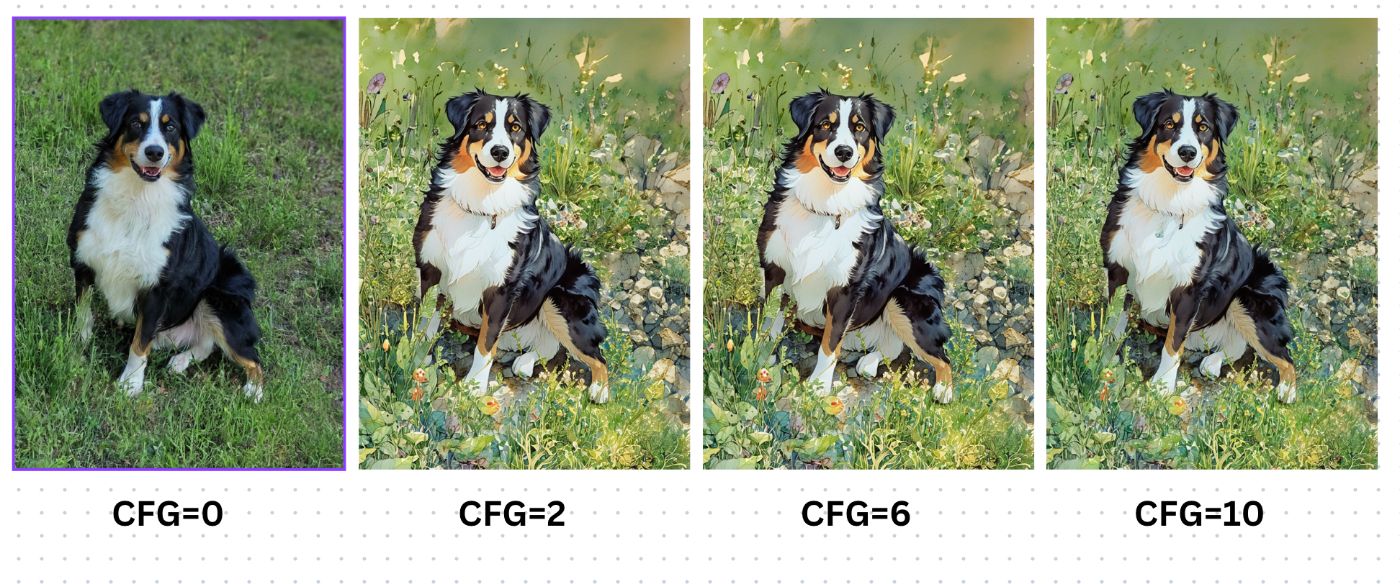

KSampler - CFG

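Then there’s CFG, which stands for Classifier-Free Guidance. At each step the model makes two predictions, one with the conditioning (the prompts) and one without, and the CFG scale controls how far the result is pushed from the unconditioned prediction towards the conditioned one. Higher values follow the conditioning more strictly, while lower values give the model more freedom to do whatever it thinks looks good. This affects the output image significantly, so it’s worth playing around with.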
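For reference, the standard classifier-free guidance update is simple enough to write down. The sketch below applies it to plain lists of floats; the `apply_cfg` name and the list representation are just for illustration, since real samplers do this on tensors inside the sampling loop.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Classifier-free guidance: start from the unconditioned prediction
    and move towards the conditioned one, scaled by cfg_scale."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]  # toy prediction without conditioning
    cond = [1.0, 3.0]    # toy prediction with conditioning
    for scale in (0.0, 1.0, 2.0):
        print(scale, apply_cfg(uncond, cond, scale))
```

A scale of 1 returns the conditioned prediction unchanged, 0 ignores the conditioning entirely, and values above 1 exaggerate the difference between the two.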

Denoise

Another neat little trick I use here is to start the image generation process with the input image instead of a blank image, and keep the denoising low. This ensures that the output will look similar in terms of colors and textures.
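One way to picture a low denoise value: instead of starting from pure noise and running the whole noise schedule, the sampler noises the input image partway and only runs the tail end of the schedule. The sketch below is a conceptual illustration with a made-up schedule of noise levels; it is not how ComfyUI’s KSampler computes its schedule internally, and the `schedule_tail` name is hypothetical.

```python
def schedule_tail(schedule, denoise):
    """Return the part of a noise schedule that is actually run.

    schedule: noise levels from highest to lowest.
    denoise:  fraction in (0, 1]; 1.0 means start from pure noise.
    """
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    skipped = round(len(schedule) * (1.0 - denoise))
    return schedule[skipped:]


if __name__ == "__main__":
    # Toy schedule: noise level in percent, from fully noisy to nearly clean.
    schedule = list(range(100, 0, -10))
    for denoise in (1.0, 0.5, 0.3):
        print(denoise, schedule_tail(schedule, denoise))
```

The lower the denoise value, the less noise is added to the input and the more of its colors and textures survive into the output.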

Text Prompt

One thing you’ll notice I haven’t mentioned until now is the text prompt! Surprising, since that’s usually the only conditioning you provide to diffusion models. But here we have so many other ways of conditioning that text prompts usually just get in the way. So the prompt is literally just “a dog”. I do use the text prompt a little more in some of the more stylized portraits, like the chef dog or the one in the bathroom.

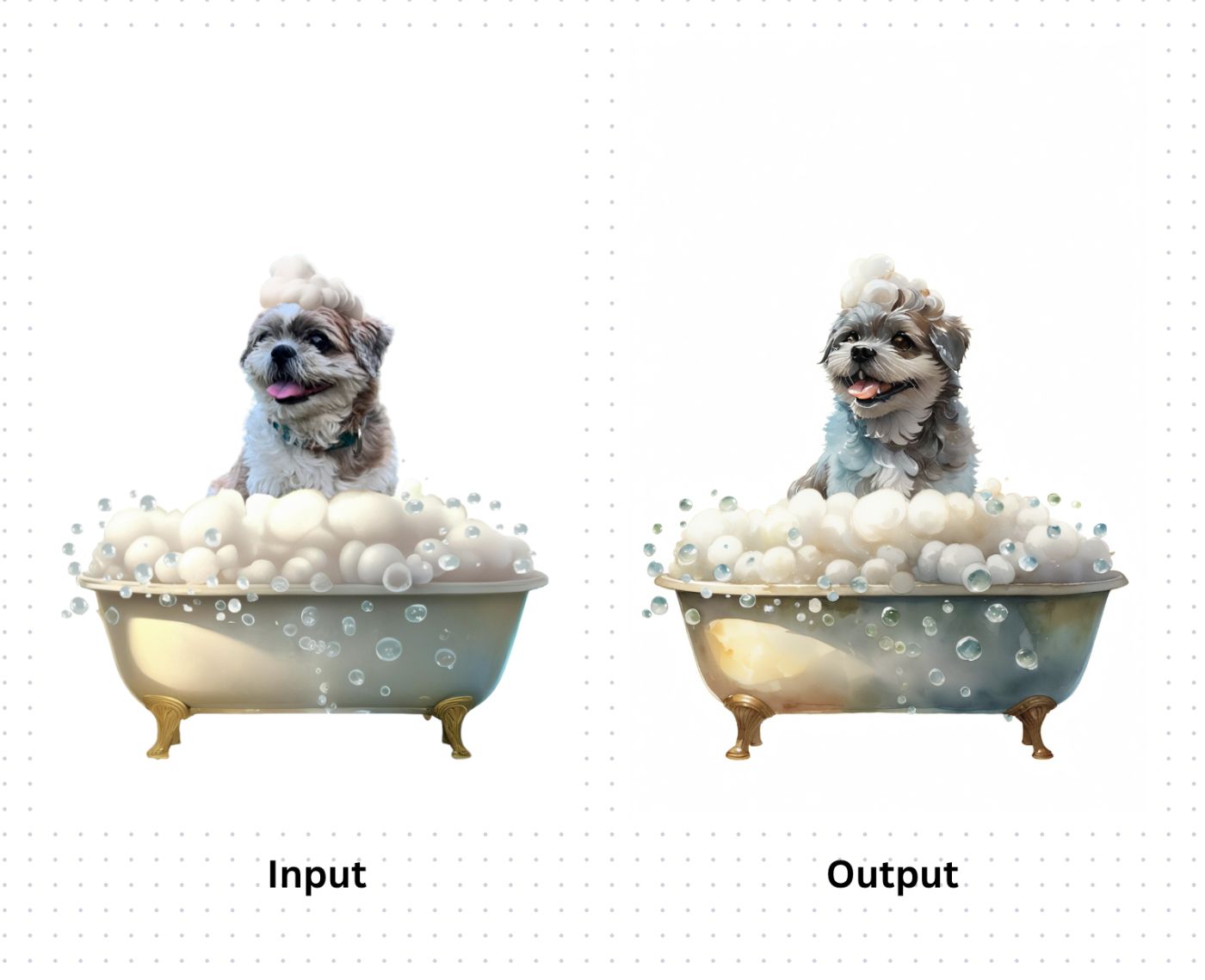

Adding accessories

In essence, this is more or less just an “AI Filter” that converts images into watercolor portraits. But it’s amazing how flexible this can be. For example, to make the portrait of the dog taking a shower, I literally just put images together in an image editing tool and used that as the input! The model takes care of unifying everything and cleaning up the image.

Conclusion

Now remove the background, add some text and Boom! You have a beautiful portrait that captures all the little details of your pet, and always paints them in the best light!

Huge thanks to @cubiq for his work on ComfyUI nodes and his awesome explainer series on YouTube! Most of this pipeline was built and explained by him in his videos.

If you want a pet portrait without going through all this trouble, consider buying one from here: pawprints.pinenlime.com!

hackernoon | Sciencx (2024-08-19T13:35:03+00:00) Make Masterpiece Pet Portraits with ComfyUI. Retrieved from https://www.scien.cx/2024/08/19/make-masterpiece-pet-portraits-with-comfyui/
