This content originally appeared on Level Up Coding - Medium and was authored by Ivan Polovyi

Intermediate operations are crucial in stream processing, and the Stream API offers a wide range of these powerful tools. Mastering them is essential for any Java developer. Let’s explore them together.

Let’s start by defining what a stream pipeline is. A stream pipeline in Java is a sequence of operations that process data in a stream. It consists of three main components:

- Source: The origin of the stream, such as a collection, an array, a generator function, or an I/O channel. This is where the data to be processed comes from.

- Intermediate Operations: These are operations that transform or filter the data in the stream. Examples include filter(), map(), and sorted(). Intermediate operations are lazy, meaning they do not perform any processing until the pipeline is executed by a terminal operation.

- Terminal Operation: The final operation in the pipeline, which triggers the processing of the stream. Examples include forEach(), collect(), and reduce(). Once a terminal operation is called, the stream is consumed, and no further operations can be applied.

Now, let’s define what a stream intermediate operation is. A stream intermediate operation in Java is an operation that processes elements of a stream and produces another stream as a result. These operations can transform, filter, inspect, or otherwise modify the stream without consuming it. The key point is that intermediate operations always return a stream, allowing further operations to be chained together.

Key characteristics of intermediate operations include:

- Lazy Evaluation: Intermediate operations are lazy, meaning they do not actually process the elements of the stream when they are called. Instead, they build up a pipeline of operations that will be applied to the data only when a terminal operation is invoked.

- Chaining: Since intermediate operations return a stream, they can be chained together to form complex processing pipelines. This chaining allows for clear and concise code.

- Stateless or Stateful: Intermediate operations can be stateless, where each element is processed independently (e.g., map()), or stateful, where the operation needs to remember information from previous elements (e.g., distinct() or sorted()).

If we write code like the example below, the stream won’t be executed because the stream pipeline does not end with a terminal operation:

stream()

.intermediateOperation1()

.intermediateOperation2()

...

.intermediateOperationN()

Notice I said ‘does not end.’ As the name suggests, the terminal operation terminates the stream; once it’s executed, the stream is consumed and cannot be reused.

For example, this code won't even compile:

stream()

.intermediateOperation1()

.terminalOperation1()

.intermediateOperation2()

stream()

.intermediateOperation1()

.terminalOperation1()

.terminalOperation2()

This is because the terminal operation ends the stream and transforms it into something else, like a list. Once the stream is terminated, any further attempts to call another operation, whether intermediate or terminal, will fail because the resulting object is no longer a stream.

This code will throw an exception because the first terminal operation closes the stream, making it unusable for any further operations.

Stream<T> stream = Stream.of(elemt1, element2)

.intermediateOperation1();

stream.terminalOperation(); -- stream is closed

stream.intermediateOperation2(); -- IllegalStateException

In most cases, a stream pipeline follows this structure:

stream()

.intermediateOperation1()

.intermediateOperation2()

...

.intermediateOperationN()

.terminalOperation()

However, a pipeline can be constructed as in the very first example, with intermediate operations and then executed later when a terminal operation is eventually called.

Stateful vs. Stateless operations

In Java’s Stream API, operations can be categorized into two types: stateful and stateless. Understanding the difference between these two is crucial for effectively using streams in Java.

- Stateless operations do not retain any state from previously processed elements when processing new elements in the stream. Each element is handled independently, meaning the operation does not require knowledge of the elements that came before it. They can be processed in parallel more efficiently and generally perform better because there’s no need to maintain state information.

- Stateful operations require some state information to be maintained while processing elements in the stream. The result of processing an element might depend on the previous elements, or the entire stream needs to be processed before the operation can produce its output.

The limit operation

The limit operation, as its name suggests, restricts the number of elements processed in the stream pipeline.

Stream<T> limit(long maxSize);

In other words, it truncates the stream to include only the number of elements specified by the parameter. It can limit both infinite and finite streams.

List<Integer> example1 = Stream.iterate(1, n -> n + 1)

.limit(3)

.toList();

// [1, 2, 3]

List<Integer> example2 = Stream.of(1, 2, 3, 4, 5)

.limit(3)

.toList();

// [1, 2, 3]

It is a stateful operation because it needs to keep track of the number of elements processed. It can be more expensive for parallel streams, especially when the stream is ordered, as it requires coordination across different segments of the stream to ensure the correct number of elements are passed through.

The skip operation

The skip operation, as the name suggests, skips the first few elements of the stream.

Stream<T> skip(long n);

The number of elements to skip is determined by the parameter passed to the method.

List<Integer> example3 = Stream.of(1, 2, 3, 4, 5)

.skip(3)

.toList();

// [4, 5]

The skip is a stateful operation because it needs to keep track of the elements it bypasses. This can make it more expensive during parallel processing, especially when maintaining the order of elements.

We can combine the skip and limit operations in the same pipeline to precisely define the stream's size.

List<Integer> example4 = Stream.iterate(1, n -> n + 1)

.skip(1)

.limit(3)

.toList();

// [2, 3, 4]

The distinct operation

The distinct operation removes duplicates from the stream.

Stream<T> distinct();

In other words, it returns a new stream containing only distinct elements based on the Object.equals() method. The operation relies on Object.hashCode() to efficiently track and compare elements. Therefore, both equals() and hashCode() methods must be correctly overridden in the objects being compared.

List<Integer> example5 = Stream.of(1, 1, 2, 2, 3, 3)

.distinct()

.toList();

// [1, 2, 3]

This is a stateful operation, as it must keep track of all previously encountered elements to ensure uniqueness. It can be resource-intensive, particularly in parallel streams, due to the additional overhead required to maintain this state across multiple threads.

The sorted

The sorted method orders the elements of a stream. The Stream interface provides two overloaded versions of this method.

Stream<T> sorted();

This version takes no arguments and sorts the stream using the natural order of the elements. For example, you can use it to sort a stream of integers:

List<Integer> example6 = Stream.of(2, 3, 5, 4, 1)

.sorted()

.toList();

// [1, 2, 3, 4, 5]

The second version accepts a Comparator as an argument, allowing you to define a custom order for the stream.

Stream<T> sorted(Comparator<? super T> comparator);

We can use it to apply a reverse order like so:

List<Integer> example7 = Stream.of(2, 3, 5, 4, 1)

.sorted(Comparator.reverseOrder())

.toList();

// [5, 4, 3, 2, 1]

For a deeper dive into how to use Comparator in streams, including multiple examples, check out my comprehensive tutorial on the subject.

The sorted() operation is a stateful operation, which means it needs to process all elements before it can produce the sorted output. This makes it resource-intensive, particularly when used in parallel streams.

The unordered operation

The unordered() method in the Java Stream API is used to remove the encounter order constraint from a stream. By default, some streams, especially those derived from ordered sources like lists, maintain the order of elements. This ordering can impact the performance of certain operations, particularly in parallel processing.

S unordered();

When you call unordered() on a stream, it indicates that the order in which elements are processed or produced no longer matters. This can potentially allow for more efficient processing, especially when using parallel streams, as it frees the implementation from maintaining the order of elements.

It’s important to note that unordered() does not actually reorder elements; it merely removes the requirement for operations to preserve the order. If the stream was already unordered, calling this method has no effect.

Using unordered() can be beneficial when you don’t care about the order of elements in the stream, especially in parallel streams, as it can lead to performance improvements by allowing more flexibility in how elements are processed.

List<Integer> example8 = List.of(2, 3, 5, 4, 1)

.stream()

.unordered()

.parallel() // potentially can lead to better performance

.toList();

This operation is stateless and doesn’t rely on or maintain any state between stream elements. Instead, it simply provides a hint that the order of elements can be ignored.

The peek operation

The peek method is an intriguing operation in the Stream API.

Stream<T> peek(Consumer<? super T> action);

Its primary purpose is to observe the elements of the stream without modifying them. It returns a stream with the same elements but also performs an additional action on each element, as specified by a Consumer passed as a parameter.

While peek() can be useful for debugging, but it is generally not recommended for production code. In the example below, we use peek to print each element of the stream before and after sorting, giving us insight into the stream's state at different stages.

List<Integer> example9 = Stream.of(2, 3, 5, 4, 1)

.peek(System.out::println)

.sorted(Comparator.reverseOrder())

.peek(System.out::println)

.toList();

It’s important to note that peek() is a stateless operation that does not alter the stream's size, order, or element type.

The filter operation

Without a doubt, one of the most powerful operations in the Stream API is the filter() operation. It has transformed the way we filter collections, eliminating the need for cumbersome if statements and making the code more concise and readable.

Stream<T> filter(Predicate<? super T> predicate);

The result of this operation is a stream that includes only the elements matching the predicate provided as a parameter. In the example below, the filter() operation removes odd digits from the stream.

List<Integer> example10 = Stream.of(1, 2, 3, 4, 5)

.filter(n -> n % 2 == 0)

.toList();

// [2, 4]

It is a stateless operation that can potentially reduce the number of elements in the stream, but it does not alter their order or type.

The take while operation

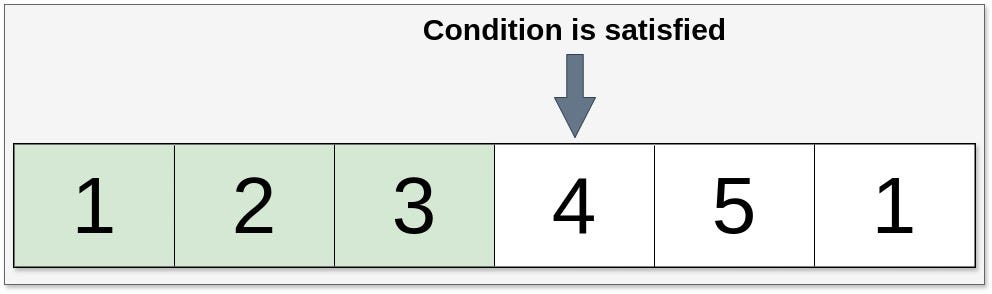

The takeWhile() method in Java's Stream API, which is a powerful tool for working with streams. It allows you to take elements from a stream as long as they satisfy a given predicate. Once an element does not meet the predicate, the operation stops, and no further elements are processed, even if they might satisfy the predicate.

default Stream<T> takeWhile(Predicate<? super T> predicate) {...}It operates in a short-circuiting manner, meaning it stops processing as soon as an element fails to meet the condition, and no further elements are examined. This method respects the order of elements in the stream and is useful for scenarios where you want to process a sequence of elements up to a certain point where the predicate no longer holds.

It is best demonstrated with an example:

List<Integer> example11 = Stream.of(1, 2, 3, 4, 5, 1)

.takeWhile(n -> n < 4)

.toList();

// [1, 2, 3]

In this example, the takeWhile() method uses the predicate 'elements less than 4'. As a result, the operation stops as soon as it encounters element 4, which does not satisfy the predicate. All elements before 4 are included in the result. Note that even though the element 1 after 5 also satisfies the predicate, it is not included because takeWhile() halts processing once the condition is no longer met.

Let’s check another example in which the predicate is satisfied at the first element; in this case, we will have an empty stream.

List<Integer> example12 = Stream.of(4, 1, 2, 3, 4, 5)

.takeWhile(n -> n < 4)

.toList();

// []

ThetakeWhile() is a stateless operation that processes elements based on their position relative to the predicate and does not maintain any state between elements.

It’s important to note that if the stream is unordered, the result of the takeWhile() method may be non-deterministic. See the example below:

List<Integer> example13 = Set.of(1, 2, 3, 4, 5)

.stream()

.takeWhile(n -> n < 4)

.toList();

// [] -- first run

// [2, 3] -- second run

// [1, 2, 3] -- third run

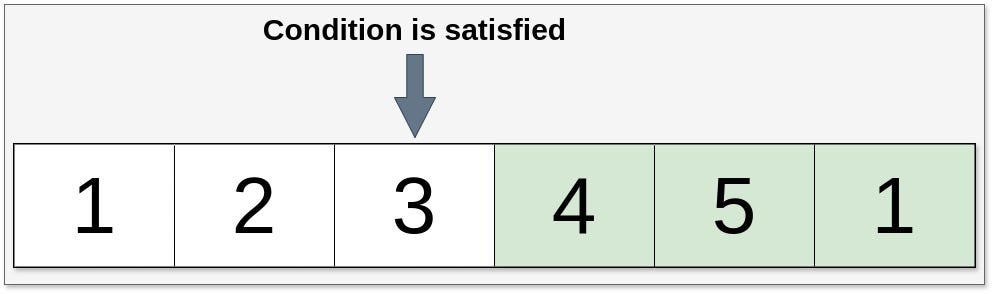

The drop while operation

The counterpart to the takeWhile() operation is dropWhile(). While takeWhile() processes elements as long as they meet the specified condition, dropWhile() discards elements until the condition is no longer satisfied. After this point, all subsequent elements are included in the result, regardless of whether they meet the condition.

default Stream<T> dropWhile(Predicate<? super T> predicate) {...}The best way to understand how it works is to use the example.

List<Integer> example14 = Stream.of(1, 2, 3, 4, 5, 1)

.dropWhile(n -> n < 4)

.toList();

// [4, 5, 1]

In this example, the dropWhile() operation drops all elements that satisfy the predicate 'less than 4'. Once it encounters the first element that does not meet the predicate—in this case, the number 4—it stops dropping elements. Note that even though there is another element in the stream that satisfies the predicate after 4, it is not dropped because the operation has already short-circuited.

If the predicate is satisfied by the very first element, then no elements are dropped.

List<Integer> example15 = Stream.of( 4, 2, 3, 4, 5, 1)

.dropWhile(n -> n < 4)

.toList();

// [4, 2, 3, 4, 5, 1]

The dropWhile() method is a stateless operation that evaluates elements based on their position relative to the predicate without maintaining any state between elements. It’s important to note that if the stream is unordered, the result of the dropWhile() method may be non-deterministic. See the example below:

List<Integer> example16 = Set.of(1, 2, 3, 4, 5)

.stream()

.dropWhile(n -> n < 4)

.toList();

// [5, 4] -- first run

// [5, 4, 3, 2, 1] -- second run

// [4, 5] -- third run

I personally think of takeWhile() and dropWhile() as analogous to limit and skip operations, respectively, but with predicates. This perspective helps me understand their behavior more clearly.

The parallel operation

Parallel streams in Java allow you to leverage multiple CPU cores to process data concurrently, making your operations faster when dealing with large datasets. By splitting the stream into multiple parts and processing them in parallel, Java’s parallel stream can significantly reduce the time it takes to execute a pipeline.

S parallel();

This method turns sequential stream into parallel:

List<Integer> example17 = Set.of(1, 2, 3, 4, 5)

.stream()

.parallel()

.toList();

Parallel streams are a powerful tool in Java for improving performance. Still, they should be used with careful consideration of their impact on execution order, side effects, and the specific nature of the task.

The sequential operation

A counterpart of a parallel method is sequential:

S sequential();

It simply turns a parallel stream into a sequential one.

List<Integer> example18 = Set.of(1, 2, 3, 4, 5)

.parallelStream()

.sequential()

.toList();

If you’re wondering why I haven’t discussed map operations here, it’s not because I forgot them — I’m dedicating an entire tutorial just to map operations. Stay tuned for that!

The sequence of operations in a stream pipeline

When working with Java Streams, the sequence of operations can significantly impact performance. Here are some key considerations:

- Filtering operations (filter(), takeWhile(), dropWhile()) should be placed as early as possible in the stream pipeline. Filtering out elements early reduces the number of elements that subsequent operations need to process, leading to performance gains.

- If you know that you only need a specific number of elements from the stream, using limit() early can reduce the workload of subsequent operations, especially in large or infinite streams.

- Operations like sorted(), distinct(), skip(), and limit() on ordered streams are stateful and may require more computation, especially in parallel processing. Use them sparingly and consider the cost associated with maintaining the state.

- If the order of elements doesn’t matter, using the unordered() method can improve the performance of parallel streams by allowing more flexibility in how elements are processed. This can lead to more efficient parallel execution.

The complete code can be found here:

GitHub - polovyivan/java-streams-api-intermediate-operations

Conclusion

You can’t honestly consider yourself a proficient Java programmer without mastering Streams. The Stream API offers a variety of intermediate operations that can simplify even the most challenging tasks. I’ve walked you through most of these operations with practical examples in this tutorial.

Thank you for reading! If you enjoyed this post, please like and follow it. If you have any questions or suggestions, feel free to leave a comment or connect with me on my LinkedIn account.

Java Stream API: Exploring Intermediate Operations was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

This content originally appeared on Level Up Coding - Medium and was authored by Ivan Polovyi

Ivan Polovyi | Sciencx (2024-08-20T11:27:19+00:00) Java Stream API: Exploring Intermediate Operations. Retrieved from https://www.scien.cx/2024/08/20/java-stream-api-exploring-intermediate-operations/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.