This content originally appeared on HackerNoon and was authored by Computational Technology for All

:::info Authors:

(1) Athanasios Angelakis, Amsterdam University Medical Center, University of Amsterdam - Data Science Center, Amsterdam Public Health Research Institute, Amsterdam, Netherlands

(2) Andrey Rass, Den Haag, Netherlands.

:::

Table of Links

- Abstract and 1 Introduction

- 2 The Effect Of Data Augmentation-Induced Class-Specific Bias Is Influenced By Data, Regularization and Architecture

- 2.1 Data Augmentation Robustness Scouting

- 2.2 The Specifics Of Data Affect Augmentation-Induced Bias

- 2.3 Adding Random Horizontal Flipping Contributes To Augmentation-Induced Bias

- 2.4 Alternative Architectures Have Variable Effect On Augmentation-Induced Bias

- 3 Conclusion and Limitations, and References

- Appendices A-L

Appendices

Appendix A: Image dimensions (in pixels) of training images after being randomly cropped and before being resized

[32x32, 31x31, 30x30, 29x29, 28x28, 27x27, 26x26, 25x25, 24x24, 22x22, 21x21, 20x20, 19x19, 18x18, 17x17, 16x16, 15x15, 14x14, 13x13, 12x12, 11x11, 10x10, 9x9, 8x8, 6x6, 5x5, 4x4, 3x3]
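Appendix A gives only the crop sizes. As a rough illustration of "randomly cropped, then resized", the NumPy sketch below cuts a square window of one listed size at a uniformly random position and scales it back up. It is a sketch under stated assumptions, not the authors' pipeline: it assumes 32x32 inputs, a resize target of 32x32 and nearest-neighbour interpolation, and the helper names (`random_crop`, `resize_nearest`, `crop_then_resize`) are hypothetical. The crop size is passed in as a parameter, since each listed size may correspond to a separate training configuration.

```python
import numpy as np

# Crop side lengths as listed in Appendix A (23 and 7 do not appear in the list).
CROP_SIZES = [32, 31, 30, 29, 28, 27, 26, 25, 24, 22, 21, 20, 19, 18, 17,
              16, 15, 14, 13, 12, 11, 10, 9, 8, 6, 5, 4, 3]


def random_crop(image, size, rng):
    """Cut a size x size window at a uniformly random position from an H x W (x C) image."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - size + 1)   # upper bound is exclusive
    left = rng.integers(0, w - size + 1)
    return image[top:top + size, left:left + size]


def resize_nearest(image, out_size):
    """Nearest-neighbour resize of a square image to out_size x out_size.

    Assumption: the original experiments may use a different interpolation.
    """
    in_size = image.shape[0]
    idx = (np.arange(out_size) * in_size) // out_size  # source row/column per output pixel
    return image[idx][:, idx]


def crop_then_resize(image, size, rng, out_size=32):
    """Hypothetical helper: random crop of the given size, then resize to out_size (assumed 32)."""
    return resize_nearest(random_crop(image, size, rng), out_size)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # stand-in for a 32x32 RGB image
    size = 24  # hypothetical: one setting taken from the list above
    augmented = crop_then_resize(image, size, rng)
    print(size, augmented.shape)
```

Smaller crop sizes keep less of the original image and are upscaled more aggressively, which is why the list above spans from the full 32x32 frame down to a 3x3 patch.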

Appendix B: Dataset samples corresponding to the Fashion-MNIST segment used in training

Appendix C: Dataset samples corresponding to the CIFAR-10 segment used in training

Appendix D: Dataset samples corresponding to the CIFAR-100 segment used in training

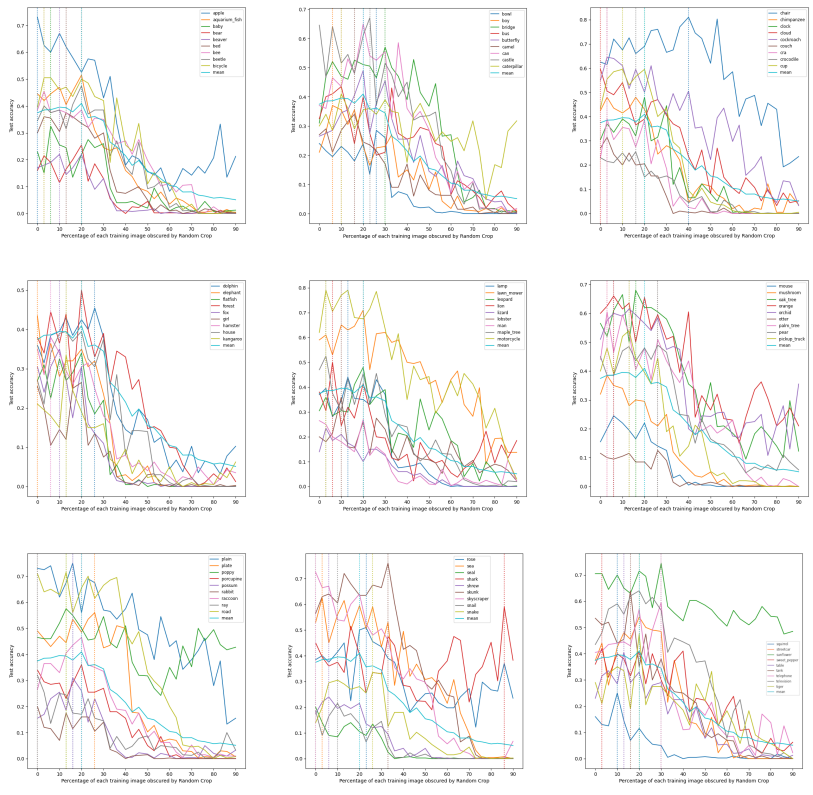

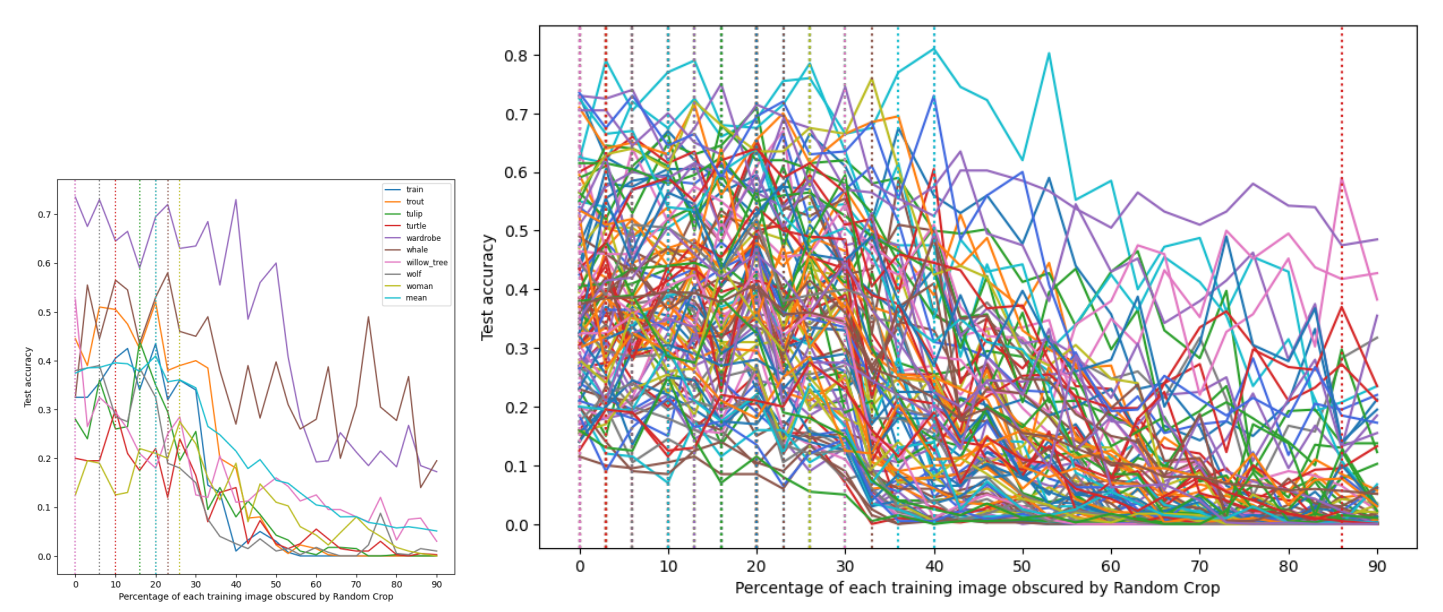

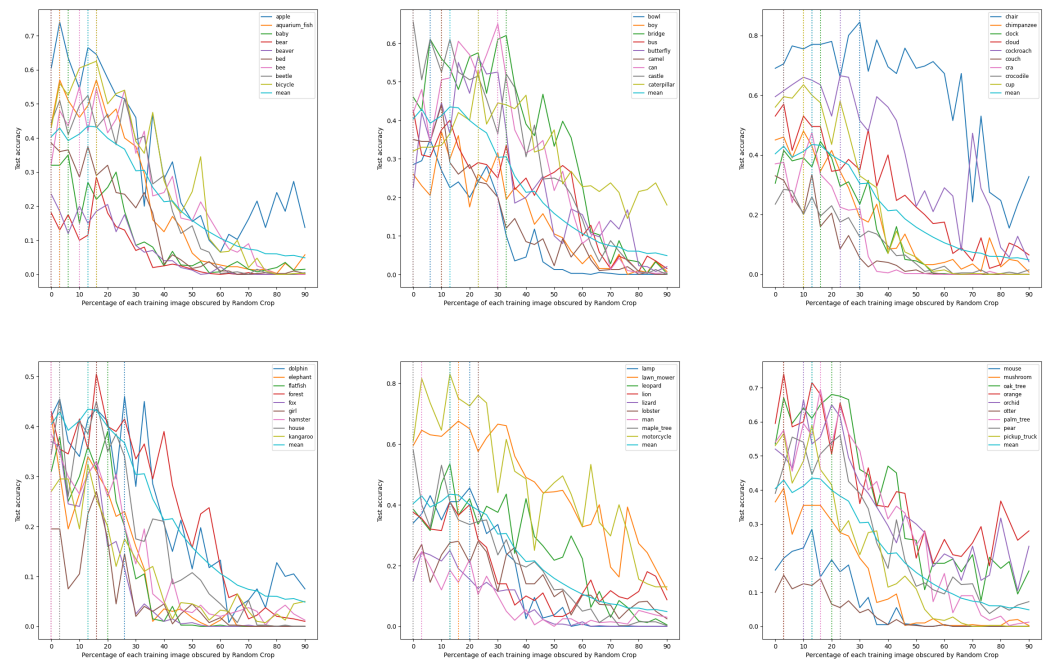

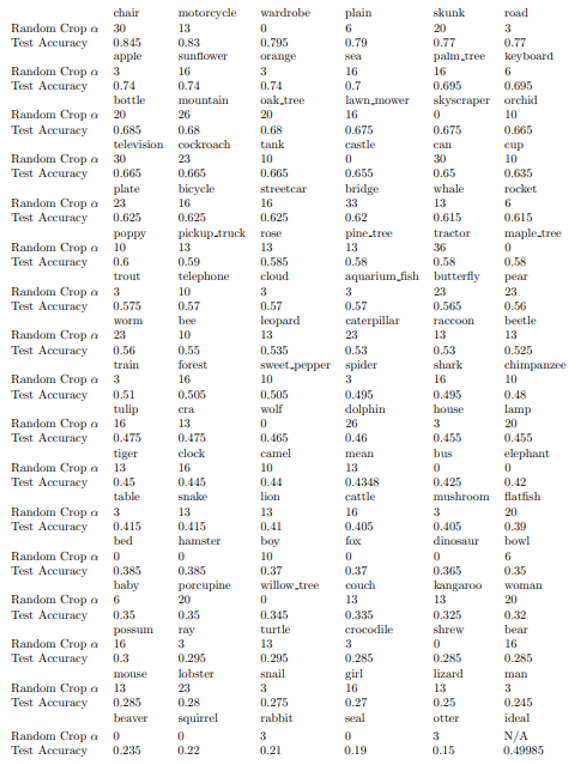

Appendix E: Full collection of class accuracy plots for CIFAR-100


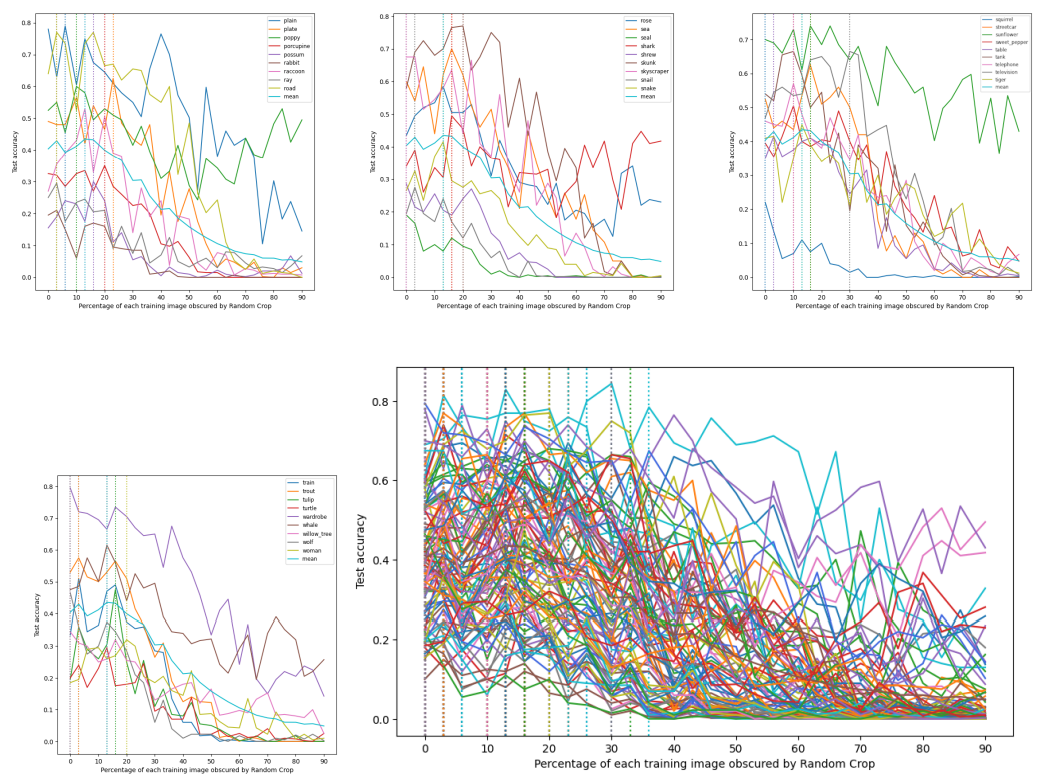

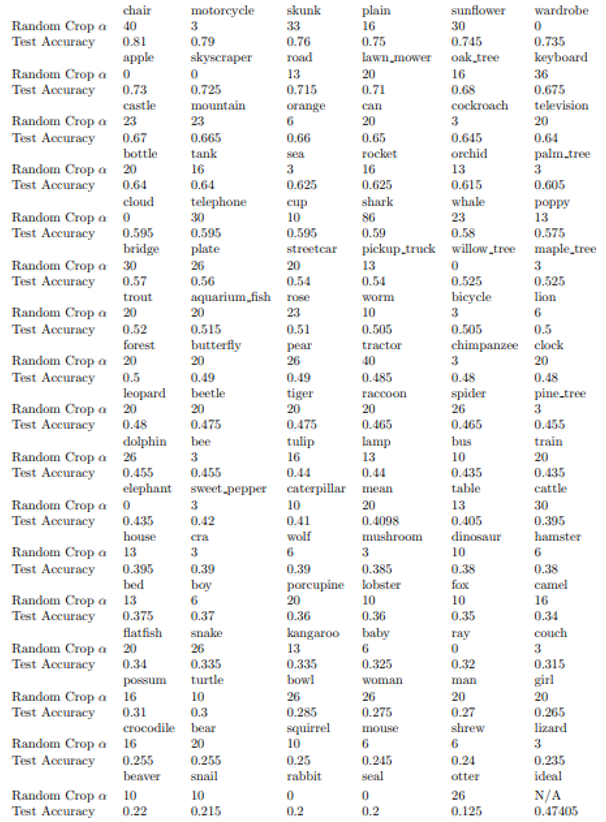

Appendix F: Full collection of best test performances for CIFAR-100

Without Random Horizontal Flip:

With Random Horizontal Flip:

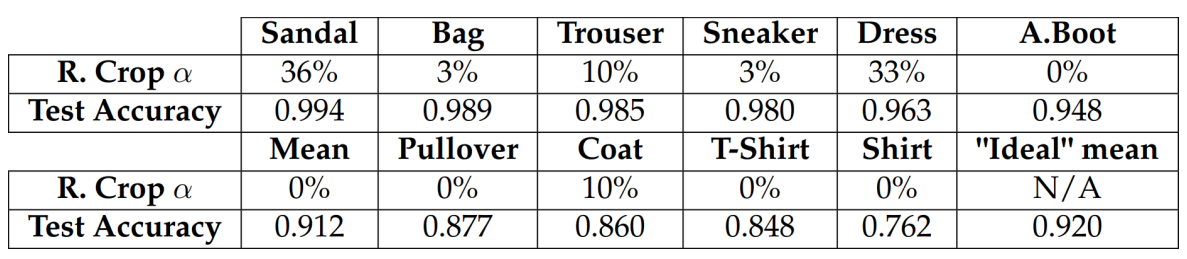

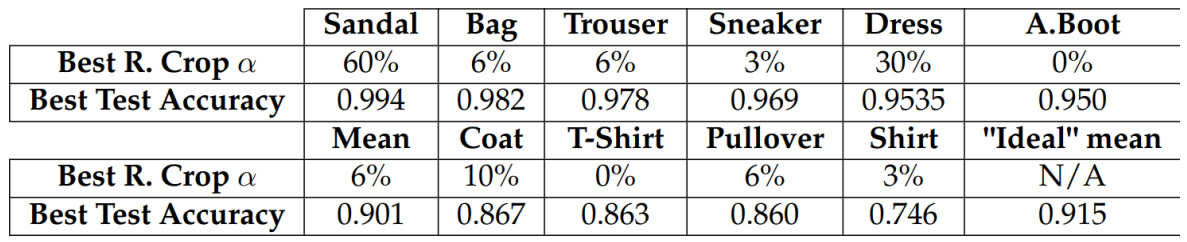

Appendix G: Per-class and overall test set performance samples for the Fashion-MNIST + ResNet50 + Random Cropping + Random Horizontal Flip experiment

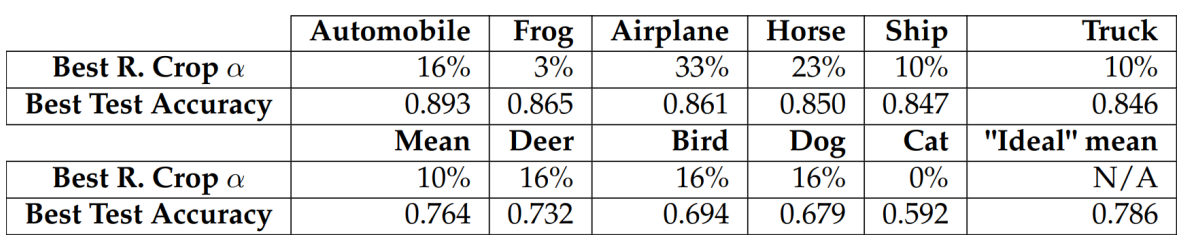

Appendix H: Per-class and overall test set performance samples for the CIFAR-10 + ResNet50 + Random Cropping + Random Horizontal Flip experiment

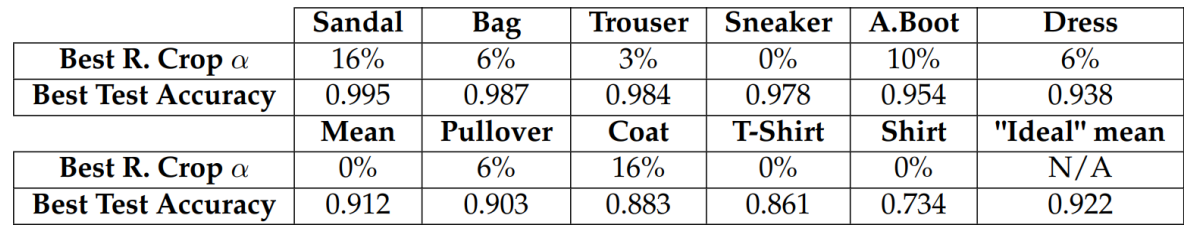

Appendix I: Per-class and overall test set performance samples for the Fashion-MNIST + EfficientNetV2S + Random Cropping + Random Horizontal Flip experiment

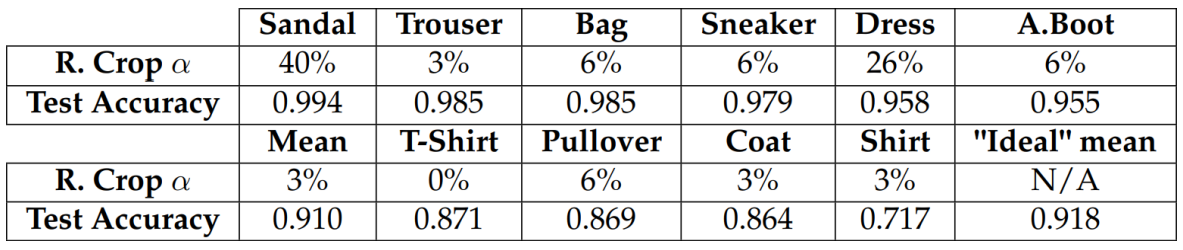

Appendix J: Per-class and overall test set performance samples for the Fashion-MNIST + ResNet50 + Random Cropping experiment

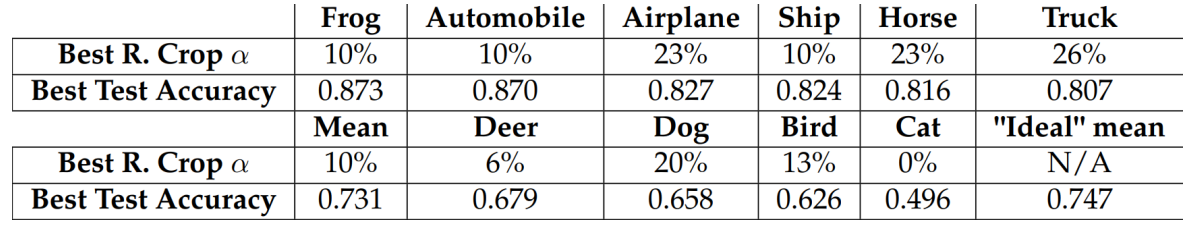

Appendix K: Per-class and overall test set performance samples for the CIFAR-10 + ResNet50 + Random Cropping experiment

Appendix L: Per-class and overall test set performance samples for the Fashion-MNIST + SWIN Transformer + Random Cropping + Random Horizontal Flip experiment
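Appendices G to L report per-class and overall test set performance. A minimal NumPy sketch of how such figures can be computed from integer labels and predictions follows; it assumes the metric is accuracy (the appendix titles only say "performance"), and the function name `per_class_accuracy` is hypothetical.

```python
import numpy as np


def per_class_accuracy(y_true, y_pred, num_classes):
    """Return (per-class accuracy array, overall accuracy).

    Accuracy for class c = correctly predicted test samples of class c
    divided by all test samples whose true label is c. Classes with no
    test samples get NaN instead of raising a division-by-zero error.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    correct = (y_true == y_pred).astype(float)
    totals = np.bincount(y_true, minlength=num_classes)                  # samples per true class
    hits = np.bincount(y_true, weights=correct, minlength=num_classes)  # correct predictions per class
    with np.errstate(divide="ignore", invalid="ignore"):
        per_class = hits / totals
    overall = correct.mean()
    return per_class, overall


if __name__ == "__main__":
    labels = [0, 0, 1, 1, 2]
    preds = [0, 1, 1, 1, 0]
    per_class, overall = per_class_accuracy(labels, preds, num_classes=3)
    print(per_class, overall)
```

Reporting the per-class vector next to the overall figure matters here because a drop confined to a few classes can be hidden by a stable overall accuracy.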


:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::


Computational Technology for All | Sciencx (2024-08-31T18:00:22+00:00) A Data-centric Approach to Class-specific Bias in Image Data Augmentation: Appendices A-L. Retrieved from https://www.scien.cx/2024/08/31/a-data-centric-approach-to-class-specific-bias-in-image-data-augmentation-appendices-a-l/
