This content originally appeared on HackerNoon and was authored by ViAchKoN

How to Use GitHub Actions and Create Integration With PyPI for Python Projects

Introduction

When I was creating my first Python package, dataclass-sqlalchemy-mixins (available on GitHub and PyPI), I ran into an interesting challenge: how to set up CI/CD on GitHub so that nothing breaks when I push new changes and so that the code is published to PyPI automatically. I covered how to publish your own package with Poetry in a separate article.

Typically, tests should run against every commit that reaches the master branch through a pull request.

Additionally, using linters to check code style is beneficial, especially when multiple developers are working on the project.

*CI (Continuous Integration) - The practice of automatically building and testing code changes whenever they are pushed or merged into the repository*

*CD (Continuous Delivery) - The automated delivery of code to development or production environments*

Creating Workflows

GitHub provides Actions, a platform for running workflows: automated processes made up of one or more jobs, which makes it a natural fit for CI/CD pipelines.

You can read more about them in the GitHub documentation.

Workflows are what GitHub runs according to your configuration. They must be located in the .github/workflows directory and be YAML files with a .yml or .yaml extension.

For example, if we want to run tests after each commit, we can create a file named test.yml.

I chose the name test for convenience and to indicate that this particular workflow is responsible for tests, but the file name can be anything.

    name: "Test"

    on:
      pull_request:
        types:
          - "opened"
          - "synchronize"
          - "reopened"
      push:
        branches:
          - '*'
      workflow_dispatch:

    jobs:
      test:
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python 3.12
            id: setup-python
            uses: actions/setup-python@v5
            with:
              python-version: 3.12
          - name: Cache poetry install
            uses: actions/cache@v4
            with:
              path: ~/.local
              key: poetry-${{ steps.setup-python.outputs.python-version }}-1.7.1-0
          - uses: snok/install-poetry@v1
            with:
              version: 1.7.1
              virtualenvs-create: true
              virtualenvs-in-project: true
          - name: Load cached venv
            id: cached-poetry-dependencies
            uses: actions/cache@v4
            with:
              path: .venv
              key: venv-${{ steps.setup-python.outputs.python-version }}-${{ hashFiles('**/poetry.lock') }}
          - name: Install dependencies
            if: steps.cached-poetry-dependencies.outputs.cache-hit != 'true'
            run: poetry install --no-interaction
          - name: Run tests
            run: poetry run pytest --cov-report=xml

Let me explain the key elements of the file:

- name - The name of the workflow.
- pull_request - Specifies the pull request activity types that trigger the workflow. The values opened, synchronize, and reopened are also the defaults GitHub uses when types is omitted. You can read more about these types in the GitHub documentation.
- push - Specifies the branches whose pushes trigger the workflow.
- workflow_dispatch - Allows the workflow to be started manually.
- jobs - A workflow can consist of one or more jobs, which run in parallel by default but can also be configured to run sequentially.
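As a rough sketch of sequential jobs, the fragment below adds a lint job and makes test wait for it through the needs key. The lint job and the choice of ruff as the linter are assumptions for illustration and are not part of the original workflow; substitute whichever linter the project uses.

```yaml
jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.12"
      # Assumption: ruff is the project's linter; swap in flake8, pylint, etc.
      - name: Run linter
        run: |
          pip install ruff
          ruff check .

  test:
    # Without "needs", lint and test would start in parallel.
    needs: lint
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      # ... the same setup and test steps as in the workflow above ...
```

With this layout, a failing lint job causes test to be skipped by default, so style problems surface before the longer test run starts.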

I’d like to discuss what happens during the execution of the jobs in this file.

First, the name of a job can be used to set up rulesets for the repository or specific branches. In this case, the only job that will run is test. The details of rulesets will be covered later.

The runs-on key specifies the type of runner, and therefore the operating system, that the job will run on.

Setting strategy: fail-fast: false means that GitHub will not cancel the in-progress and queued jobs in a matrix when one job in that matrix fails.

The matrix parameter will be discussed later.

\

As you can see, many steps have similar-looking values in the uses key. For example:

- actions/setup-python@v5
- actions/cache@v4
- snok/install-poetry@v1

At first glance, the names of the actions might be confusing, but they are actually the paths to the GitHub repositories for these actions, excluding the @v part.

For example, you can view the source code of the first action by visiting https://github.com/actions/setup-python.

The same can be done for the other actions. The part after @ selects which version of the action to use.

The with key is used to pass parameters to an action.

For example, to install Python 3.12, we pass python-version: 3.12 in the Set up Python 3.12 step. The same approach applies to other steps.

In short, the test job performs the following steps:

- Checks out the repository.
- Sets up Python with the required version.
- Installs Poetry 1.7.1, using GitHub’s cache.
- Restores the .venv virtual environment in the project root from the cache, if one exists. The cache key depends on the Python version and poetry.lock.
- If no cached virtual environment is found, installs the project's dependencies.
- Runs the tests.

Using Services in Workflows

The created workflow is quite simple and may not be suitable for testing more sophisticated applications or projects.

This is because such projects often require services like databases or caches to function correctly. While it’s possible to write tests that mock requests to these services, I wouldn’t recommend it, as it can reduce the effectiveness of the tests.

I will demonstrate how to include a service in a workflow, using PostgreSQL as an example.

    jobs:
      test:
        services:
          postgres:
            image: postgres:latest
            env:
              POSTGRES_PASSWORD: postgres
            ports:
              - 5432:5432
            options: >-
              --health-cmd pg_isready
              --health-interval 10s
              --health-timeout 5s
              --health-retries 5

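Adding a services key with the name of the required service makes GitHub start that service for the duration of the job.

Moreover, you can pass settings to it much as you would when using Docker: the image, environment variables, published ports, and extra container options.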
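To show how the tests might reach that service, here is a minimal sketch of a test step for the same job. It assumes the job runs directly on the runner (not inside a container), so the database is reachable on localhost through the mapped port 5432. The DATABASE_URL variable name is hypothetical; use whatever setting your test suite actually reads. The user and database name rely on the postgres image defaults.

```yaml
steps:
  - name: Run tests against PostgreSQL
    env:
      # Hypothetical variable name; the password matches POSTGRES_PASSWORD above.
      DATABASE_URL: postgresql://postgres:postgres@localhost:5432/postgres
    run: poetry run pytest
```

Because the service definition includes a health check, the steps should only start once pg_isready reports that the database accepts connections.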

Using Several Parameters During a Job Run

Last but not least is the situation where you need to run a job with different parameters.

For example, you may need to run a job with several specific Python versions, not just the latest, or with different dependency versions.

This can be particularly useful when you need to maintain backward compatibility with older dependencies. GitHub provides the strategy key to address this. Its matrix parameter allows you to run a job with multiple values.

    jobs:
      test:
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
          matrix:
            python-version: ["3.11", "3.12"]
            sqlalchemy-version: ["1.4.52", "2.0.31"]

A job with a matrix set up this way will run once for every combination of the listed values.

As a result, there will be four runs of the job with the following combinations of Python and SQLAlchemy versions, respectively:

- 3.11, 1.4.52
- 3.11, 2.0.31
- 3.12, 1.4.52
- 3.12, 2.0.31

You can access the parameters in a job run using ${{ matrix.python-version }} or ${{ matrix.sqlalchemy-version }}.

    - name: Set up Python ${{ matrix.python-version }}
      id: setup-python
      uses: actions/setup-python@v5
      with:
        python-version: ${{ matrix.python-version }}
    - name: Install sqlalchemy ${{ matrix.sqlalchemy-version }}
      run: pip install sqlalchemy==${{ matrix.sqlalchemy-version }}
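If some combinations are not worth testing, the matrix can be trimmed. The sketch below uses the exclude key to drop one pairing; which pairing to drop is purely an illustrative assumption, not something the original project does. As in the fragments above, the steps are omitted.

```yaml
jobs:
  test:
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        python-version: ["3.11", "3.12"]
        sqlalchemy-version: ["1.4.52", "2.0.31"]
        # Illustrative assumption: skip the oldest SQLAlchemy on the newest Python.
        exclude:
          - python-version: "3.12"
            sqlalchemy-version: "1.4.52"
```

With this exclusion, three of the four combinations run.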

Using GitHub Rulesets

But sometimes, simply running jobs for your project isn't enough.

There are situations where you may need to restrict merging commits to the master branch. While this might not be necessary when you're working alone on a project, it’s highly recommended when multiple people are working on the same codebase. This helps prevent situations where someone merges a commit that breaks the code even though the pipelines failed.

GitHub provides a useful tool for this called rulesets.

You can access it from the repository settings menu at https://github.com/{Author}/{Repository}/settings/rules.

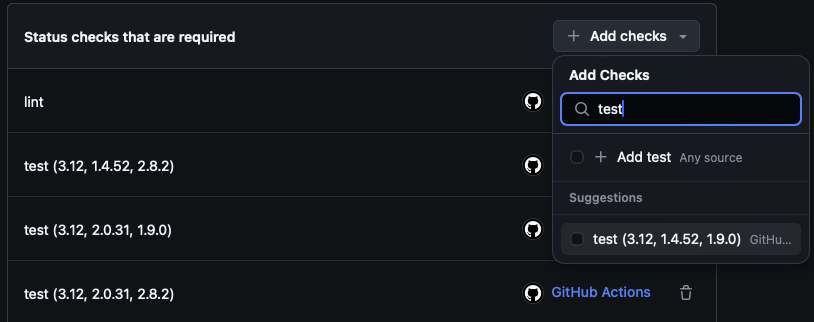

After creating a new ruleset, scroll down to Require status checks to pass, turn it on, and specify which jobs are required to pass.

To find a check, type the name of the required job, and GitHub will provide suggestions.

Note that each job in the matrix will have its own name, allowing you to select them individually.
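For matrix jobs, GitHub typically derives each check name from the job ID and the matrix values, for example test (3.11, 1.4.52). If more descriptive names would make the ruleset easier to read, a job-level name can be set explicitly. The naming pattern below is only an assumption for illustration.

```yaml
jobs:
  test:
    # Illustrative naming pattern; each matrix combination gets its own check name.
    name: "test (Python ${{ matrix.python-version }}, SQLAlchemy ${{ matrix.sqlalchemy-version }})"
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        python-version: ["3.11", "3.12"]
        sqlalchemy-version: ["1.4.52", "2.0.31"]
```

Required status checks are selected by name, so a ruleset would likely need updating after a job is renamed.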


Publishing to PyPI

In the last part, I would like to talk about how to create an integration with PyPI and publish a new version with each release.

To do that, create a new YAML file containing the publishing workflow.

    name: Upload Python Package to PyPi on release

    on:
      release:
        types: [published]

    permissions:
      contents: read

    jobs:
      deploy:
        runs-on: ubuntu-latest
        environment:
          name: pypi
          url: https://pypi.org/project/dataclass-sqlalchemy-mixins/
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v3
            with:
              python-version: '3.x'
          - uses: snok/install-poetry@v1
            with:
              version: 1.7.1
              virtualenvs-create: true
              virtualenvs-in-project: true
          - name: Build package
            run: poetry build
          - name: Publish package
            uses: pypa/gh-action-pypi-publish@27b31702a0e7fc50959f5ad993c78deac1bdfc29
            with:
              user: __token__
              password: ${{ secrets.PYPI_API_TOKEN }}

This workflow:

- Sets up Python.
- Installs Poetry 1.7.1.
- Builds the source and wheel archives.
- Publishes the package to PyPI.

Yes, it is as simple as it looks.

You just need to add a PYPI_API_TOKEN to the repository secrets (https://github.com/{Author}/{Repository}/settings/secrets/actions).

Finally, create a new release (https://github.com/{Author}/{Repository}/releases), and the package will be uploaded.
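Since the package is already built with Poetry, one possible variation is to let Poetry upload it as well. This is a sketch of an alternative to the Build package and Publish package steps, not the workflow used above. It relies on Poetry reading the PyPI token from the POETRY_PYPI_TOKEN_PYPI environment variable and reuses the same PYPI_API_TOKEN secret.

```yaml
steps:
  # ... checkout, Python setup, and Poetry installation as in the workflow above ...
  - name: Build and publish with Poetry
    env:
      # Poetry picks up the token for the default "pypi" repository from this variable.
      POETRY_PYPI_TOKEN_PYPI: ${{ secrets.PYPI_API_TOKEN }}
    run: poetry publish --build --no-interaction
```

Either approach uploads the same artifacts; the dedicated publish action keeps the upload separate from the build, while this variant keeps everything inside Poetry.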


ViAchKoN | Sciencx (2024-09-05T00:50:30+00:00) How to Set Up GitHub Actions and PyPI Integration for Python Projects. Retrieved from https://www.scien.cx/2024/09/05/how-to-set-up-github-actions-and-pypi-integration-for-python-projects/
