This content originally appeared on HackerNoon and was authored by Cosmological thinking: time, space and universal causation

:::info Authors:

(1) Zhan Ling, UC San Diego and equal contribution;

(2) Yunhao Fang, UC San Diego and equal contribution;

(3) Xuanlin Li, UC San Diego;

(4) Zhiao Huang, UC San Diego;

(5) Mingu Lee, Qualcomm AI Research;

(6) Roland Memisevic, Qualcomm AI Research;

(7) Hao Su, UC San Diego.

:::

Table of Links

Motivation and Problem Formulation

Deductively Verifiable Chain-of-Thought Reasoning

Conclusion, Acknowledgements and References

A Deductive Verification with Vicuna Models

C More Details on Answer Extraction

E More Deductive Verification Examples

2 Related work

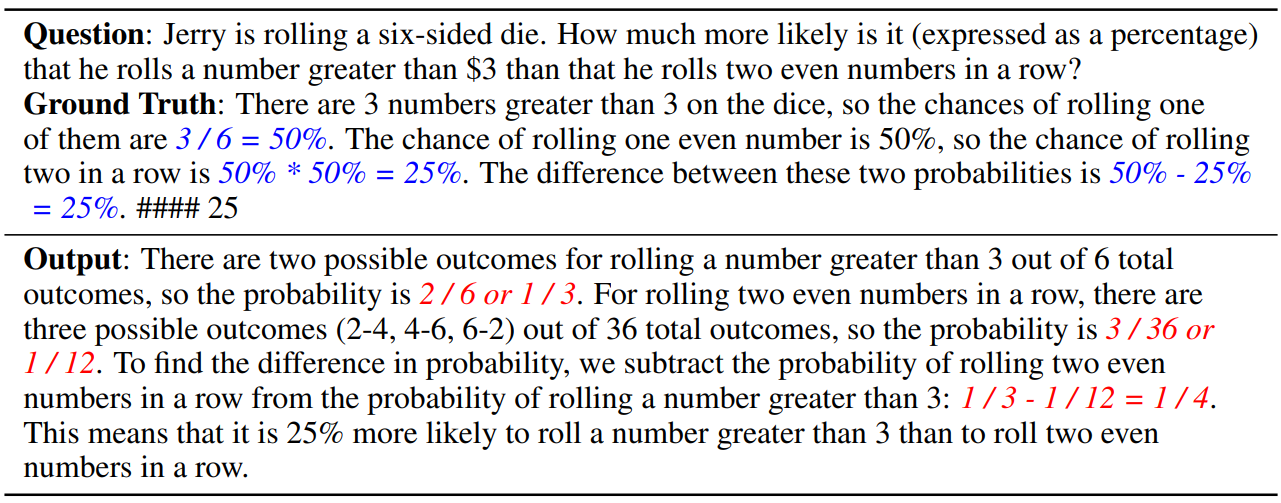

Reasoning with large language models. Recent large language models (LLMs) [3, 8, 57, 47, 38, 18, 9, 37] have shown a remarkable ability to solve complex reasoning tasks. Instead of letting LLMs directly generate final answers, prior work has shown that when step-by-step reasoning is encouraged through proper prompting, such as Chain-of-Thought (CoT) prompting [50] and many other methods [21, 59, 58, 44, 48, 60, 25, 54], LLMs perform significantly better across diverse reasoning tasks. To further improve the step-by-step reasoning process, some recent studies have investigated leveraging external solvers such as program interpreters [39, 5, 27], training and calling external reasoning modules [11], or performing explicit search to generate deductive steps [2, 46]. Unlike these works, we do not rely on external modules or algorithms; instead, we directly leverage the in-context learning ability of LLMs to generate more precise and rigorous deductive reasoning.

Large language models as verifiers. Using language models to evaluate model generations is a long-standing idea [22, 36, 40, 4]. As LLMs exhibit impressive capabilities across diverse tasks, it is natural to use them as evaluation and verification tools. For example, [10, 11, 33] finetune LLMs to verify solutions and intermediate steps. LLMs aligned with RLHF [32, 31, 48] have also been employed to compare different model generations. In addition, recent works such as [43, 52, 28, 6] use prompt designs that allow LLMs to self-verify, self-refine, and self-debug without finetuning. However, these works do not focus on the rigor and trustworthiness of the deductive reasoning process at every reasoning step. In this work, we propose a natural language-based deductive reasoning format that allows LLMs to self-verify every intermediate step of a deductive reasoning process, thereby improving the rigor and trustworthiness of reasoning.


Additionally, while some recent works [12, 53, 15, 34] have proposed methods to verify individual steps in a reasoning process, our approach differs from these works in the following respects: (1) Our approach leverages in-context learning to achieve reasoning verification, without the need to finetune the language model. (2) Our Natural Program-based LLM verification approach not only identifies invalid reasoning steps, but also explicitly explains why they are invalid, detailing the specific reasoning errors involved. (3) Our Natural Program-based reasoning and verification approach is compatible with in-context abstract reasoning tasks whose reasoning steps do not have proof-like entailment structures. For example, our approach is compatible with the Last Letters task, where the LLM is instructed to output, as the final answer, the concatenation of the last letters of all words in a sequence. (4) Our Natural Program approach allows the use of commonsense knowledge not explicitly listed in the premises. For example, consider this problem: “Marin eats 4 apples a day. How many apples does he eat in November?” Even though “November has 30 days” is not explicitly listed in the premises, Natural Program permits the use of such common knowledge within a reasoning step. Our in-context verification process can also handle these implicit premises (e.g., if the LLM outputs “November has 29 days” in a reasoning step, that step will be marked as invalid).
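To make the examples in points (3) and (4) concrete, here is a minimal Python sketch. It is not the paper's method: the paper verifies reasoning steps with an LLM through in-context prompting, whereas this sketch swaps in a deterministic check against Python's standard `calendar` module purely for illustration. The function names `last_letters` and `check_days_in_month_step`, the default year, and the input words are hypothetical.

```python
import calendar


def last_letters(words: list[str]) -> str:
    """Expected answer for the Last Letters task: concatenate the final
    letter of every word in the sequence."""
    return "".join(word[-1] for word in words)


def check_days_in_month_step(month: int, claimed_days: int, year: int = 2023) -> bool:
    """Toy stand-in for verifying a single reasoning step that relies on an
    implicit commonsense premise (the number of days in a month).
    Hypothetical helper: the paper uses an LLM verifier, not this lookup.
    The year only matters for February; November always has 30 days."""
    actual_days = calendar.monthrange(year, month)[1]  # (first weekday, days in month)
    return claimed_days == actual_days


# Last Letters with hypothetical input words: "Elon" -> "n", "Musk" -> "k"
print(last_letters(["Elon", "Musk"]))       # nk

# "Marin eats 4 apples a day. How many apples does he eat in November?"
print(check_days_in_month_step(11, 30))     # True: the step is valid
print(check_days_in_month_step(11, 29))     # False: the step would be marked invalid
print(4 * calendar.monthrange(2023, 11)[1]) # 120
```

The point of the toy checker is the granularity: each step is judged on its own, including premises it uses implicitly, rather than only checking the final answer.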


:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::


Cosmological thinking: time, space and universal causation | Sciencx (2024-09-08T13:58:45+00:00) Solving the AI Hallucination Problem with Self-Verifying Natural Programs. Retrieved from https://www.scien.cx/2024/09/08/solving-the-ai-hallucination-problem-with-self-verifying-natural-programs/
