This content originally appeared on HackerNoon and was authored by Cosmological thinking: time, space and universal causation

:::info Authors:

(1) Zhan Ling, UC San Diego (equal contribution);

(2) Yunhao Fang, UC San Diego (equal contribution);

(3) Xuanlin Li, UC San Diego;

(4) Zhiao Huang, UC San Diego;

(5) Mingu Lee, Qualcomm AI Research;

(6) Roland Memisevic, Qualcomm AI Research;

(7) Hao Su, UC San Diego.

:::

Table of Links

Motivation and Problem Formulation

Deductively Verifiable Chain-of-Thought Reasoning

Conclusion, Acknowledgements and References

A Deductive Verification with Vicuna Models

C More Details on Answer Extraction

E More Deductive Verification Examples

6 Limitations

While we have demonstrated the effectiveness of Natural Program-based deductive reasoning verification to enhance the trustworthiness and interpretability of reasoning steps and final answers, it is

\

\

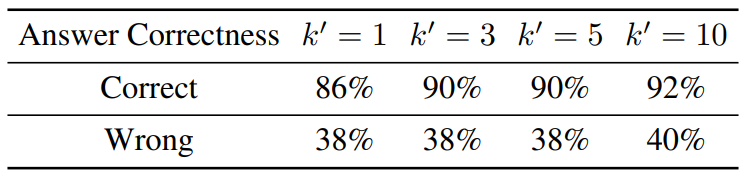

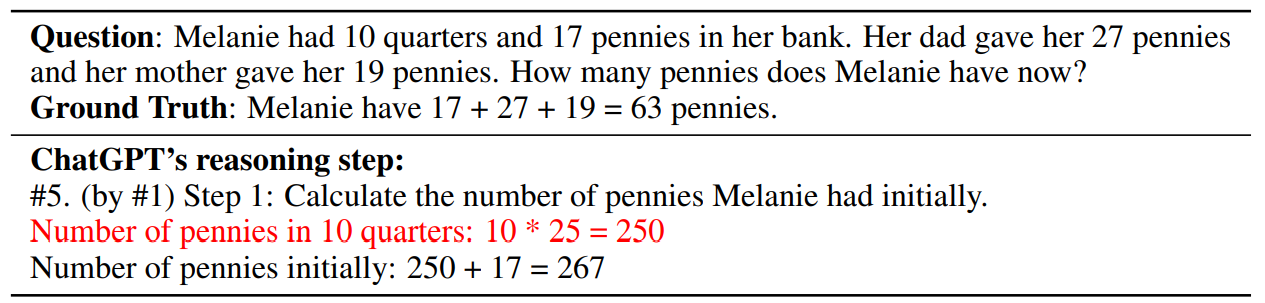

\ important to acknowledge that our approach has limitations. In this section, we analyze a common source of failure cases to gain deeper insights into the behaviors of our approach. The failure case, as shown in Tab. 8, involves the ambiguous interpretation of the term “pennies,” which can be understood as either a type of coin or a unit of currency depending on the context. The ground truth answer interprets “pennies” as coins, while ChatGPT interprets it as a unit of currency. In this case, our deductive verification process is incapable of finding such misinterpretations. Contextual ambiguities like this are common in real-world scenarios, highlighting the current limitation of our approach.

\

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

\


Cosmological thinking: time, space and universal causation | Sciencx (2024-09-08T13:59:10+00:00) When Deductive Reasoning Fails: Contextual Ambiguities in AI Models. Retrieved from https://www.scien.cx/2024/09/08/when-deductive-reasoning-fails-contextual-ambiguities-in-ai-models/
