This content originally appeared on HackerNoon and was authored by Kinetograph: The Video Editing Technology Publication

:::info (1) Feng Liang, The University of Texas at Austin; work partially done during an internship at Meta GenAI (Email: jeffliang@utexas.edu);

(2) Bichen Wu, Meta GenAI (corresponding author);

(3) Jialiang Wang, Meta GenAI;

(4) Licheng Yu, Meta GenAI;

(5) Kunpeng Li, Meta GenAI;

(6) Yinan Zhao, Meta GenAI;

(7) Ishan Misra, Meta GenAI;

(8) Jia-Bin Huang, Meta GenAI;

(9) Peizhao Zhang, Meta GenAI (Email: stzpz@meta.com);

(10) Peter Vajda, Meta GenAI (Email: vajdap@meta.com);

(11) Diana Marculescu, The University of Texas at Austin (Email: dianam@utexas.edu).

:::

Table of Links

- Abstract and Introduction

- 2. Related Work

- 3. Preliminary

- 4. FlowVid

- 4.1. Inflating image U-Net to accommodate video

- 4.2. Training with joint spatial-temporal conditions

- 4.3. Generation: edit the first frame then propagate

- 5. Experiments

- 5.1. Settings

- 5.2. Qualitative results

- 5.3. Quantitative results

- 5.4. Ablation study and 5.5. Limitations

- Conclusion, Acknowledgments and References

- A. Webpage Demo and B. Quantitative comparisons

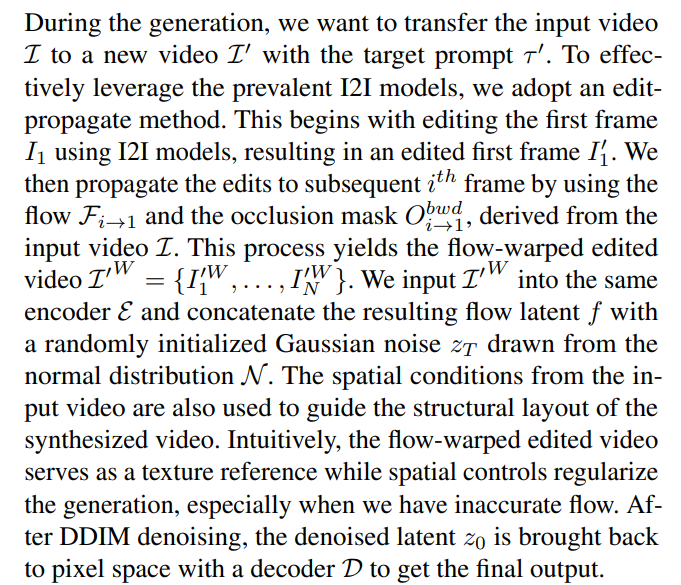

4.3. Generation: edit the first frame then propagate

Another strategy we found advantageous is integrating self-attention features from DDIM inversion, a technique also employed in works such as FateZero [35] and TokenFlow [13]. This integration helps preserve the original structure and motion of the input video. Concretely, we use DDIM inversion to invert the input video with the original prompt and save the intermediate self-attention maps at various timesteps, usually 20. Then, during generation guided by the target prompt, we replace the keys and values within the self-attention modules with the corresponding saved maps.
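
The save-then-substitute idea can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the `KVStore` class, the `self_attention` wrapper, the `mode` flag, and the layer name `"down.0"` are all hypothetical, identity projections stand in for the learned query/key/value weights, and we assume that what gets cached per timestep and layer is the key/value pair computed during inversion.

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention over lists of token vectors."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[d] for w, vj in zip(weights, v))
                    for d in range(len(v[0]))])
    return out

class KVStore:
    """Hypothetical cache of (keys, values), indexed by (timestep, layer)."""
    def __init__(self):
        self.cache = {}

    def save(self, step, layer, k, v):
        self.cache[(step, layer)] = ([r[:] for r in k], [r[:] for r in v])

    def load(self, step, layer):
        return self.cache.get((step, layer))

def self_attention(tokens, step, layer, store, mode):
    # Assumption: identity projections replace the learned W_q, W_k, W_v.
    q, k, v = tokens, tokens, tokens
    if mode == "invert":
        # Inversion pass with the original prompt: record keys and values.
        store.save(step, layer, k, v)
    elif mode == "generate":
        # Generation pass with the target prompt: reuse the saved keys and
        # values when this (timestep, layer) was recorded; otherwise fall
        # back to ordinary self-attention.
        cached = store.load(step, layer)
        if cached is not None:
            k, v = cached
    return attention(q, k, v)

store = KVStore()
source_tokens = [[1.0, 0.0], [0.0, 1.0]]   # features from the input video
edited_tokens = [[0.5, 0.5], [0.2, 0.8]]   # features during editing

self_attention(source_tokens, step=0, layer="down.0", store=store, mode="invert")
injected = self_attention(edited_tokens, step=0, layer="down.0", store=store, mode="generate")
plain = attention(edited_tokens, edited_tokens, edited_tokens)
```

Here `injected` attends from the edited queries to the source video's keys and values, which is how the source structure carries over into the edit, while `plain` is what the layer would produce without substitution. Timesteps that were never recorded simply run unmodified attention.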

:::info This paper is available on arXiv under a CC 4.0 license.

:::

Kinetograph: The Video Editing Technology Publication | Sciencx (2024-10-09T12:00:42+00:00) FlowVid: Taming Imperfect Optical Flows: Generation: Edit the First Frame Then Propagate. Retrieved from https://www.scien.cx/2024/10/09/flowvid-taming-imperfect-optical-flows-generation-edit-the-first-frame-then-propagate/
