This content originally appeared on HackerNoon and was authored by Kinetograph: The Video Editing Technology Publication

:::info (1) Feng Liang, The University of Texas at Austin; work partially done during an internship at Meta GenAI (Email: jeffliang@utexas.edu);

(2) Bichen Wu, Meta GenAI (corresponding author);

(3) Jialiang Wang, Meta GenAI;

(4) Licheng Yu, Meta GenAI;

(5) Kunpeng Li, Meta GenAI;

(6) Yinan Zhao, Meta GenAI;

(7) Ishan Misra, Meta GenAI;

(8) Jia-Bin Huang, Meta GenAI;

(9) Peizhao Zhang, Meta GenAI (Email: stzpz@meta.com);

(10) Peter Vajda, Meta GenAI (Email: vajdap@meta.com);

(11) Diana Marculescu, The University of Texas at Austin (Email: dianam@utexas.edu).

:::

Table of Links

- Abstract and Introduction

- 2. Related Work

- 3. Preliminary

- 4. FlowVid

- 4.1. Inflating image U-Net to accommodate video

- 4.2. Training with joint spatial-temporal conditions

- 4.3. Generation: edit the first frame then propagate

- 5. Experiments

- 5.1. Settings

- 5.2. Qualitative results

- 5.3. Quantitative results

- 5.4. Ablation study and 5.5. Limitations

- Conclusion, Acknowledgments and References

- A. Webpage Demo and B. Quantitative comparisons

4.1. Inflating image U-Net to accommodate video

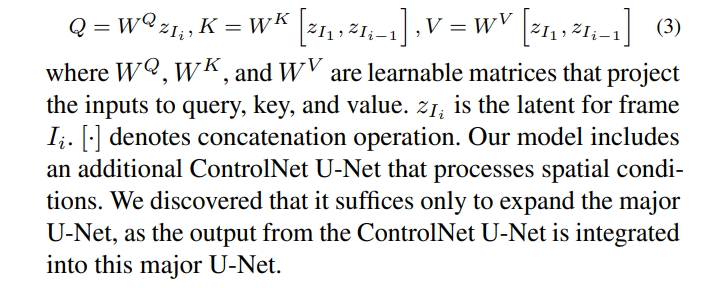

Latent diffusion models (LDMs) are built on the U-Net architecture, which comprises multiple encoder and decoder blocks. Each block has two components: a residual convolutional module and a transformer module. The transformer module, in particular, comprises a spatial self-attention layer, a cross-attention layer, and a feed-forward network. To extend the U-Net architecture to accommodate an additional temporal dimension, we first convert all the 2D layers within the convolutional module to pseudo-3D layers and add an extra temporal self-attention layer [18]. Following common practice [6, 18, 25, 35, 46], we further adapt the spatial self-attention layer into a spatial-temporal self-attention layer. For video frame I_i, the attention matrix takes information from the first frame I_1 and the previous frame I_{i-1}. Specifically, in the Attention(Q, K, V) of spatial-temporal self-attention, the query feature is obtained from frame I_i, while the key and value features are obtained from I_1 and I_{i-1}.
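The frame-wise attention pattern described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `spatial_temporal_attention`, the projection matrices `w_q`, `w_k`, `w_v`, the single-head layout, the standard scaled dot-product softmax, and the handling of the first frame (which has no previous frame and here reuses itself) are all assumptions made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_temporal_attention(z, w_q, w_k, w_v):
    """Single-head sketch of spatial-temporal self-attention.

    z:   (F, N, C) latent tokens for F frames, N spatial tokens each.
    w_q, w_k, w_v: (C, D) hypothetical projection matrices.
    Returns an array of shape (F, N, D).
    """
    num_frames, num_tokens, _ = z.shape
    dim = w_q.shape[1]
    out = np.empty((num_frames, num_tokens, w_v.shape[1]))
    for i in range(num_frames):
        # Assumption: frame 0 has no previous frame, so it reuses itself.
        prev = max(i - 1, 0)
        # Keys and values come from the first frame and the previous frame.
        context = np.concatenate([z[0], z[prev]], axis=0)  # (2N, C)
        q = z[i] @ w_q        # query from the current frame, (N, D)
        k = context @ w_k     # (2N, D)
        v = context @ w_v     # (2N, D)
        # Assumption: standard scaled dot-product attention weights.
        weights = softmax(q @ k.T / np.sqrt(dim), axis=-1)  # (N, 2N)
        out[i] = weights @ v
    return out
```

In this sketch, each frame attends to only two frames' worth of tokens regardless of clip length, so the cost of the attention step grows linearly with the number of frames rather than quadratically.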


:::info This paper is available on arXiv under a CC 4.0 license.

:::


Kinetograph: The Video Editing Technology Publication | Sciencx (2024-10-09T11:30:15+00:00) FlowVid: Taming Imperfect Optical Flows: Inflating Image U-Net to Accommodate Video. Retrieved from https://www.scien.cx/2024/10/09/flowvid-taming-imperfect-optical-flows-inflating-image-u-net-to-accommodate-video/
