This content originally appeared on HackerNoon and was authored by Auto Encoder: How to Ignore the Signal Noise

:::info Authors:

(1) Liang Wang, Microsoft Corporation; correspondence to wangliang@microsoft.com;

(2) Nan Yang, Microsoft Corporation; correspondence to nanya@microsoft.com;

(3) Xiaolong Huang, Microsoft Corporation;

(4) Linjun Yang, Microsoft Corporation;

(5) Rangan Majumder, Microsoft Corporation;

(6) Furu Wei, Microsoft Corporation; correspondence to fuwei@microsoft.com.

:::

Table of Links

3 Method

4 Experiments

4.1 Statistics of the Synthetic Data

4.2 Model Fine-tuning and Evaluation

5 Analysis

5.1 Is Contrastive Pre-training Necessary?

5.2 Extending to Long Text Embeddings and 5.3 Analysis of Training Hyperparameters

B Test Set Contamination Analysis

C Prompts for Synthetic Data Generation

D Instructions for Training and Evaluation

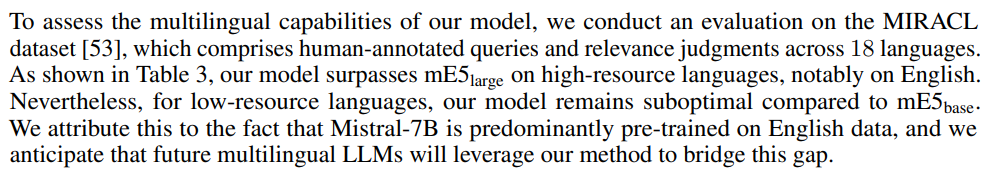

4.4 Multilingual Retrieval


![Table 3: nDCG@10 on the dev set of the MIRACL dataset for both high-resource and low-resource languages. We select the 4 high-resource languages and the 4 low-resource languages according to the number of candidate documents. The numbers for BM25 and mDPR come from Zhang et al. [53]. For the complete results on all 18 languages, please see Table 5.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-vs930cg.png)
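Table 3 reports nDCG@10, which rewards placing relevant passages near the top of the ranked list and normalizes by the best achievable ranking for each query. The sketch below is a minimal illustration of that metric, not the official MIRACL evaluation script. It assumes a hypothetical in-memory format: a run that maps each query ID to a best-first list of document IDs, and relevance judgments (qrels) that map each query ID to `{doc_id: grade}`. It uses linear gain `rel / log2(rank + 1)`; with binary judgments this gives the same score as the common `2^rel - 1` gain variant.

```python
import math
from typing import Dict, List


def dcg_at_k(gains: List[float], k: int = 10) -> float:
    """Discounted cumulative gain: the item at 1-based rank r contributes gain / log2(r + 1)."""
    # enumerate() is 0-based, so rank r = i + 1 and the discount is log2(i + 2).
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains[:k]))


def ndcg_at_k(ranked_doc_ids: List[str], qrels: Dict[str, int], k: int = 10) -> float:
    """nDCG@k for one query.

    ranked_doc_ids: system ranking, best first (hypothetical input format).
    qrels: doc_id -> relevance grade; unjudged documents count as non-relevant.
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    # The ideal ranking orders all judged documents by decreasing relevance.
    ideal = sorted(qrels.values(), reverse=True)
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / idcg if idcg > 0 else 0.0


def mean_ndcg_at_k(
    run: Dict[str, List[str]], qrels: Dict[str, Dict[str, int]], k: int = 10
) -> float:
    """Average nDCG@k over all judged queries; queries absent from the run score 0."""
    scores = [ndcg_at_k(run.get(qid, []), rels, k) for qid, rels in qrels.items()]
    return sum(scores) / len(scores) if scores else 0.0
```

For a single query with relevant documents `d1` and `d3`, the ranking `["d2", "d1", "d3"]` scores about 0.693, while `["d1", "d3"]` scores 1.0. Per-language numbers such as those in Table 3 would be this per-query score averaged over that language's dev queries.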


:::info This paper is available on arXiv under the CC0 1.0 DEED license.

:::


Auto Encoder: How to Ignore the Signal Noise | Sciencx (2024-10-09T18:00:31+00:00) Improving Text Embeddings with Large Language Models: Multilingual Retrieval. Retrieved from https://www.scien.cx/2024/10/09/improving-text-embeddings-withlarge-language-models-multilingual-retrieval/
