This content originally appeared on HackerNoon and was authored by Gamifications FTW Publications

Table of Links

2.2 Gamification of Software Testing

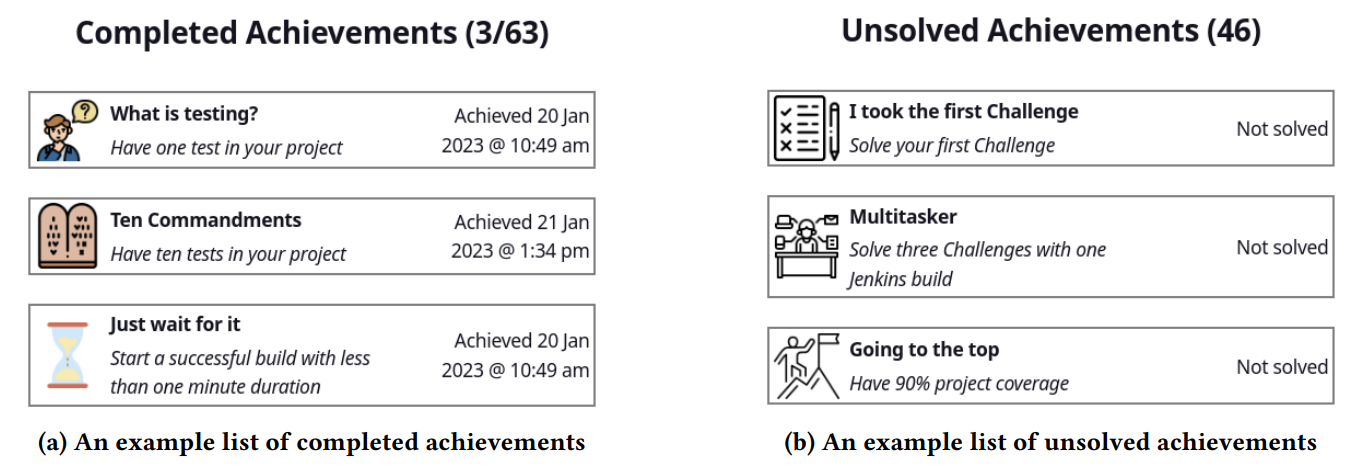

3 Gamifying Continuous Integration and 3.1 Challenges in Teaching Software Testing

3.2 Gamification Elements of Gamekins

3.3 Gamified Elements and the Testing Curriculum

4 Experiment Setup and 4.1 Software Testing Course

4.2 Integration of Gamekins and 4.3 Participants

5.1 RQ1: How did the students use Gamekins during the course?

5.2 RQ2: What testing behavior did the students exhibit?

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

7 Conclusions, Acknowledgments, and References

4 Experiment Setup

To evaluate whether Gamekins influences the testing behavior of students, we integrated it into our software testing course to answer the following research questions:

• RQ1: How did the students use Gamekins during the course?

• RQ2: What testing behavior did the students exhibit?

• RQ3: How did the students perceive the integration of Gamekins into their projects?

4.1 Software Testing Course

In the computer science Bachelor's program at the University of Passau, students are required to take a course on Software Testing. The course consists of two hours of lectures and two hours of exercises per week. The coursework comprises five coding projects that students implement over the semester:

• Introduction: The initial assignment requires students to create and test a small class using JUnit 5 [6].

• Test-driven development: For the second assignment, students implement the model of a canteen web application that displays the current menu. The application has to be developed in a test-driven manner, that is, students write the tests for each feature before implementing it [4].

• Behavior-driven development: For the third assignment, students create the view for the previously implemented model of the canteen web application. To test the graphical user interface (GUI), students use Selenium [7], a web testing framework, and Cucumber [8], a tool for behavior-driven development.

• Line-coverage analyzer (Analyzer): In the fourth project, students implement a simple line-coverage analyzer, which tracks the lines of code that are executed while the tests run.

• Coverage-based fuzzer (Fuzzer): In the last assignment, students implement a coverage-based fuzzer, which uses coverage information to guide the generation of inputs and to prioritize the exploration of untested code paths.

The students’ grades are determined by the performance and correctness of the five projects. The main objective of each project is to implement the required functionality and test it thoroughly, reaching 100 % code coverage. Only the last two projects (Analyzer and Fuzzer) were used for the evaluation.
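To make the Analyzer and Fuzzer projects more concrete, the Java sketch below shows one way the two ideas fit together. It is an illustration under stated assumptions, not the course's reference solution: the class names (`LineCoverage`, `Target`, `CoverageGuidedFuzzer`, `FuzzerDemo`) are hypothetical, the probes that record executed lines are written by hand instead of being inserted by bytecode instrumentation as a real analyzer would do, and the fuzzer uses simple random character mutations. The fuzzer keeps a mutated input in its corpus only when that input executes a line no earlier input has reached, which is what steers it towards untested code.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

// Hypothetical line-coverage analyzer: instrumented code calls hit(line) when a
// line executes. A real analyzer would insert these probes automatically (e.g.
// by rewriting bytecode); in this sketch they are written by hand.
final class LineCoverage {
    private final Set<Integer> covered = new HashSet<>();
    void hit(int line) { covered.add(line); }
    Set<Integer> coveredLines() { return new HashSet<>(covered); }
}

// Hypothetical program under test with hand-written probes on lines 1..5.
final class Target {
    static final int TOTAL_LINES = 5;

    static void parse(String input, LineCoverage cov) {
        cov.hit(1);
        if (input.length() > 0 && input.charAt(0) == 'F') {
            cov.hit(2);
            if (input.length() > 1 && input.charAt(1) == 'U') {
                cov.hit(3);
                if (input.length() > 2 && input.charAt(2) == 'Z') {
                    cov.hit(4); // deep line that purely random inputs rarely reach
                }
            }
        }
        cov.hit(5);
    }

    static double percentCovered(Set<Integer> lines) {
        return 100.0 * lines.size() / TOTAL_LINES;
    }
}

// Coverage-guided fuzzer: mutates corpus inputs and keeps a mutant only if it
// executes at least one line that no earlier input has reached.
final class CoverageGuidedFuzzer {
    private final Random random;
    private final List<String> corpus = new ArrayList<>();
    private final Set<Integer> totalCoverage = new HashSet<>();

    CoverageGuidedFuzzer(long seed, String initialInput) {
        random = new Random(seed);
        corpus.add(initialInput);
    }

    // Inserts, replaces, or deletes one printable ASCII character (32..126).
    private String mutate(String s) {
        StringBuilder sb = new StringBuilder(s);
        char c = (char) (' ' + random.nextInt(95));
        int op = random.nextInt(3);
        if (op == 0 || sb.length() == 0) {
            sb.insert(random.nextInt(sb.length() + 1), c);
        } else if (op == 1) {
            sb.setCharAt(random.nextInt(sb.length()), c);
        } else {
            sb.deleteCharAt(random.nextInt(sb.length()));
        }
        return sb.toString();
    }

    // Executes one input and returns the lines it covered.
    private static Set<Integer> run(String input) {
        LineCoverage cov = new LineCoverage();
        Target.parse(input, cov);
        return cov.coveredLines();
    }

    Set<Integer> fuzz(int iterations) {
        for (String input : corpus) totalCoverage.addAll(run(input));
        for (int i = 0; i < iterations; i++) {
            String child = mutate(corpus.get(random.nextInt(corpus.size())));
            Set<Integer> lines = run(child);
            if (!totalCoverage.containsAll(lines)) { // reached a new line
                totalCoverage.addAll(lines);
                corpus.add(child);
            }
        }
        return new HashSet<>(totalCoverage);
    }

    List<String> corpus() { return new ArrayList<>(corpus); }
}

final class FuzzerDemo {
    public static void main(String[] args) {
        CoverageGuidedFuzzer fuzzer = new CoverageGuidedFuzzer(42L, "");
        Set<Integer> covered = fuzzer.fuzz(200_000);
        System.out.printf("Line coverage: %.1f%%%n", Target.percentCovered(covered));
        System.out.println("Corpus: " + fuzzer.corpus());
    }
}
```

Because the corpus only grows when coverage increases, inputs starting with `F`, then `FU`, then `FUZ` act as stepping stones towards line 4. In this sketch `TOTAL_LINES` has to be kept in sync with the hand-written probes; an automated analyzer would instead derive the set of coverable lines from the instrumented code.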

4.2 Integration of Gamekins

The integration of Gamekins focuses on the last two projects. The introduction task is too small to make meaningful use of CI, and for the test-driven and behavior-driven tasks the commit history has to show evidence that students applied these approaches correctly, which working on Gamekins challenges would interfere with. For the last two assignments, students are required to use Gamekins, writing tests based on the challenges it generates. Since students are evaluated individually, they cannot use the team features of Gamekins. The students’ workflow is as follows: they select a challenge to tackle, write the corresponding test in their IDE, and commit and push the test so that Gamekins can verify it. Their grades are based on adherence to this workflow, and they can conclude their testing efforts once Gamekins can no longer generate new challenges.
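The student workflow described above can be modelled as a simple loop. The Java sketch below is a toy model and not Gamekins' API: it assumes, purely for illustration, that every challenge asks for one specific uncovered line to be covered, and the class names (`Challenge`, `ToyCiServer`, `StudentWorkflow`, `WorkflowDemo`) are hypothetical. Each iteration selects an open challenge, "writes" a test (simulated by a function returning the lines the new test covers), and pushes it so that the server can verify the challenge; the loop ends once no new challenge can be generated.

```java
import java.util.Optional;
import java.util.Set;
import java.util.TreeSet;
import java.util.function.Function;

// Toy model of the workflow, NOT Gamekins' real API. Assumption for
// illustration: each challenge asks for one specific uncovered line to be covered.
final class Challenge {
    private final String className;
    private final int line;
    Challenge(String className, int line) { this.className = className; this.line = line; }
    int line() { return line; }
    @Override public String toString() { return "Cover line " + line + " of " + className; }
}

// Hypothetical CI server that verifies pushed tests and generates challenges.
final class ToyCiServer {
    private final String className;
    private final Set<Integer> allLines;
    private final Set<Integer> coveredLines = new TreeSet<>();

    ToyCiServer(String className, Set<Integer> allLines) {
        this.className = className;
        this.allLines = new TreeSet<>(allLines); // sorted, so challenges are deterministic
    }

    // Returns a challenge for the lowest uncovered line, or empty once none remain.
    Optional<Challenge> nextChallenge() {
        for (int line : allLines) {
            if (!coveredLines.contains(line)) return Optional.of(new Challenge(className, line));
        }
        return Optional.empty();
    }

    // A push delivers the lines the new test covers; true if it solves the challenge.
    boolean push(Challenge challenge, Set<Integer> linesCoveredByNewTest) {
        coveredLines.addAll(linesCoveredByNewTest);
        return coveredLines.contains(challenge.line());
    }
}

final class StudentWorkflow {
    // Select challenge -> write test -> commit and push -> CI verifies; repeat until
    // no challenge is left. maxPushes guards against tests that never solve one.
    static int run(ToyCiServer ci, Function<Challenge, Set<Integer>> writeTest, int maxPushes) {
        int pushes = 0;
        Optional<Challenge> next = ci.nextChallenge();
        while (next.isPresent() && pushes < maxPushes) {
            Challenge challenge = next.get();
            boolean solved = ci.push(challenge, writeTest.apply(challenge));
            pushes++;
            System.out.println(challenge + (solved ? ": solved" : ": still open"));
            next = ci.nextChallenge();
        }
        return pushes;
    }
}

final class WorkflowDemo {
    public static void main(String[] args) {
        ToyCiServer ci = new ToyCiServer("Analyzer", Set.of(1, 2, 3, 4, 5, 6));
        // Simulated student: each new test covers the challenged line and the next one.
        int pushes = StudentWorkflow.run(ci, c -> Set.of(c.line(), c.line() + 1), 100);
        System.out.println("Pushes until no challenge remained: " + pushes);
    }
}
```

With the simulated student in `WorkflowDemo`, whose test for line n also covers line n + 1, three pushes are needed (challenges for lines 1, 3, and 5) before the server runs out of challenges, mirroring the stopping condition used in the course.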

4.3 Participants

The participants of our study are the students enrolled in the Software Testing course in the winter semester 2022/23. A total of 26 students completed the Analyzer project and 25 completed the Fuzzer project (one student dropped out after completing the Analyzer). We obtained their consent for the anonymized use of the data collected by Gamekins. Of these participants, 17 students responded to our survey, which informs our evaluation (as discussed in Section 4.4.3). Two of the 17 respondents identified as female. Participants were primarily in their early to mid-twenties, and the majority of respondents (65 %) reported between one and three years of experience with Java. The largest group of respondents (35 %) reported between three and six months of experience with JUnit, followed by 29 % with six to twelve months.


:::info This paper is available on arXiv under the CC BY-SA 4.0 DEED license.

:::

[6] https://junit.org/junit5/

[7] https://www.selenium.dev/

[8] https://cucumber.io/

:::info Authors:

(1) Philipp Straubinger, University of Passau, Germany;

(2) Gordon Fraser, University of Passau, Germany.

:::


Gamifications FTW Publications | Sciencx (2025-01-20T13:00:03+00:00) Evaluating the Impact of Gamekins on Student Testing Behavior in Software Courses. Retrieved from https://www.scien.cx/2025/01/20/evaluating-the-impact-of-gamekins-on-student-testing-behavior-in-software-courses/
