This content originally appeared on DEV Community and was authored by Alexey Ryazhskikh

I own a pipeline that builds, tests, and deploys many ASP.NET Core services to a k8s cluster. The pipeline runs on Azure DevOps hosted agents. They are scalable, but not robust, so I need to optimize the pipeline's performance.

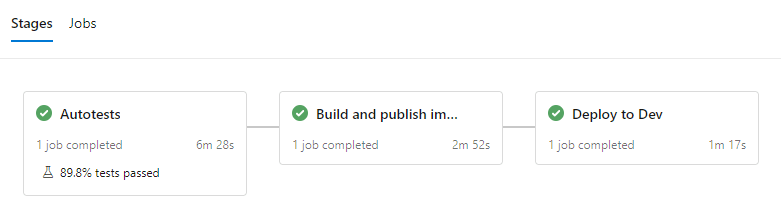

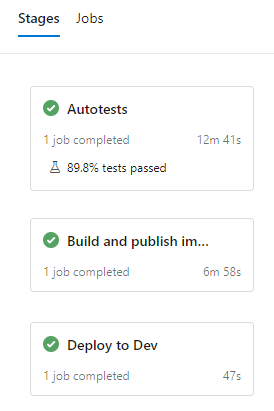

The pipeline has three main stages:

- running various automated tests

- building and pushing a number of Docker images for k8s deployment

- deploying services with Terraform

Running stages in parallel

My pipeline runs in two modes:

- Pull request validation - it runs all tests and executes only terraform plan.

- Deployment mode - it runs all tests and does the actual deployment to the k8s cluster.

My pipeline doesn't change anything in PR validation mode, so I can validate terraform configuration, Dockerfiles, and run autotests in parallel.
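As a minimal sketch of the two modes, a script step could pick the Terraform command from the build reason. It relies on Azure DevOps exposing the predefined variable Build.Reason to scripts as the BUILD_REASON environment variable. The function name terraform_action is hypothetical, and this is not necessarily how the pipeline in this post selects the mode.

```shell
# Print the Terraform subcommand for the current run:
# "plan" for pull request validation, "apply" for everything else.
terraform_action() {
  if [ "${BUILD_REASON:-}" = "PullRequest" ]; then
    echo "plan"
  else
    echo "apply"
  fi
}

# Hypothetical usage inside a pipeline script step:
#   terraform "$(terraform_action)"
```

Keeping the decision in one small function makes it easy to check locally by setting BUILD_REASON by hand.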

By default, each stage depends on the previous one, but you can change this behaviour by setting dependsOn: [] in azure-pipelines.yml:

variables:
  isPullRequestValidation: ${{ eq(variables['Build.Reason'], 'PullRequest') }}

...

stages:
- stage: deploy_to_dev
  displayName: "Deploy to Dev"
  ${{ if eq(variables.isPullRequestValidation, true) }}:
    dependsOn: []

SQL Server startup optimization

For running integration tests, I use docker-compose with SQL Server and test container declarations.

version: "3.7"
services:
  mssql:
    image: "mcr.microsoft.com/mssql/server"
    environment:
      SA_PASSWORD: "Sa123321"
      ACCEPT_EULA: "Y"
    ports:
      - 5433:1433
  integrationTests:
    container_name: IntegrationTests
    image: my/my_integration_tests
    build:
      context: ..
      dockerfile: ./Integration.Tests/Dockerfile

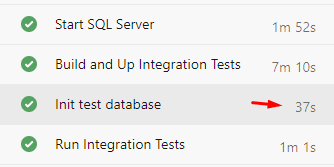

There is a problem with running SQL Server in Docker - it needs a few minutes to start.

My idea is to start SQL Server before I start building the test containers.

For that, I split docker-compose.yml into two files: docker-compose.db.yml and docker-compose.tests.yml.

Docker-compose services running with the same --project-name share the same scope.

So my integration test job does the following steps:

docker-compose -f docker-compose.db.yml --project-name test up -d

docker-compose -f docker-compose.tests.yml --project-name test build

docker-compose -f docker-compose.tests.yml --project-name test up -d

docker-compose -f docker-compose.tests.yml --project-name test exec integrationTests "/src/Integration.Tests/init-db.sh"

docker-compose -f docker-compose.tests.yml --project-name test exec integrationTests dotnet test

After this optimization, the Init test database step no longer waits for SQL Server to start, and I found no degradation in build speed.
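If SQL Server is occasionally still starting when the init step runs, a small retry helper could guard that step. The sketch below is an illustration, not part of the original pipeline: retry_until is a hypothetical name, and the sqlcmd path in the usage comment is an assumption that depends on the SQL Server image version.

```shell
# retry_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS is exhausted.
# Returns 0 on the first success, 1 if every attempt failed.
retry_until() {
  local attempts="$1"
  local delay="$2"
  shift 2
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # No need to sleep after the final failed attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Hypothetical usage (the sqlcmd location is an assumption):
#   retry_until 30 5 docker-compose -f docker-compose.db.yml --project-name test \
#     exec -T mssql /opt/mssql-tools/bin/sqlcmd -S localhost -U sa -P "Sa123321" -Q "SELECT 1"
```

Because the database container is started before the test images are built, the probe would normally succeed on the first attempt, so the helper only costs time when something is wrong.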

.NET Core services build optimization

Build Parallelism

Azure DevOps agents do not guarantee that the Docker cache persists between job runs. However, you can be lucky and get an agent with a prepopulated Docker cache.

In any case, you can rely on the Docker cache for layers built during the current job.

It might be better to have many build tasks in one job, rather than having multiple parallel jobs.

Dockerfile code generation

My pipeline builds one .NET solution with a C# project for each service. Each service has a similar Dockerfile.

I created a simple template tool that generates the Dockerfile for each service, to keep them as similar as possible.

Copy and restore .csproj

A common recommendation is to copy only the *.csproj files of all projects and restore them before copying the other source files. This creates a long-lived cached image layer with the restored dependencies.

# copy only *.csproj and restore
COPY ["MyService/MyService.csproj", "MyService/"]
COPY ["My.WebAPI/My.WebAPI.csproj", "My.WebAPI/"]
RUN dotnet restore "MyService/MyService.csproj"
RUN dotnet restore "My.WebAPI/My.WebAPI.csproj"

# copy the remaining sources and build
COPY . .
RUN dotnet build "My.WebAPI/My.WebAPI.csproj"

This matters less on Azure DevOps hosted agents, where the cache is not guaranteed to survive between runs, but it is useful for local builds.

However, you then need to maintain the list of *.csproj files in every Dockerfile of your project, so each new .csproj forces you to update every Dockerfile in the solution.

I think code-generation is the best way to automate it.
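The template tool itself is not shown here. As a rough illustration of the idea, the COPY lines could be generated from the project files found on disk. The function name generate_csproj_copies is hypothetical, and the sketch assumes project paths without spaces or quotes.

```shell
# Print one Dockerfile COPY instruction per *.csproj found under ROOT
# (default: current directory), sorted so the output is stable between runs.
generate_csproj_copies() {
  local root="${1:-.}"
  ( cd "$root" && find . -type f -name '*.csproj' | sed 's|^\./||' | LC_ALL=C sort ) |
  while IFS= read -r proj; do
    # The destination is the project's own directory inside the image.
    printf 'COPY ["%s", "%s/"]\n' "$proj" "$(dirname "$proj")"
  done
}

# Hypothetical usage: paste the result into a Dockerfile template.
#   generate_csproj_copies . > csproj-copies.generated
```

Sorting the paths keeps the generated lines identical across services, which is what lets Docker reuse the same cached layers.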

Do more, but once

Building a single *.csproj with all its dependencies takes about 1 minute in my case. Building the entire solution takes ~3 minutes. But thanks to image layer caching, I need to build the solution only once.

Here is an example of a service Dockerfile I have:

FROM mcr.microsoft.com/dotnet/aspnet:5.0-buster-slim AS base
WORKDIR /app

FROM mcr.microsoft.com/dotnet/sdk:5.0-buster-slim AS build
WORKDIR /src

# creating common references cache
COPY ["Common/Common.csproj", "Common/"]
RUN dotnet restore "Common/Common.csproj"

COPY . .

# exclude test projects from restore/build
RUN dotnet sln ./My.sln remove **/*Tests.csproj
RUN dotnet restore ./My.sln
RUN dotnet build ./My.sln -c Release
WORKDIR "Services/My.WebAPI"

FROM build AS publish
RUN dotnet publish "My.WebAPI.csproj" -c Release -o /app/publish

FROM base AS final
WORKDIR /app
COPY --from=publish /app/publish .
ENTRYPOINT ["dotnet", "My.WebAPI.dll"]

There is another way to improve solution build speed: exclude unnecessary projects from the .sln file with the dotnet sln command.
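Depending on the shell, a pattern such as **/*Tests.csproj may match only one directory level, so a find-based variant can be more predictable for nested test projects. This is a sketch under assumptions: remove_test_projects and the DOTNET_BIN override are hypothetical names, and the override exists only so the command can be dry-run without the .NET SDK.

```shell
# remove_test_projects SLN [ROOT]
# Removes every *Tests.csproj found under ROOT (default: current directory)
# from the solution file SLN, at any directory depth.
remove_test_projects() {
  local sln="$1"
  local root="${2:-.}"
  # "-exec ... {} +" passes all matches to a single dotnet invocation and
  # runs nothing at all when no test project is found.
  find "$root" -type f -name '*Tests.csproj' \
    -exec "${DOTNET_BIN:-dotnet}" sln "$sln" remove {} +
}

# Hypothetical usage in a Dockerfile RUN step or a build script:
#   remove_test_projects ./My.sln .
```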

A piece of job execution that builds the services

As you can see, building the entire solution took 2 minutes for the first service; the other services reused the cached layers and only ran RUN dotnet publish, which took about 30 seconds.
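One way to get this effect is to build all service images sequentially inside a single job, so that every build after the first finds the shared layers in the local cache. The loop below is only a sketch: the Services/NAME/Dockerfile layout, the my/ image prefix, and the DOCKER_BIN override are assumptions made for illustration.

```shell
# build_services NAME [NAME...]
# Builds one image per service from the repository root, in order, so later
# builds can reuse the layers produced by the first one.
build_services() {
  local docker_bin="${DOCKER_BIN:-docker}"
  local svc
  local tag
  for svc in "$@"; do
    # Docker repository names must be lowercase.
    tag="$(printf '%s' "$svc" | tr '[:upper:]' '[:lower:]')"
    "$docker_bin" build -f "Services/$svc/Dockerfile" -t "my/$tag:latest" . || return 1
  done
}

# Hypothetical usage:
#   build_services My.WebAPI MyService
```

The cache is only shared if the Dockerfiles start with identical instructions and use the same build context, which is where generating them from one template helps.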

Conclusion

- Job parallelism and the use of the Docker layer cache are orthogonal techniques, but you can find the right balance for your project.

- The docker-compose file for integration tests can be split, so that one set of services is built while the other set is initializing.

- Code generation can help to keep Dockerfiles similar and utilize the docker layer cache.


Alexey Ryazhskikh | Sciencx (2021-05-04T21:36:17+00:00) Azure DevOps pipeline optimization for .net core projects. Retrieved from https://www.scien.cx/2021/05/04/azure-devops-pipeline-optimization-for-net-core-projects/
