This content originally appeared on Level Up Coding - Medium and was authored by Davis Kirkendall

FastAPI and Celery

FastAPI is a new and very popular framework for developing Python web APIs. Celery is probably the most widely used Python library for running long-running tasks in web applications.

FastAPI and Celery are often used together (the FastAPI documentation even recommends Celery for heavier background work). Applications in areas like data science and machine learning, where longer-running CPU-bound tasks need to be completed asynchronously, are an ideal match for this combination of libraries.

Developing

To demonstrate a simple application, we’ll start with a fresh Python virtual environment and install our libraries. We’re assuming that Redis is installed and running on localhost, and that you’ve created a virtual environment with Python 3.9 or similar:

pip install fastapi "celery[redis]" uvicorn

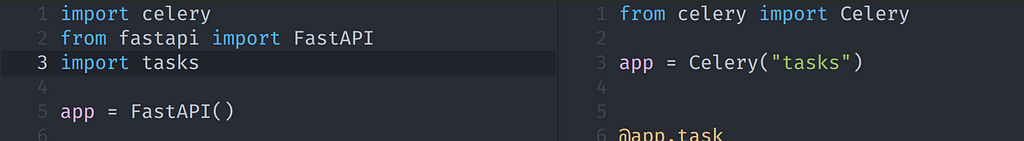

Now let’s create two files. The first, tasks.py, defines our Celery task. The second, main.py, defines a very simple FastAPI app that uses the Celery task we just created.

When developing such applications, developers typically start two processes in separate terminals:

- The API server (running the FastAPI app) and

- The celery worker (running the Celery tasks)

While keeping these processes separate is critical in production, during development it usually isn’t a problem to run them in the same process.

Running both in the same process allows a simpler development flow, since we only need one command and one terminal to start developing.

Solution

Instead of using two terminals and two commands, we can use Celery’s testing utilities to start the Celery worker in a background thread. Because of Python’s global interpreter lock (GIL), CPU-bound tasks running in that thread will slow down or stall the API server. That obviously isn’t acceptable for production deployments, but it shouldn’t be a problem during development. This setup is also much closer to the “real” one than Celery’s task_always_eager setting, which runs tasks inline in the calling process instead of sending them to a worker.

To do that, we’re going to wrap the web server’s command (we’re using Uvicorn here).

This might look strange because it relies on a few hacks, but the important part is celery.contrib.testing.worker.start_worker, which starts the Celery worker in the background. The rest of the code wraps the Uvicorn CLI command so that we can use our new script the same way we would otherwise use Uvicorn. The run.py script accepts the same arguments as the uvicorn command:

python run.py --help

Now we can just start our web server almost the same way that we would otherwise:

python run.py main:app --reload

but instead of just starting the web server, it will also start a Celery worker in the background.

Visiting http://127.0.0.1:8000 will now show:

{"2+2":4}

with the result being calculated in the Celery worker running on a thread in the same process!

Conclusion

While it might seem like overkill to go through this work to save a terminal window, I do think that simplifying the development setup as much as possible for new co-workers or contributors (especially less experienced ones) makes a huge difference. Having a single command to get your development environment running might be worth it in many cases.

Running FastAPI and celery together in a single command was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Davis Kirkendall | Sciencx (2021-08-26T16:06:53+00:00) Running FastAPI and celery together in a single command. Retrieved from https://www.scien.cx/2021/08/26/running-fastapi-and-celery-together-in-a-single-command/
