This content originally appeared on DEV Community and was authored by Taavi Rehemägi

In this article, we'll discuss the potential pitfalls that we came across when configuring ECS task definitions. While considering this AWS-specific container management platform, we'll also examine some general best practices for working with containers in production.

1. Wrong logging configuration

Containers are ephemeral in nature. Once a container finishes execution, the main way to find out whether our job ran successfully is to look at its logs.

Docker has a mechanism called log drivers, which collect a container's stdout and stderr output and forward it to a specified location. If you choose the awslogs log driver, this location is Amazon CloudWatch Logs, but there are other options to choose from.

For example, the splunk log driver allows us to use Splunk instead of CloudWatch. Once that's configured in a task definition, all container logs for that task will be continuously forwarded to that logging service.

When you create a task definition in the AWS management console with awslogs as the log driver, you can select the option "Auto-configure CloudWatch Logs". We recommend choosing this option, as shown in the image below.

Task Definition configuration in the AWS management console --- image by the author

The alternative is to create the log group manually, which takes a single AWS CLI command: `aws logs create-log-group --log-group-name <log-group-name>`.

If you don't set this up properly (i.e., you select awslogs without making sure the log group exists or gets created), your ECS task will fail to start because it can't push container logs to CloudWatch.
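The following Python sketch shows what such a logging setup can look like in a JSON task definition, generated here as a Python dictionary. The container name, image, log group, and region are hypothetical. Instead of creating the log group up front, it sets the awslogs driver's `awslogs-create-group` option; that option only works if the task execution role is also allowed to call `logs:CreateLogGroup`.

```python
import json


def awslogs_log_configuration(log_group: str, region: str, stream_prefix: str,
                              create_group: bool = True) -> dict:
    """Build the logConfiguration block of an ECS container definition
    that uses the awslogs log driver."""
    options = {
        "awslogs-group": log_group,
        "awslogs-region": region,
        "awslogs-stream-prefix": stream_prefix,
    }
    if create_group:
        # Ask the awslogs driver to create the log group if it is missing.
        # The task execution role then also needs logs:CreateLogGroup.
        options["awslogs-create-group"] = "true"
    return {"logDriver": "awslogs", "options": options}


container_definition = {
    "name": "my-app",          # hypothetical container name
    "image": "my-app:latest",  # hypothetical image
    "essential": True,
    "logConfiguration": awslogs_log_configuration(
        "/ecs/my-app", "eu-central-1", "ecs"),  # hypothetical group/region
}

print(json.dumps(container_definition, indent=2))
```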

2. Failing to enable "Auto-assign public IP"

Another common source of potential problems is the network configuration in the "run task" API. Imagine that you configured your task definition and you now want to start the container(s). The "Run Task" wizard in the AWS management console has the option "Auto-assign public IP", as shown in the image below.

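If you select ENABLED, your task receives a public IP address and can therefore send requests over the Internet. If you don't enable it, the task can only reach a container registry such as Amazon ECR or Docker Hub when its subnet routes outbound traffic through a NAT gateway (or, for ECR, through VPC endpoints); otherwise ECS won't be able to pull the container image and the task fails to start.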

Run Task configuration in the AWS management console --- image by the author

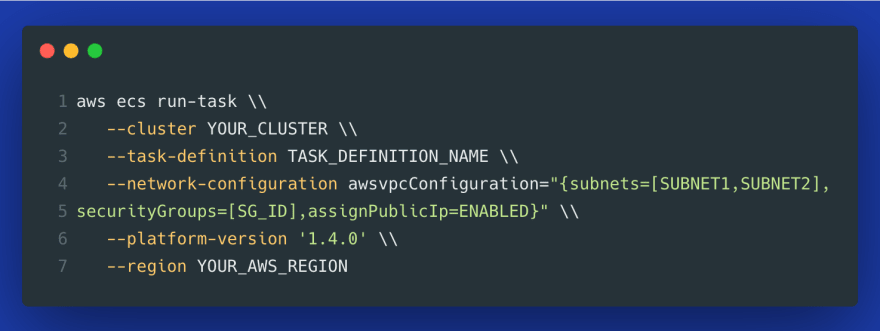

If you use the AWS CLI to run a task, you can set that option through the `--network-configuration` argument: `awsvpcConfiguration={...,assignPublicIp=ENABLED}`.
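As a sketch of the same setting through the API, the following Python snippet builds the keyword arguments for the ECS RunTask call. The cluster, task definition, subnet, and security group IDs are hypothetical placeholders, and the snippet only prints the request instead of sending it.

```python
import json


def network_configuration(subnets, security_groups, public_ip=True):
    """Build the networkConfiguration argument of the ECS RunTask API for a
    task that uses the awsvpc network mode (e.g., on Fargate)."""
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnets),
            "securityGroups": list(security_groups),
            # DISABLED only works if the subnet routes outbound traffic through
            # a NAT gateway or has VPC endpoints for ECR; otherwise the image
            # pull fails and the task does not start.
            "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
        }
    }


run_task_kwargs = {
    "cluster": "my-cluster",        # hypothetical cluster name
    "taskDefinition": "my-app:1",   # hypothetical family:revision
    "launchType": "FARGATE",
    "networkConfiguration": network_configuration(
        ["subnet-0123456789abcdef0"], ["sg-0123456789abcdef0"]),  # hypothetical IDs
}

# With boto3 installed and credentials configured, these keyword arguments
# could be passed to boto3.client("ecs").run_task(**run_task_kwargs).
print(json.dumps(run_task_kwargs, indent=2))
```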

3. Storing credentials in plain text in the ECS task definition

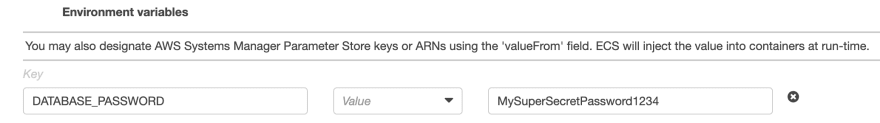

It is common to pass sensitive information to the containers as environment variables defined as plain text in the task definition. The problem with this approach is that anybody who has access to the management console, or to the DescribeTaskDefinition or DescribeTasks API calls, will be able to view those credentials.

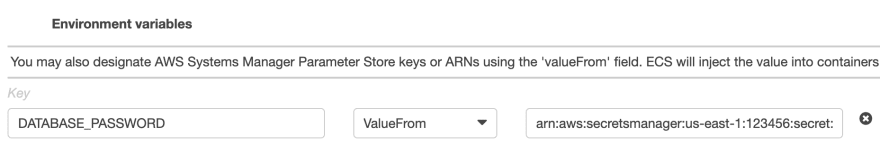

To solve this problem, AWS suggests passing sensitive data to ECS tasks by referencing the ARN of a secret stored in AWS Systems Manager Parameter Store or AWS Secrets Manager instead of putting the value itself into the environment variables. Let's see how to do that and how NOT to do that.

Don't define your passwords in plain text as a Value.
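For illustration, here is the kind of container definition to avoid, written as a Python dictionary, together with a crude, hypothetical name-based check that flags credential-like plain-text variables. The variable name and value are made up.

```python
# Anti-pattern: the password is stored verbatim in the task definition, so
# anyone who can call DescribeTaskDefinition can read it.
insecure_container_definition = {
    "name": "my-app",          # hypothetical container name
    "image": "my-app:latest",  # hypothetical image
    "environment": [
        {"name": "DB_PASSWORD", "value": "s3cr3t"},  # hypothetical secret
    ],
}


def plaintext_env_names(definition: dict) -> list:
    """Return names of plain-text environment variables that look like
    credentials (a crude, name-based heuristic for illustration only)."""
    suspicious = ("PASSWORD", "SECRET", "TOKEN", "KEY")
    return [
        env["name"]
        for env in definition.get("environment", [])
        if any(word in env["name"].upper() for word in suspicious)
    ]


print(plaintext_env_names(insecure_container_definition))  # ['DB_PASSWORD']
```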

Instead, apply one of these two options:

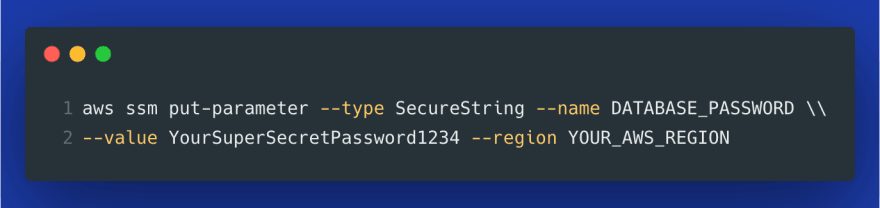

1. Using AWS Systems Manager Parameter Store

Note that instead of Value, we now select ValueFrom from the dropdown.

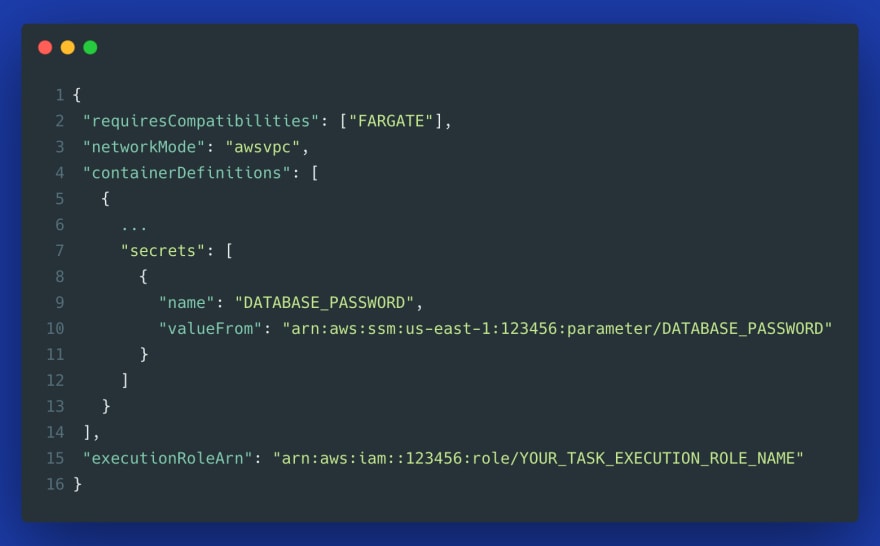

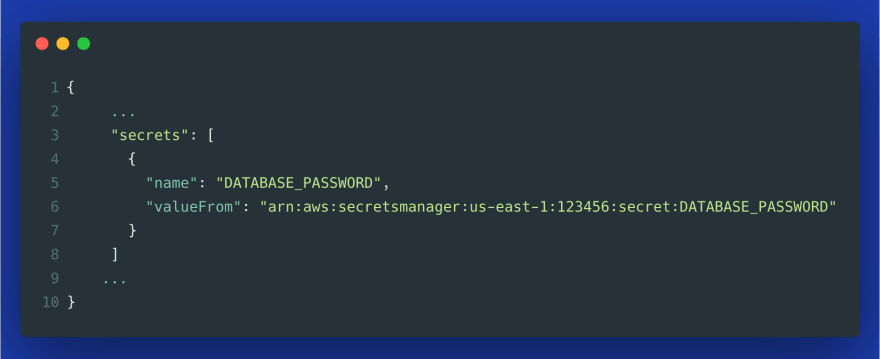

In a JSON-based task definition, the same setting goes into the container definition's `secrets` array as an object with a `name` and a `valueFrom` key.
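A minimal Python sketch of such a container definition, assuming a hypothetical parameter `/my-app/db-password` in the hypothetical account `123456789012`: the helper builds the Parameter Store ARN (`arn:aws:ssm:<region>:<account-id>:parameter/<name>`) and places it in the `secrets` array.

```python
import json


def secret_from_parameter_store(env_name, region, account_id, parameter_name):
    """Build one entry of a container definition's "secrets" array that
    references a Systems Manager Parameter Store parameter by ARN."""
    # Hierarchical names start with "/", which the ARN does not repeat.
    name = parameter_name.lstrip("/")
    arn = f"arn:aws:ssm:{region}:{account_id}:parameter/{name}"
    return {"name": env_name, "valueFrom": arn}


container_definition = {
    "name": "my-app",          # hypothetical container name
    "image": "my-app:latest",  # hypothetical image
    # "secrets" replaces the plain-text "environment" entry; ECS resolves the
    # ARN when the container starts and injects the value as DB_PASSWORD.
    "secrets": [
        secret_from_parameter_store(
            "DB_PASSWORD", "eu-central-1", "123456789012", "/my-app/db-password"),
    ],
}

print(json.dumps(container_definition, indent=2))
```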

2. Using AWS Secrets Manager

In a JSON-based task definition, this looks the same as for Parameter Store; only the `valueFrom` changes and now points to the ARN of the Secrets Manager secret.
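The Secrets Manager variant of the same sketch differs only in the ARN format (`arn:aws:secretsmanager:<region>:<account-id>:secret:<name>`). Secrets Manager appends a random six-character suffix to secret ARNs, so the hypothetical secret name below includes one; the account ID and region are placeholders as well.

```python
import json


def secret_from_secrets_manager(env_name, region, account_id, secret_name_with_suffix):
    """Build one entry of a container definition's "secrets" array that
    references an AWS Secrets Manager secret by its full ARN."""
    arn = (f"arn:aws:secretsmanager:{region}:{account_id}"
           f":secret:{secret_name_with_suffix}")
    return {"name": env_name, "valueFrom": arn}


container_definition = {
    "name": "my-app",          # hypothetical container name
    "image": "my-app:latest",  # hypothetical image
    "secrets": [
        secret_from_secrets_manager(
            "DB_PASSWORD", "eu-central-1", "123456789012",
            "my-app/db-password-AbCdEf"),  # hypothetical name plus suffix
    ],
}

print(json.dumps(container_definition, indent=2))
```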

What's the difference between AWS Systems Manager Parameter Store and AWS Secrets Manager?

While Secrets Manager is designed exclusively to handle secrets, Parameter Store is a much more general service for storing configuration data, parameters, and also secrets --- all of those can be stored either as plain text or as encrypted data.

In contrast, the more specialized Secrets Manager includes additional capabilities to share secrets across AWS accounts, generate random passwords, automatically rotate and encrypt them, and return them after decryption to applications over a secured channel using HTTPS with TLS.

When it comes to price, AWS Secrets Manager is more expensive: at the time of writing, you pay around 40 cents per stored secret per month, plus 5 cents per 10,000 API calls.

With Parameter Store, standard parameters are free, up to 10,000 per account and region. If you need more than 10,000 of them (that would be impressive!), the additional ones have to be advanced parameters, which cost 5 cents per parameter per month.
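To make the price difference concrete, here is a rough Python cost estimate. It assumes $0.40 per secret per month plus $0.05 per 10,000 API calls for Secrets Manager, and for Parameter Store that the first 10,000 parameters are free standard parameters while every additional one is an advanced parameter at $0.05 per month. It is a simplification that ignores Parameter Store's charges for higher-throughput API usage, so check the current AWS pricing pages before relying on it.

```python
def secrets_manager_monthly_cost(num_secrets: int, api_calls: int = 0) -> float:
    """Estimated USD per month: $0.40 per secret plus $0.05 per 10,000 API calls."""
    return round(num_secrets * 0.40 + api_calls / 10_000 * 0.05, 2)


def parameter_store_monthly_cost(num_parameters: int) -> float:
    """Estimated USD per month: the first 10,000 parameters are free standard
    parameters; each additional one is billed as an advanced parameter at $0.05."""
    advanced = max(0, num_parameters - 10_000)
    return round(advanced * 0.05, 2)


for n in (10, 100, 12_000):
    print(f"{n:>6} secrets: Secrets Manager ${secrets_manager_monthly_cost(n):.2f}, "
          f"Parameter Store ${parameter_store_monthly_cost(n):.2f}")
```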

4. Using the same IAM task role for all tasks

There are two essential IAM roles that you need to understand to work with AWS ECS. AWS differentiates between a task execution role, which is a general role that grants permissions to start the containers defined in a task, and a task role that grants permissions to the actual application once the container is started. Let's dive deeper into what both roles entail.

Task Execution Role

This role is not used by the task itself. Instead, it is used by the ECS agent and container runtime environment to prepare the containers to run. If you use the AWS management console, you can choose to let AWS create this role automatically for you. Alternatively, you can create it yourself, but then you need to make sure that the AWS-managed policy called AmazonECSTaskExecutionRolePolicy gets attached to this role. This policy grants permissions to:

- pull the container image from Amazon ECR,

- manage the logs for the task, i.e., create a new CloudWatch log stream within a specified log group and push container logs to this stream during the task run.

Additionally, if you use any secrets in your task definition, you need to attach another policy to the task execution role. This policy allows `ssm:GetParameters` for secrets stored in Parameter Store and/or `secretsmanager:GetSecretValue` for secrets stored in Secrets Manager; you typically need only one of the two.

A policy that grants these actions on `"Resource": "*"` gives the role access to all parameters and secrets in the account. For production, build it in a more granular way and only allow access to the specific secret ARNs the task needs.
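A sketch of a more granular policy, built in Python: it allows `ssm:GetParameters` only on the listed parameter ARNs and `secretsmanager:GetSecretValue` only on the listed secret ARNs. The ARNs are hypothetical. If a secret is encrypted with a customer managed KMS key, the role additionally needs `kms:Decrypt` on that key, which this sketch leaves out.

```python
import json


def secrets_access_policy(parameter_arns=(), secret_arns=()):
    """Build an IAM policy document that lets the task execution role read
    only the listed Parameter Store parameters and Secrets Manager secrets."""
    statements = []
    if parameter_arns:
        statements.append({
            "Effect": "Allow",
            "Action": ["ssm:GetParameters"],
            "Resource": list(parameter_arns),
        })
    if secret_arns:
        statements.append({
            "Effect": "Allow",
            "Action": ["secretsmanager:GetSecretValue"],
            "Resource": list(secret_arns),
        })
    if not statements:
        raise ValueError("at least one parameter or secret ARN is required")
    return {"Version": "2012-10-17", "Statement": statements}


policy = secrets_access_policy(
    parameter_arns=["arn:aws:ssm:eu-central-1:123456789012:parameter/my-app/db-password"],
)
print(json.dumps(policy, indent=2))
```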

Why would you use secrets in a task definition?

- To pass the credentials for an external logging service such as Splunk,

- To avoid storing secrets in plain text as environment variables, as described in the previous section.

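Both use cases can be combined in a single task definition: the Splunk credential goes into the `secretOptions` of the container's log configuration, and application secrets go into the container's `secrets` array.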
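Here is a sketch of a Fargate task definition that covers both cases, expressed as a Python dictionary and printed as JSON. All names, URLs, account IDs, and ARNs are hypothetical: the Splunk token comes from Secrets Manager via `secretOptions` in the log configuration, and the database password comes from Parameter Store via `secrets`.

```python
import json

# Hypothetical ARNs; replace them with your own parameter and secret ARNs.
SPLUNK_TOKEN_ARN = "arn:aws:secretsmanager:eu-central-1:123456789012:secret:splunk-token-AbCdEf"
DB_PASSWORD_ARN = "arn:aws:ssm:eu-central-1:123456789012:parameter/my-app/db-password"

task_definition = {
    "family": "my-app",
    "requiresCompatibilities": ["FARGATE"],
    "networkMode": "awsvpc",
    "cpu": "256",
    "memory": "512",
    # The execution role must be allowed to read both secrets.
    "executionRoleArn": "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
    "containerDefinitions": [
        {
            "name": "my-app",
            "image": "my-app:latest",
            "essential": True,
            # Use case 1: credential for an external logging service.
            "logConfiguration": {
                "logDriver": "splunk",
                "options": {"splunk-url": "https://splunk.example.com:8088"},
                "secretOptions": [
                    {"name": "splunk-token", "valueFrom": SPLUNK_TOKEN_ARN},
                ],
            },
            # Use case 2: application secret injected as an environment variable.
            "secrets": [
                {"name": "DB_PASSWORD", "valueFrom": DB_PASSWORD_ARN},
            ],
        }
    ],
}

print(json.dumps(task_definition, indent=2))
```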

Task Role

In contrast to the task execution role, the task role grants additional AWS permissions required by your application once the container is started. This is only relevant if your container needs access to other AWS resources, such as S3 or DynamoDB.

Why is it suboptimal to use the same IAM task role for multiple tasks?

It's a security best practice to follow the principle of least privilege so that each application has only the bare minimum permissions it really needs. At the same time, having a separate IAM role for each type of project or task makes it easy to change: if your specific ECS task no longer uses DynamoDB but a relational database instead, you can change the permissions on the task role of that task without affecting other ECS tasks. If you used the same role for multiple tasks, you would likely end up with a task role that has far more permissions than any single task requires.
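As an illustration of one role per task, the following Python sketch builds the trust policy that lets ECS tasks assume a role (principal `ecs-tasks.amazonaws.com`) and a separate least-privilege permissions policy for each of two hypothetical tasks. The task names, table ARNs, and chosen DynamoDB actions are assumptions; each task definition would then reference its own role in `taskRoleArn`.

```python
import json

# Trust policy that lets ECS tasks assume the role.
ECS_TASKS_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ecs-tasks.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}


def dynamodb_task_role_policy(table_arn: str,
                              actions=("dynamodb:GetItem", "dynamodb:PutItem")):
    """Least-privilege permissions policy for a task that only needs the
    given actions on a single DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": list(actions),
            "Resource": table_arn,
        }],
    }


# One role (and one permissions policy) per task instead of a shared role.
task_roles = {
    "orders-api": dynamodb_task_role_policy(
        "arn:aws:dynamodb:eu-central-1:123456789012:table/orders"),
    "reports-job": dynamodb_task_role_policy(
        "arn:aws:dynamodb:eu-central-1:123456789012:table/reports",
        actions=("dynamodb:Query",)),
}

print(json.dumps(task_roles, indent=2))
```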

It's considered good practice to define the task role as a regular part of every project, to version control its definition, and allow it to be created automatically by leveraging a CI/CD pipeline.

5. Container images and ECS tasks configured in different AWS regions

You can reduce the latency of your ECS task execution by storing the container images in the same AWS region as your ECS tasks. This reduces image download times because the pull doesn't have to cross regions, and with ECR interface VPC endpoints it doesn't even have to leave your VPC. As a result, pulling an image from ECR in your own region can be faster than pulling it from Docker Hub.
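A small, hypothetical Python helper can catch region mismatches before deployment: it extracts the region from a private ECR image URI (`<account-id>.dkr.ecr.<region>.amazonaws.com/<repository>`) and compares it with the region the task runs in. It only recognizes that standard endpoint format, so images from Docker Hub, public ECR, or other AWS partitions are treated as not being in the same region.

```python
import re
from typing import Optional

# Private ECR image URIs look like
# <account-id>.dkr.ecr.<region>.amazonaws.com/<repository>[:tag|@digest]
ECR_URI = re.compile(r"^\d{12}\.dkr\.ecr\.([a-z0-9-]+)\.amazonaws\.com/")


def ecr_region(image: str) -> Optional[str]:
    """Return the AWS region of a private ECR image URI, or None if the
    image is hosted elsewhere (e.g., Docker Hub)."""
    match = ECR_URI.match(image)
    return match.group(1) if match else None


def same_region(image: str, task_region: str) -> bool:
    """True only if the image lives in a private ECR repository in task_region."""
    return ecr_region(image) == task_region


print(same_region("123456789012.dkr.ecr.eu-central-1.amazonaws.com/my-app:1.0",
                  "eu-central-1"))  # True
```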

6. Using base images that are too big

Big container images are expensive. Container registries such as ECR are billed per GB of storage per month. Smaller images not only save costs but also allow faster container start times, since they can be pulled much more quickly. Also, bigger container images may contain services and features you don't even need in production.

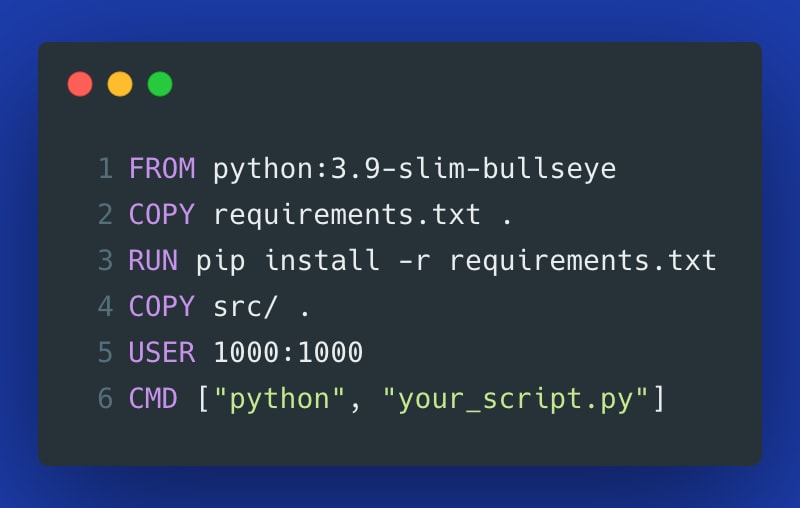

Consider Python container images. The default base images contain many system libraries and binaries, including C and C++ compilers. Those images are great for local development since they contain everything you may need, but they are likely overkill for a production application.

A good practice for building containers that are intended to run on ECS Fargate is to start with a slim version of the image and install only the dependencies you actually need.

Why are small images essential when using ECS Fargate?

When you always run your containers on the same server, your Docker image layers are cached and the image size doesn't matter that much. But Fargate is a serverless, stateless service: your entire image gets pulled from the registry every time your ECS task starts, without any caching.

7. Not allocating enough CPU and memory

One way of mitigating the problem of large container images is to allocate more CPU and memory to your ECS tasks. When a task has more compute resources, even a larger container image can potentially be pulled and executed faster.
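When increasing resources on Fargate, keep in mind that only certain CPU and memory combinations are valid. The Python sketch below encodes the task sizes up to 4 vCPU (AWS has since added larger sizes, which this sketch omits) and picks the smallest valid combination, by CPU first, that meets a required minimum. In the task definition itself, `cpu` and `memory` are given as strings, e.g. `"512"` and `"3072"`.

```python
from typing import Optional, Tuple

# Fargate task-level CPU units (1024 = 1 vCPU) mapped to the memory values
# (MiB) each one accepts, for sizes up to 4 vCPU.
FARGATE_MEMORY_OPTIONS = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu: int, memory: int) -> bool:
    """True if (cpu, memory) is one of the combinations listed above."""
    return memory in FARGATE_MEMORY_OPTIONS.get(cpu, [])


def smallest_size(min_cpu: int, min_memory: int) -> Optional[Tuple[int, int]]:
    """Return the first valid (cpu, memory) pair, smallest CPU first, that
    satisfies both minimums, or None if no listed size is big enough."""
    for cpu in sorted(FARGATE_MEMORY_OPTIONS):
        if cpu < min_cpu:
            continue
        for memory in FARGATE_MEMORY_OPTIONS[cpu]:
            if memory >= min_memory:
                return cpu, memory
    return None


print(smallest_size(256, 3072))  # (512, 3072)
```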

How can you find out whether you allocated enough resources? You can have a look at Dashbird, a serverless observability platform that automatically pulls CloudWatch logs and helps you gain insights into the health of your serverless resources. It visualizes memory and CPU usage and lets you configure alerts based on specific thresholds.

ECS service memory tracking --- image courtesy of Dashbird

Well-architected lens dashboard --- image courtesy of Dashbird

8. Running containers as a root user

By default, if your Dockerfile doesn't include a USER instruction, your container runs as root. Even though Docker restricts what root can do inside a container by granting it fewer capabilities than a host's root user has, this is still dangerous if such a container gets compromised. Adding a USER instruction that switches to a non-root user reduces the attack surface and is considered a security best practice.
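Besides the Dockerfile USER instruction, an ECS container definition can override the user through its `user` parameter. The Python sketch below sets it to a hypothetical UID/GID and adds a rough, hypothetical check for container definitions that may run as root. The check treats an unset `user` as "possibly root", because ECS then falls back to whatever user the image defines.

```python
import json

container_definition = {
    "name": "my-app",          # hypothetical container name
    "image": "my-app:latest",  # hypothetical image
    "essential": True,
    # Run the process as UID 1000 / GID 1000 instead of root. This takes
    # precedence over the image's USER, so the files the application needs
    # must be accessible to this UID.
    "user": "1000:1000",
}


def may_run_as_root(definition: dict) -> bool:
    """Rough check: an unset "user" is treated as possibly root, because ECS
    then uses the image's user (root if the Dockerfile has no USER)."""
    user = str(definition.get("user", "")).strip()
    if not user:
        return True
    return user.split(":")[0] in ("root", "0")


print(json.dumps(container_definition, indent=2))
print(may_run_as_root(container_definition))  # False
```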

Conclusion

This article investigated common pitfalls when using AWS ECS. We looked at logging, networking, and securely passing secrets to containers. Then, we discussed the differences between the task execution role and the task role, and how to use them with ECS tasks. Finally, we examined some general best practices for working with containers.

Taavi Rehemägi | Sciencx (2021-09-20T16:01:08+00:00) 8 common mistakes when using AWS ECS to manage containers. Retrieved from https://www.scien.cx/2021/09/20/8-common-mistakes-when-using-aws-ecs-to-manage-containers/
