This content originally appeared on DEV Community and was authored by Farhim Ferdous

Learn what the Bridge driver is in Docker, how it simplifies multi-container networking on a single host, how to use it, possible use cases and limitations

What is the bridge network driver in Docker and how does it simplify networking on a single host?

This blog will try to answer that (and more) as simply as possible.

Introduction

This blog is the sixth one in a series for Docker Networking.

- blog #1 - Why is Networking important in Docker?

- blog #2 - Basics of Networking

- blog #3 - Docker Network Drivers Overview

- blog #4 - None Network Driver

- blog #5 - Host Network Driver

If you are looking to learn more about the basics of Docker, I recommend checking out the Docker Made Easy series.

Here’s the agenda for this blog:

- What is the bridge network driver?

- How to use it?

- When to use it? (possible use cases)

- Its limitations

Here are some quick reminders:

- A Docker host is a physical or virtual machine that runs the Docker daemon.

- Docker Network drivers enable us to easily use multiple types of networks for containers and hide the complexity required for network implementations.

Alright, so...

What is the bridge driver?

A bridge driver can be used to create an internal network within a single Docker host.

This driver is used most often for applications that require one or more containers running on a single host.

When Docker is started, it automatically creates a default bridge network named bridge, and uses it for containers that do not specify any networks explicitly. We can also create many custom ‘user-defined’ bridge networks.

User-defined bridge networks are superior to the default bridge network in the following ways…

Differences between default bridge and User-defined bridge networks

- User-defined bridges provide automatic DNS resolution between containers

Containers on the default bridge network can only access each other by IP addresses, unless you manually create links using the --link option, which is considered legacy. These links need to be created in both directions, so this gets more complex as the number of containers that need to communicate grows.

On a user-defined bridge network, containers can resolve each other by name or alias. Imagine an application with a web front-end and a database back-end. If you call your containers web and db, the web container can connect to the db container using the hostname db, no matter which Docker host the application stack is running on.

- User-defined bridges provide better isolation

All containers without a --network specified are attached to the default bridge network, which can be a risk, as unrelated stacks/services/containers are then able to communicate.

Using a user-defined network provides a scoped network in which only containers attached to that network are able to communicate.

- Containers can be attached and detached from user-defined networks on the fly

To remove a container from the default bridge network, you need to stop the container and recreate it with different network options.

But you can connect or disconnect containers from user-defined networks on the fly.

- Each user-defined network creates a configurable bridge

If your containers use the default bridge network, you can configure it, but all the containers use the same settings, such as MTU and iptables rules. In addition, configuring the default bridge network happens outside of Docker itself, and requires a restart of the Docker daemon.

User-defined bridge networks are created and configured using the docker network create command. If different groups of applications have different network requirements, you can configure each user-defined bridge separately.

- Linked containers on the default bridge network share environment variables

Originally, the only way to share environment variables between two containers was to link them using the --link flag. Although this is not possible with user-defined networks, there are better alternatives for sharing environment variables, like:

  - sharing files using Docker Volumes

  - sharing variables from a `docker-compose` configuration when using Docker Compose

  - using Docker Secrets and/or Docker Configs when using Docker Swarm Services
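To make the "configurable bridge" and "on the fly" points a bit more concrete, here is a minimal sketch written as small shell helpers. The function names, the subnet 172.28.0.0/16 and the MTU of 1400 are assumptions picked purely for illustration, so adjust them to your own setup.

```shell
# Create a user-defined bridge network with its own subnet and MTU.
# The subnet and MTU values below are illustrative assumptions.
create_bridge() {  # usage: create_bridge <network>
  docker network create \
    --driver bridge \
    --subnet 172.28.0.0/16 \
    --opt com.docker.network.driver.mtu=1400 \
    "$1"
}

# Attach a running container to a network without restarting it.
attach() {  # usage: attach <network> <container>
  docker network connect "$1" "$2"
}

# Detach a running container from a network without restarting it.
detach() {  # usage: detach <network> <container>
  docker network disconnect "$1" "$2"
}
```

For example, `create_bridge demo-net` followed by `attach demo-net web` would put a running container named web (a hypothetical name) on the new network, and `detach demo-net web` would take it off again.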

How to use the bridge driver?

I encourage you to follow along with the hands-on lab that follows.

Demo: Default bridge network with cli

Let’s start off by running two nginx containers (in the background with -d) named app1 and app2:

docker run -d --name app1 nginx:alpine

docker run -d --name app2 nginx:alpine

As discussed previously, when no particular --network is specified from the cli, the default bridge network is used.

We can check that nginx is in fact running on both containers by using docker exec to run curl localhost on both containers:

docker exec app1 curl localhost

docker exec app2 curl localhost

On success, this would print out the index.html page of nginx.

Footnotes:

- docker exec is used to execute a command inside a running container.

- nginx runs on port 80 inside its container.

- curl localhost is the same as curl http://localhost:80

Now let’s try to communicate between the containers.

Trying to reach app2 from app1 using the hostname app2 will not work since DNS doesn’t work out of the box with the default bridge.

docker exec app1 curl app2

Let’s get the IP address of app2 with:

docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' app2

On my system, it is 172.17.0.3 (it could be different on yours).

If we now try to reach app2 from app1 again, but with the IP address:

docker exec app1 curl 172.17.0.3

We should see that it works!
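Since the IP address may differ on your system, the lookup and the request can also be combined instead of copying the address by hand. This is only a sketch: the helper names container_ip and curl_by_ip are made up for this example, and the 5 second timeout is an arbitrary choice.

```shell
# Print the IP address of a container (same template as the inspect command above).
container_ip() {  # usage: container_ip <container>
  docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# From inside container $1, curl container $2 by its looked-up IP address.
curl_by_ip() {  # usage: curl_by_ip <from-container> <to-container>
  docker exec "$1" curl -s --connect-timeout 5 "$(container_ip "$2")"
}
```

With the two containers from this demo, `curl_by_ip app1 app2` should behave like the curl command above, whatever address app2 happens to have.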

Using IP addresses is neither reliable nor easy to maintain: a container's address can change when it is recreated, and more containers could be added to the default network.

If we used the legacy --link option, we could have used the name of the container instead of the IP. But there are major drawbacks to using the default bridge (as discussed in the previous section), and using --link is discouraged.

So, let’s directly go to…

Demo: User-defined bridge network with cli

A user-defined bridge network has to be created before it can be used. So let’s create one named my-bridge:

docker network create --driver bridge my-bridge

NOTE: since bridge is the default network driver, specifying --driver bridge in the command is optional.

We will run two containers again and name them app3 and app4:

docker run -d --name app3 --network my-bridge nginx:alpine

docker run -d --name app4 --network my-bridge nginx:alpine

Again, let’s test if nginx is running properly on both containers:

docker exec app3 curl localhost

docker exec app4 curl localhost

Once both index pages print successfully, let’s move on to communication between the containers.

If we try to reach app4 from app3 using the hostname app4:

docker exec app3 curl app4

We will see that it succeeds!

The same thing will also work when reaching app3 from app4:

docker exec app4 curl app3

Will we be able to reach app2 from app3 using DNS?

docker exec app3 curl app2

Nope.

What about using app2’s IP address? (connection timeout added to not keep curl waiting indefinitely)

docker exec app3 curl 172.17.0.3 --connect-timeout 5

No again.

We cannot reach app2 from app3 (or app4) and vice versa because they are on two separate networks, the default bridge and our user-defined my-bridge.
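To double-check which containers sit on which network, we can ask Docker directly. The helper below is a small sketch (the name network_members is an assumption) that prints the names of the running containers attached to a given network.

```shell
# List the names of the running containers attached to a network.
network_members() {  # usage: network_members <network>
  docker network inspect -f '{{range .Containers}}{{.Name}} {{end}}' "$1"
}
```

At this point in the demo, `network_members bridge` should list app1 and app2, while `network_members my-bridge` should list app3 and app4 (the order may vary).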

Demo: publishing container ports

Containers connected to the same bridge network effectively expose all ports to each other. For a container port to be accessible from the host machine or from hosts on external networks, that container port must be published using the -p or --publish flag.

Let’s see this in action.

All the existing containers have nginx running inside them separately on port 80 of each container, but none of them can be reached from the host’s port 80 yet. We can confirm by running curl on the host:

curl localhost

Assuming you didn’t have any applications running on port 80, curl should return an error like “Connection refused”.

If we want the container’s application (in this case nginx) to be reachable from the host, we can publish its port (i.e. map the container's port to a host port).

docker run -d --name app5 -p 3000:80 nginx:alpine

This will map port 3000 on the host to port 80 inside the container.

If we now try to curl on port 3000 of the host:

curl localhost:3000

We should see the nginx index page.
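Two related things are worth a quick sketch here: checking which host port a container port is mapped to, and publishing a port on the host's loopback interface only, since -p binds to all host interfaces by default. The helper names below are assumptions made for this example.

```shell
# Show the host address and port that each published container port maps to.
published_ports() {  # usage: published_ports <container>
  docker port "$1"
}

# Run nginx with port 80 published only on 127.0.0.1 of the host.
run_local_only() {  # usage: run_local_only <name> <host-port>
  docker run -d --name "$1" -p "127.0.0.1:$2:80" nginx:alpine
}
```

For example, `published_ports app5` should show the mapping for port 80 of app5, and `run_local_only app6 3001` would start another container (app6 is a hypothetical name) that is reachable with curl localhost:3001 from the host but not from other machines.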

Nice work! :)

Clean up

We can remove all containers on the system using the following command:

docker rm -f $(docker ps -aq)

NOTE: this will also remove any containers that were on your system before this tutorial.

Let’s remove the my-bridge network as well.

docker network rm my-bridge
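If you have other containers that you would like to keep, a narrower clean up that only touches what this tutorial created could look like the sketch below (the function name cleanup_demo is an assumption).

```shell
# Remove only the containers and the network created in this tutorial.
cleanup_demo() {
  docker rm -f app1 app2 app3 app4 app5
  docker network rm my-bridge
}
```

The containers are removed first because a network cannot be removed while containers are still attached to it.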

All clean! ✨

When to use the bridge driver? - possible use cases

- When Container Networking is required for a single host

Networks created by using the bridge driver are contained within a single host, so the driver is ideal for most workloads that run on a single machine, like an engineer's local development environment or a company that runs multiple applications on a single server.

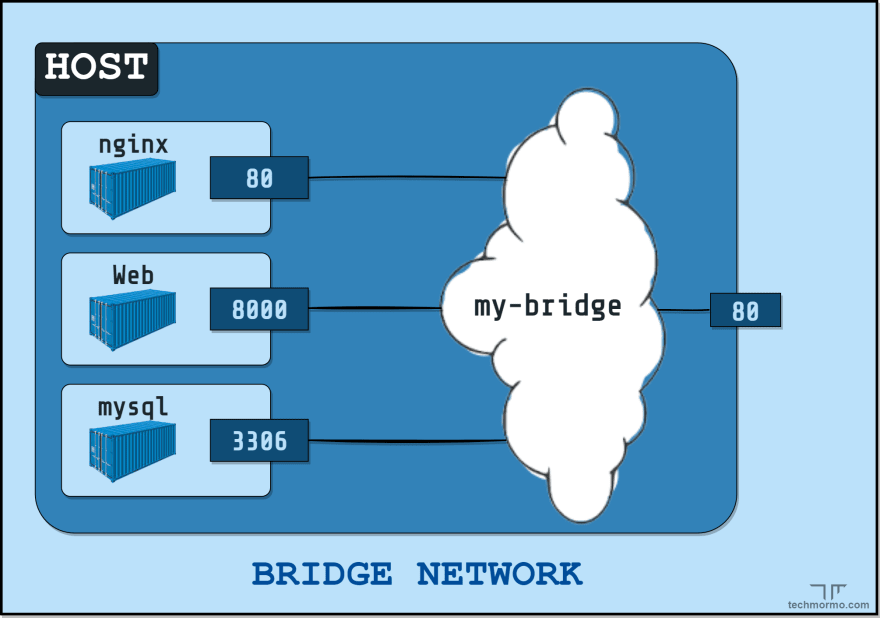

- When using Docker Compose

Docker Compose simplifies running multi-container workloads, especially on a single machine. It does so by using a YAML configuration file (docker-compose.yaml) like the following example:

version: "3.1"

services:
  nginx:
    image: nginx:alpine
    ports:
      - 80:80

  web:
    image: myapp-custom-image

  mysql:
    image: mysql

  # ...

Compose creates and uses user-defined bridge networks out of the box when its services are first created using the docker-compose up command. It also removes the user-defined bridge networks created when services are taken down with docker-compose down.
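To see these networks for yourself, you can list the bridge networks on the host while a Compose project is up. This is a minimal sketch with an assumed helper name. By default, Compose names the network after the project, typically as the project name followed by _default.

```shell
# Print the names of all networks on this host that use the bridge driver.
bridge_networks() {
  docker network ls --filter driver=bridge --format '{{.Name}}'
}
```

Running `bridge_networks` before and after bringing the services up and down should show the Compose-created network appear and disappear.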

NOTE: We will learn more about the awesome Docker Compose tool in an upcoming post, so, stay tuned.

If you know of any other use cases for bridge driver, please let us know.

Limitations of the bridge driver

- Limited to a single host

The bridge driver provides seamless network isolation for containers on a single host only. If networking between multiple hosts is required, either the Overlay driver (recommended) or some custom OS level routing has to be implemented.

- bridge driver is slower than host driver

Since an internal network has to be created and ports have to be mapped (via NAT/userland-proxy) for the bridge driver, it incurs more overhead in terms of raw network performance than the host driver, in exchange for better isolation. If you are not running multiple containers on a single production machine, or if very high network throughput is required, then the host driver might be a better alternative to bridge due to its faster performance.

- default bridge drawbacks

As discussed previously in the section ‘Differences between default bridge and User-defined bridge networks’, the default bridge is far inferior to user-defined ones. The default bridge network is considered a legacy detail of Docker and is not recommended for production use.

Conclusion

In this blog, we learnt about the bridge network driver in Docker: what it is, how to use it, and some possible use cases and limitations.

By creating an internal network, the bridge driver simplifies network isolation on a single host. But its drawbacks are to be kept in mind, especially when using it in production.

I hope I could make things clearer for you, be it just a tiny bit.

In the next blog, we will learn about the overlay driver - which simplifies multi-host container networking.

Thanks for making it this far! 👏

See you at the next one.

Till then…

Be bold and keep learning.

But most importantly,

Tech care!


Farhim Ferdous | Sciencx (2022-06-15T14:10:12+00:00) The Bridge Network Driver | Networking in Docker #6. Retrieved from https://www.scien.cx/2022/06/15/the-bridge-network-driver-networking-in-docker-6/
