This content originally appeared on DEV Community 👩💻👨💻 and was authored by Rose Chege

Message queues (MQs) help you run distributed services. This article covers MQs in more detail, then discusses the benefits cloud-native computing brings to your application and why MQs need it. Finally, it highlights the top five cloud-native MQs that can easily run with Node.js.

The Beginning: Synchronous

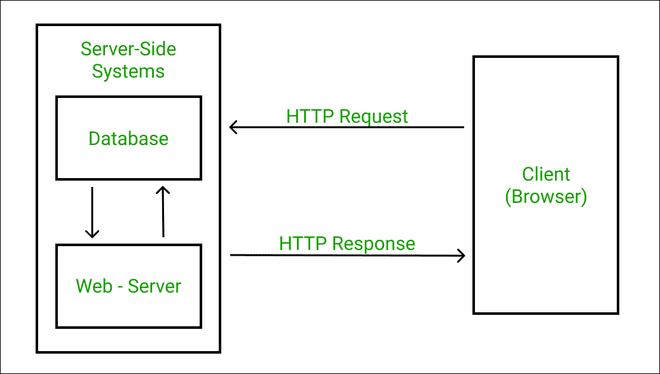

Your application's scalability model is a major consideration when you roll it out. Take a simple application that uses a request-response model. It has three major components: a client, a server, and a database that processes your system data.

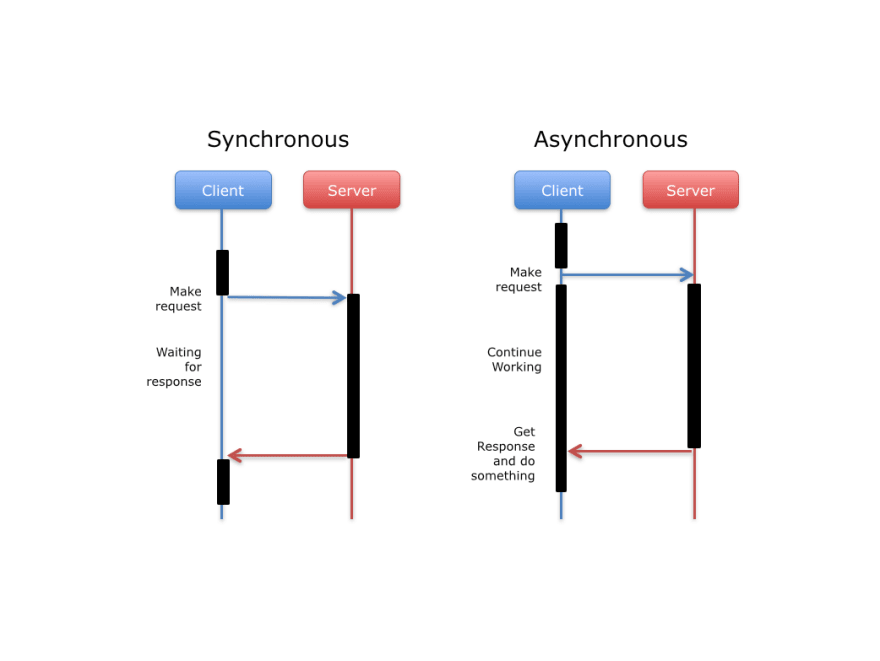

With this approach, once the client sends a request to the server, it has to wait for processing to finish before getting a response. Although this is a limitation, it poses no real processing problems for a simple application with only a few users. You are running a synchronous model that your database can comfortably handle.

What if your system starts to get popular? Your server will start handling more time-consuming tasks to meet the demand of the growing user base. Imagine your synchronous model handling these requests one at a time. The client cannot proceed with any other operations: once it opens the HTTP connection, the connection stays open until the server responds.

The client will have to wait longer for a response as the server receives more and more requests, and you end up with a backlog. Even when the client expects only a simple success message, it has to wait for earlier requests to be processed, since requests are handled in the order they are received. Given that 53% of mobile users abandon sites that take longer than 3 seconds to load, this paradigm creates a huge gap between user expectations and your application's capabilities.
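
To make the backlog concrete, here is a small TypeScript sketch (an illustrative model, not a benchmark) that computes response times for a server that processes requests strictly one at a time. The workload numbers are hypothetical.

```typescript
// Toy model of a synchronous server that handles requests strictly one at a time.
// Assumption for illustration: each request's processing time (ms) is known up front.
function responseTimes(processingMs: number[]): number[] {
  const times: number[] = [];
  let elapsed = 0;
  for (const ms of processingMs) {
    // A request cannot start until every request ahead of it has finished,
    // so its response time is its own work plus all the work queued before it.
    elapsed += ms;
    times.push(elapsed);
  }
  return times;
}

// Hypothetical workload: one slow report, then four quick requests.
const waits = responseTimes([2000, 500, 300, 300, 200]);
const overThreeSeconds = waits.filter((t) => t > 3000).length;

console.log(waits);            // [ 2000, 2500, 2800, 3100, 3300 ]
console.log(overThreeSeconds); // 2
```

In this made-up workload, the last two requests need only 300 ms and 200 ms of actual work, yet both cross the 3-second mark purely because they queue behind earlier requests.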

Introducing Asynchronous

One of the best ways to solve this problem is an asynchronous communication model. An asynchronous model processes the first request and any other requests it receives concurrently, instead of one after another.

The beauty of this approach is that when the client sends a request to the server, it doesn't have to wait for the response. It can go ahead with other operations in the meantime, so your application is no longer stalled by any single slow request.
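
A minimal TypeScript sketch of this pattern, assuming a hypothetical `fakeServerCall` that uses `setTimeout` to simulate network latency: the client starts two requests, keeps working, and waits only once it actually needs the results.

```typescript
// Sketch: an asynchronous client fires requests and keeps working while they complete.
// `fakeServerCall` is a hypothetical stand-in for a network call; setTimeout simulates latency.
const events: string[] = [];

function fakeServerCall(id: number, latencyMs: number): Promise<string> {
  return new Promise((resolve) => {
    setTimeout(() => {
      events.push(`response ${id}`);
      resolve(`ok ${id}`);
    }, latencyMs);
  });
}

async function client(): Promise<string[]> {
  // Both requests are in flight at the same time; neither blocks the other.
  const pending = [fakeServerCall(1, 50), fakeServerCall(2, 10)];
  // The client is free to do other work immediately.
  events.push("client does other work");
  // Only now does the client wait, and it waits for both requests together.
  return Promise.all(pending);
}

const done = client();
done.then((results) => {
  console.log(results); // [ 'ok 1', 'ok 2' ]
  console.log(events);  // events: client does other work, response 2, response 1
});
```

`Promise.all` returns results in the order the requests were started, even though request 2 finished first.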

Getting a Message Queue: Asynchronous Approach

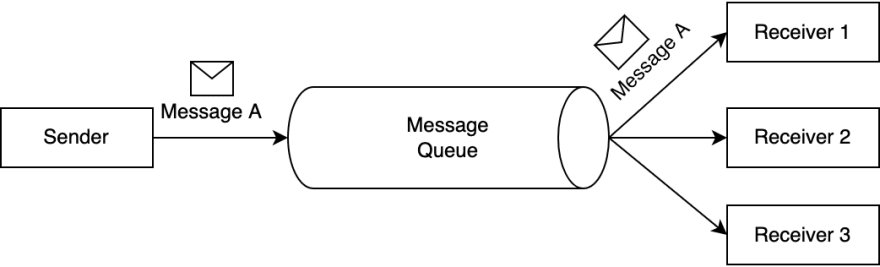

One of the best ways to achieve asynchronous communication is to use a message queue (MQ). With a message queue, the client doesn't have to wait for a task to finish and can execute other tasks in the meantime. It also lets the server carry out tasks in the order, and at the pace, it chooses.

Message queues are a form of asynchronous service-to-service communication used to build highly decoupled, reliable microservice applications. They let one service communicate with another by sending messages.

Message queuing uses a broker, a centralized component that manages messages and ensures they are delivered reliably between services.

This architecture uses messages as the data. The server, also known as the producer, creates the messages (the data) and sends them to the centralized message queuing manager (the broker). The client, also known as the consumer, retrieves the message from the queue and processes the data.

This way, producers can send any amount of data without worrying about consumer availability, and a microservice-based application can absorb multiple heavy, scaling workloads. Whenever the client needs data, it simply requests messages from the queue.
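
The producer, broker, and consumer roles above can be illustrated with a toy in-memory broker in TypeScript. This is a sketch under simplifying assumptions (single process, no persistence, hypothetical method names); real brokers add durability, networking, and more nuanced redelivery rules. It shows two properties described in this section: producers publish without a consumer being online, and the broker keeps a message until a consumer acknowledges it.

```typescript
// Toy, in-memory broker illustrating producer -> broker -> consumer with acknowledgments.
// All names here (InMemoryBroker, publish, receive, ack, requeueUnacked) are hypothetical,
// not the API of any real message queue.
interface QueueMessage {
  id: number;
  body: string;
}

class InMemoryBroker {
  private queue: QueueMessage[] = [];
  private inFlight = new Map<number, QueueMessage>(); // delivered, not yet acknowledged
  private nextId = 1;

  // Producers publish without knowing whether any consumer is currently online.
  publish(body: string): number {
    const msg: QueueMessage = { id: this.nextId++, body };
    this.queue.push(msg);
    return msg.id;
  }

  // Consumers pull the oldest message (FIFO) whenever they are ready.
  receive(): QueueMessage | undefined {
    const msg = this.queue.shift();
    if (msg) this.inFlight.set(msg.id, msg);
    return msg;
  }

  // An ack tells the broker the message was processed and can be discarded.
  ack(id: number): void {
    this.inFlight.delete(id);
  }

  // If a consumer dies before acking, unacknowledged messages go back to the front of the queue.
  requeueUnacked(): void {
    const pending = Array.from(this.inFlight.values()).sort((a, b) => a.id - b.id);
    this.queue.unshift(...pending);
    this.inFlight.clear();
  }

  get depth(): number {
    return this.queue.length;
  }
}

// The producer publishes while no consumer is running.
const broker = new InMemoryBroker();
broker.publish("resize image 1");
broker.publish("resize image 2");

// Later, a consumer connects and works through the queue at its own pace.
const first = broker.receive();
if (first) broker.ack(first.id);

const second = broker.receive(); // simulate a crash: this one is never acked
broker.requeueUnacked();         // so the broker makes it available again

const redelivered = broker.receive();
if (redelivered) broker.ack(redelivered.id);

console.log(first?.body, second?.body, redelivered?.body, broker.depth);
// resize image 1 resize image 2 resize image 2 0
```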

Node.js for Message Queuing

You have decided to run your system asynchronously using a message queuing architecture. Next, you need to implement that architecture to take full advantage of your system's capabilities. One of the best ways to implement message queues is with Node.js (TypeScript/NestJS).

Node.js is a popular technology that offers a wide range of features for your application. These include:

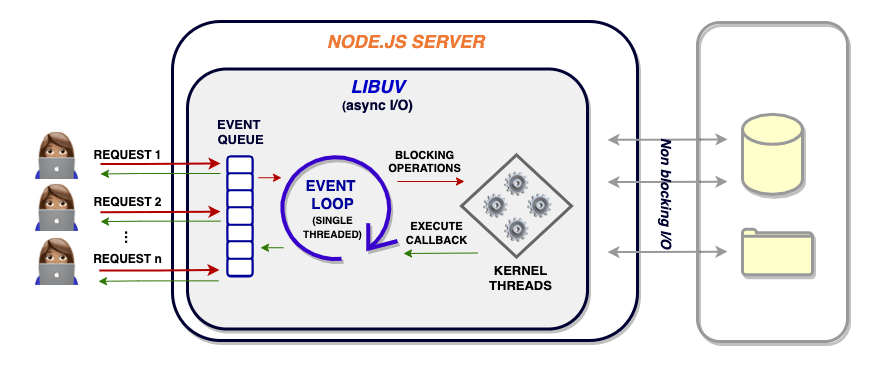

- Asynchronous/non-blocking execution. Node.js lets you create non-blocking servers and APIs: its core I/O APIs are designed to be asynchronous (synchronous variants such as `fs.readFileSync` exist, but they block the thread). While waiting for a response from something outside the current execution chain, Node.js keeps running subsequent tasks. Its single-threaded, asynchronous model makes it well suited to real-time communication, which is exactly the architecture you want for your servers. Because message queues are asynchronous too, the combination lets your application perform at its full potential.

- Event-driven. A Node.js server is event-driven. It uses an event loop to handle many clients concurrently on a single main thread. Instead of creating a dedicated thread for each request, Node.js registers a callback and moves on; only certain operations, such as file system access and some crypto and compression functions, are offloaded to libuv's small worker thread pool. This, again, keeps execution non-blocking.
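
A minimal sketch of the non-blocking, event-driven behavior described above. It assumes `setTimeout` as a stand-in for real I/O so it runs without external services; `handleRequest` is a hypothetical helper.

```typescript
// Sketch of Node.js's non-blocking style: slow work is started, a callback is registered,
// and the main thread moves straight on to the next task.
// Assumption: setTimeout stands in for real I/O such as a database query or network call.
const order: string[] = [];

// Hypothetical request handler used only for this illustration.
function handleRequest(id: string, ioMs: number): void {
  order.push(`${id}: started, waiting on I/O`);
  setTimeout(() => order.push(`${id}: I/O finished`), ioMs);
}

handleRequest("request A", 30); // slow I/O
handleRequest("request B", 10); // fast I/O, even though it arrived second

// Synchronous code is done; the event loop now runs each callback as its timer expires.
setTimeout(() => console.log(order), 60);
// Logged order: A started, B started, B finished, A finished
```

Request B starts and completes while request A is still waiting. For timers like these, the callbacks run on the main thread whenever the event loop picks them up.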


These are a few of the advantages Node.js offers. They make it easier to build scalable microservice applications, and combined with the benefits of message queues, they let you create complex, high-performance apps.

There are many message queues that you can run with Node.js. However, setting one up requires layers of infrastructure such as databases, networks, servers, operating systems, and security. All these elements are challenging to maintain and monitor, and they reduce your infrastructure's portability. Because the layers are tightly coupled, downtime in one layer can severely affect the whole application.

To overcome these challenges, we need modern solutions that exploit a modern infrastructure's flexibility, scalability, and resilience. This is where cloud-native computing comes in: it lets you run and host applications in the cloud and take advantage of the inherent characteristics of the cloud computing software delivery paradigm.

Going Serverless: Running Message Queues as a Service (Cloud Native)

Today, almost every IT tool or product is available as a service, a delivery model that enables serverless architecture, and message queues have joined the cloud bandwagon too. Cloud native offers a serverless development strategy designed to fully exploit cloud computing architecture.

Serverless architecture, a cloud-native development methodology, lets developers build applications without provisioning servers or managing scaling. Instead, the cloud provider abstracts and handles those tasks. This lets developers create vendor-agnostic, auto-provisioned, highly scalable applications and release production-ready, fault-tolerant services faster.

This is facilitated by the fact that:

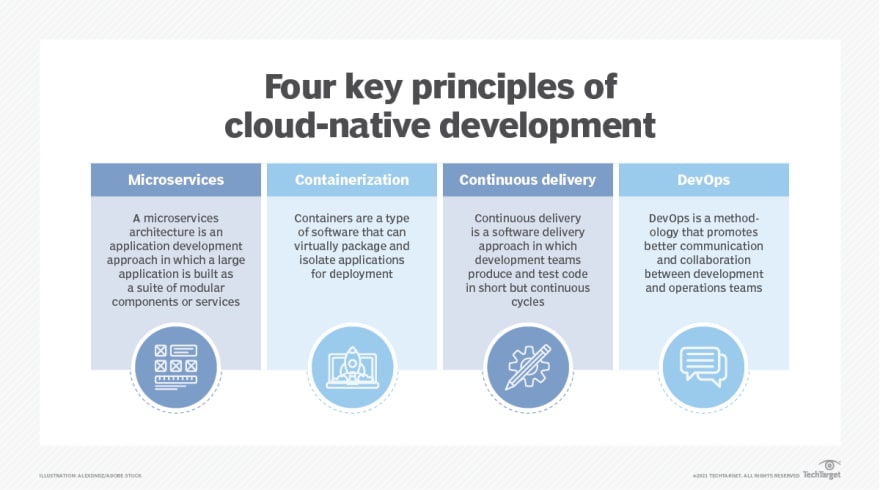

- Cloud-native apps are microservice-centric, so your message queues still run loosely coupled, and specific features can be updated without causing downtime to your apps.

- They are built with Continuous Integration/Continuous Delivery (CI/CD) pipelines in mind. This brings an accelerated Software Development Lifecycle (SDLC) to your team and lets members collaborate at different stages, such as design, development, and testing.

- CI/CD is packed with automation. This allows you to make high-impact changes with minimal effort.

- Cloud-native architecture is container-orchestrated, so you don't have to worry about the underlying platform, operating system, or runtime environment.

To sum up, the principles of cloud-native apps are:

Now imagine running your whole message queue architecture as a service. This is a huge step forward, with many benefits:

- Automation opportunities allow you to focus on other pressing challenges.

- Portability - message queues become vendor agnostic. Containers allow you to connect to different microservices components through port mapping. This way, you avoid vendor lock-in.

- Increased Reliability. You have reduced downtime, and the failure of one component doesn't affect adjacent services.

- Easy to manage and scale your architecture.

- Language - cloud-native message queues are built to work with modern languages and frameworks such as Node.js, TypeScript, Go, Python, and Rust.
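
One way to keep message queue code portable, sketched in TypeScript: application logic depends on a small interface, and each provider gets its own adapter. The `MessageQueue` interface, `InMemoryQueue`, and `placeOrder` are hypothetical names for illustration; a production adapter would wrap a real client library for RabbitMQ, Kafka, or another broker.

```typescript
// Sketch: keep application code vendor-agnostic by depending on a small interface
// instead of a specific broker SDK. All names below are hypothetical.
interface MessageQueue {
  publish(topic: string, payload: string): Promise<void>;
  subscribe(topic: string, handler: (payload: string) => void): void;
}

// One interchangeable implementation, useful for local development and tests.
class InMemoryQueue implements MessageQueue {
  private handlers = new Map<string, Array<(payload: string) => void>>();

  async publish(topic: string, payload: string): Promise<void> {
    for (const handler of this.handlers.get(topic) ?? []) handler(payload);
  }

  subscribe(topic: string, handler: (payload: string) => void): void {
    const list = this.handlers.get(topic) ?? [];
    list.push(handler);
    this.handlers.set(topic, list);
  }
}

// Application logic only sees the interface, so swapping providers is a wiring change.
async function placeOrder(queue: MessageQueue, orderId: string): Promise<void> {
  await queue.publish("orders", JSON.stringify({ orderId }));
}

const received: string[] = [];
const queue: MessageQueue = new InMemoryQueue();
queue.subscribe("orders", (payload) => received.push(payload));
placeOrder(queue, "A-100").then(() => console.log(received)); // [ '{"orderId":"A-100"}' ]
```

Switching providers then means writing another class that implements `MessageQueue`, without touching `placeOrder`.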

Since cloud native can be implemented with a wide range of frameworks and languages, it is easy to choose what you want to work with. Node.js is an excellent candidate for message queuing; as we have seen, it complements the message queuing architecture.

Top 5 Cloud-Native MQs with Node.js Support

Let's dive into the top cloud-native message queues (MQs) that Node.js supports.

1. Memphis

Memphis is an open-source, real-time data processing platform with an embedded distributed message queue. It is made to eliminate the heavy lifting of in-app streaming use cases.

Memphis is cloud-native. It offers a real-time data processing platform built around the producer-consumer paradigm.

What makes Memphis special

- Memphis is a distributed message broker built for async communication.

- It supports numerous Cloud Deployment platforms.

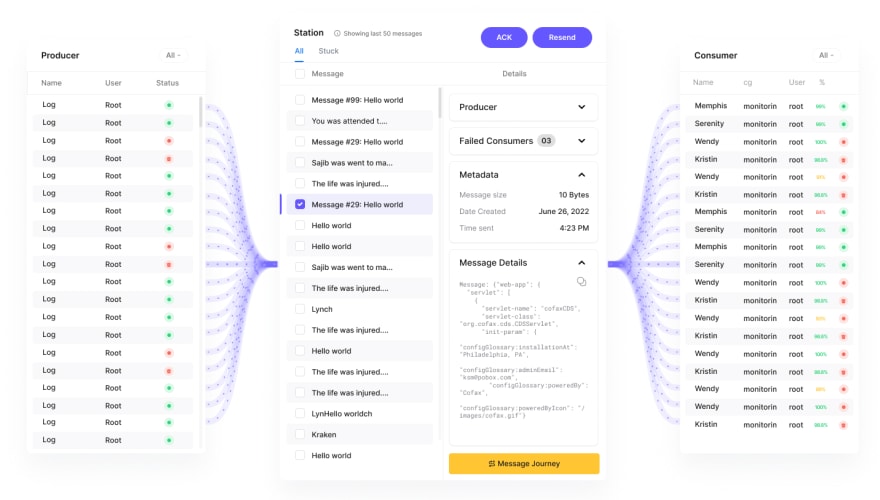

- Unlike other message brokers and queues that use topics and queues, Memphis uses stations.

A station provides easy-to-use message queues. It spares you from creating a never-ending stream of producers and consumers and from handling orchestration, manual scaling, and decentralized monitoring yourself. A station handles all of this for you, and that's what makes Memphis unique. Check out how to create a station in just a few clicks.
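
To illustrate the idea of a station (one named object that owns storage and delivery, so you don't wire queues together yourself), here is a hypothetical in-memory TypeScript model. It is not the Memphis SDK; the class, the method names, and the assumption that each consumer group reads every message independently are illustrative only.

```typescript
// Hypothetical in-memory model of the *idea* of a station: one named object that stores
// messages and tracks delivery per consumer group. This is NOT the Memphis SDK;
// names and semantics are illustrative assumptions.
class ToyStation {
  readonly stationName: string;
  private messages: string[] = [];
  private positions = new Map<string, number>(); // consumer group -> next index to read

  constructor(stationName: string) {
    this.stationName = stationName;
  }

  produce(message: string): void {
    this.messages.push(message);
  }

  // Each consumer group reads the whole stream independently, at its own pace.
  consume(group: string, max: number): string[] {
    const start = this.positions.get(group) ?? 0;
    const batch = this.messages.slice(start, start + max);
    this.positions.set(group, start + batch.length);
    return batch;
  }
}

const station = new ToyStation("orders");
["order 1", "order 2", "order 3"].forEach((m) => station.produce(m));

const billingFirst = station.consume("billing", 2);  // [ 'order 1', 'order 2' ]
const shippingAll = station.consume("shipping", 10); // [ 'order 1', 'order 2', 'order 3' ]
const billingNext = station.consume("billing", 2);   // [ 'order 3' ]
console.log(billingFirst, shippingAll, billingNext);
```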

Here is a basic example of a Memphis station at work:

Memphis is great for cloud-native app development. It uses modern tools to create a development stack. These include:

- Docker - lets your application run in isolated, virtualized containers and run microservices at scale.

- Kubernetes - offers orchestration services that let you decide how and where to run your containers.

- Terraform - an IaC (Infrastructure as Code) tool that defines resources as code.

- Node.js support. Node.js is a popular JavaScript runtime that is great for creating real-time applications and microservices of any kind. It lets you build servers and routes that connect microservices.

Memphis has great support for Node.js and TypeScript. Check out the Memphis SDKs for Node.js and TypeScript and start creating producers and consumers. Memphis also supports other server-side languages, such as Go and Python.

Ready to deploy your Memphis message queue? Choose your preferred environment and run a broker like never before. You can run Memphis on:

2. RabbitMQ

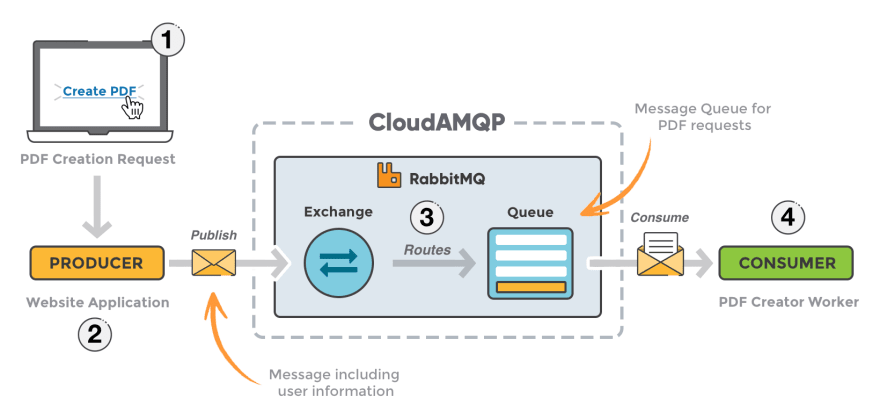

RabbitMQ is an open-source distributed message broker. With the rise of microservice architectures, where every concern has its own runtime that scales independently, RabbitMQ lets these microservices communicate asynchronously over a variety of protocols to run large-scale applications.

RabbitMQ uses the Advanced Message Queuing Protocol (AMQP), an open standard that lets conforming client applications communicate effectively with conforming middleware brokers such as RabbitMQ.

RabbitMQ uses exchanges to route messages. A producer does not publish a message directly to a queue. Instead, it publishes to an exchange, which uses bindings and routing keys to determine which queue(s) the message belongs to. Here is a basic example of RabbitMQ infrastructure:

Check this guide and learn what RabbitMQ is and the important concepts around it.
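
The exchange-to-queue routing described above can be modeled in a few lines of TypeScript. This toy covers only a direct exchange (exact match between routing key and binding key) and is not the amqplib API; the queue names and routing keys are made up.

```typescript
// Toy model of RabbitMQ-style routing through a *direct* exchange. Producers publish to the
// exchange with a routing key; bindings decide which queues get a copy.
// Illustration only: not the amqplib API, and other exchange types route differently.
class ToyDirectExchange {
  private bindings: Array<{ queue: string; bindingKey: string }> = [];
  readonly queues = new Map<string, string[]>();

  bind(queue: string, bindingKey: string): void {
    const exists = this.bindings.some((b) => b.queue === queue && b.bindingKey === bindingKey);
    if (!exists) this.bindings.push({ queue, bindingKey });
    if (!this.queues.has(queue)) this.queues.set(queue, []);
  }

  // Delivers to every queue whose binding key equals the routing key and returns the count.
  // 0 means the message was unroutable (RabbitMQ drops such messages by default).
  publish(routingKey: string, body: string): number {
    let delivered = 0;
    for (const b of this.bindings) {
      if (b.bindingKey === routingKey) {
        this.queues.get(b.queue)!.push(body);
        delivered++;
      }
    }
    return delivered;
  }
}

const exchange = new ToyDirectExchange();
exchange.bind("email-queue", "user.signup");
exchange.bind("audit-queue", "user.signup");
exchange.bind("billing-queue", "invoice.created");

const signupCopies = exchange.publish("user.signup", "welcome alice");  // 2
const invoiceCopies = exchange.publish("invoice.created", "invoice 1"); // 1
const lostCopies = exchange.publish("user.deleted", "bye bob");         // 0: no binding matches

console.log(signupCopies, invoiceCopies, lostCopies); // 2 1 0
console.log(exchange.queues.get("audit-queue"));      // [ 'welcome alice' ]
```

Because the producer only knows the exchange and the routing key, a new queue such as `audit-queue` can be bound without changing producer code.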

RabbitMQ supports distributed deployment, so you can set up instances in a highly available manner. Like Memphis, RabbitMQ can run in the cloud, which lets you operate a highly available cluster on top of infrastructure such as Kubernetes.

RabbitMQ also offers client libraries for many popular programming languages suited to developing scalable apps. You can run your cloud-hosted RabbitMQ architecture with Node.js and take advantage of its features.

Here are RabbitMQ features that you can comfortably run with Node.js:

3. Kafka

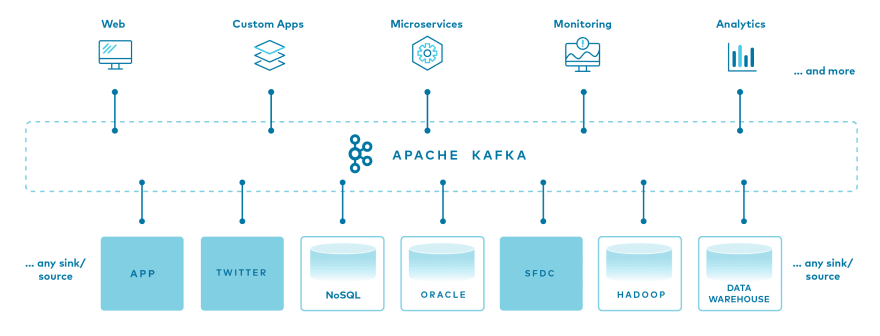

Kafka is a distributed event streaming platform for high-performance data pipelines. Over 80% of all Fortune 100 companies use Kafka to handle their event streams.

These events are generated from different services. Kafka then streams and persists these events to other targets. Kafka is made to connect hundreds of event sources.

Kafka is well known for its performance. Because it is a distributed platform, building a message queuing system on top of Kafka is relatively straightforward. Check this A-Z Guide and learn about Apache Kafka Architecture and Its Components.

To stream events at scale and with speed, Kafka offers five core capabilities:

- Producer (publisher) - the data source that publishes streams of events to Kafka topics.

- Consumer - an application that subscribes to one or more Kafka topics and reads their events.

- Process - the Kafka Streams API acts as a stream processor: it consumes input streams from one or more topics and produces output streams to one or more topics.

- Connect - Kafka Connect scales data streaming between Apache Kafka and other systems. It links Kafka topics to existing applications and moves data into the Kafka cluster from external sources, and out of it.

- Store - Kafka durably stores streams of events, so consumers can read (and re-read) them later.
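
To show how topics, offsets, and storage fit together, here is a toy TypeScript model of a partitioned, append-only topic. The string hash is a simplification (Kafka's default partitioner for keyed records uses murmur2), and the class and method names are hypothetical.

```typescript
// Toy model of a Kafka-style topic: an append-only log split into partitions.
// Records with the same key land in the same partition, preserving per-key order,
// and each record gets an increasing offset within its partition.
// The simple string hash is illustrative; it is not Kafka's partitioner.
interface TopicRecord {
  key: string;
  value: string;
  offset: number;
}

class ToyTopic {
  readonly partitions: TopicRecord[][];

  constructor(partitionCount: number) {
    this.partitions = Array.from({ length: partitionCount }, () => [] as TopicRecord[]);
  }

  private partitionFor(key: string): number {
    let hash = 0;
    for (let i = 0; i < key.length; i++) {
      hash = (hash * 31 + key.charCodeAt(i)) >>> 0; // keep it an unsigned 32-bit int
    }
    return hash % this.partitions.length;
  }

  // Producer side: append the record and return where it was stored.
  produce(key: string, value: string): { partition: number; offset: number } {
    const partition = this.partitionFor(key);
    const log = this.partitions[partition];
    const offset = log.length;
    log.push({ key, value, offset });
    return { partition, offset };
  }

  // Consumer side: read from a given offset; records are not deleted on read.
  read(partition: number, fromOffset: number): TopicRecord[] {
    return this.partitions[partition].slice(fromOffset);
  }
}

const topic = new ToyTopic(3);
const first = topic.produce("user-42", "logged in");
topic.produce("user-7", "added to cart");
const second = topic.produce("user-42", "checked out");

// Same key, same partition, and a later offset: per-key order is preserved.
console.log(first.partition === second.partition, second.offset > first.offset); // true true

// Reading does not remove records, so another consumer could replay from offset 0.
const user42Events = topic
  .read(first.partition, 0)
  .filter((r) => r.key === "user-42")
  .map((r) => r.value);
console.log(user42Events); // [ 'logged in', 'checked out' ]
```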

So what makes Kafka popular?

Some advantages that make Kafka popular include:

- Scalability

- High Throughput

- Low Latency

- Fault Tolerance

- Reliability

- Durability

Despite its popularity and advantages, Kafka is a challenging infrastructure to set up, deploy, scale and manage for on-premises production.

To avoid these challenges, Kafka is now available as a managed service in the cloud. The cloud provider performs the hard tasks, such as complex cluster sizing and provisioning, building, and maintaining the Kafka infrastructure, while you focus on your application logic. This makes it easy to deploy Kafka without specific Kafka infrastructure management expertise.

Kafka supports various programming languages for writing your producers and consumers. Kafka cloud platforms, such as Confluent Cloud, let you build Kafka producers and consumers in Node.js.

4. Strimzi

Many message queue technologies now leverage dynamic infrastructure such as Kubernetes, and, as we have seen, it's easier to run your Kafka streams on a cloud-native platform. Strimzi provides deployment configurations for Kafka that let you run an Apache Kafka cluster on Kubernetes, spreading brokers across availability zones and dedicated nodes.

Strimzi is a Cloud Native Computing Foundation sandbox project. It provides Kubernetes-native management of Kafka's many features, such as clusters, topics, users, Kafka MirrorMaker, and Kafka Connect. It also lets you expose Kafka outside Kubernetes using NodePort, OpenShift Routes, Ingress, or load balancers.

Once Kafka runs with the Kubernetes-native support that Strimzi offers, you can start using Node.js to create your streams with libraries such as KafkaJS, a modern Apache Kafka client for Node.js.

5. Redpanda

Kafka has traditionally relied on Apache ZooKeeper to keep track of the brokers in a cluster.

Redpanda is a streaming data platform that is Kafka API-compatible, ZooKeeper-free, and JVM-free. It keeps the familiar Kafka API while being engineered for speed. Redpanda lets you pick the deployment option that best suits your needs.

These include:

Because Redpanda can run on infrastructure such as Kubernetes, you can run it on a Kubernetes cluster in the cloud and access it from outside the Kubernetes network.

You can create a Kubernetes cluster and run your Redpanda brokers on cloud providers such as Google Kubernetes Engine (GKE), Amazon EKS, and DigitalOcean.

Because Redpanda is Kafka API-compatible, you can leverage the many client libraries created for Kafka, such as the KafkaJS client for Node.js.
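
Since Redpanda speaks the Kafka API, switching a Kafka client to Redpanda is often a matter of pointing it at different bootstrap brokers. Here is a small TypeScript sketch of reading that list from configuration; the `KAFKA_BROKERS` variable name and the `localhost:9092` default are assumptions for illustration, not Redpanda or KafkaJS conventions.

```typescript
// Sketch: build a Kafka bootstrap broker list from environment-style configuration.
// Assumptions: the KAFKA_BROKERS variable name and the localhost:9092 default are illustrative.
function brokersFromEnv(
  env: Record<string, string | undefined>,
  fallback = "localhost:9092",
): string[] {
  const raw = env["KAFKA_BROKERS"] ?? fallback;
  return raw
    .split(",")
    .map((s) => s.trim())     // tolerate spaces after commas
    .filter((s) => s.length > 0);
}

// The same client code can point at Apache Kafka, a Strimzi-managed cluster, or Redpanda.
const fromEnv = brokersFromEnv({ KAFKA_BROKERS: "redpanda-0:9092, redpanda-1:9092" });
const localBrokers = brokersFromEnv({});

console.log(fromEnv);      // [ 'redpanda-0:9092', 'redpanda-1:9092' ]
console.log(localBrokers); // [ 'localhost:9092' ]
```

In a Node.js service you could call `brokersFromEnv(process.env)` and pass the result as the `brokers` option of KafkaJS's `new Kafka({ clientId, brokers })`; the producer and consumer code can often stay the same whichever Kafka API-compatible cluster you target.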

Check this guide and learn how to use Node.js with Redpanda.

Conclusion

There are many message queues that you can use. However, cloud-native message queues stand out: they let you run message queuing with scalability at the core. This article helped you learn more about cloud-native MQs and the best choices you can use with Node.js.

Rose Chege | Sciencx (2022-09-13T12:53:12+00:00) Top 5 Cloud-Native Message Queues (MQs) with Node.js Support. Retrieved from https://www.scien.cx/2022/09/13/top-5-cloud-native-message-queues-mqs-with-node-js-support/
