This content originally appeared on Bits and Pieces - Medium and was authored by Vaibhav Moradiya

Exploring the basics of Node.js streams — and how they can be used to handle large datasets efficiently.

Good day, everyone. Today we are going to dive into an essential concept in Node.js called “streams.” In simple terms, streams are a way to handle data that is too large to be loaded into memory all at once. Instead of loading the entire data set, we can break it down into smaller chunks and process it bit by bit. This not only reduces memory usage but also enables faster data processing.

Using writable streams

- Let us understand why streams are needed. Suppose we need to write one million numbers (0 to 999,999) to a file using the fs module. The first solution that comes to mind is to open the file, loop through the numbers, and call the write method for each one. However, this approach can take up to 9 seconds with 100% CPU usage on a single core.

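const fs = require('node:fs/promises');

(async () => {
  console.time("writeMany");
  const fileHandle = await fs.open("test1.txt", "w");
  for (let i = 0; i < 1000000; i++) {
    // Each write is awaited, so the million writes run strictly one after another
    await fileHandle.write(` ${i} `);
  }
  await fileHandle.close();
  console.timeEnd("writeMany");
})();

- Alternatively, we can use the callback-based fs.write() and pass it a callback function directly.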

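- However, fs.write() is asynchronous: the loop only schedules the writes, so we reach console.timeEnd("writeMany") while many of them are still pending in the event loop. The Node.js documentation also warns that calling fs.write() repeatedly on the same file without waiting for the callback is unsafe. As a result, the output file will not contain an ordered sequence of numbers.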

const fs = require('node:fs');

(async () => {

console.time("writeMany")

fs.open("test.txt", "w", (err, fd) => {

for(let i=0;i<1000000;i++){

fs.write(fd,` ${i} `, () => {})

}

console.timeEnd("writeMany")

})

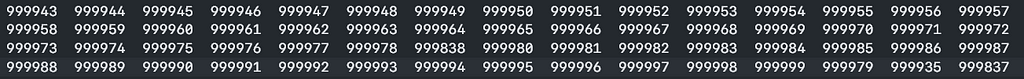

})()- We don’t know which callback is called first in the event loop. As shown in the image below, the numbers are not in any particular order.

If we increase the count further, we might even run out of memory with the callback approach, because every pending write request stays in memory until it completes.

- We can use fs.writeSync() to write the numbers in sequential order. The execution now takes about 2 seconds and uses 100% of the CPU on a single core.

const fs = require('node:fs');

(async () => {
  console.time("writeMany");
  fs.open("test.txt", "w", (err, fd) => {
    if (err) throw err;
    for (let i = 0; i < 1000000; i++) {
      // Blocks until this number is written, so the order is preserved
      fs.writeSync(fd, ` ${i} `);
    }
    fs.closeSync(fd);
    console.timeEnd("writeMany");
  });
})();

- Additionally, we can make the character encoding explicit, or convert each string to a Buffer ourselves, as in the code below. Keep in mind, however, that this Buffer version runs slightly slower than the previous code, because a new Buffer is allocated on every iteration.

const fs = require('node:fs');

(async () => {
  console.time("writeMany");
  fs.open("test.txt", "w", (err, fd) => {
    if (err) throw err;
    for (let i = 0; i < 1000000; i++) {
      // Encode the string as UTF-8 bytes explicitly before writing
      const buff = Buffer.from(` ${i} `, "utf-8");
      fs.writeSync(fd, buff);
    }
    fs.closeSync(fd);
    console.timeEnd("writeMany");
  });
})();

Thus far, we have tried several ways to make the code faster, bringing the execution time down to about 2 seconds. Is it possible to make it even faster?

- Yes, we can make it faster with the help of streams. Let's look at an example first; we will discuss what a stream actually is later in this article.

const fs = require('node:fs/promises');

(async () => {
  console.time("writeMany");
  const fileHandle = await fs.open("test.txt", "w");
  const stream = fileHandle.createWriteStream();
  for (let i = 0; i < 1000000; i++) {
    const buff = Buffer.from(` ${i} `, "utf-8");
    // The return value of write() is ignored here, which causes the
    // memory problem discussed below: unflushed data piles up in memory
    stream.write(buff);
  }
  console.timeEnd("writeMany");
})();

- In the above code, we have created a stream using the createWriteStream() method.

- stream.write() accepts either a Buffer or a string containing the data we want to write to the file.

- The execution time for the above code is now about 270 ms. Still, it is not good practice: it uses about 200 MB of memory, which is much more than the earlier approaches, so don't use this method in production.

Streams:

- A stream is an abstract interface for working with streaming data in Node.js.

- Example: FFmpeg, a video-processing tool with which we can cut clips or add text (typical video-editing operations), processes media data as streams.

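What happens when we write 1 million numbers to the file?

- The data is collected in the stream's internal buffer and written to the file in larger pieces, instead of issuing one small write per number. The default size limit of this internal buffer (writableHighWaterMark) was 16384 bytes at the time of writing, as we will see below.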

Types of streams

- writable

- readable

- duplex

- transform

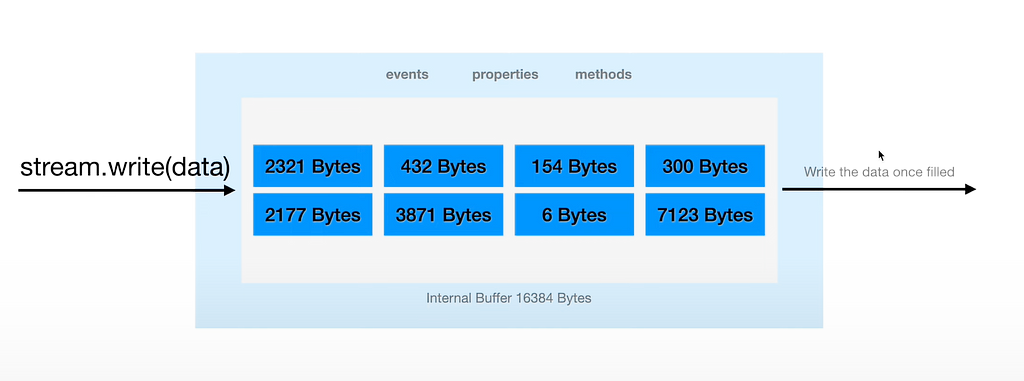
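The four types above correspond to the Readable, Writable, Duplex, and Transform classes exported by the node:stream module. The sketch below creates a minimal instance of each; the do-nothing implementations are illustrative placeholders, not code from the article. It also checks how the classes relate: a Transform is a kind of Duplex, and a Duplex is also a Readable.

```javascript
const { Readable, Writable, Duplex, Transform } = require("node:stream");

// Readable: a source of data (here built from an in-memory array)
const readable = Readable.from(["a", "b", "c"]);

// Writable: a destination that accepts chunks (this placeholder discards them)
const writable = new Writable({
  write(chunk, encoding, callback) {
    callback(); // signal that the chunk has been handled
  },
});

// Duplex: independent readable and writable sides in one object
const duplex = new Duplex({
  read() {}, // nothing to produce in this placeholder
  write(chunk, encoding, callback) {
    callback();
  },
});

// Transform: a duplex whose output is computed from its input
const transform = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk); // pass data through unchanged
  },
});

console.log(transform instanceof Duplex); // true
console.log(duplex instanceof Readable); // true
```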

Let’s discuss what happens inside a writable stream.

- Suppose we have a writable stream object created using fs.createWriteStream(). Inside this object, there is an internal buffer whose default size limit is 16384 bytes. In addition, each writable object has events, properties, and methods.

- The stream.write() method pushes data into the internal buffer, and the stream takes care of writing the buffered data to the file. For example, if we push 300 bytes and keep adding more, the data waits in the buffer until the stream gets to write it out.

- A buffer is a location in memory that holds a specific amount of data. If we keep pushing data without waiting, we can run into memory problems: write() does not reject data once the 16384-byte limit is reached. For example, if we write 800 MB of data, everything that has not yet been written to the file is kept in memory by Node.js, so our memory usage can jump to around 800 MB, which is far too high.

- So, we need to wait for the internal buffer to become empty before pushing more data into it.
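To see that the buffer limit is a signal rather than a hard cap, here is a small sketch using a custom Writable from node:stream with a deliberately tiny highWaterMark of 16 bytes (an arbitrary value chosen for illustration). Its write() implementation never completes, so nothing ever leaves the buffer: write() keeps accepting data but starts returning false, and writableLength grows past the limit.

```javascript
const { Writable } = require("node:stream");

// A writable whose underlying write never finishes, so every chunk stays
// queued in memory. highWaterMark: 16 is an illustrative value.
const stuck = new Writable({
  highWaterMark: 16,
  write(chunk, encoding, callback) {
    // Intentionally never call callback(): simulates a very slow destination
  },
});

const results = [];
for (let n = 0; n < 3; n++) {
  // Each call adds 10 bytes; write() returns false once the total
  // reaches the 16-byte highWaterMark, but the data is still accepted
  results.push(stuck.write(Buffer.alloc(10)));
}

console.log(results); // [ true, false, false ]
console.log(stuck.writableLength, stuck.writableHighWaterMark); // 30 16
```

The same thing happens, at a much larger scale, when the return value of write() is ignored in a loop over a million numbers.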

Let's now take a look at the readable stream object and see how it works.

- Just like a writable stream, a readable stream object has an internal buffer that stores data. By default, its size limit is 16384 bytes for a generic readable stream (streams created with fs.createReadStream() default to 64 KiB). It also has events, methods, and properties, just like a writable stream.

- Data is added to the internal buffer with stream.push(data). Consumers receive the buffered data chunk by chunk by listening for the 'data' event: stream.on('data', (chunk) => {…})

- We can use a readable stream to split a huge amount of data into smaller chunks.

By using a readable stream as the source, we can write 800 MB of data into a file while keeping memory usage low: the readable stream hands us the data in small chunks through the 'data' event, and we write each chunk to the file.
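As a rough sketch of the push()/'data' flow described above, the hypothetical createNumberStream() helper below builds a Readable from node:stream that pushes one number each time the stream asks for more data, and ends the stream by pushing null. A consumer then receives the data chunk by chunk through the 'data' event.

```javascript
const { Readable } = require("node:stream");

// Hypothetical helper: produces the numbers 0..count-1 on demand.
// read() is called by the stream whenever its internal buffer has room.
function createNumberStream(count) {
  let i = 0;
  return new Readable({
    read() {
      if (i < count) {
        this.push(` ${i} `); // add one chunk to the internal buffer
        i++;
      } else {
        this.push(null); // no more data: the stream will emit "end"
      }
    },
  });
}

// Usage: consume the data chunk by chunk
const numbers = createNumberStream(5);
numbers.on("data", (chunk) => {
  process.stdout.write(chunk); // chunk is a Buffer
});
numbers.on("end", () => {
  console.log("\ndone");
});
```

Because the numbers are generated inside read(), only a few chunks exist in memory at any moment, no matter how large count is.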

Now, let's fix our solution that uses 200 MB of memory.

- We can create a writable stream object with code such as const stream = fs.createWriteStream("test.txt"), or with fileHandle.createWriteStream() as before.

- This stream object has a property, writableHighWaterMark, which shows the size limit of the internal buffer.

- To check how much of our buffer has been filled, we can use the writableLength property.

- stream.write(buff) returns true if, after adding buff, writableLength is still less than writableHighWaterMark. This indicates that more data can be added to the internal buffer. If it returns false, writableLength has reached (or exceeded) writableHighWaterMark and the internal buffer is full. In this case, we should stop writing and wait for the stream to empty the buffer before writing again.

- So how do we know when the stream has emptied its buffer? We listen for the drain event. When stream.write() returns false, it takes some time for the stream to flush the internal buffer; once the buffer has been completely emptied, the stream object emits the drain event, and it is safe to write again.

const fs = require("node:fs");

const stream = fs.createWriteStream("test.txt");

// Prints 16384 in the Node.js version used for this article
console.log(stream.writableHighWaterMark);

// Create a buffer that is one byte smaller than writableHighWaterMark
const buff = Buffer.alloc(stream.writableHighWaterMark - 1, "A");

// Since the buffer size is less than the writableHighWaterMark
// value of the stream object, the method returns true
console.log(stream.write(buff));

// The internal buffer now holds writableHighWaterMark - 1 bytes, so adding
// one more byte makes writableLength equal to writableHighWaterMark.
// Therefore, the method returns false
console.log(stream.write(Buffer.alloc(1, "A")));

// Once the internal buffer has been completely emptied,
// the stream object will emit the drain event
stream.on("drain", () => {
  console.log("It is now safe to write again");
  stream.end();
});
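Waiting for the drain event can also be written with async/await. The sketch below is an alternative structure, not the author's code: the hypothetical writeNumbers() function uses once() from node:events to pause the loop until "drain" whenever write() returns false, and then waits for "finish" after calling end(). If the stream emits "error" while either wait is pending, the returned promise rejects.

```javascript
const fs = require("node:fs");
const { once } = require("node:events");

// Hypothetical helper: writes the numbers 0..count-1 to filePath,
// pausing whenever the stream's internal buffer is full.
async function writeNumbers(filePath, count) {
  const stream = fs.createWriteStream(filePath);
  for (let i = 0; i < count; i++) {
    // write() returns false once writableLength reaches writableHighWaterMark
    if (!stream.write(` ${i} `)) {
      await once(stream, "drain"); // wait until the internal buffer is empty
    }
  }
  stream.end(); // no more data will be written
  await once(stream, "finish"); // all data has been flushed to the file
}
```

For example, writeNumbers("test.txt", 1000000) writes the same file as the examples in this article while keeping at most about one buffer's worth of pending data in memory.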

Updated code with lower memory usage:

const fs = require("node:fs/promises");

(async () => {
  console.time("writeMany");
  const fileHandle = await fs.open("test.txt", "w");
  const stream = fileHandle.createWriteStream();
  let i = 0;

  // Writes numbers until the internal buffer is full, then returns and
  // waits for the drain event to call it again
  const solve = () => {
    while (i < 1000000) {
      const buff = Buffer.from(` ${i} `, "utf-8");

      // Calling the end() method signals that no more data
      // will be written to the Writable
      if (i === 999999) {
        return stream.end(buff);
      }

      const canContinue = stream.write(buff);
      // Advance i before a possible break, so the same number is not
      // written twice when solve() resumes after drain
      i++;
      if (!canContinue) break;
    }
  };

  solve();

  stream.on("drain", () => {
    solve();
  });

  stream.on("finish", () => {
    console.timeEnd("writeMany");
    fileHandle.close();
  });
})();

What is a duplex stream?

- A duplex stream allows for both writing and reading operations.

- Writable and Readable streams have only one internal buffer, whereas Duplex and Transform streams have two internal buffers: one for reading and one for writing.

What is a transform stream?

- Transform streams are a type of duplex stream whose output is computed from their input: they first receive data, then modify it, and finally output the modified data in the desired format.
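A small sketch of the two separate buffers, using a Duplex from node:stream whose write side never completes and whose read side is fed manually with push() (both choices are only for illustration). The writable and readable sides track their buffered bytes independently.

```javascript
const { Duplex } = require("node:stream");

const duplex = new Duplex({
  // Readable side: data is pushed manually below, so read() does nothing
  read() {},
  // Writable side: never call callback(), so written data stays buffered
  write(chunk, encoding, callback) {},
});

duplex.write(Buffer.alloc(5)); // goes into the writable-side buffer
duplex.push(Buffer.alloc(3)); // goes into the readable-side buffer

console.log(duplex.writableLength); // 5
console.log(duplex.readableLength); // 3
```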
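As an illustrative example not taken from the article, the sketch below defines a hypothetical upper-casing Transform from node:stream and connects it between a Readable and a Writable with pipeline() from node:stream/promises, which also handles backpressure between the stages.

```javascript
const { Readable, Transform, Writable } = require("node:stream");
const { pipeline } = require("node:stream/promises");

// Hypothetical transform: receives chunks, upper-cases them, and passes them on.
// chunk.toString() is fine for ASCII input; multi-byte characters split across
// chunks would need a StringDecoder.
function createUpperCaseTransform() {
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

// Runs source -> transform -> sink and resolves with everything the sink received
async function upperCaseAll(parts) {
  const received = [];
  await pipeline(
    Readable.from(parts),
    createUpperCaseTransform(),
    new Writable({
      write(chunk, encoding, callback) {
        received.push(chunk.toString());
        callback();
      },
    }),
  );
  return received.join("");
}
```

For example, upperCaseAll(["hello ", "streams"]) resolves to "HELLO STREAMS".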

In conclusion, we have explored the basics of Node.js streams and how they can be used to handle large datasets efficiently. But this is just the beginning. In our next post, we will dive deeper into advanced stream operations and explore more practical use cases. Stay tuned for more exciting content.

LinkedIn:

https://in.linkedin.com/in/vaibhav-moradiya-177734169


What Are Streams in Node.js? was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.


Vaibhav Moradiya | Sciencx (2023-03-06T06:24:33+00:00) What Are Streams in Node.js?. Retrieved from https://www.scien.cx/2023/03/06/what-are-streams-in-node-js/
