This content originally appeared on Bits and Pieces - Medium and was authored by Fernando Doglio

It might sound blunt, but it’s a very effective technique!

The name of the technique sounds incredibly cool, as if all the architects of the company were to meet together inside a situation room and plan the strategy to conquer the enemy (the monolith).

Truth be told, this is a pretty interesting and practical way of transitioning your monolithic solution into a microservices-based one.

The aim, hopefully, is greater scalability and better overall code quality and maintainability for the platform.

So let’s talk about it.

What problem are we trying to solve?

Let’s first settle on the type of problem we’re trying to solve, because Tactical Forking is not a technique that can (or should) be used when you’re starting from scratch.

This is only useful if you’re dealing with a legacy system that has been working as a monolith for some time now, and while your team sees the need to move into a microservices-based architecture, the effort and time required to re-write the entire thing makes it impractical.

So what can you do? Especially if keeping the system as a monolith is not a valid option?

Well, tactical forking can answer that question.

What is tactical forking?

As I already mentioned, tactical forking is a very practical way of migrating a monolith into a set of microservices. It does so in multiple stages and over time while letting you continue operations in the meantime.

That last part is crucial, because even at an early stage, your platform is already able to continue working even if the migration isn’t over. This is a huge advantage over other techniques, like a complete rewrite.

The process isn’t magical, though. Here is how it works:

- Identify the set of services you’ll want to create. You’ll do this however you see fit. Essentially, you’ll have to identify which sections of your business logic are less coupled and can live independently. You can also split them up by responsibilities (like having user management on one side, stock management on the other, and so on).

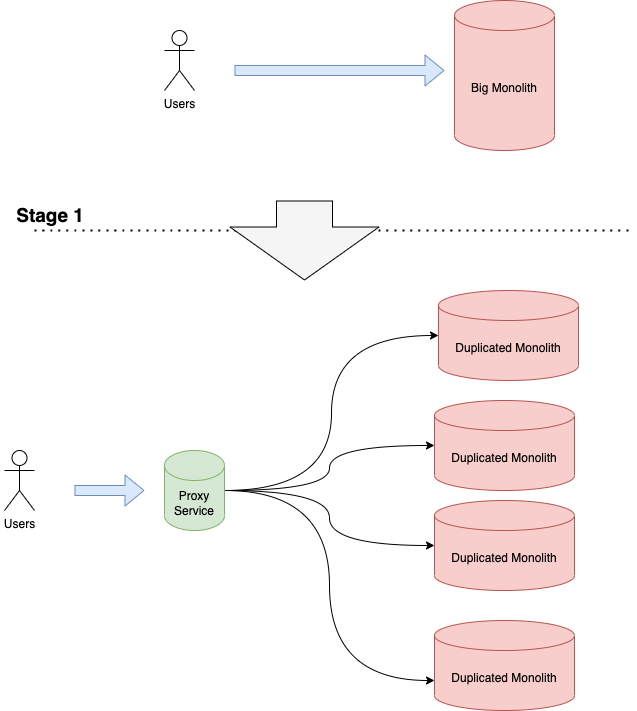

- Once the services are identified, you’ll duplicate the application, once per service. Yes, I said “duplicate”, bear with me for a second.

- You’ll create a proxy service that will act as the new interface between your front-end and the now-duplicated back-end. This proxy service will have the same public interface that the old monolith had, but in the background, it’ll map each request to a different service.

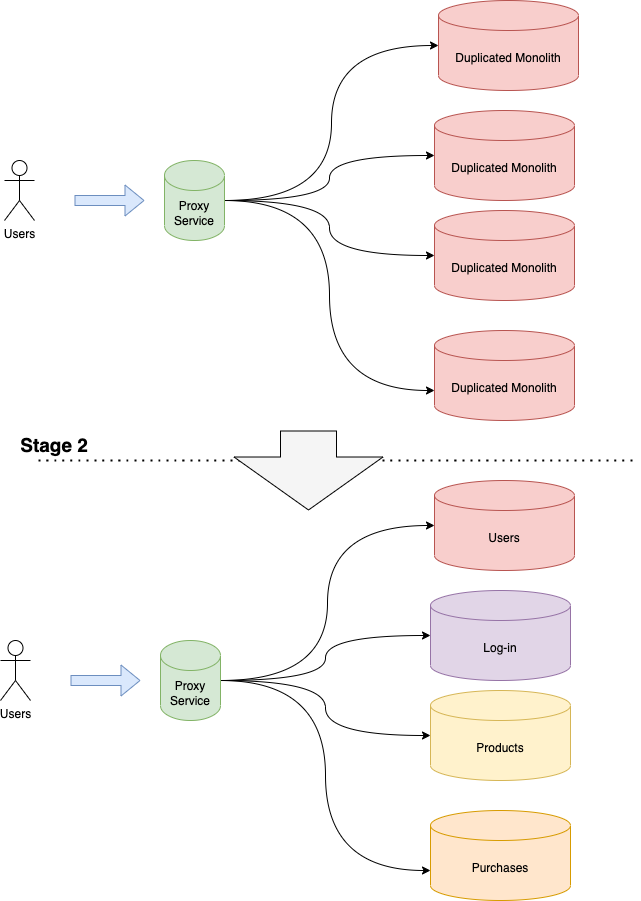

- Now that you have multiple services running in parallel, you’ll create separate teams and each one will take care of one service. Each team will slowly strip away unneeded code and features, leaving only the parts its service needs.
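To make the proxy step a bit more concrete, here is a minimal TypeScript sketch of the mapping it performs. The route prefixes, the internal host names and the `resolveUpstream` function are all assumptions made up for illustration. Your monolith’s public interface will dictate the real table.

```typescript
// Hypothetical route table: the prefixes and fork URLs are invented for this sketch.
interface Route {
  prefix: string; // public path prefix the old monolith exposed
  target: string; // base URL of the fork that now owns that responsibility
}

const routes: Route[] = [
  { prefix: "/users", target: "http://users-fork.internal" },
  { prefix: "/stock", target: "http://stock-fork.internal" },
  { prefix: "/purchases", target: "http://purchases-fork.internal" },
];

// Returns the upstream URL for a request path (without query string),
// or undefined when no fork owns that path.
function resolveUpstream(path: string, table: Route[] = routes): string | undefined {
  // Longest prefix wins, so "/users/admin" could later be split out of "/users".
  const match = table
    .filter((r) => path === r.prefix || path.startsWith(r.prefix + "/"))
    .sort((a, b) => b.prefix.length - a.prefix.length)[0];
  return match ? match.target + path : undefined;
}
```

A real proxy would then forward the request to the resolved URL. The point here is only that the public paths stay the same while the destination changes.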

To make things simple, here is the process. First, you duplicate:

Now that everything’s been duplicated, the proxy service will take care of calling the right service. Each of the duplicated monoliths will take care of one responsibility. That’s the idea: they’ll act as microservices, even though they aren’t microservices yet.

Next, you’ll assign your individual teams and you’ll start the individual migrations, to eventually reach a point that looks like this:

This doesn’t happen overnight, and it’s a process that will surface a lot of issues related to dependencies, duplication of data and even coupled functionalities and code that need to be resolved.

This is why, while it’s important to have different teams handling each migration, it’s also important to have an overseeing team that can guide those migrations from afar. Ideally, this common team should have members of the original team that created or maintained the monolith. That way they’ll have first-hand knowledge about the internal structure of the project and its limitations.

Tactical forking and Bit go hand in hand for breaking down a monolithic app into microservices. With Bit, you can isolate components within your codebase and define them as independent entities that can be developed and tested separately, then versioned, documented, and shared via Bit’s central registry.

Learn more here:

Component-Driven Microservices with NodeJS and Bit

Now, let’s take a look at what it takes to adopt this approach.

Adopting tactical forking in your project

While this approach might sound like a blunt one, it gets the job done.

The thing is, like everything in life, there is a cost to it. Actually, there are many costs to this approach and you have to be willing to pay them to get the most out of it.

The cost of resources

For this approach to work, you have to clone your app multiple times, but each one will have to have an independent development workflow. This means you’ll also have to clone:

- The code repositories

- The deployment servers

- The testing environments

- The deployment pipelines

And on top of that, you’ll also have to add more developers. After all, the aim is for you to have multiple independent teams working in parallel. That is the optimal setup, so you don’t have delays in the migration.

So yes, you’ll have to multiply the number of “human resources” (I hate that term) several times to make sure each team can work on its own.

The cost of knowledge transfer

The new teams you’re creating are not working on a brand new project. In fact, they’re going to have to get deep into the legacy code that is your monolith to refactor the heck out of it.

That means there is a considerable amount of time you’ll have to spend teaching them (i.e., doing knowledge transfer) about the internal architecture of your monolith, why things work the way they do and all the little details and patches you’ve added over the years.

This is not a job that can be done in a single sitting. Depending on the size of the system, it will most likely take anywhere from several days to a couple of weeks.

I call it a “cost”, because it’s time that most of the teams will spend “not working”, but rather simply prepping for what’s to come.

The cost of keeping everything organized

This one is subjective and you might not see it as a cost, but chances are this is something extra that you didn’t have before: an overseeing team.

Put another way, instead of having a single team that can be managed by a single team lead, or a project manager, you now have multiple teams working in parallel trying to refactor their version of the monolith on their own, but all working towards having a platform that can integrate and work together.

You have several options here:

- You can go all the way and create an overseeing team composed of some managers and architects that will help guide the overall view and direction of the new platform. These people won’t be directly involved in any of the projects but they’ll know about them enough to make high-level decisions.

- You can instead have one person from each team come together into a type of “council” that will try to help organize the overall direction and guide the development without showing bias towards any of the projects.

The first approach will probably yield the most impartial results, with little to no danger of someone being biased towards their own bit of code. However, it also adds extra costs, yet again, because of the extra set of people you have to maintain to form this committee.

On the other hand, the second approach would be a smart way to cut costs by re-utilizing some of the most senior members of each team as part of the overseeing committee.

This would solve the extra costs issue but it could introduce further conflicts within the project if the members of said group can’t decide on how to solve conflicting priorities between projects. By not having an impartial set of members, there is always a small chance that each one will try to benefit their own team.

Which approach works better will depend on your context and the type of developers you have working with you.


Tactical Forking might mess with your data strategy

Other than the associated costs and the potential internal power struggles you might run into, there are some technical difficulties associated with duplicating a monolithic application and running it as if it was a distributed one.

The most relevant is probably the one related to data and data storage solutions. After all, if you’re going to be duplicating your app, your database will also have to be duplicated. Every service will eventually need its own individual database to store its own information. That’s one way to help each of them scale individually.

Of course, that’s the “ideal” goal. You’ll have to see how close your context allows you to get.

That said, a good first step is to duplicate the databases and let each copy work with its own version.

What’s the problem with that?

Simple: if so far you’ve developed a system that was considered a monolith, chances are you designed your data model the same way.

This means you most likely have coupled data: tables holding information that belongs to multiple different services.

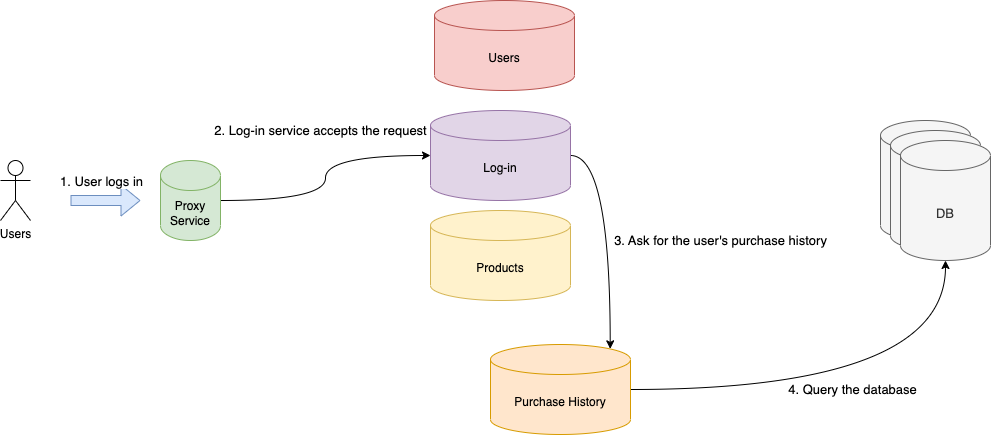

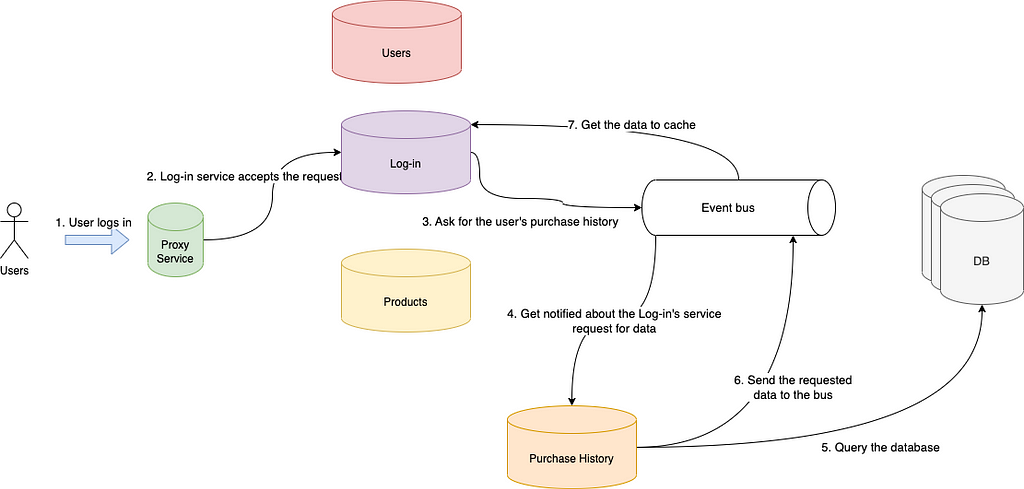

Or perhaps your code directly queries data that is not strictly related to that bit of logic, simply because doing it like that was faster (I’m talking about querying the address of a user and their last purchase right when they log in because you want to cache that info for later, and things like that).

Part of your tactical forking journey involves you and all your teams deciding which services own which part of the data, and then figuring out a way to share data when it’s needed. After all, your services will need other services’ data at some point. The key is figuring out a clean way to access it.
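One way to keep that ownership discussion honest is to write it down as data. The sketch below assumes a hypothetical set of services and column names (none of them come from a real schema) and reports which fields a service reads without owning. Those are exactly the ones it’ll have to get from someone else.

```typescript
// Hypothetical services; replace with the ones you identified for your platform.
type ServiceName = "login" | "purchase-history" | "user-profile";

// Hypothetical ownership map: every column of the old shared schema gets exactly one owner.
const ownership: Record<string, ServiceName> = {
  "users.email": "login",
  "users.password_hash": "login",
  "users.address": "user-profile",
  "orders.last_purchase": "purchase-history",
};

// Lists the fields a service reads but does not own, grouped by the owning service.
function foreignReads(
  service: ServiceName,
  fieldsRead: string[],
): Partial<Record<ServiceName, string[]>> {
  const result: Partial<Record<ServiceName, string[]>> = {};
  for (const field of fieldsRead) {
    const owner = ownership[field];
    // A field nobody owns is a decision the teams still have to make.
    if (owner === undefined) throw new Error(`No owner assigned for ${field}`);
    if (owner === service) continue;
    result[owner] = [...(result[owner] ?? []), field];
  }
  return result;
}
```

For the login example above, `foreignReads("login", ["users.email", "users.address", "orders.last_purchase"])` groups the address under `user-profile` and the last purchase under `purchase-history`, which tells the “Log-in” team which two services it depends on.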

Going the API route

The most obvious way to solve this problem is to have services share data internally through their public APIs. In other words, if the “Log-in” service needs a piece of data that is owned by the “Purchase History” service, then have it make a request to that service and get the data.

It’s that simple, and clean.

It also adds one or potentially several more HTTP requests into the mix, which could significantly slow down each response and hurt the user experience.
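Here is roughly what that looks like from the “Log-in” service’s side. This is only a sketch: the base URL, the endpoint path and the `LastPurchase` shape are assumptions, and the HTTP transport is passed in as a function so the example doesn’t depend on any particular library.

```typescript
// Hypothetical shape of what the "Purchase History" service returns.
interface LastPurchase {
  orderId: string;
  total: number;
}

// The transport is injected; any function that GETs a URL and parses JSON will do.
type GetJson = (url: string) => Promise<unknown>;

// Assumed internal address of the owning service (not taken from the article).
const PURCHASE_HISTORY_URL = "http://purchase-history.internal";

async function fetchLastPurchase(userId: string, getJson: GetJson): Promise<LastPurchase | null> {
  const url = `${PURCHASE_HISTORY_URL}/users/${encodeURIComponent(userId)}/last-purchase`;
  const body = await getJson(url);
  // Validate the response instead of trusting another team's payload blindly.
  if (typeof body !== "object" || body === null) return null;
  const { orderId, total } = body as Record<string, unknown>;
  return typeof orderId === "string" && typeof total === "number" ? { orderId, total } : null;
}

// The "Log-in" service has to wait for the extra round trip before it can answer.
async function buildLoginPayload(userId: string, getJson: GetJson) {
  const lastPurchase = await fetchLastPurchase(userId, getJson);
  return { userId, lastPurchase };
}
```

On Node 18 or later, the built-in `fetch` can serve as the transport, for example `(url) => fetch(url).then((r) => r.json())`. Notice the `await` inside `buildLoginPayload`: that wait is the extra latency mentioned above.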

The point of moving away from a monolith was to help the platform grow and run faster (at least one of the points). We could be going the other way with this solution.

What else can we do?

We can take advantage of the fact that we’re dealing with microservices now, and there are plenty of ways for them to communicate with each other.


Going the event-bus route

As I said, there are many solutions and you should pick the one that fits your needs the best.

That said, I’m a fan of event buses so here is my recommendation.

This process looks more elaborate than the previous one, but that’s because now I’m also adding the steps for the data to be returned. With the API approach, the communication was synchronous (that’s how HTTP works after all), so you know that for every request there will be a response.

However, event buses work differently. They give you a chance to build an asynchronous communication scheme, which means your workflow now has the potential to be faster and you can provide a better user experience.

Now you’ll need an event bus. Something like Kafka or Redis (through its Pub/Sub or Streams features) will do just fine. Your services will connect to it and subscribe to the type of messages (events) they care about.

And then other services will post those events into the bus. That’s it, there is no more service-to-service direct communication. This means that now the moment a piece of data requested by a service is ready, that microservice will be notified and it’ll receive the data. There is no more need to keep an active connection waiting.
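The publish/subscribe flow described above can be sketched with a tiny in-memory bus. To be clear, `InMemoryEventBus`, the topic names and the payload shapes are all invented for this example. It is a stand-in for a real broker such as Kafka or Redis, and it delivers events synchronously, whereas a real broker would deliver them asynchronously over the network.

```typescript
type Handler<T> = (payload: T) => void;

// In-memory stand-in for a real broker; for illustration only.
class InMemoryEventBus {
  private handlers = new Map<string, Handler<unknown>[]>();

  // Registers a handler for every event published on the given topic.
  subscribe<T>(topic: string, handler: Handler<T>): void {
    const list = this.handlers.get(topic) ?? [];
    list.push(handler as Handler<unknown>);
    this.handlers.set(topic, list);
  }

  // Delivers the payload to every handler subscribed to the topic.
  publish<T>(topic: string, payload: T): void {
    for (const handler of this.handlers.get(topic) ?? []) handler(payload);
  }
}

const bus = new InMemoryEventBus();
const loginCache: Record<string, string> = {};

// "Purchase History" reacts to logins and publishes the data it owns.
bus.subscribe<{ userId: string }>("user.logged-in", ({ userId }) => {
  bus.publish("purchase.last-known", { userId, orderId: `order-for-${userId}` });
});

// "Log-in" caches the data whenever it arrives; it never calls the other service directly.
bus.subscribe<{ userId: string; orderId: string }>("purchase.last-known", ({ userId, orderId }) => {
  loginCache[userId] = orderId;
});

// Logging in only announces that it happened; nobody waits on a response.
bus.publish("user.logged-in", { userId: "u1" });
```

Neither service knows the other one’s address. They only agree on topic names and payload shapes, which is what makes it possible to scale or replace either one independently.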

Of course, architecturally speaking, this approach is a bit more complex than the previous one, but the benefits far outweigh the cons, trust me. This approach will also give you a lot more freedom to scale each service individually, which means it’s great for our initial goal!

Tactical forking might feel like you’re trying to cut a piece of paper with a sword, but once you get past the initial “this approach is crazy” feeling, you’ll notice that it offers a great plan for a gradual migration from a monolithic setup into a scalable and more resilient microservice-based architecture.

And it does so while giving you a chance to remain functional, which is always something your managers and product owners will want to hear when you come to them with the proposal for migrating their platform.

Have you tried Tactical Forking before? What did you think about it?

Finally, if you liked the topic, you might want to consider checking out this short course on LinkedIn Learning about Tactical Forking. It has some more details I might’ve left out.

Doing tactical forking with Bit

While you can definitely do tactical forking manually, having a tool that gives you a head start and improves the development experience of your teams can be a great plus.

One of these tools is Bit. It allows you to abstract the entire development workflow and streamline it so that all your teams work the same way (even when in the background they actually use different tooling).

For instance, you can have a team using JavaScript with Jest, and another one using TypeScript with Mocha, and the development workflow (the building, testing and publishing of the components) would be identical.

On top of that:

- Bit provides version control for your forked components, which means that you can easily track changes to your forked components over time. This makes it easy to roll back to previous versions if necessary.

- Bit enables collaboration between developers on shared components. By using Bit, you can share your forked components with others and allow them to contribute changes. Bit keeps track of all changes and ensures that everyone is working with the same version of the component. This matters a lot when multiple independent teams depend on the same shared component.

- Bit integrates with other tools such as GitHub, GitLab, and Bitbucket. This means that you can easily import and export components between Bit and other tools. While this would be a strange scenario, you could potentially have different teams using completely different repositories (like one using GitHub and the other using GitLab), and Bit would abstract that from the workflow, making it invisible to everyone.

- Bit supports semantic versioning, which is a widely used versioning scheme in the software development industry. Semantic versioning allows you to communicate the scope and nature of changes to your components through version numbers. This makes it easier for users of your components to understand the impact of changes and decide whether to upgrade to a new version.
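As a quick illustration of how a version number communicates impact, here is a small sketch that classifies the change between two versions. In semantic versioning, a major bump signals incompatible changes, a minor bump signals backward-compatible additions and a patch bump signals backward-compatible fixes. The `parseVersion` and `classifyBump` helpers are hypothetical and are not part of Bit. They only handle plain `MAJOR.MINOR.PATCH` strings.

```typescript
type Bump = "major" | "minor" | "patch" | "none";

// Parses "MAJOR.MINOR.PATCH"; pre-release and build metadata are out of scope here.
function parseVersion(version: string): [number, number, number] {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version);
  if (!match) throw new Error(`Not a plain semantic version: ${version}`);
  return [Number(match[1]), Number(match[2]), Number(match[3])];
}

// Reports the most significant part that differs between two versions.
// It does not distinguish upgrades from downgrades.
function classifyBump(from: string, to: string): Bump {
  const [aMajor, aMinor, aPatch] = parseVersion(from);
  const [bMajor, bMinor, bPatch] = parseVersion(to);
  if (bMajor !== aMajor) return "major";
  if (bMinor !== aMinor) return "minor";
  if (bPatch !== aPatch) return "patch";
  return "none";
}
```

A team consuming a shared component could use a check like this to decide whether a new version can be picked up automatically (patch or minor) or needs a closer look first (major).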

You can read this article from Bit’s blog to understand how to create your own components from existing code.

How would it work?

At a high level, the process would work like this:

- After duplicating the monolith as many times as you need, you’ll start identifying parts of your code that can be turned into reusable components.

- Through inter-team coordination, one team will publish the actual component and the others will import it into their own projects.

- After that, collaborating on the development of these components is straightforward through Bit, given that it has collaborative support out of the box. Teams will be able to add and change code in these reusable components while using them, and publish the new versions for others to use.

Overall, Bit is a great tool to perform tactical forking because it provides lightweight forking, version control, collaboration, integration with other tools, and support for semantic versioning.


From Monolith to Microservices Using Tactical Forking was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.


Fernando Doglio | Sciencx (2023-03-10T07:02:02+00:00) From Monolith to Microservices Using Tactical Forking. Retrieved from https://www.scien.cx/2023/03/10/from-monolith-to-microservices-using-tactical-forking/
