This content originally appeared on HackerNoon and was authored by Suleiman Dibirov

As a developer with Docker experience, you already understand the basics of containerization: packaging applications with their dependencies into a standardized unit.

Now, it’s time to take the next step: Kubernetes.

Kubernetes, or K8s, is a powerful container orchestration system that can manage your containerized applications at scale.

This guide will help you move from Docker to Kubernetes. It will show you how to use Kubernetes to improve your development and deployment work.

Docker vs. Kubernetes

Docker

- Containers: Self-contained, lightweight units that bundle an application with all its dependencies, ensuring it runs consistently across environments.

- Docker Compose: A tool for defining and managing multi-container applications, allowing you to easily start, stop, and configure services in a Docker environment.

- Docker Swarm: Docker's built-in tool for clustering and orchestrating containers, enabling you to deploy and manage containerized applications across a cluster of Docker nodes.

Kubernetes

- Pods: The fundamental deployment unit in Kubernetes, consisting of one or more tightly coupled containers that share resources and are managed as a single entity.

- Services: Abstract ways to expose and route traffic to pods, ensuring reliable access and load balancing for your applications.

- Deployments: Controllers that handle the creation, scaling, and updating of pods, ensuring that the desired state of your application is maintained.

- Namespaces: Logical partitions within a Kubernetes cluster that allow you to organize and isolate resources, enabling multiple users or teams to work within the same cluster without interference.
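For orientation, many everyday Docker commands have a close kubectl counterpart. The helper below is only a lookup sketch: the function name kubectl_for is made up for this guide, the mappings are approximate (kubectl works on pods rather than on individual containers), and nothing here talks to a cluster.

```shell
# Hypothetical lookup helper: print the rough kubectl counterpart
# of a Docker subcommand. It only echoes text; it runs no kubectl command.
kubectl_for() {
  case "$1" in
    run)     echo "kubectl run <pod-name> --image=<image>" ;;
    ps)      echo "kubectl get pods" ;;
    logs)    echo "kubectl logs <pod-name>" ;;
    exec)    echo "kubectl exec -it <pod-name> -- <command>" ;;
    inspect) echo "kubectl describe pod <pod-name>" ;;
    rm)      echo "kubectl delete pod <pod-name>" ;;
    *)       echo "no direct mapping for: $1" >&2; return 1 ;;
  esac
}

kubectl_for ps     # prints: kubectl get pods
kubectl_for logs   # prints: kubectl logs <pod-name>
```

The steps below use most of these kubectl commands against a real cluster.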

Practical Guide to Kubernetes for Docker Users

Step 1: Install Kubernetes

To get started with Kubernetes, you need to install it. The easiest way to do this locally is using Minikube, which sets up a local Kubernetes cluster. Below are the instructions for Windows, Linux, and macOS.

For Windows:

# Download the Minikube installer from the official Minikube releases page

# https://github.com/kubernetes/minikube/releases

# Run the installer and follow the instructions.

# Start Minikube

minikube start

For Linux:

# First, install the required dependencies (these commands assume a Debian- or Ubuntu-based distribution)

sudo apt-get update -y

sudo apt-get install -y curl apt-transport-https

# Download the Minikube binary

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

# Install Minikube

sudo install minikube-linux-amd64 /usr/local/bin/minikube

# Start Minikube

minikube start

For macOS:

# Install Minikube

brew install minikube

# Start Minikube

minikube start

Alternatives to Minikube

While Minikube is a popular choice for running Kubernetes locally, there are other alternatives you might consider:

- Kind (Kubernetes IN Docker):

  - Kind runs Kubernetes clusters in Docker containers, which makes it very lightweight and easy to set up. It’s particularly useful for testing Kubernetes itself.

  - Kind Installation Guide

- k3s:

  - k3s is a lightweight Kubernetes distribution by Rancher. It’s designed to be easy to install and use, especially for IoT and edge computing.

  - k3s Installation Guide

- Docker Desktop (with Kubernetes support):

  - Docker Desktop includes a built-in Kubernetes cluster that you can enable in the settings. This is a convenient option if you’re already using Docker Desktop.

  - Docker Desktop Installation Guide

Following these instructions, you can have a local Kubernetes cluster up and running on your preferred operating system. Additionally, you can explore the alternatives if Minikube does not meet your specific needs.
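Before moving on, it can help to confirm that the tools are installed and the cluster responds. The check below is a minimal sketch that assumes a default Minikube setup. Note that the installation steps above install Minikube itself; if kubectl turns out to be missing, minikube kubectl -- get nodes runs the copy that Minikube downloads for you.

```shell
# Check that the CLI tools used in this guide are on the PATH.
missing=0
for tool in minikube kubectl; do
  if command -v "$tool" >/dev/null 2>&1; then
    echo "found: $tool"
  else
    echo "missing: $tool"
    missing=1
  fi
done

# Only query the cluster when both tools are available.
if [ "$missing" -eq 0 ]; then
  if minikube status; then
    kubectl cluster-info   # prints the address of the control plane
    kubectl get nodes      # lists the nodes of the local cluster
  else
    echo "cluster is not running; try: minikube start"
  fi
fi
```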

Step 2: Understanding Pods

In Docker, you typically run a single container using the docker run command. In Kubernetes, the equivalent is running a pod. A pod is the smallest deployable unit in Kubernetes and can contain one or more containers.

Sample Pod Configuration:

    # pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: my-container
          image: nginx

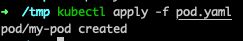

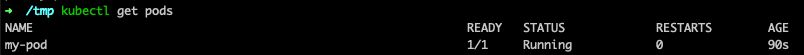

To create this pod, use the kubectl command:

kubectl apply -f pod.yaml

You can check the status of the pod with:

kubectl get pods

NAME: The name of the pod (my-pod in this example).

READY: Indicates how many containers are ready in the pod. 1/1 means one container is ready out of one total container.

STATUS: The pod's current status (Running in this example).

RESTARTS: The number of times the containers in the pod have been restarted. 0 means no container has been restarted.

AGE: The time since the pod was created. 90s means the pod was created 90 seconds ago.

To get detailed information about the pod, use:

kubectl describe pod my-pod

To view the logs for the pod, use:

kubectl logs my-pod

To run commands inside a running container of the pod, use:

kubectl exec -it my-pod -- /bin/bash
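This step mentioned that a pod can hold more than one container. As a rough illustration, the script below writes a manifest for a pod with two containers that share an emptyDir volume. The file name sidecar-pod.yaml, the object names, and the busybox loop are all assumptions made for this sketch, not part of the original guide.

```shell
# Write a two-container pod manifest (all names here are illustrative).
# The "content-writer" container updates a file that the nginx container serves.
cat > sidecar-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-pod
spec:
  volumes:
    - name: shared-html
      emptyDir: {}
  containers:
    - name: web
      image: nginx
      volumeMounts:
        - name: shared-html
          mountPath: /usr/share/nginx/html
    - name: content-writer
      image: busybox
      command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-html
          mountPath: /html
EOF

echo "wrote sidecar-pod.yaml"
```

After kubectl apply -f sidecar-pod.yaml, the READY column should count both containers once they have started. With several containers in a pod, kubectl logs and kubectl exec accept -c to pick one, for example kubectl logs sidecar-pod -c content-writer.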

Step 3: Deployments

Deployments in Kubernetes provide a higher-level abstraction for managing a group of pods, including features for scaling and updating your application.

Sample Deployment Configuration:

    # deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
            - name: my-container
              image: nginx

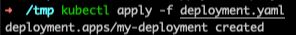

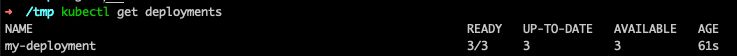

Create the deployment with:

kubectl apply -f deployment.yaml

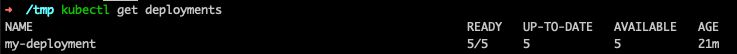

You can check the status of your deployment with:

kubectl get deployments

NAME: The name of the deployment (my-deployment in this example).

READY: Indicates the number of pods ready out of the desired number. 3/3 means all three pods are ready.

UP-TO-DATE: The number of pods that are updated to the desired state. Here, all three pods are up-to-date.

AVAILABLE: The number of pods that are available and ready to serve requests. All three pods are available.

AGE: The time since the deployment was created. 61s means the deployment was created 61 seconds ago.

To get detailed information about the deployment, use the following command:

kubectl describe deployment my-deployment
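One way to see the deployment maintain its desired state is to delete a pod and watch a replacement appear. The helper below is a sketch: the function name replace_one_pod is hypothetical, and it assumes kubectl points at the cluster where my-deployment is running.

```shell
# Hypothetical helper: delete one pod that matches a label selector,
# then list the pods that match it afterwards.
replace_one_pod() {
  selector=$1
  # "-o name" prints entries such as pod/my-deployment-..., one per line.
  victim=$(kubectl get pods -l "$selector" -o name | head -n 1)
  if [ -z "$victim" ]; then
    echo "no pods match $selector" >&2
    return 1
  fi
  kubectl delete "$victim"
  kubectl get pods -l "$selector"
}
```

Calling replace_one_pod app=my-app removes one of the three pods. The final listing should again show three pods, one of them with a much younger AGE than the others.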

Step 4: Services

Services in Kubernetes expose your pods to the network. There are different types of services:

ClusterIP

Description: The default type of service, which exposes the service on a cluster-internal IP. This means the service is only accessible within the Kubernetes cluster.

Use Case: Ideal for internal communication between services within the same cluster.


NodePort

Description: Exposes the service on each node’s IP at a static port (the NodePort). A ClusterIP service, to which the NodePort service routes, is automatically created.

Use Case: Useful for accessing the service from outside the cluster, primarily for development and testing purposes.


LoadBalancer

Description: Exposes the service externally using a cloud provider’s load balancer. It creates a NodePort and ClusterIP service and routes external traffic to the NodePort.

Use Case: Best for production environments where external traffic needs to be balanced across multiple nodes and pods.

Sample Service Configuration:

    # service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: my-app
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

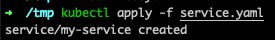

Apply the service configuration:

kubectl apply -f service.yaml

Check the service with:

kubectl get services


- NAME: The name of the service (my-service in this example).

- TYPE: The type of the service, which is ClusterIP in this case. This means the service is only accessible within the cluster.

- CLUSTER-IP: The internal IP address of the service (10.234.6.53 here), which is used for communication within the cluster.

- EXTERNAL-IP: The external IP address of the service, if any. `<none>` indicates that the service is not exposed externally.

- PORT(S): The port(s) that the service is exposing (80/TCP in this example), which maps to the target port on the pods.

- AGE: The time since the service was created. 82s means the service was created 82 seconds ago.

Now you can access the service. Forward a port from your local machine to the service port in the cluster:

kubectl port-forward service/my-service 8080:80

This command forwards the local port 8080 to port 80 of the service my-service.

Open your web browser and navigate to http://127.0.0.1:8080.

You should see the Nginx welcome page, confirming that the service is accessible.
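The sample service uses the default ClusterIP type. For comparison, the script below writes a NodePort variant of the same service. The file name, the service name my-service-nodeport, and the port 30080 are assumptions for this sketch; 30080 was picked from the default NodePort range of 30000-32767.

```shell
# Write a NodePort variant of the sample service (names and port are illustrative).
cat > service-nodeport.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-service-nodeport
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30080
EOF

echo "wrote service-nodeport.yaml"
```

After kubectl apply -f service-nodeport.yaml, running minikube service my-service-nodeport --url on a Minikube cluster should print a URL that reaches the service from your machine.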

Step 5: Scaling Applications

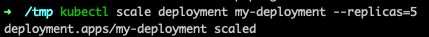

Scaling an application in Kubernetes is straightforward and can be done using the kubectl scale command. This allows you to easily increase or decrease the number of pod replicas for your deployment.

Scaling Command:

kubectl scale deployment my-deployment --replicas=5

To verify the scaling operation:

kubectl get deployments

As the output shows, the number of replicas increased from 3 to 5.

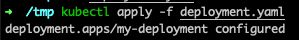
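Keep in mind that kubectl scale changes only the live deployment. The file deployment.yaml still says replicas: 3, so the next kubectl apply -f deployment.yaml sets the count back to 3. A declarative alternative is to change the number in the file and apply it again. The helper below is a small sketch of that idea; the function name set_replicas is hypothetical, and it assumes the manifest has a single replicas: line.

```shell
# Hypothetical helper: rewrite the "replicas:" value in a manifest file in place.
# A backup copy of the original file is kept with a .bak suffix.
set_replicas() {
  file=$1
  count=$2
  sed -i.bak -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+/\1 ${count}/" "$file"
}
```

For example, set_replicas deployment.yaml 5 followed by kubectl apply -f deployment.yaml scales to five replicas and keeps the file and the cluster in agreement.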

Step 6: Updating Applications

One of the key features of Kubernetes is the ability to perform rolling updates. This allows you to update the application version without downtime.

Updating Deployment:

Edit the deployment to update the container image:

    # deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
            - name: my-container
              image: nginx:latest # Update the image tag here

Apply the changes:

kubectl apply -f deployment.yaml

Kubernetes will perform a rolling update, replacing the old pods with new ones gradually.
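To follow a rolling update as it happens, kubectl has a rollout subcommand. The helper below is a sketch under a few assumptions: the function name roll_out_image is hypothetical, and it relies on the deployment and container names used in this guide. Because nginx and nginx:latest refer to the same image, a pinned tag such as nginx:1.27 (chosen here only as an example) makes the change easier to observe.

```shell
# Hypothetical helper: switch the deployment to a new image and wait for the rollout.
# Assumes the names from this guide: deployment my-deployment, container my-container.
roll_out_image() {
  image=$1
  kubectl set image deployment/my-deployment my-container="$image" &&
    kubectl rollout status deployment/my-deployment
}
```

Calling roll_out_image nginx:1.27 blocks until the new pods are ready or the rollout fails. If the new version misbehaves, kubectl rollout undo deployment/my-deployment returns to the previous revision.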


Step 7: Namespace Management

Namespaces in Kubernetes provide a way to divide cluster resources between multiple users or applications. This is particularly useful in large environments.

Creating a Namespace

kubectl create namespace my-namespace

You can then create resources within this namespace by specifying the -n flag:

kubectl apply -f pod.yaml -n my-namespace

Check the resources within a namespace:

kubectl get pods -n my-namespace
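Instead of passing -n on every command, the namespace can also be written into the manifest. The script below is a minimal sketch that writes such a manifest; the file name namespaced-pod.yaml is an assumption made for this example.

```shell
# Write a pod manifest that names its namespace in metadata (file name is illustrative).
cat > namespaced-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
  namespace: my-namespace
spec:
  containers:
    - name: my-container
      image: nginx
EOF

echo "wrote namespaced-pod.yaml"
```

With this file, kubectl apply -f namespaced-pod.yaml creates the pod in my-namespace without the -n flag. To make my-namespace the default for all later commands in the current context, kubectl config set-context --current --namespace=my-namespace is another option.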

Step 8: Clean up

To delete everything you created, execute the following commands:

kubectl delete -f pod.yaml

kubectl delete -f deployment.yaml

kubectl delete -f service.yaml

These commands will remove the pod, deployment, and service you created during this guide.
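The commands above act on the default namespace, so the pod created in my-namespace during Step 7 is still there. The helper below is a sketch of the remaining cleanup; the function name cleanup_extras is hypothetical, and it assumes the Minikube cluster from Step 1.

```shell
# Hypothetical helper: remove what the delete commands above leave behind.
cleanup_extras() {
  # Deleting a namespace also deletes every resource inside it.
  kubectl delete namespace my-namespace --ignore-not-found
  # Stops the local cluster but keeps its state on disk.
  minikube stop
}
```

After calling cleanup_extras, minikube start brings the same cluster back. To remove the cluster completely, minikube delete can be used instead of minikube stop.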

Conclusion

Migrating from Docker to Kubernetes involves understanding new concepts and tools, but the transition is manageable with the right guidance. Kubernetes offers powerful features for scaling, managing, and updating your applications, making it an essential tool for modern cloud-native development. By following this practical guide, you’ll be well on your way to harnessing the full potential of Kubernetes.

Happy orchestrating!


Suleiman Dibirov | Sciencx (2024-08-29T16:15:26+00:00) An Intro to Kubernetes for Docker Developers. Retrieved from https://www.scien.cx/2024/08/29/an-intro-to-kubernetes-for-docker-developers/
