This content originally appeared on DEV Community and was authored by vishwasnarayanre

Docker is a platform for developing and running applications in containers. Containers are compact, lightweight execution environments that share the host operating system's kernel but otherwise run in isolation. The idea of containers had been around for a while before Docker, an open source project launched in 2013. Docker helped popularize the technology and has driven the cloud-native pattern of building software from containers and microservices.

What are containers?

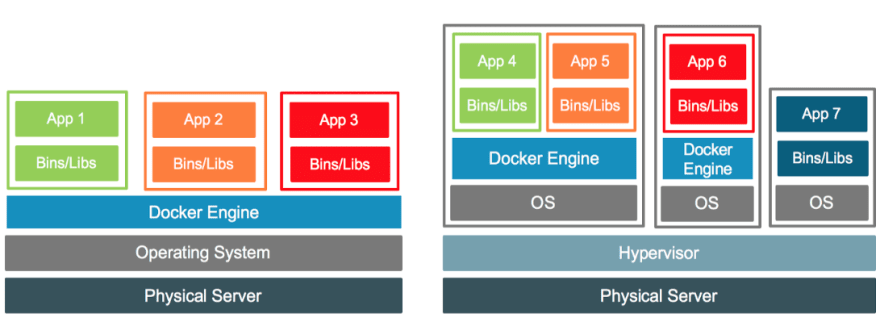

One aim of modern software architecture is to keep programs on the same host or cluster isolated from one another, so that they do not interfere with each other's operation or maintenance. This can be challenging because of the packages, libraries, and other components each program needs to run. Virtual machines have become one solution to this problem. They keep applications on the same hardware completely separate, which minimises conflicts between software components and competition for hardware resources. However, virtual machines are large, because each one needs its own operating system and so is usually gigabytes in size. They are also difficult to manage and update.

Containers, on the other hand, isolate the execution environments of programs while sharing the underlying OS kernel. They are usually measured in megabytes, consume far fewer resources than virtual machines, and start almost instantly. They can be packed much more densely on the same hardware, and they can be spun up and down in large numbers with much less time and overhead. Containers offer a highly efficient, granular way to combine software components into the application and service stacks a modern organisation needs. They also make it easier to update and maintain individual software components.

What is Docker?

Docker is a free and open-source project that makes it simple to build containers and container-based applications. Docker was originally designed for Linux, but it now runs on Windows and macOS as well. To understand how Docker works, let's look at some of the components you'd need to build Docker-containerized applications.


Dockerfile

A Dockerfile is the foundation of every Docker container. It is a text file, written in a simple syntax, that contains the instructions for building a Docker image (more on that in a moment). A Dockerfile defines the base operating system image the container will run on. It also defines the languages, environment variables, file locations, network ports, and other components the container requires. And, of course, it defines the command the container will actually run.

Remember: the file is named simply Dockerfile and has no file extension.
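To make this concrete, here is a small Python sketch that writes a minimal Dockerfile to a directory. Everything inside it is an illustrative assumption, not something the article prescribes: the base image python:3.11-slim, the app.py file, the APP_ENV variable, and port 8000. The points it shows are the file name, which is exactly Dockerfile with no extension, and the kinds of instructions a Dockerfile holds.

```python
import tempfile
from pathlib import Path

# Hypothetical example contents. Each instruction covers one of the roles above:
# base image, environment variable, file location, network port, command to run.
DOCKERFILE_TEXT = """\
FROM python:3.11-slim
ENV APP_ENV=production
WORKDIR /app
COPY app.py .
EXPOSE 8000
CMD ["python", "app.py"]
"""


def write_dockerfile(directory: str) -> Path:
    """Write a minimal Dockerfile into `directory` and return its path."""
    # The file is named exactly "Dockerfile", with no extension.
    path = Path(directory) / "Dockerfile"
    path.write_text(DOCKERFILE_TEXT)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        dockerfile = write_dockerfile(tmp)
        print(dockerfile.name, repr(dockerfile.suffix))
        print(dockerfile.read_text())
```

With an app.py placed next to it, running docker build -t my-app . in that directory would build an image from this file. The tag my-app is also just an example.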

Docker image

After you've written your Dockerfile, you use Docker's build tool to create an image from it. A Docker image is a portable, read-only package that specifies which software components the container will run and how. The Dockerfile is the series of instructions that tells the build tool how to create that image. A Dockerfile almost always contains instructions for downloading software packages from online repositories, so you should take care to specify the exact versions you want. Otherwise, the same Dockerfile can produce different images depending on when it is built. Once an image has been built, however, it remains static. Codefresh provides a more detailed look at how to build an image.
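The advice about pinning versions can be partly checked by a script. The following is a rough Python heuristic, not an official Docker tool. It flags FROM lines whose base image has no explicit tag or uses latest. It only understands the simple FROM [--platform=...] image[:tag] [AS name] form and ignores line continuations and build arguments.

```python
import re
from typing import Optional

# Matches "FROM [--platform=...] image [AS stage]" (case-insensitive).
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)(?:\s+AS\s+(\S+))?", re.IGNORECASE
)


def _tag_of(image: str) -> Optional[str]:
    """Return the tag of an image reference, or None if it has none."""
    # Only look at the last path segment so "host:5000/app" isn't read as tag "5000/app".
    last = image.rsplit("/", 1)[-1]
    return last.split(":", 1)[1] if ":" in last else None


def unpinned_base_images(dockerfile_text: str) -> list[str]:
    """List base images in FROM lines that have no explicit version.

    An image counts as pinned if it has a digest (@sha256:...) or a tag other
    than "latest". "scratch" and names of earlier build stages are skipped.
    """
    stages: set[str] = set()
    problems: list[str] = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image, stage = match.group(1), match.group(2)
        pinned = (
            image.lower() == "scratch"
            or image.lower() in stages
            or "@" in image
            or _tag_of(image) not in (None, "latest")
        )
        if not pinned:
            problems.append(image)
        if stage:
            stages.add(stage.lower())
    return problems


if __name__ == "__main__":
    print(unpinned_base_images("FROM node\nFROM python:3.11-slim\n"))
```

Even a tag such as 3.11-slim can be re-pushed by its publisher, so pinning by digest is the stricter option when fully reproducible builds matter.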

Docker run

Docker's run command is what actually launches a container. Each container is a running instance of an image. Containers are intended to be transient and temporary, but they can be stopped and restarted. Restarting returns a container to the state it was in when it was stopped. Furthermore, several container instances of the same image can run concurrently, as long as each container has a unique name. The Code Review has a good rundown of the various run command options if you want a sense of how it works.
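As a sketch of running several instances of one image side by side, the Python below builds docker run command lines and gives each instance a unique --name. It only assembles the argument lists and does not call Docker. The image name my-app:1.0, the web prefix, and the helper itself are hypothetical.

```python
import shlex


def run_commands(image: str, prefix: str, count: int, detach: bool = True) -> list[list[str]]:
    """Build `docker run` argument lists for `count` instances of `image`.

    Each container gets a distinct name (prefix-1, prefix-2, ...), because
    Docker refuses to create two containers with the same name.
    """
    commands = []
    for i in range(1, count + 1):
        cmd = ["docker", "run"]
        if detach:
            cmd.append("-d")  # run the container in the background
        cmd += ["--name", f"{prefix}-{i}", image]
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    for cmd in run_commands("my-app:1.0", "web", 3):
        print(shlex.join(cmd))
```

Passing each list to subprocess.run would start the containers. After that, docker stop web-1 followed by docker start web-1 would stop and restart one of them.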

Docker Hub

Docker Hub is Docker's hosted registry for finding and sharing container images. It hosts official images for common operating systems, programming languages, and databases, along with images published by companies and individual users. A Dockerfile can therefore start from an existing image instead of building everything from scratch.
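Short image names such as python:3.11 are resolved against Docker Hub by default. The Python sketch below approximates Docker's normalization rules for image names:

- A missing registry means docker.io.
- Single-name official images live under the library namespace.
- A missing tag means latest.

It is a simplified illustration, not Docker's actual reference parser, and it does not handle digests.

```python
def normalize_reference(ref: str) -> str:
    """Expand a short image reference to registry/repository:tag form.

    Simplified: digests (@sha256:...) and other edge cases are not handled.
    """
    first, _, rest = ref.partition("/")
    # The first component is a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", ref
    # Split the tag off the last path segment only.
    head, _, last = path.rpartition("/")
    name, sep, tag = last.partition(":")
    if not sep:
        tag = "latest"
    repo = f"{head}/{name}" if head else name
    if registry == "docker.io" and "/" not in repo:
        repo = f"library/{repo}"  # single-name official images live under "library"
    return f"{registry}/{repo}:{tag}"


if __name__ == "__main__":
    for ref in ["python:3.11", "bitnami/redis", "localhost:5000/app"]:
        print(ref, "->", normalize_reference(ref))
```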

Docker Engine

Docker Engine is the fundamental client-server infrastructure that builds and manages containers, and it is at the heart of Docker. If anyone says Docker in a general sense and isn't referring to the business or the project as a whole, they're usually referring to Docker Engine. Docker Engine comes in two editions: Docker Engine Enterprise and Docker Engine Community.

Docker Compose, Docker Swarm, and Kubernetes

Docker also makes it easy to coordinate the behavior of several containers, and so to build application stacks by connecting containers together. Docker created Docker Compose to simplify developing and testing multi-container applications. It is a command-line tool, much like the Docker client. It reads a specially formatted descriptor file and assembles an application from multiple containers running on a single host.
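Compose descriptor files (usually YAML) list an application's services and how they relate to each other. For example, a depends_on entry makes Compose start a service's dependencies first. The Python sketch below mirrors a tiny descriptor as a dictionary and derives a start order with a topological sort, using graphlib from Python 3.9+. The service names and images are hypothetical. The sketch only models the ordering idea, not Compose itself.

```python
from graphlib import TopologicalSorter

# Hypothetical four-service stack, shaped like a Compose "services" section.
services = {
    "web": {"image": "my-web:1.0", "depends_on": ["api"]},
    "api": {"image": "my-api:1.0", "depends_on": ["db", "cache"]},
    "db": {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def start_order(services: dict) -> list[str]:
    """Return an order in which every service starts after its dependencies."""
    # Map each service to the set of services it depends on (its predecessors).
    # set() over a list or a dict both yield dependency names.
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(start_order(services))
```

In a real project, the same structure would live in a compose.yaml file and be started with docker compose up.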

Other products, such as Docker Swarm and Kubernetes, provide more sophisticated versions of these capabilities, known as container orchestration. Docker itself provides the essentials. Even though Swarm grew out of the Docker project, Kubernetes has become the de facto orchestration tool of choice for Docker containers.

Let's Discuss the Pros and Cons of Docker

Docker is a fantastic tool for building containerized applications. It is easy to use and very powerful, but it also has some drawbacks that can make it less flexible. Here is a list of Docker's pros and cons.

Pros

Docker has isolation and throttling features

Docker containers isolate applications not only from one another but also from the underlying system. This results in a cleaner software stack. It also makes it easy to control how a containerized application uses system resources such as CPU, GPU, memory, I/O, and networking. Keeping data and code apart becomes much more straightforward, too.
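Resource limits are set per container when it starts. The sketch below turns a few hypothetical limit values into docker run flags. The --cpus, --memory, and --gpus options are real docker run options, while the helper function and its parameters are assumptions made for illustration.

```python
from typing import Optional


def limit_flags(cpus: Optional[float] = None, memory: Optional[str] = None,
                gpus: Optional[str] = None) -> list[str]:
    """Translate resource limits into `docker run` flags.

    cpus:   how many CPU cores the container may use, e.g. 1.5 -> "--cpus=1.5"
    memory: hard memory cap with a unit suffix, e.g. "512m" -> "--memory=512m"
    gpus:   GPUs to expose, e.g. "all" -> "--gpus=all" (needs GPU support on the host)
    """
    flags = []
    if cpus is not None:
        if cpus <= 0:
            raise ValueError("cpus must be positive")
        flags.append(f"--cpus={cpus}")
    if memory is not None:
        flags.append(f"--memory={memory}")
    if gpus is not None:
        flags.append(f"--gpus={gpus}")
    return flags


if __name__ == "__main__":
    # Hypothetical image name; the command is only printed, not executed.
    cmd = ["docker", "run", "-d", *limit_flags(cpus=1.5, memory="512m"), "my-app:1.0"]
    print(" ".join(cmd))
```

The CPU and memory limits of a container that is already running can be changed with docker update.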

Containers are more portable

Any computer that supports the container's runtime environment can run a Docker container. Because applications aren't tied to the host operating system, both the program and the underlying operating environment can be kept clean and simple. (To get an interactive command-line session inside a container, for example a shell, start it with the -it flags.)

A Python container built for Linux, for example, can run on almost any Linux system that supports containers. Usually, all of the app's dependencies are shipped in the same container. Container-based applications can easily be migrated from on-premises systems to cloud environments, or from developers' laptops to servers. The only requirement is that the target system supports Docker and any third-party tools used with it, such as Kubernetes.

Docker container images are typically built for a specific platform. For example, a Windows container will not run on Linux, and vice versa. Previously, one workaround was to launch a virtual machine running an instance of the required operating system, and then run the container inside that virtual machine.

The Docker team has since developed a more sophisticated approach known as manifest lists, often just called manifests. They allow images for different operating systems to be packaged side by side under the same image name. Manifests are still considered experimental, but they show how containers may become a cross-platform, cross-environment solution.
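Conceptually, a manifest list is an index that maps each platform to a platform-specific image, and the client pulls the entry that matches its host. The Python sketch below models only that lookup. The field names follow the general shape of a manifest list (platform.os, platform.architecture, digest), but the digests are made-up placeholders. Real clients also consider details such as CPU variants (for example arm/v7), which this sketch ignores.

```python
# Made-up manifest list: the digests are placeholders, not real images.
MANIFEST_LIST = {
    "manifests": [
        {"digest": "sha256:1111", "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:2222", "platform": {"os": "linux", "architecture": "arm64"}},
        {"digest": "sha256:3333", "platform": {"os": "windows", "architecture": "amd64"}},
    ]
}


def select_manifest(index: dict, os_name: str, arch: str) -> str:
    """Return the digest of the image built for os_name/arch."""
    for entry in index["manifests"]:
        plat = entry["platform"]
        if plat["os"] == os_name and plat["architecture"] == arch:
            return entry["digest"]
    # No entry for this platform: the image simply cannot run here.
    raise LookupError(f"no image for {os_name}/{arch} in this manifest list")


if __name__ == "__main__":
    print(select_manifest(MANIFEST_LIST, "linux", "arm64"))
```

This lookup is why one image name can serve both a Linux laptop and a Windows server, provided the publisher built an entry for each platform.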

Docker containers are composable, and a single commit can capture a container's changes

Most enterprise systems are made up of several different components arranged in a stack, for example a web server, a database, and an in-memory cache. Containers allow these components to be assembled into a functional unit of easily interchangeable parts. Each component runs in its own container and can be maintained, updated, swapped out, and modified independently of the others.

This is essentially the microservices model of application architecture. The microservices model divides application functionality into discrete, self-contained services. It is an alternative to slow, traditional development processes and rigid monolithic applications. Lightweight, portable containers make it easy to build and maintain microservices-based applications.

Docker containers have orchestration and scaling

Since containers are lightweight and have low overhead, it is possible to run a large number of them on a single host. Containers can also be used to spread an application across clusters of machines. They can scale services up or down to handle spikes in demand or to conserve resources.

The most enterprise-grade tools for deploying, managing, and scaling containers come from third parties. Among them is Kubernetes, originally developed at Google. It is a framework for automating not just container deployment and scaling, but also how containers are connected, load-balanced, and managed.
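Kubernetes' Horizontal Pod Autoscaler is one concrete example of automated scaling. Its documentation describes the core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips the change when that ratio is within a tolerance of 1.0, which is 0.1 by default. The Python below is a simplified sketch of that rule. The min/max bounds are added here as plain parameters. Real HPA behavior such as stabilization windows, missing metrics, and multiple metrics is omitted.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision.

    current: replicas running now
    metric:  observed metric value, e.g. average CPU utilization (%)
    target:  desired metric value per replica
    """
    if current < 1 or target <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: leave it alone
    else:
        desired = math.ceil(current * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas at 90% CPU against a 60% target -> ceil(3 * 1.5) = 5
    print(desired_replicas(3, 90, 60))
```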

Kubernetes, together with the Helm package manager, also lets you build and reuse multi-container application definitions, known as "Helm charts", so that large application stacks can be defined and deployed on demand.

Docker also has its own built-in orchestration system, Swarm mode, which is still used for simpler scenarios. However, Kubernetes has become the de facto standard; notably, Kubernetes is included with Docker Enterprise Edition.

Cons

Docker containers don't run at bare-metal speed

Containers avoid much of the overhead of virtual machines, but their impact on performance is still measurable. If your workload requires bare-metal performance, a container can get you close, much closer than a VM, but you will still see some overhead.

Docker containers are stateless and immutable

Containers boot and run from an image that specifies the container's contents. That image is immutable by default: once it has been built, it does not change.

As a result, containers lack persistence. Suppose you start a container instance, destroy it, and then start a new one from the same image. The new container instance will not have any of the old one's state.

This is another difference between containers and virtual machines. Because it has its own file system, a virtual machine keeps its state across sessions by default. With a container, the only thing that persists is the image used to boot the program that runs in it. The only way to change that is to produce a new, updated container image.

Container statelessness makes container contents more stable and easier to compose predictably into application stacks. It also forces developers to keep application data and code separate, which is a big advantage. If a container needs some kind of persistent state, that state must be stored somewhere else. This could be a database or a separate storage volume attached to the container when it starts.
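The difference between a container's own writable state and an attached volume can be illustrated with a toy model. The Python classes below are hypothetical and do not use Docker. They only mimic the behavior described above. Each new container starts from the unchanged image plus an empty writable layer. A volume, by contrast, is a separate store that outlives any one container. In this model, paths under /data/ stand in for a mounted volume.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Mapping, Optional


@dataclass(frozen=True)
class Image:
    """Read-only snapshot of files; it never changes after it is built."""
    files: Mapping[str, str]


@dataclass
class Container:
    """A running instance: the image plus a private writable layer."""
    image: Image
    volume: dict  # external storage shared across containers (survives removal)
    layer: dict = field(default_factory=dict)  # private writable layer (lost on removal)

    def write(self, path: str, data: str) -> None:
        # In this toy model, paths under /data/ are the mounted volume.
        target = self.volume if path.startswith("/data/") else self.layer
        target[path] = data

    def read(self, path: str) -> Optional[str]:
        if path.startswith("/data/"):
            return self.volume.get(path)
        # The writable layer shadows the image's files.
        return self.layer.get(path, self.image.files.get(path))


image = Image(MappingProxyType({"/app/main.py": "print('hi')"}))
volume: dict = {}

first = Container(image, volume)
first.write("/tmp/cache", "scratch data")
first.write("/data/orders.db", "order 1")
del first  # "destroy" the container and its writable layer

second = Container(image, volume)  # fresh container from the same image
print(second.read("/tmp/cache"))       # state from the old writable layer is gone
print(second.read("/data/orders.db"))  # state stored in the volume survives
```

The read-only mapping makes the image's immutability explicit: any attempt to modify image.files raises a TypeError.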

Whether they deliver IaaS, PaaS, or SaaS, companies make extensive use of Docker in one way or another. In this way, Docker Engine is reshaping software development.

Finally, don't confuse containers with VMs or with microservices; these concepts are easy to mix up.


vishwasnarayanre | Sciencx (2021-04-21T04:09:31+00:00) Docker in making the world more unique in terms of the development. Retrieved from https://www.scien.cx/2021/04/21/docker-in-making-the-world-more-unique-in-terms-of-the-development/
