This content originally appeared on raganwald.com and was authored by Reginald Braithwaite

Prelude

In this essay, we’re going to explore regular expressions by implementing regular expressions.

This essay will be of interest to anyone who would like a refresher on the fundamentals of regular expressions and pattern matching. It is not intended as a practical “how-to” for using modern regexen, but the exercise of implementing basic regular expressions is a terrific foundation for understanding how to use and optimize regular expressions in production code.

As we develop our implementation, we will demonstrate a number of important results concerning regular expressions, regular languages, and finite-state automata, such as:

- For every formal regular expression, there exists an equivalent finite-state recognizer.

- For every finite-state recognizer with epsilon-transitions, there exists a finite-state recognizer without epsilon-transitions.

- For every finite-state recognizer, there exists an equivalent deterministic finite-state recognizer.

- The set of finite-state recognizers is closed under union, catenation, and kleene*.

- Every regular language can be recognized by a finite-state recognizer.

Then, in Part II, we will explore more features of regular expressions, and show that if a finite-state automaton recognizes a language, that language is regular.

All of these things have been proven, and there are numerous explanations of the proofs available in literature and online. In this essay, we will demonstrate these results in a constructive proof style. For example, to demonstrate that for every formal regular expression, there exists an equivalent finite-state recognizer, we will construct a function that takes a formal regular expression as an argument, and returns an equivalent finite-state recognizer.1

We’ll also look at various extensions to formal regular languages that make it easier to write regular expressions. Some–like + and ?–will mirror existing regex features, while others–like ∩ (intersection), \ (difference), and ¬ (complement)–do not have direct regex equivalents.

When we’re finished, we’ll know a lot more about regular expressions, finite-state recognizers, and pattern matching.

If you are somewhat familiar with formal regular expressions (and the regexen we find in programming tools), feel free to skip the rest of the prelude and jump directly to the Table of Contents.

what is a regular expression?

In programming jargon, a regular expression, or regex (plural “regexen”),2 is a sequence of characters that define a search pattern. They can also be used to validate that a string has a particular form. For example, /ab*c/ is a regex that matches an a, zero or more bs, and then a c, anywhere in a string.

Regexen are–fundamentally–descriptions of sets of strings. A simple example is the regex /^0|1(0|1)*$/, which describes the set of all strings that represent whole numbers in base 2, also known as the “binary numbers.”

In computer science, the strings that a regular expression matches are known as “sentences,” and the set of all strings that a regular expression matches is known as the “language” that a regular expression matches.

So for the regex /^0|1(0|1)*$/, its language is “The set of all binary numbers,” and strings like 0, 11, and 1010101 are sentences in its language, while strings like 01, two, and Kltpzyxm are sentences that are not in its language.3

Regexen are not descriptions of machines that recognize strings. Regexen describe “what,” but not “how.” To actually use regexen, we need an implementation, a machine that takes a regular expression and a string to be scanned, and returns–at the very minimum–whether or not the string matches the expression.

Regexen implementations exist on most programming environments and most command-line environments. grep is a regex implementation. Languages like Ruby and JavaScript have regex libraries built in and provide syntactic support for writing regex literals directly in code.

The syntactic style of wrapping a regex in / characters is a syntactic convention in many languages that support regex literals, and we repeat them here to help distinguish them from formal regular expressions.

formal regular expressions

Regex programming tools evolved as a practical application for Formal Regular Expressions, a concept discovered by Stephen Cole Kleene, who was exploring Regular Languages. Regular Expressions in the computer science sense are a tool for describing Regular Languages: Any well-formed regular expression describes a regular language, and every regular language can be described by a regular expression.

Formal regular expressions are made with three “atomic” or indivisible expressions:

- The symbol

∅describes the language with no sentences,{ }, also called “the empty set.” - The symbol

εdescribes the language containing only the empty string,{ '' }. - Literals such as

x,y, orzdescribe languages containing single sentences, containing single symbols. e.g. The literalrdescribes the language{ 'r' }.

What makes formal regular expressions powerful, is that we have operators for alternating, catenating, and quantifying regular expressions. Given that x is a regular expression describing some language X, and y is a regular expression describing some language Y:

- The expression x

|y describes the union of the languagesXandY, meaning, the sentencewbelongs tox|yif and only ifwbelongs to the languageX, orwbelongs to the languageY. We can also say that x|y represents the alternation of x and y. - The expression xy describes the language

XY, where a sentenceabbelongs to the languageXYif and only ifabelongs to the languageX, andbbelongs to the languageY. We can also say that xy represents the catenation of the expressions x and y. - The expression x

*describes the languageZ, where the sentenceε(the empty string) belongs toZ, and, the sentencepqbelongs toZif and only ifpis a sentence belonging toX, andqis a sentence belonging toZ. We can also say that x*represents a quantification of x.

Before we add the last rule for regular expressions, let’s clarify these three rules with some examples. Given the constants a, b, and c, resolving to the languages { 'a' }, { 'b' }, and { 'b' }:

- The expression

b|cdescribes the language{ 'b', 'c' }, by rule 1. - The expression

abdescribes the language{ 'ab' }by rule 2. - The expression

a*describes the language{ '', 'a', 'aa', 'aaa', ... }by rule 3.

Our operations have a precedence, and it is the order of the rules as presented. So:

- The expression

a|bcdescribes the language{ 'a', 'bc' }by rules 1 and 2. - The expression

ab*describes the language{ 'a', 'ab', 'abb', 'abbb', ... }by rules 2 and 3. - The expression

b|c*describes the language{ '', 'b', 'c', 'cc', 'ccc', ... }by rules 1 and 3.

As with the algebraic notation we are familiar with, we can use parentheses:

- Given a regular expression x, the expression

(x)describes the language described by x.

This allows us to alter the way the operators are combined. As we have seen, the expression b|c* describes the language { '', 'b', 'c', 'cc', 'ccc', ... }. But the expression (b|c)* describes the language { '', 'b', 'c', 'bb', 'cc', 'bbb', 'ccc', ... }.

It is quite obvious that regexen borrowed a lot of their syntax and semantics from regular expressions. Leaving aside the mechanism of capturing and extracting portions of a match, almost every regular expressions is also a regex. For example, /reggiee*/ is a regular expression that matches words like reggie, reggiee, and reggieee anywhere in a string.

Regexen add a lot more affordances like character classes, the dot operator, decorators like ? and +, and so forth, but at their heart, regexen are based on regular expressions.

And now to the essay.

Table of Contents

Prelude

Our First Goal: “For every regular expression, there exists an equivalent finite-state recognizer”

Evaluating Arithmetic Expressions

- converting infix to reverse-polish representation

- handling a default operator

- evaluating the reverse-polish representation with a stack machine

Finite-State Recognizers

Building Blocks

Alternating Regular Expressions

Taking the Product of Two Finite-State Automata

Catenating Regular Expressions

- catenating descriptions with epsilon-transitions

- removing epsilon-transitions

- implementing catenation

- unreachable states

- the catch with catenation

Converting Nondeterministic to Deterministic Finite-State Recognizers

- taking the product of a recognizer… with itself

- computing the powerset of a nondeterministic finite-state recognizer

- catenation without the catch

- the fan-out problem

- summarizing catenation (and an improved union)

Quantifying Regular Expressions

What We Have Learned So Far

- for every finite-state recognizer with epsilon-transitions, there exists a finite-state recognizer without epsilon-transitions

- for every finite-state recognizer, there exists an equivalent deterministic finite-state recognizer

- every regular language can be recognized in linear time

Our First Goal: “For every regular expression, there exists an equivalent finite-state recognizer”

As mentioned in the Prelude, Stephen Cole Kleene developed the concept of formal regular expressions and regular languages, and published a seminal theorem about their behaviour in 1951.

Regular expressions are not machines. In and of themselves, they don’t generate sentences in a language, nor do they recognize whether sentences belong to a language. They define the language, and it’s up to us to build machines that do things like generate or recognize sentences.

Kleene studied machines that can recognize sentences in languages. Studying such machines informs us about the fundamental nature of the computation involved. In the case of formal regular expressions and regular languages, Kleene established that for every regular language, there is a finite-state automaton that recognizes sentences in that language.

(Finite-state automatons that are arranged to recognize sentences in languages are also called “finite-state recognizers,” and that is the term we will use from here on.)

Kleene also established that for every finite-state recognizer, there is a formal regular expression that describes the language that the finite-state recognizer accepts. In proving these two things, he proved that the set of all regular expressions and the set of all finite-state recognizers is equivalent.

In the first part of this essay, we are going to demonstrate these two important components of Kleene’s theorem by writing JavaScript code, starting with a demonstration that “For every regular expression, there exists an equivalent finite-state recognizer.”

our approach

Our approach to demonstrating that for every regular expression, there exists an equivalent finite-state recognizer will be to write a program that takes as its input a regular expression, and produces as its output a description of a finite-state recognizer that accepts sentences in the language described by the regular expression.

Our in computer jargon, we’re going to write a regular expression to finite-state recognizer compiler. Compilers and interpreters are obviously an extremely interesting tool for practical programming: They establish an equivalency between expressing an algorithm in a language that humans understand, and expressing an equivalent algorithm in a language a machine understands.

Our compiler will work like this: Instead of thinking of a formal regular expression as a description of a language, we will think of it as an expression, that when evaluated, returns a finite-state recognizer.

Our “compiler” will thus be an algorithm that evaluates regular expressions.

Evaluating Arithmetic Expressions

We needn’t invent our evaluation algorithm from first principles. There is a great deal of literature about evaluating expressions, especially expressions that consist of values, operators, and parentheses.

One simple and easy-to work-with approach works like this:

- Take an expression in infix notation (when we say “infix notation,” we include expressions that contain prefix operators, postfix operators, and parentheses).

- Convert the expression to reverse-polish representation, also called reverse-polish notation, or “RPN.”

- Push the RPN onto a stack.

- Evaluate the RPM using a stack machine.

Before we write code to do this, we’ll do it by hand for a small expression, 3*2+4!:

Presuming that the postfix ! operator has the highest precedence, followed by the infix * and then the infix + has the lowest precedence, 3*2+4! in infix notation becomes [3, 2, *, 4, !, +] in reverse-polish representation.

Evaluating [3, 2, *, 4, !, +] with a stack machine works by taking each of the values and operators in order. If a value is next, push it onto the stack. If an operator is next, pop the necessary number of arguments off apply the operator to the arguments, and push the result back onto the stack. If the reverse-polish representation is well-formed, after processing the last item from the input, there will be exactly one value on the stack, and that is the result of evaluating the reverse-polish representation.

Let’s try it:

- The first item is a

3. We push it onto the stack, which becomes[3]. - The next item is a

2. We push it onto the stack, which becomes[3, 2]. - The next item is a

*, which is an operator with an arity of two. - We pop

2and3off the stack, which becomes[]. - We evaluate

*(3, 2)(in a pseudo-functional form). The result is6. - We push

6onto the stack, which becomes[6]. - The next item is

4. We push it onto the stack, which becomes[6, 4]. - The next item is a

!, which is an operator with an arity of one. - We pop

4off the stack, which becomes[6]. - We evaluate

!(4)(in a pseudo-functional form). The result is24. - We push

24onto the stack, which becomes[6, 24]. - The next item is a

+, which is an operator with an arity of two. - We pop

24and6off the stack, which becomes[]. - We evaluate

+(6, 24)(in a pseudo-functional form). The result is30. - We push

30onto the stack, which becomes[30]. - There are no more items to process, and the stack contains one value,

[30]. We therefore return[30]as the result of evaluating[3, 2, *, 4, !, +].

Let’s write this in code. We’ll start by writing an infix-to-reverse-polish representation converter. We are not writing a comprehensive arithmetic evaluator, so we will make a number of simplifying assumptions, including:

- We will only handle singe-digit values and single-character operators.

- We will not allow ambiguos operators. For example, in ordinary arithmetic, the

-operator is both a prefix operator that negates integer values, as well as an infix operator for subtraction. In our evaluator,-can be one, or the other, but not both. - We’ll only process strings when converting to reverse-polish representation. It’ll be up to the eventual evaluator to know that the string

'3'is actually the number 3. - We aren’t going to allow whitespace.

1 + 1will fail,1+1will not.

We’ll also parameterize the definitions for operators. This will allow us to reuse our evaluator for regular expressions simply by changing the operator definitions.

converting infix to reverse-polish representation

Here’s our definition for arithmetic operators:

const arithmetic = {

operators: {

'+': {

symbol: Symbol('+'),

type: 'infix',

precedence: 1,

fn: (a, b) => a + b

},

'-': {

symbol: Symbol('-'),

type: 'infix',

precedence: 1,

fn: (a, b) => a - b

},

'*': {

symbol: Symbol('*'),

type: 'infix',

precedence: 3,

fn: (a, b) => a * b

},

'/': {

symbol: Symbol('/'),

type: 'infix',

precedence: 2,

fn: (a, b) => a / b

},

'!': {

symbol: Symbol('!'),

type: 'postfix',

precedence: 4,

fn: function factorial(a, memo = 1) {

if (a < 2) {

return a * memo;

} else {

return factorial(a - 1, a * memo);

}

}

}

}

};

Note that for each operator, we define a symbol. We’ll use that when we push things into the output queue so that our evaluator can disambiguate symbols from values (Meaning, of course, that these symbols can’t be values.) We also define a precedence, and an eval function that the evaluator will use later.

Armed with this, how do we convert infix expressions to reverse-polish representation? With a “shunting yard.”

The Shunting Yard Algorithm is a method for parsing mathematical expressions specified in infix notation with parentheses. As we implement it here, it will produce a reverse-polish representation without parentheses. The shunting yard algorithm was invented by Edsger Dijkstra, and named the “shunting yard” algorithm because its operation resembles that of a railroad shunting yard.

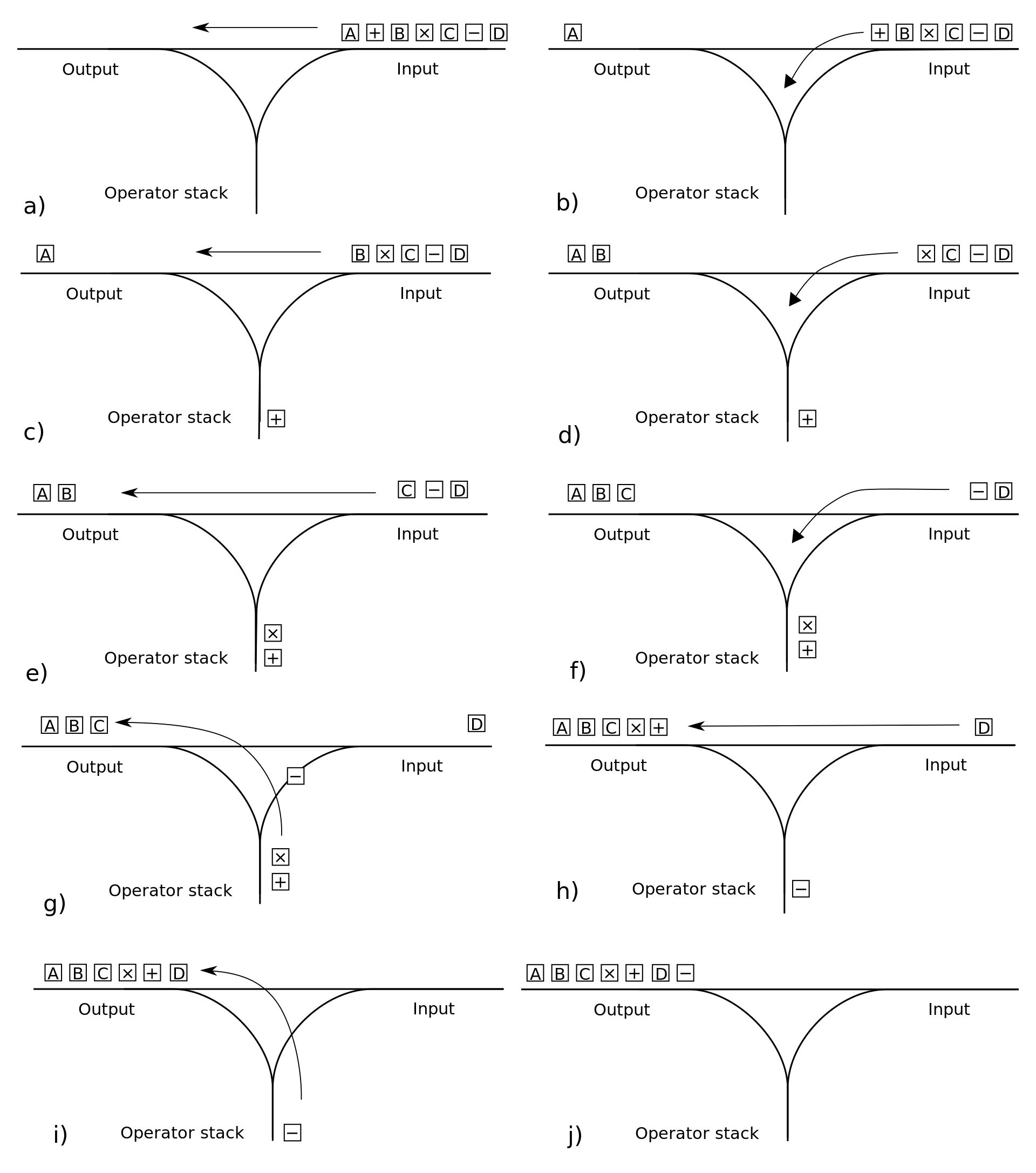

The shunting yard algorithm is stack-based. Infix expressions are the form of mathematical notation most people are used to, for instance 3 + 4 or 3 + 4 × (2 − 1). For the conversion there are two lists, the input and the output. There is also a stack that holds operators not yet added to the output queue. To convert, the program reads each symbol in order and does something based on that symbol. The result for the above examples would be (in Reverse Polish notation) 3 4 + and 3 4 2 1 − × +, respectively.

Here’s our shunting yard implementation. There are a few extra bits and bobs we’ll fill in in a moment:

function shuntingYardFirstCut (infixExpression, { operators }) {

const operatorsMap = new Map(

Object.entries(operators)

);

const representationOf =

something => {

if (operatorsMap.has(something)) {

const { symbol } = operatorsMap.get(something);

return symbol;

} else if (typeof something === 'string') {

return something;

} else {

error(`${something} is not a value`);

}

};

const typeOf =

symbol => operatorsMap.has(symbol) ? operatorsMap.get(symbol).type : 'value';

const isInfix =

symbol => typeOf(symbol) === 'infix';

const isPostfix =

symbol => typeOf(symbol) === 'postfix';

const isCombinator =

symbol => isInfix(symbol) || isPostfix(symbol);

const input = infixExpression.split('');

const operatorStack = [];

const reversePolishRepresentation = [];

let awaitingValue = true;

while (input.length > 0) {

const symbol = input.shift();

if (symbol === '(' && awaitingValue) {

// opening parenthesis case, going to build

// a value

operatorStack.push(symbol);

awaitingValue = true;

} else if (symbol === '(') {

// value catenation

error(`values ${peek(reversePolishRepresentation)} and ${symbol} cannot be catenated`);

} else if (symbol === ')') {

// closing parenthesis case, clear the

// operator stack

while (operatorStack.length > 0 && peek(operatorStack) !== '(') {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

}

if (peek(operatorStack) === '(') {

operatorStack.pop();

awaitingValue = false;

} else {

error('Unbalanced parentheses');

}

} else if (isCombinator(symbol)) {

const { precedence } = operatorsMap.get(symbol);

// pop higher-precedence operators off the operator stack

while (isCombinator(symbol) && operatorStack.length > 0 && peek(operatorStack) !== '(') {

const opPrecedence = operatorsMap.get(peek(operatorStack)).precedence;

if (precedence < opPrecedence) {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

} else {

break;

}

}

operatorStack.push(symbol);

awaitingValue = isInfix(symbol);

} else if (awaitingValue) {

// as expected, go straight to the output

reversePolishRepresentation.push(representationOf(symbol));

awaitingValue = false;

} else {

// value catenation

error(`values ${peek(reversePolishRepresentation)} and ${symbol} cannot be catenated`);

}

}

// pop remaining symbols off the stack and push them

while (operatorStack.length > 0) {

const op = operatorStack.pop();

if (operatorsMap.has(op)) {

const { symbol: opSymbol } = operatorsMap.get(op);

reversePolishRepresentation.push(opSymbol);

} else {

error(`Don't know how to push operator ${op}`);

}

}

return reversePolishRepresentation;

}

Naturally, we need to test our work before moving on:

function deepEqual(obj1, obj2) {

function isPrimitive(obj) {

return (obj !== Object(obj));

}

if(obj1 === obj2) // it's just the same object. No need to compare.

return true;

if(isPrimitive(obj1) && isPrimitive(obj2)) // compare primitives

return obj1 === obj2;

if(Object.keys(obj1).length !== Object.keys(obj2).length)

return false;

// compare objects with same number of keys

for(let key in obj1) {

if(!(key in obj2)) return false; //other object doesn't have this prop

if(!deepEqual(obj1[key], obj2[key])) return false;

}

return true;

}

const pp = list => list.map(x=>x.toString());

function verifyShunter (shunter, tests, ...additionalArgs) {

try {

const testList = Object.entries(tests);

const numberOfTests = testList.length;

const outcomes = testList.map(

([example, expected]) => {

const actual = shunter(example, ...additionalArgs);

if (deepEqual(actual, expected)) {

return 'pass';

} else {

return `fail: ${JSON.stringify({ example, expected: pp(expected), actual: pp(actual) })}`;

}

}

)

const failures = outcomes.filter(result => result !== 'pass');

const numberOfFailures = failures.length;

const numberOfPasses = numberOfTests - numberOfFailures;

if (numberOfFailures === 0) {

console.log(`All ${numberOfPasses} tests passing`);

} else {

console.log(`${numberOfFailures} tests failing: ${failures.join('; ')}`);

}

} catch(error) {

console.log(`Failed to validate the description: ${error}`)

}

}

verifyShunter(shuntingYardFirstCut, {

'3': [ '3' ],

'2+3': ['2', '3', arithmetic.operators['+'].symbol],

'4!': ['4', arithmetic.operators['!'].symbol],

'3*2+4!': ['3', '2', arithmetic.operators['*'].symbol, '4', arithmetic.operators['!'].symbol, arithmetic.operators['+'].symbol],

'(3*2+4)!': ['3', '2', arithmetic.operators['*'].symbol, '4', arithmetic.operators['+'].symbol, arithmetic.operators['!'].symbol]

}, arithmetic);

//=> All 5 tests passing

handling a default operator

In mathematical notation, it is not always necessary to write a multiplication operator. For example, 2(3+4) is understood to be equivalent to 2 * (3 + 4).

Whenever two values are adjacent to each other in the input, we want our shunting yard to insert the missing * just as if it had been explicitly included. We will call * a “default operator,” as our next shunting yard will default to * if there is a missing infix operator.

shuntingYardFirstCut above has two places where it reports this as an error. Let’s modify it as follows: Whenever it encounters two values in succession, it will re-enqueue the default operator, re-enqueue the second value, and then proceed.

We’ll start with a way to denote which is the default operator, and then update our shunting yard code:4

const arithmeticB = {

operators: arithmetic.operators,

defaultOperator: '*'

}

function shuntingYardSecondCut (infixExpression, { operators, defaultOperator }) {

const operatorsMap = new Map(

Object.entries(operators)

);

const representationOf =

something => {

if (operatorsMap.has(something)) {

const { symbol } = operatorsMap.get(something);

return symbol;

} else if (typeof something === 'string') {

return something;

} else {

error(`${something} is not a value`);

}

};

const typeOf =

symbol => operatorsMap.has(symbol) ? operatorsMap.get(symbol).type : 'value';

const isInfix =

symbol => typeOf(symbol) === 'infix';

const isPrefix =

symbol => typeOf(symbol) === 'prefix';

const isPostfix =

symbol => typeOf(symbol) === 'postfix';

const isCombinator =

symbol => isInfix(symbol) || isPrefix(symbol) || isPostfix(symbol);

const awaitsValue =

symbol => isInfix(symbol) || isPrefix(symbol);

const input = infixExpression.split('');

const operatorStack = [];

const reversePolishRepresentation = [];

let awaitingValue = true;

while (input.length > 0) {

const symbol = input.shift();

if (symbol === '(' && awaitingValue) {

// opening parenthesis case, going to build

// a value

operatorStack.push(symbol);

awaitingValue = true;

} else if (symbol === '(') {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

} else if (symbol === ')') {

// closing parenthesis case, clear the

// operator stack

while (operatorStack.length > 0 && peek(operatorStack) !== '(') {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

}

if (peek(operatorStack) === '(') {

operatorStack.pop();

awaitingValue = false;

} else {

error('Unbalanced parentheses');

}

} else if (isPrefix(symbol)) {

if (awaitingValue) {

const { precedence } = operatorsMap.get(symbol);

// pop higher-precedence operators off the operator stack

while (isCombinator(symbol) && operatorStack.length > 0 && peek(operatorStack) !== '(') {

const opPrecedence = operatorsMap.get(peek(operatorStack)).precedence;

if (precedence < opPrecedence) {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

} else {

break;

}

}

operatorStack.push(symbol);

awaitingValue = awaitsValue(symbol);

} else {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

}

} else if (isCombinator(symbol)) {

const { precedence } = operatorsMap.get(symbol);

// pop higher-precedence operators off the operator stack

while (isCombinator(symbol) && operatorStack.length > 0 && peek(operatorStack) !== '(') {

const opPrecedence = operatorsMap.get(peek(operatorStack)).precedence;

if (precedence < opPrecedence) {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

} else {

break;

}

}

operatorStack.push(symbol);

awaitingValue = awaitsValue(symbol);

} else if (awaitingValue) {

// as expected, go straight to the output

reversePolishRepresentation.push(representationOf(symbol));

awaitingValue = false;

} else {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

}

}

// pop remaining symbols off the stack and push them

while (operatorStack.length > 0) {

const op = operatorStack.pop();

if (operatorsMap.has(op)) {

const { symbol: opSymbol } = operatorsMap.get(op);

reversePolishRepresentation.push(opSymbol);

} else {

error(`Don't know how to push operator ${op}`);

}

}

return reversePolishRepresentation;

}

verifyShunter(shuntingYardSecondCut, {

'3': [ '3' ],

'2+3': ['2', '3', arithmetic.operators['+'].symbol],

'4!': ['4', arithmetic.operators['!'].symbol],

'3*2+4!': ['3', '2', arithmetic.operators['*'].symbol, '4', arithmetic.operators['!'].symbol, arithmetic.operators['+'].symbol],

'(3*2+4)!': ['3', '2', arithmetic.operators['*'].symbol, '4', arithmetic.operators['+'].symbol, arithmetic.operators['!'].symbol],

'2(3+4)5': ['2', '3', '4', arithmeticB.operators['+'].symbol, '5', arithmeticB.operators['*'].symbol, arithmeticB.operators['*'].symbol],

'3!2': ['3', arithmeticB.operators['!'].symbol, '2', arithmeticB.operators['*'].symbol]

}, arithmeticB);

//=> All 7 tests passing

We now have enough to get started with evaluating the reverse-polish representation produced by our shunting yard.

evaluating the reverse-polish representation with a stack machine

Our first cut at the code for evaluating the reverse-polish representation produced by our shunting yard, will take the definition for operators as an argument, and it will also take a function for converting strings to values.

function stateMachine (representationList, {

operators,

toValue

}) {

const functions = new Map(

Object.entries(operators).map(

([key, { symbol, fn }]) => [symbol, fn]

)

);

const stack = [];

for (const element of representationList) {

if (typeof element === 'string') {

stack.push(toValue(element));

} else if (functions.has(element)) {

const fn = functions.get(element);

const arity = fn.length;

if (stack.length < arity) {

error(`Not enough values on the stack to use ${element}`)

} else {

const args = [];

for (let counter = 0; counter < arity; ++counter) {

args.unshift(stack.pop());

}

stack.push(fn.apply(null, args))

}

} else {

error(`Don't know what to do with ${element}'`)

}

}

if (stack.length === 0) {

return undefined;

} else if (stack.length > 1) {

error(`should only be one value to return, but there were ${stack.length} values on the stack`);

} else {

return stack[0];

}

}

We can then wire the shunting yard up to the postfix evaluator, to make a function that evaluates infix notation:

function evaluateFirstCut (expression, definition) {

return stateMachine(

shuntingYardSecondCut(

expression, definition

),

definition

);

}

const arithmeticC = {

operators: arithmetic.operators,

defaultOperator: '*',

toValue: string => Number.parseInt(string, 10)

};

verify(evaluateFirstCut, {

'': undefined,

'3': 3,

'2+3': 5,

'4!': 24,

'3*2+4!': 30,

'(3*2+4)!': 3628800,

'2(3+4)5': 70,

'3!2': 12

}, arithmeticC);

//=> All 8 tests passing

This extremely basic function for evaluates:

- infix expressions;

- with parentheses, and infix operators (naturally);

- with postfix operators;

- with a default operator that handles the case when values are catenated.

That is enough to begin work on compiling regular expressions to finite-state recognizers.

Finite-State Recognizers

If we’re going to compile regular expressions to finite-state recognizers, we need a representation for finite-state recognizers. There are many ways to notate finite-state automata. For example, state diagrams are particularly easy to read for smallish examples:

Of course, diagrams are not particularly easy to work with in JavaScript. If we want to write JavaScript algorithms that operate on finite-state recognizers, we need a language for describing finite-state recognizers that JavaScript is comfortable manipulating.

describing finite-state recognizers in JSON

We don’t need to invent a brand-new format, there is already an accepted formal definition. Mind you, it involves mathematical symbols that are unfamiliar to some programmers, so without dumbing it down, we will create our own language that is equivalent to the full formal definition, but expressed in a subset of JSON.

JSON has the advantage that it is a language in the exact sense we want: An ordered set of symbols.

Now what do we need to encode? Finite-state recognizers are defined as a quintuple of (Σ, S, s, ẟ, F), where:

Σis the alphabet of symbols this recognizer operates upon.Sis the set of states this recognizer can be in.sis the initial or “start” state of the recognizer.ẟis the recognizer’s “state transition function” that governs how the recognizer changes states while it consumes symbols from the sentence it is attempting to recognize.Fis the set of “final” states. If the recognizer is in one of these states when the input ends, it has recognized the sentence.

For our immediate purposes, we do not need to encode the alphabet of symbols, and the set of states can always be derived from the rest of the description, so we don’t need to encode that either. This leaves us with describing the start state, transition function, and set of final states.

We can encode these with JSON. We’ll use descriptive words rather than mathematical symbols, but note that if we wanted to use the mathematical symbols, everything we’re doing would work just as well.

Or JSON representation will represent the start state, transition function, and set of final states as a Plain Old JavaScript Object (or “POJO”), rather than an array. This makes it easier to document what each element means:

{

// elements...

}

The recognizer’s initial, or start state is required. It is a string representing the name of the initial state:

{

"start": "start"

}

The recognizer’s state transition function, ẟ, is represented as a set of transitions, encoded as a list of POJOs, each of which represents exactly one transition:

{

"transitions": [

]

}

Each transition defines a change in the recognizer’s state. Transitions are formally defined as triples of the form (p, a, q):

pis the state the recognizer is currently in.ais the input symbol consumed.qis the state the recognizer will be in after completing this transition. It can be the same asp, meaning that it consumes a symbol and remains in the same state.

We can represent this with POJOs. For readability by those unfamiliar with the formal notation, we will use the words from, consume, and to. This may feel like a lot of typing compared to the formal symbols, but we’ll get the computer do do our writing for us, and it doesn’t care.

Thus, one possible set of transitions might be encoded like this:

{

"transitions": [

{ "from": "start", "consume": "0", "to": "zero" },

{ "from": "start", "consume": "1", "to": "notZero" },

{ "from": "notZero", "consume": "0", "to": "notZero" },

{ "from": "notZero", "consume": "1", "to": "notZero" }

]

}

The recognizer’s set of final, or accepting states is required. It is encoded as a list of strings representing the names of the final states. If the recognizer is in any of the accepting (or “final”) states when the end of the sentence is reached (or equivalently, when there are no more symbols to consume), the recognizer accepts or “recognizes” the sentence.

{

"accepting": ["zero", "notZero"]

}

Putting it all together, we have:

const binary = {

"start": "start",

"transitions": [

{ "from": "start", "consume": "0", "to": "zero" },

{ "from": "start", "consume": "1", "to": "notZero" },

{ "from": "notZero", "consume": "0", "to": "notZero" },

{ "from": "notZero", "consume": "1", "to": "notZero" }

],

"accepting": ["zero", "notZero"]

}

Our representation translates directly to this simplified state diagram:

This finite-state recognizer recognizes binary numbers.

verifying finite-state recognizers

It’s all very well to say that a description recognizes binary numbers (or have any other expectation for it, really). But how do we have confidence that the finite-state recognizer we describe recognizes the language what we think it recognizes?

There are formal ways to prove things about recognizers, and there is the informal technique of writing tests we can run. Since we’re emphasizing working code, we’ll write tests.

Here is a function that takes as its input the definition of a recognizer, and returns a Javascript recognizer function:56

function automate (description) {

if (description instanceof RegExp) {

return string => !!description.exec(string)

} else {

const {

stateMap,

start,

acceptingSet,

transitions

} = validatedAndProcessed(description);

return function (input) {

let state = start;

for (const symbol of input) {

const transitionsForThisState = stateMap.get(state) || [];

const transition =

transitionsForThisState.find(

({ consume }) => consume === symbol

);

if (transition == null) {

return false;

}

state = transition.to;

}

// reached the end. do we accept?

return acceptingSet.has(state);

}

}

}

Here we are using automate with our definition for recognizing binary numbers. We’ll use the verify function throughout our exploration to build simple tests-by-example:

function verifyRecognizer (recognizer, examples) {

return verify(automate(recognizer), examples);

}

const binary = {

"start": "start",

"transitions": [

{ "from": "start", "consume": "0", "to": "zero" },

{ "from": "start", "consume": "1", "to": "notZero" },

{ "from": "notZero", "consume": "0", "to": "notZero" },

{ "from": "notZero", "consume": "1", "to": "notZero" }

],

"accepting": ["zero", "notZero"]

};

verifyRecognizer(binary, {

'': false,

'0': true,

'1': true,

'00': false,

'01': false,

'10': true,

'11': true,

'000': false,

'001': false,

'010': false,

'011': false,

'100': true,

'101': true,

'110': true,

'111': true,

'10100011011000001010011100101110111': true

});

//=> All 16 tests passing

We now have a function, automate, that takes a data description of a finite-state automaton/recognizer, and returns a Javascript recognizer function we can play with and verify.

Verifying recognizers will be extremely important when we want to verify that when we compile a regular expression to a finite-state recognizer, that the finite-state recognizer is equivalent to the regular expression.

Building Blocks

Regular expressions have a notation for the empty set, the empty string, and single characters:

- The symbol

∅describes the language with no sentences, also called “the empty set.” - The symbol

εdescribes the language containing only the empty string. - Literals such as

x,y, orzdescribe languages containing single sentences, containing single symbols. e.g. The literalrdescribes the languageR, which contains just one sentence:'r'.

In order to compile such regular expressions into finite-state recognizers, we begin by defining functions that return the empty language, the language containing only the empty string, and languages with just one sentence containing one symbol.

∅ and ε

Here’s a function that returns a recognizer that doesn’t recognize any sentences:

const names = (() => {

let i = 0;

return function * names () {

while (true) yield `G${++i}`;

};

})();

function emptySet () {

const [start] = names();

return {

start,

"transitions": [],

"accepting": []

};

}

verifyRecognizer(emptySet(), {

'': false,

'0': false,

'1': false

});

//=> All 3 tests passing

It’s called emptySet, because the the set of all sentences this language recognizes is empty. Note that while hand-written recognizers can have any arbitrary names for their states, we’re using the names generator to generate state names for us. This automatically avoid two recognizers ever having state names in common, which makes some of the code we write later a great deal simpler.

Now, how do we get our evaluator to handle it? Our evaluate function takes a definition object as a parameter, and that’s where we define operators. We’re going to define ∅ as an atomic operator.7

const regexA = {

operators: {

'∅': {

symbol: Symbol('∅'),

type: 'atomic',

fn: emptySet

}

},

// ...

};

Next, we need a recognizer that recognizes the language containing only the empty string, ''. Once again, we’ll write a function that returns a recognizer:

function emptyString () {

const [start] = names();

return {

start,

"transitions": [],

"accepting": [start]

};

}

verifyRecognizer(emptyString(), {

'': true,

'0': false,

'1': false

});

//=> All 3 tests passing

And then we’ll add it to the definition:

const regexA = {

operators: {

'∅': {

symbol: Symbol('∅'),

type: 'atomic',

fn: emptySet

},

'ε': {

symbol: Symbol('ε'),

type: 'atomic',

fn: emptyString

}

},

// ...

};

literal

What makes recognizers really useful is recognizing non-empty strings of one kind or another. This use case is so common, regexen are designed to make recognizing strings the easiest thing to write. For example, to recognize the string abc, we write /^abc$/:

verify(/^abc$/, {

'': false,

'a': false,

'ab': false,

'abc': true,

'_abc': false,

'_abc_': false,

'abc_': false

})

//=> All 7 tests passing

Here’s an example of a recognizer that recognizes a single zero:

We could write a function that returns a recognizer for 0, and then write another a for every other symbol we might want to use in a recognizer, and then we could assign them all to atomic operators, but this would be tedious. Instead, here’s a function that makes recognizers that recognize a literal symbol:

function literal (symbol) {

return {

"start": "empty",

"transitions": [

{ "from": "empty", "consume": symbol, "to": "recognized" }

],

"accepting": ["recognized"]

};

}

verifyRecognizer(literal('0'), {

'': false,

'0': true,

'1': false,

'01': false,

'10': false,

'11': false

});

//=> All 6 tests passing

Now clearly, this cannot be an atomic operator. But recall that our function for evaluating postfix expressions has a special function, toValue, for translating strings into values. In a calculator, the values were integers. In our compiler, the values are finite-state recognizers.

Our approach to handling constant literals will be to use toValue to perform the translation for us:

const regexA = {

operators: {

'∅': {

symbol: Symbol('∅'),

type: 'atomic',

fn: emptySet

},

'ε': {

symbol: Symbol('ε'),

type: 'atomic',

fn: emptyString

}

},

defaultOperator: undefined,

toValue (string) {

return literal(string);

}

};

using ∅, ε, and literal

Now that we have defined operators for ∅ and ε, and now that we have written toValue to use literal, we can use evaluate to generate recognizers from the most basic of regular expressions:

const emptySetRecognizer = evaluate(`∅`, regexA);

const emptyStringRecognizer = evaluate(`ε`, regexA);

const rRecognizer = evaluate('r', regexA);

verifyRecognizer(emptySetRecognizer, {

'': false,

'0': false,

'1': false

});

//=> All 3 tests passing

verifyRecognizer(emptyStringRecognizer, {

'': true,

'0': false,

'1': false

});

//=> All 3 tests passing

verifyRecognizer(rRecognizer, {

'': false,

'r': true,

'R': false,

'reg': false,

'Reg': false

});

//=> All 5 tests passing

We’ll do this enough that it’s worth building a helper for verifying our work:

function verifyEvaluateFirstCut (expression, definition, examples) {

return verify(

automate(evaluateFirstCut(expression, definition)),

examples

);

}

verifyEvaluateFirstCut('∅', regexA, {

'': false,

'0': false,

'1': false

});

//=> All 3 tests passing

verifyEvaluateFirstCut(`ε`, regexA, {

'': true,

'0': false,

'1': false

});

//=> All 3 tests passing

verifyEvaluateFirstCut('r', regexA, {

'': false,

'r': true,

'R': false,

'reg': false,

'Reg': false

});

//=> All 5 tests passing

Great! We have something to work with, namely constants. Before we get to building expressions using operators and so forth, let’s solve the little problem we hinted at when making ∅ and ε into operators.

recognizing special characters

There is a bug in our code so far. Or rather, a glaring omission: How do we write a recognizer that recognizes the characters ∅ or ε?

This is not really necessary for demonstrating the general idea that we can compile any regular expression into a finite-state recognizer, but once we start adding operators like * and ?, not to mention extensions like + or ?, the utility of our demonstration code will fall dramatically.

Now we’ve already made ∅ and ε into atomic operators, so now the question becomes, how do we write a regular expression with literal ∅ or ε characters in it? And not to mention, literal parentheses?

Let’s go with the most popular approach, and incorporate an escape symbol. In most languages, including regexen, that symbol is a \. We could do the same, but JavaScript already interprets \ as an escape, so our work would be littered with double backslashes to get JavaScript to recognize a single \.

We’ll set it up so that we can choose whatever we like, but by default we’ll use a back-tick:

function shuntingYard (

infixExpression,

{

operators,

defaultOperator,

escapeSymbol = '`',

escapedValue = string => string

}

) {

const operatorsMap = new Map(

Object.entries(operators)

);

const representationOf =

something => {

if (operatorsMap.has(something)) {

const { symbol } = operatorsMap.get(something);

return symbol;

} else if (typeof something === 'string') {

return something;

} else {

error(`${something} is not a value`);

}

};

const typeOf =

symbol => operatorsMap.has(symbol) ? operatorsMap.get(symbol).type : 'value';

const isInfix =

symbol => typeOf(symbol) === 'infix';

const isPrefix =

symbol => typeOf(symbol) === 'prefix';

const isPostfix =

symbol => typeOf(symbol) === 'postfix';

const isCombinator =

symbol => isInfix(symbol) || isPrefix(symbol) || isPostfix(symbol);

const awaitsValue =

symbol => isInfix(symbol) || isPrefix(symbol);

const input = infixExpression.split('');

const operatorStack = [];

const reversePolishRepresentation = [];

let awaitingValue = true;

while (input.length > 0) {

const symbol = input.shift();

if (symbol === escapeSymbol) {

if (input.length === 0) {

error('Escape symbol ${escapeSymbol} has no following symbol');

} else {

const valueSymbol = input.shift();

if (awaitingValue) {

// push the escaped value of the symbol

reversePolishRepresentation.push(escapedValue(valueSymbol));

} else {

// value catenation

input.unshift(valueSymbol);

input.unshift(escapeSymbol);

input.unshift(defaultOperator);

}

awaitingValue = false;

}

} else if (symbol === '(' && awaitingValue) {

// opening parenthesis case, going to build

// a value

operatorStack.push(symbol);

awaitingValue = true;

} else if (symbol === '(') {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

} else if (symbol === ')') {

// closing parenthesis case, clear the

// operator stack

while (operatorStack.length > 0 && peek(operatorStack) !== '(') {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

}

if (peek(operatorStack) === '(') {

operatorStack.pop();

awaitingValue = false;

} else {

error('Unbalanced parentheses');

}

} else if (isPrefix(symbol)) {

if (awaitingValue) {

const { precedence } = operatorsMap.get(symbol);

// pop higher-precedence operators off the operator stack

while (isCombinator(symbol) && operatorStack.length > 0 && peek(operatorStack) !== '(') {

const opPrecedence = operatorsMap.get(peek(operatorStack)).precedence;

if (precedence < opPrecedence) {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

} else {

break;

}

}

operatorStack.push(symbol);

awaitingValue = awaitsValue(symbol);

} else {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

}

} else if (isCombinator(symbol)) {

const { precedence } = operatorsMap.get(symbol);

// pop higher-precedence operators off the operator stack

while (isCombinator(symbol) && operatorStack.length > 0 && peek(operatorStack) !== '(') {

const opPrecedence = operatorsMap.get(peek(operatorStack)).precedence;

if (precedence < opPrecedence) {

const op = operatorStack.pop();

reversePolishRepresentation.push(representationOf(op));

} else {

break;

}

}

operatorStack.push(symbol);

awaitingValue = awaitsValue(symbol);

} else if (awaitingValue) {

// as expected, go straight to the output

reversePolishRepresentation.push(representationOf(symbol));

awaitingValue = false;

} else {

// value catenation

input.unshift(symbol);

input.unshift(defaultOperator);

awaitingValue = false;

}

}

// pop remaining symbols off the stack and push them

while (operatorStack.length > 0) {

const op = operatorStack.pop();

if (operatorsMap.has(op)) {

const { symbol: opSymbol } = operatorsMap.get(op);

reversePolishRepresentation.push(opSymbol);

} else {

error(`Don't know how to push operator ${op}`);

}

}

return reversePolishRepresentation;

}

function evaluate (expression, definition) {

return stateMachine(

shuntingYard(

expression, definition

),

definition

);

}

And now to test it:

function verifyEvaluate (expression, definition, examples) {

return verify(

automate(evaluate(expression, definition)),

examples

);

}

verifyEvaluate('∅', regexA, {

'': false,

'∅': false,

'ε': false

});

//=> All 3 tests passing

verifyEvaluate('`∅', regexA, {

'': false,

'∅': true,

'ε': false

});

//=> All 3 tests passing

verifyEvaluate('ε', regexA, {

'': true,

'∅': false,

'ε': false

});

//=> All 3 tests passing

verifyEvaluate('`ε', regexA, {

'': false,

'∅': false,

'ε': true

});

//=> All 3 tests passing

And now it’s time for what we might call the main event: Expressions that use operators.

Composeable recognizers and patterns are particularly interesting. Just as human languages are built by layers of composition, all sorts of mechanical languages are structured using composition. JSON is a perfect example: A JSON element like a list is composed of zero or more arbitrary JSON elements, which themselves could be lists, and so forth.

Regular expressions and regexen are both built with composition. If you have two regular expressions, a and b, you can create a new regular expression that is the union of a and b with the expression a|b, and you can create a new regular expression that is the catenation of a and b with ab.

Our evaluate functions don’t know how to do that, and we aren’t going to update them to try. Instead, we’ll write combinator functions that take two recognizers and return the finite-state recognizer representing the alternation, or catenation of their arguments.

We’ll begin with alternation.

Alternating Regular Expressions

So far, we only have recognizers for the empty set, the empty string, and any one character. Nevertheless, we will build alternation to handle any two recognizers, because that’s exactly how the rules of regular expressions defines it:

- The expression x

|y describes to the union of the languagesXandY, meaning, the sentencewbelongs tox|yif and only ifwbelongs to the languageXorwbelongs to the languageY. We can also say that x|y represents the alternation of x and y.

We’ll get started with a function that computes the union of the descriptions of two finite-state recognizers, which is built on a very useful operation, taking the product of two finite-state automata.

Taking the Product of Two Finite-State Automata

Consider two finite-state recognizers. The first, a, recognizes a string of one or more zeroes:

The second, b, recognizes a string of one or more ones:

Recognizer a has two declared states: 'empty' and 'zero'. Recognizer b also has two declared states: 'empty' and 'one'. Both also have an undeclared state: they can halt. As a convention, we will refer to the halted state as an empty string, ''.

Thus, recognizer a has three possible states: 'empty', 'zero', and ''. Likewise, recognizer b has three possible states: 'empty', 'one', and ''.

Now let us imagine the two recognizers are operating concurrently on the same stream of symbols:

At any one time, there are nine possible combinations of states the two machines could be in:

| a | b |

|---|---|

'' |

'' |

'' |

'emptyB' |

'' |

'one' |

'emptyA' |

'' |

'emptyA' |

'emptyB' |

'emptyA' |

'one' |

'zero' |

'' |

'zero' |

'emptyB' |

'zero' |

'one' |

If we wish to simulate the actions of the two recognizers operating concurrently, we could do so if we had a finite-state automaton with nine states, one for each of the pairs of states that a and b could be in.

It will look something like this:

The reason this is called the product of a and b, is that when we take the product of the sets { '', 'emptyA', 'zero' } and {'', 'emptyB', 'one' } is the set of tuples { ('', ''), ('', 'emptyB'), ('', 'one'), ('emptyA', ''), ('emptyA', 'emptyB'), ('emptyA', 'one'), ('zero', ''), ('zero', 'emptyB'), ('zero', 'one')}.

There will be (at most) one set in the product state machine for each tuple in the product of the sets of states for a and b.

We haven’t decided where such an automaton would start, how it transitions between its states, and which states should be accepting states. We’ll go through those in that order.

starting the product

Now let’s consider a and b simultaneously reading the same string of symbols in parallel. What states would they respectively start in? emptyA and emptyB, of course, therefore our product will begin in state5, which corresponds to emptyA and emptyB:

This is a rule for constructing products: The product of two recognizers begins in the state corresponding to the start state for each of the recognizers.

transitions

Now let’s think about our product automaton. It begins in state5. What transitions can it make from there? We can work that out by looking at the transitions for emptyA and emptyB.

Given that the product is in a state corresponding to a being in state Fa and b being in state Fb, We’ll follow these rules for determining the transitions from the state (Fa and Fb):

- If when

ais in state Fa it consumes a symbol S and transitions to state Ta, but whenbis in state Fb it does not consume the symbol S, then the product ofaandbwill consume S and transition to the state (Ta and''), denoting that were the two recognizers operating concurrently,awould transition to state Ta whilebwould halt. - If when

ais in state Fa it does not consume a symbol S, but whenbis in state Fb it consumes the symbol S and transitions to state Tb, then the product ofaandbwill consume S and transition to (''and Tb), denoting that were the two recognizers operating concurrently,awould halt whilebwould transition to state Tb. - If when

ais in state Fa it consumes a symbol S and transitions to state Ta, and also if whenbis in state Fb it consumes the symbol S and transitions to state Tb, then the product ofaandbwill consume S and transition to (Ta and Tb), denoting that were the two recognizers operating concurrently,awould transition to state Ta whilebwould transition to state Tb.

When our product is in state 'state5', it corresponds to the states ('emptyA' and 'emptyB'). Well, when a is in state 'emptyA', it consumes 0 and transitions to 'zero', but when b is in 'emptyB', it does not consume 0.

Therefore, by rule 1, when the product is in state 'state5' corresponding to the states ('emptyA' and 'emptyB'), it consumes 0 and transitions to 'state7' corresponding to the states ('zero' and ''):

And by rule 2, when the product is in state 'state5' corresponding to the states ('emptyA' and 'emptyB'), it consumes 1 and transitions to 'state3', corresponding to the states ('' and 'one'):

What transitions take place from state 'state7'? b is halted in 'state7', and therefore b doesn’t consume any symbols in 'state7', and therefore we can apply rule 1 to the case where a consumes a 0 from state 'zero' and transitions to state 'zero':

We can always apply rule 1 to any state where b is halted, and it follows that all of the transitions from a state where b is halted will lead to states where b is halted. Now what about state 'state3'?

Well, by similar logic, since a is halted in state 'state3', and b consumes a 1 in state 'one' and transitions back to state 'one', we apply rule 2 and get:

We could apply our rules to the other six states, but we don’t need to: The states 'state2', 'state4', 'state6', 'state8', and 'state9' are unreachable from the starting state 'state5'.

And 'state1 need not be included: When both a and b halt, then the product of a and b also halts. So we can leave it out.

Thus, if we begin with the start state and then recursively follow transitions, we will automatically end up with the subset of all possible product states that are reachable given the transitions for a and b.

a function to compute the product of two recognizers

Here is a function that takes the product of two recognizers:

// A state aggregator maps a set of states

// (such as the two states forming part of the product

// of two finite-state recognizers) to a new state.

class StateAggregator {

constructor () {

this.map = new Map();

this.inverseMap = new Map();

}

stateFromSet (...states) {

const materialStates = states.filter(s => s != null);

if (materialStates.some(ms=>this.inverseMap.has(ms))) {

error(`Surprise! Aggregating an aggregate!!`);

}

if (materialStates.length === 0) {

error('tried to get an aggregate state name for no states');

} else if (materialStates.length === 1) {

// do not need a new state name

return materialStates[0];

} else {

const key = materialStates.sort().map(s=>`(${s})`).join('');

if (this.map.has(key)) {

return this.map.get(key);

} else {

const [newState] = names();

this.map.set(key, newState);

this.inverseMap.set(newState, new Set(materialStates));

return newState;

}

}

}

setFromState (state) {

if (this.inverseMap.has(state)) {

return this.inverseMap.get(state);

} else {

return new Set([state]);

}

}

}

function product (a, b, P = new StateAggregator()) {

const {

stateMap: aStateMap,

start: aStart

} = validatedAndProcessed(a);

const {

stateMap: bStateMap,

start: bStart

} = validatedAndProcessed(b);

// R is a collection of states "remaining" to be analyzed

// it is a map from the product's state name to the individual states

// for a and b

const R = new Map();

// T is a collection of states already analyzed

// it is a map from a product's state name to the transitions

// for that state

const T = new Map();

// seed R

const start = P.stateFromSet(aStart, bStart);

R.set(start, [aStart, bStart]);

while (R.size > 0) {

const [[abState, [aState, bState]]] = R.entries();

const aTransitions = aState != null ? (aStateMap.get(aState) || []) : [];

const bTransitions = bState != null ? (bStateMap.get(bState) || []) : [];

let abTransitions = [];

if (T.has(abState)) {

error(`Error taking product: T and R both have ${abState} at the same time.`);

}

if (aTransitions.length === 0 && bTransitions.length == 0) {

// dead end for both

// will add no transitions

// we put it in T just to avoid recomputing this if it's referenced again

T.set(abState, []);

} else if (aTransitions.length === 0) {

const aTo = null;

abTransitions = bTransitions.map(

({ consume, to: bTo }) => ({ from: abState, consume, to: P.stateFromSet(aTo, bTo), aTo, bTo })

);

} else if (bTransitions.length === 0) {

const bTo = null;

abTransitions = aTransitions.map(

({ consume, to: aTo }) => ({ from: abState, consume, to: P.stateFromSet(aTo, bTo), aTo, bTo })

);

} else {

// both a and b have transitions

const aConsumeToMap =

aTransitions.reduce(

(acc, { consume, to }) => (acc.set(consume, to), acc),

new Map()

);

const bConsumeToMap =

bTransitions.reduce(

(acc, { consume, to }) => (acc.set(consume, to), acc),

new Map()

);

for (const { from, consume, to: aTo } of aTransitions) {

const bTo = bConsumeToMap.has(consume) ? bConsumeToMap.get(consume) : null;

if (bTo != null) {

bConsumeToMap.delete(consume);

}

abTransitions.push({ from: abState, consume, to: P.stateFromSet(aTo, bTo), aTo, bTo });

}

for (const [consume, bTo] of bConsumeToMap.entries()) {

const aTo = null;

abTransitions.push({ from: abState, consume, to: P.stateFromSet(aTo, bTo), aTo, bTo });

}

}

T.set(abState, abTransitions);

for (const { to, aTo, bTo } of abTransitions) {

// more work remaining?

if (!T.has(to) && !R.has(to)) {

R.set(to, [aTo, bTo]);

}

}

R.delete(abState);

}

const accepting = [];

const transitions =

[...T.values()].flatMap(

tt => tt.map(

({ from, consume, to }) => ({ from, consume, to })

)

);

return { start, accepting, transitions };

}

We can test it with out a and b:

const a = {

"start": 'emptyA',

"accepting": ['zero'],

"transitions": [

{ "from": 'emptyA', "consume": '0', "to": 'zero' },

{ "from": 'zero', "consume": '0', "to": 'zero' }

]

};

const b = {

"start": 'emptyB',

"accepting": ['one'],

"transitions": [

{ "from": 'emptyB', "consume": '1', "to": 'one' },

{ "from": 'one', "consume": '1', "to": 'one' }

]

};

product(a, b)

//=>

{

"start": "G41",

"transitions": [

{ "from": "G41", "consume": "0", "to": "G42" },

{ "from": "G41", "consume": "1", "to": "G43" },

{ "from": "G42", "consume": "0", "to": "G42" },

{ "from": "G43", "consume": "1", "to": "G43" }

],

"accepting": []

}

It doesn’t actually accept anything, so it’s not much of a recognizer. Yet.

From Product to Union

We know how to compute the product of two recognizers, and we see how the product actually simulates having two recognizers simultaneously consuming the same symbols. But what we want is to compute the union of the recognizers.

So let’s consider our requirements. When we talk about the union of a and b, we mean a recognizer that recognizes any sentence that a recognizes, or any sentence that b recognizes.

If the two recognizers were running concurrently, we would want to accept a sentence if a ended up in one of its recognizing states or if b ended up in one of its accepting states. How does this translate to the product’s states?

Well, each state of the product represents one state from a and one state from b. If there are no more symbols to consume and the product is in a state where the state from a is in a’s set of accepting states, then this is equivalent to a having accepted the sentence. Likewise, if there are no more symbols to consume and the product is in a state where the state from b is in b’s set of accepting states, then this is equivalent to b having accepted the sentence.

In theory, then, for a and b, the following product states represent the union of a and b:

| a | b |

|---|---|

'' |

'one' |

'emptyA' |

'one' |

'zero' |

'' |

'zero' |

'emptyB' |

'zero' |

'one' |

Of course, only two of these ('zero' and '', '' and 'one') are reachable, so those are the ones we want our product to accept when we want the union of two recognizers.

Here’s a union function that makes use of product and some of the helpers we’ve already written:

function union2 (a, b) {

const {

states: aDeclaredStates,

accepting: aAccepting

} = validatedAndProcessed(a);

const aStates = [null].concat(aDeclaredStates);

const {

states: bDeclaredStates,

accepting: bAccepting

} = validatedAndProcessed(b);

const bStates = [null].concat(bDeclaredStates);

// P is a mapping from a pair of states (or any set, but in union2 it's always a pair)

// to a new state representing the tuple of those states

const P = new StateAggregator();

const productAB = product(a, b, P);

const { start, transitions } = productAB;

const statesAAccepts =

aAccepting.flatMap(

aAcceptingState => bStates.map(bState => P.stateFromSet(aAcceptingState, bState))

);

const statesBAccepts =

bAccepting.flatMap(

bAcceptingState => aStates.map(aState => P.stateFromSet(aState, bAcceptingState))

);

const allAcceptingStates =

[...new Set([...statesAAccepts, ...statesBAccepts])];

const { stateSet: reachableStates } = validatedAndProcessed(productAB);

const accepting = allAcceptingStates.filter(state => reachableStates.has(state));

return { start, accepting, transitions };

}

And when we try it:

union2(a, b)

//=>

{

"start": "G41",

"transitions": [

{ "from": "G41", "consume": "0", "to": "G42" },

{ "from": "G41", "consume": "1", "to": "G43" },

{ "from": "G42", "consume": "0", "to": "G42" },

{ "from": "G43", "consume": "1", "to": "G43" }

],

"accepting": [ "G42", "G43" ]

}

Now we can incorporate union2 as an operator:

const regexB = {

operators: {

'∅': {

symbol: Symbol('∅'),

type: 'atomic',

fn: emptySet

},

'ε': {

symbol: Symbol('ε'),

type: 'atomic',

fn: emptyString

},

'|': {

symbol: Symbol('|'),

type: 'infix',

precedence: 10,

fn: union2

}

},

defaultOperator: undefined,

toValue (string) {

return literal(string);

}

};

verifyEvaluate('a', regexB, {

'': false,

'a': true,

'A': false,

'aa': false,

'AA': false

});

//=> All 5 tests passing

verifyEvaluate('A', regexB, {

'': false,

'a': false,

'A': true,

'aa': false,

'AA': false

});

//=> All 5 tests passing

verifyEvaluate('a|A', regexB, {

'': false,

'a': true,

'A': true,

'aa': false,

'AA': false

});

//=> All 5 tests passing

We’re ready to work on catenation now, but before we do, a digression about product.

the marvellous product

Taking the product of two recognizers is a general-purpose way of simulating the effect of running two recognizers in parallel on the same input. In the case of union(a, b), we obtained product(a, b), and then selected as accepting states, all those states where either a or b reached an accepting state.

But what other ways could we determine the accepting state of the result?

If we accept all those states where both a and b reached an accepting state, we have computed the intersection of a and b. intersection is not a part of formal regular expressions or of most regexen, but it can be useful and we will see later how to add it as an operator.

If we accept all those states where a reaches an accepting state but b does not, we have computed the difference between a and b. This can also be used for implementing regex lookahead features, but this time for negative lookaheads.

We could even compute all those states where either a or b reach an accepting state, but not both. This would compute the disjunction of the two recognizers.

We’ll return to some of these other uses for product after we satisfy ourselves that we can generate a finite-state recognizer for any formal regular expression we like.

Catenating Regular Expressions

And now we turn our attention to catenating descriptions. Let’s begin by informally defining what we mean by “catenating descriptions:”

Given two recognizers, a and b, the catenation of a and b is a recognizer that recognizes a sentence AB, if and only if A is a sentence recognized by a and B is a sentence recognized by b.

Catenation is very common in composing patterns. It’s how we formally define recognizers that recognize things like “the function keyword, followed by optional whitespace, followed by an optional label, followed by optional whitespace, followed by an open parenthesis, followed by…” and so forth.

A hypothetical recognizer for JavaScript function expressions would be composed by catenating recognizers for keywords, optional whitespace, labels, parentheses, and so forth.

catenating descriptions with epsilon-transitions

Our finite-state automata are very simple: They are deterministic, meaning that in every state, there is one and only one transition for each unique symbol. And they always consume a symbol when they transition.

Some finite-state automata relax the second constraint. They allow a transition between states without consuming a symbol. If a transition with a symbol to be consumed is like an “if statement,” a transition without a symbol to consume is like a “goto.”

Such transitions are called “ε-transitions,” or “epsilon transitions” for those who prefer to avoid greek letters. As we’ll see, ε-transitions do not add any power to finite-state automata, but they do sometimes help make diagrams a little easier to understand and formulate.

Recall our recognizer that recognizes variations on the name “reg.” Here it is as a diagram:

And here is the diagram for a recognizer that recognizes one or more exclamation marks:

The simplest way to catenate recognizers is to put all their states together in one big diagram, and create an ε-transition between the accepting states for the first recognizer, and the start state of the second. The start state of the first recognizer becomes the start state of the result, and the accepting states of the second recognizer become the accepting state of the result.

Like this:

Here’s a function to catenate any two recognizers, using ε-transitions:

function epsilonCatenate (a, b) {

const joinTransitions =

a.accepting.map(

from => ({ from, to: b.start })

);

return {

start: a.start,

accepting: b.accepting,

transitions:

a.transitions

.concat(joinTransitions)

.concat(b.transitions)

};

}

epsilonCatenate(reg, exclamations)

//=>

{

"start": "empty",

"accepting": [ "bang" ],

"transitions": [

{ "from": "empty", "consume": "r", "to": "r" },

{ "from": "empty", "consume": "R", "to": "r" },

{ "from": "r", "consume": "e", "to": "re" },

{ "from": "r", "consume": "E", "to": "re" },

{ "from": "re", "consume": "g", "to": "reg" },

{ "from": "re", "consume": "G", "to": "reg" },

{ "from": "reg", "to": "empty2" },

{ "from": "empty2", "to": "bang", "consume": "!" },

{ "from": "bang", "to": "bang", "consume": "!" }

]

}

Of course, our engine for finite-state recognizers doesn’t actually implement ε-transitions. We could add that as a feature, but instead, let’s look at an algorithm for removing ε-transitions from finite-state machines.

removing epsilon-transitions

To remove an ε-transition between any two states, we start by taking all the transitions in the destination state, and copy them into the origin state. Next, if the destination state is an accepting state, we make the origin state an accepting state as well.

We then can remove the ε-transition without changing the recognizer’s behaviour. In our catenated recognizer, we have an ε-transition between the reg and empty states:

The empty state has one transition, from empty to bang, while consuming !. If we copy that into reg, we get:

Since empty is not an accepting state, we do not need to make reg an accepting state, so we are done removing this ε-transition. We repeat this process for all ε-transitions, in any order we like, until there are no more ε-transitions. In this case, there only was one, so the result is:

This is clearly a recognizer that recognizes the name “reg” followed by one or more exclamation marks. Our catenation algorithm has two steps. In the first, we create a recognizer with ε-transitions:

- Connect the two recognizers with an ε-transition from each accepting state from the first recognizer to the start state from the second recognizer.

- The start state of the first recognizer becomes the start state of the catenated recognizers.

- The accepting states of the second recognizer become the accepting states of the catenated recognizers.

This transformation complete, we can then remove the ε-transitions. For each ε-transition between an origin and destination state:

- Copy all of the transitions from the destination state into the origin state.

- If the destination state is an accepting state, make the origin state an accepting state as well.

- Remove the ε-transition.

(Following this process, we sometimes wind up with unreachable states. In our example above, empty becomes unreachable after removing the ε-transition. This has no effect on the behaviour of the recognizer, and in the next section, we’ll see how to prune those unreachable states.)

implementing catenation

Here’s a function that implements the steps described above: It takes any finite-state recognizer, and removes all of the ε-transitions, returning an equivalent finite-state recognizer without ε-transitions.

There’s code to handle cases we haven’t discussed–like ε-transitions between a state and itself, and loops in epsilon transitions (bad!)–but at its heart, it just implements the simple algorithm we just described.

function removeEpsilonTransitions ({ start, accepting, transitions }) {

const acceptingSet = new Set(accepting);

const transitionsWithoutEpsilon =

transitions

.filter(({ consume }) => consume != null);

const stateMapWithoutEpsilon = toStateMap(transitionsWithoutEpsilon);

const epsilonMap =

transitions

.filter(({ consume }) => consume == null)

.reduce(

(acc, { from, to }) => {

const toStates = acc.has(from) ? acc.get(from) : new Set();

toStates.add(to);

acc.set(from, toStates);

return acc;

},

new Map()

);

const epsilonQueue = [...epsilonMap.entries()];

const epsilonFromStatesSet = new Set(epsilonMap.keys());

const outerBoundsOnNumberOfRemovals = transitions.length;

let loops = 0;

while (epsilonQueue.length > 0 && loops++ <= outerBoundsOnNumberOfRemovals) {

let [epsilonFrom, epsilonToSet] = epsilonQueue.shift();

const allEpsilonToStates = [...epsilonToSet];

// special case: We can ignore self-epsilon transitions (e.g. a-->a)

const epsilonToStates = allEpsilonToStates.filter(

toState => toState !== epsilonFrom

);

// we defer resolving destinations that have epsilon transitions

const deferredEpsilonToStates = epsilonToStates.filter(s => epsilonFromStatesSet.has(s));

if (deferredEpsilonToStates.length > 0) {

// defer them

epsilonQueue.push([epsilonFrom, deferredEpsilonToStates]);

} else {

// if nothing to defer, remove this from the set

epsilonFromStatesSet.delete(epsilonFrom);

}

// we can immediately resolve destinations that themselves don't have epsilon transitions

const immediateEpsilonToStates = epsilonToStates.filter(s => !epsilonFromStatesSet.has(s));

for (const epsilonTo of immediateEpsilonToStates) {

const source =

stateMapWithoutEpsilon.get(epsilonTo) || [];

const potentialToMove =

source.map(

({ consume, to }) => ({ from: epsilonFrom, consume, to })

);

const existingTransitions = stateMapWithoutEpsilon.get(epsilonFrom) || [];

// filter out duplicates