This content originally appeared on Google Developers Blog and was authored by Google Developers

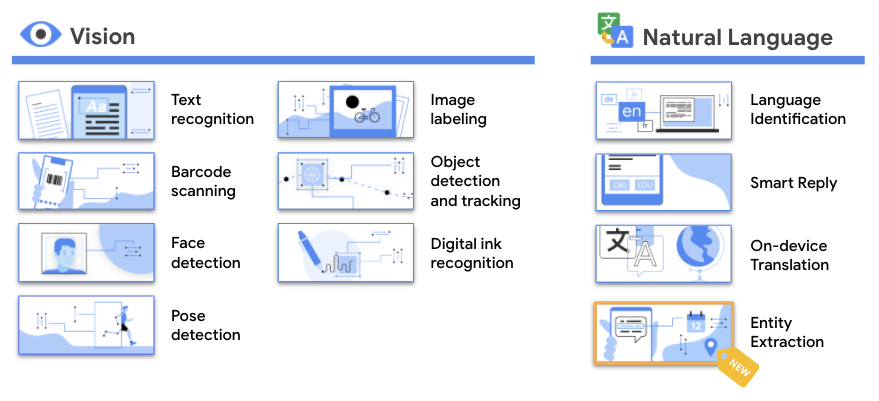

Six months ago, we introduced the standalone version of the ML Kit SDK, making it even easier to integrate on-device machine learning into mobile apps. Since then, we’ve launched the Digital Ink Recognition and Pose Detection APIs, and also introduced the ML Kit early access program. Today we are excited to add Entity Extraction to the official ML Kit lineup and also debut a new API for our early access program, Selfie Segmentation!

With ML Kit’s Entity Extraction API, you can now improve the user experience inside your app by understanding text and performing specific actions on it.

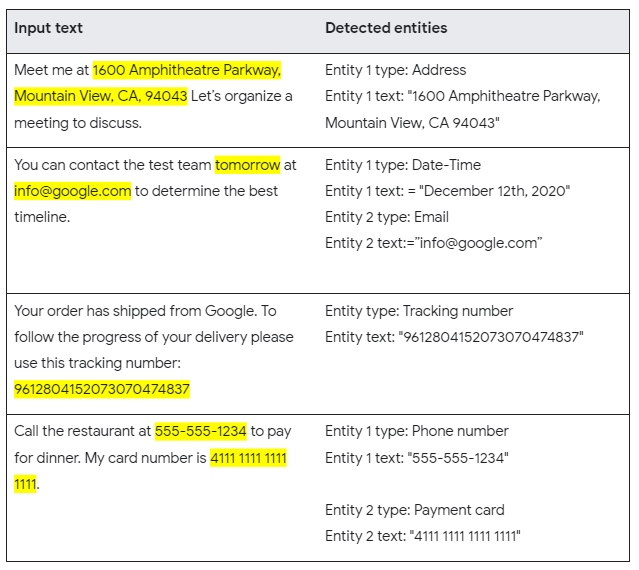

The Entity Extraction API allows you to detect and locate entities in raw text and take action based on those entities. The API works on static text and also in real time while a user is typing. It supports 11 entity types and 15 languages (with more coming in the future), so developers can make any text interaction a richer experience for the user.

Supported Entities

- Address (350 third street, cambridge)

- Date-Time* (12/12/2020, tomorrow at 3pm) (let's meet tomorrow at 6pm)

- Email (entity-extraction@google.com)

- Flight Number* (LX37)

- IBAN* (CH52 0483 0000 0000 0000 9)

- ISBN* (978-1101904190)

- Money (including currency)* ($12, 25USD)

- Payment Card* (4111 1111 1111 1111)

- Phone Number ((555) 225-3556, 12345)

- Tracking Number* (1Z204E380338943508)

- URL (www.google.com, https://en.wikipedia.org/wiki/Platypus, seznam.cz)

Real World Applications

(Images courtesy of TamTam)

Our early access partner, TamTam, has been using the Entity Extraction API to provide helpful suggestions to their users during their chat conversations. This feature allows users to quickly perform actions based on the context of their conversations.

While integrating this API, Iurii Dorofeev, Head of TamTam Android Development, mentioned, “We appreciated the ease of integration of the ML Kit ... and it works offline. Clustering the content of messages right on the device allowed us to save resources. ML Kit capabilities will help us develop other features for TamTam messenger in the future.”

Check out their messaging app on Google Play and the App Store today.

Under The Hood

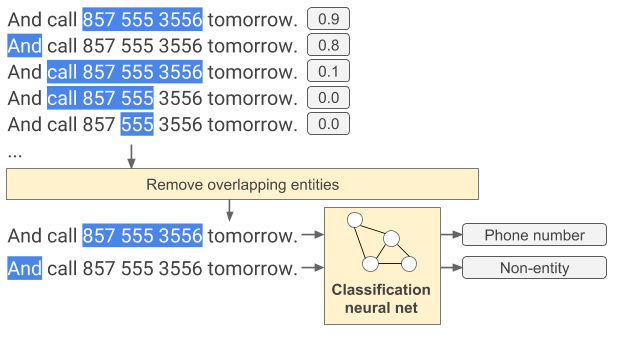

(Diagram of underlying Text Classifier API)

ML Kit’s Entity Extraction API builds upon the technology powering the Smart Linkify feature in Android 10+ to deliver an easy-to-use and streamlined experience for developers. For an in-depth review of the Text Classifier API, please see our blog post here.

The neural network annotators/models in the Entity Extraction API work as follows. A given input text is first split into words (based on space separation). Next, all possible word subsequences up to a certain maximum length (15 words in the example above) are generated. For each candidate, the scoring neural net then assigns a value between 0 and 1 based on whether it represents a valid entity.

Next, the generated entities that overlap are removed, favoring the ones with a higher score over the conflicting ones with a lower score. Then a second neural network is used to classify the type of the entity as a phone number, an address, or in some cases, a non-entity.
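The candidate generation, scoring, and overlap removal steps described above can be sketched as follows. This is a minimal illustration, not the ML Kit implementation: `toy_score` is a hypothetical regex-based stand-in for the scoring neural net, the `threshold` parameter is an assumption that does not appear in the description, and the second classification network is not modeled at all.

```python
import re
from typing import Callable, List, Tuple

Span = Tuple[int, int, float]  # (start word index, end word index exclusive, score)


def candidate_spans(words: List[str], max_len: int) -> List[Tuple[int, int]]:
    """Enumerate every contiguous word subsequence of at most max_len words."""
    return [
        (start, end)
        for start in range(len(words))
        for end in range(start + 1, min(start + max_len, len(words)) + 1)
    ]


def toy_score(candidate: List[str]) -> float:
    """Hypothetical stand-in for the scoring neural net; returns a value in [0, 1]."""
    text = " ".join(candidate)
    if re.fullmatch(r"\(\d{3}\) \d{3}-\d{4}", text):
        return 0.95  # looks like a complete phone number
    if re.fullmatch(r"\d{3}-\d{4}", text):
        return 0.60  # looks like only part of a phone number
    return 0.05


def remove_overlaps(spans: List[Span]) -> List[Span]:
    """Greedily keep the highest-scoring spans, dropping any that overlap a kept one."""
    kept: List[Span] = []
    for start, end, score in sorted(spans, key=lambda s: s[2], reverse=True):
        if all(end <= k_start or start >= k_end for k_start, k_end, _ in kept):
            kept.append((start, end, score))
    return sorted(kept)


def extract(
    text: str,
    score: Callable[[List[str]], float] = toy_score,
    max_len: int = 15,
    threshold: float = 0.5,  # assumed cutoff for "valid entity"
) -> List[Tuple[str, float]]:
    words = text.split()  # split on whitespace
    scored = [(s, e, score(words[s:e])) for s, e in candidate_spans(words, max_len)]
    confident = [span for span in scored if span[2] >= threshold]
    return [(" ".join(words[s:e]), sc) for s, e, sc in remove_overlaps(confident)]


result = extract("call (555) 225-3556 today")
```

In this toy run both "(555) 225-3556" and "225-3556" score above the cutoff, and the shorter candidate is dropped because it overlaps the higher-scoring one.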

The neural network models in the Entity Extraction API are used in combination with other types of models (e.g. rule-based) to identify additional entities in text, such as flight numbers, currencies, and the other examples listed above. As a result, when several entities are detected in one text input, the Entity Extraction API can return several overlapping results.
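To illustrate how results from different annotators can end up overlapping, here is a small sketch that merges rule-based matches with results from another source. The regular expressions in `RULES` are hypothetical and deliberately loose, and `model_output` is a made-up value standing in for whatever the neural models would return; none of this reflects the actual rules used by ML Kit.

```python
import re
from typing import Dict, List, Tuple

Annotation = Tuple[int, int, str]  # (start char, end char exclusive, entity type)

# Hypothetical patterns for illustration only.
RULES: Dict[str, str] = {
    "flight_number": r"\b[A-Z]{2}\d{1,4}\b",
    "money": r"\$\d+(?:\.\d{2})?",
}


def rule_based_annotations(text: str) -> List[Annotation]:
    """Run every rule over the text and collect the matching spans."""
    found: List[Annotation] = []
    for entity_type, pattern in RULES.items():
        for match in re.finditer(pattern, text):
            found.append((match.start(), match.end(), entity_type))
    return found


def merge_annotations(
    model_annotations: List[Annotation], rule_annotations: List[Annotation]
) -> List[Annotation]:
    """Union of both sources, sorted by position; overlapping spans are kept."""
    return sorted(set(model_annotations) | set(rule_annotations))


def overlaps(a: Annotation, b: Annotation) -> bool:
    """True when two annotations share at least one character."""
    return a[0] < b[1] and b[0] < a[1]


text = "Flight LX37 costs $12"
model_output = [(0, 11, "hypothetical_model_entity")]  # made-up model result
merged = merge_annotations(model_output, rule_based_annotations(text))
```

Here the merged list contains both the made-up model span covering "Flight LX37" and the rule-based flight number span inside it, so a caller has to be prepared for more than one annotation over the same characters.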

Lastly, ML Kit will automatically download the required language-specific models to the device dynamically. You can also explicitly manage models you want available on the device by using ML Kit’s model management API. This can be useful if you want to download models ahead of time for your users. The API also allows you to delete models that are no longer required.

New APIs Coming Soon

Selfie Segmentation

With the increased usage of selfie cameras and webcams, being able to quickly and easily add effects to camera experiences has become a necessity for many app developers.

ML Kit's Selfie Segmentation API allows developers to easily separate the background from a scene and focus on what matters. Adding cool effects to selfies or inserting your users into interesting background environments has never been easier. This API produces great results with low latency on both Android and iOS devices.

(Example of ML Kit Selfie Segmentation)

Key capabilities:

- Easily allows developers to replace or blur a user’s background

- Works well with single or multiple people

- Cross-platform support (iOS and Android)

- Runs in real time on most modern phones
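As a rough illustration of background replacement, the sketch below blends a camera frame over a new background. It assumes the segmentation result is available as a per-pixel foreground confidence between 0.0 and 1.0; that mask format, the plain nested-list image representation, and the `replace_background` helper are all assumptions made for this example, not part of the API described here.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB, each channel 0-255
Image = List[List[Pixel]]     # rows of pixels
Mask = List[List[float]]      # assumed per-pixel foreground confidence, 0.0-1.0


def replace_background(frame: Image, mask: Mask, background: Image) -> Image:
    """Alpha-blend the frame over a new background, using the mask as alpha."""
    output: Image = []
    for frame_row, mask_row, background_row in zip(frame, mask, background):
        output.append([
            tuple(round(alpha * f + (1.0 - alpha) * b) for f, b in zip(fg, bg))
            for fg, alpha, bg in zip(frame_row, mask_row, background_row)
        ])
    return output


# A 1x2 frame: the left pixel is fully foreground, the right pixel fully background.
frame = [[(200, 100, 0), (200, 100, 0)]]
mask = [[1.0, 0.0]]
beach = [[(0, 0, 255), (0, 0, 255)]]
composited = replace_background(frame, mask, beach)
```

Blurring the background would follow the same pattern, with a blurred copy of the frame passed in place of the replacement image.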

To join our early access program and request access to ML Kit's Selfie Segmentation API, please fill out this form.


Google Developers | Sciencx (2020-12-11T18:56:00+00:00) Announcing the Newest Addition to MLKit: Entity Extraction. Retrieved from https://www.scien.cx/2020/12/11/announcing-the-newest-addition-to-mlkit-entity-extraction/


Posted by Kenny Sulaimon, Product Manager, ML Kit, Tory Voight, Product Manager, ML Kit, Daniel Furlong, Lei Yu, Bhaktipriya Radharapu, Adrien Couque, Software Engineers, ML Kit, Dong Chen, Technical Lead, ML Kit
