This content originally appeared on DEV Community and was authored by Majid Qafouri

If you are using a microservice-based architecture, one of the challenges is collecting and monitoring application logs from different services, and being able to search them by message text, source, and so on.

So, what is the ELK Stack?

"ELK" is the acronym for three open source projects: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and analytics engine. Logstash is a server-side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a "stash" like Elasticsearch. Kibana lets users visualize Elasticsearch data with charts and graphs.

NLog, on the other hand, is a flexible and free logging platform for various .NET platforms, including .NET Standard. NLog makes it easy to write to several targets (database, file, console) and to change the logging configuration on the fly.

Combining these two tools gives you a simple way to process and persist your application's logs.

In this article, I'm going to use NLog to write the logs of a .NET 5.0 web application into Elasticsearch. After that, I will show you how to monitor and search your logs with different filters in Kibana.

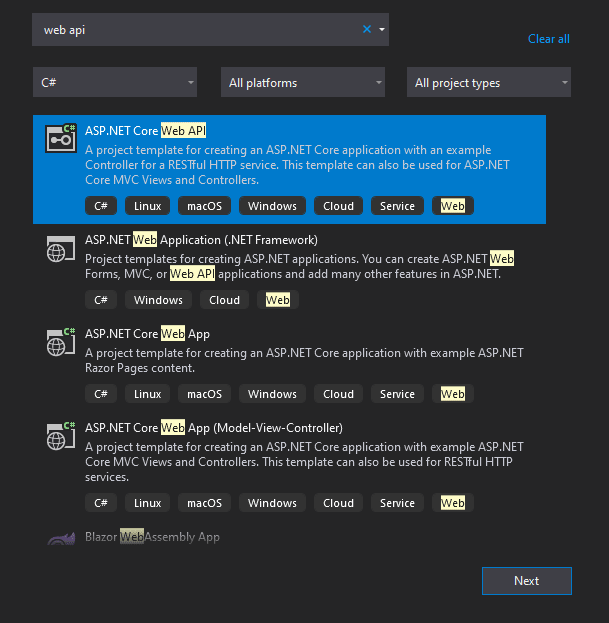

First, open Visual Studio and create a new project with the ASP.NET Core Web API template.

On the next page, set the target framework to .NET 5.0 (Current) and check the Enable OpenAPI support option to add Swagger to the project.

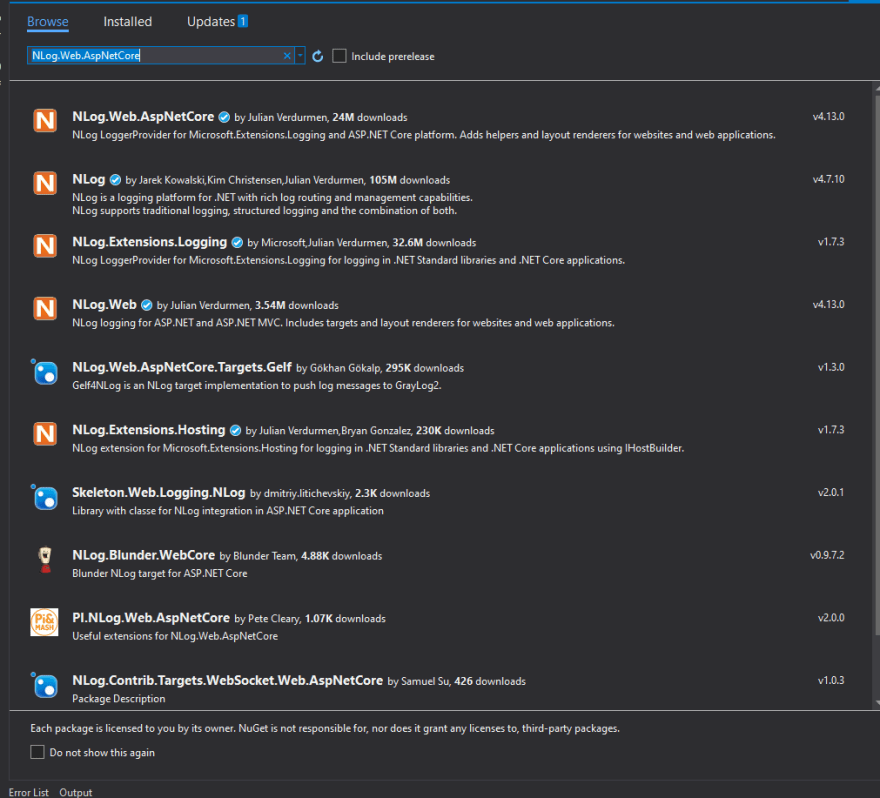

We need a couple of NuGet packages. To install them, open the Tools menu, select NuGet Package Manager, and then select Manage NuGet Packages for Solution.

In the panel that opens, search for the following packages and install them one by one:

NLog.Web.AspNetCore

NLog.Targets.ElasticSearch

After installing the packages, you need to add a configuration file for NLog. Right-click the project in Solution Explorer, select Add => New Item, add a Web Configuration File, and name it nlog.config. Also set the file's Copy to Output Directory property to Copy if newer so that it is available when the application runs.

Open the new file and paste in the following configuration:

```xml
<?xml version="1.0" encoding="utf-8" ?>
<nlog xmlns="http://www.nlog-project.org/schemas/NLog.xsd"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      autoReload="true">

  <!-- Load the ASP.NET Core layout renderers and the ElasticSearch target -->
  <extensions>
    <add assembly="NLog.Web.AspNetCore"/>
    <add assembly="NLog.Targets.ElasticSearch"/>
  </extensions>

  <!-- async="true" writes logs in the background so requests are not blocked -->
  <targets async="true">
    <!-- One index per day, e.g. for 2021.08.03 -->
    <target name="elastic" xsi:type="ElasticSearch"
            index="MyServiceName-${date:format=yyyy.MM.dd}"
            uri="http://localhost:9200"
            layout="API:MyServiceName|${longdate}|${event-properties:item=EventId_Id}|${uppercase:${level}}|${logger}|${message} ${exception:format=tostring}|url: ${aspnet-request-url}|action: ${aspnet-mvc-action}">
    </target>
  </targets>

  <!-- Send Debug and higher from all loggers to the elastic target -->
  <rules>
    <logger name="*" minlevel="Debug" writeTo="elastic" />
  </rules>
</nlog>
```

The target element holds the Elasticsearch settings, such as the service URI, the layout pattern, and the index name.

Let’s take a look at these attributes:

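index: MyServiceName-${date:format=yyyy.MM.dd} means there is a separate index for each day's logs. For example, the index for 2021.08.03 holds all the logs written on the third of August. Elasticsearch only accepts lowercase index names, which is why the index shows up as myservicename-2021.08.03.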

uri: the address of the Elasticsearch server.

layout: the pattern of your log message; it determines which information each log entry contains.
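To make the daily index naming concrete, here is a small C# sketch that builds the same name the index attribute renders. DailyIndexName is a hypothetical helper, not part of NLog; the lowercasing step reflects the Elasticsearch rule that index names must be lowercase.

```csharp
using System;
using System.Globalization;

// Hypothetical helper mirroring index="MyServiceName-${date:format=yyyy.MM.dd}".
// NLog's ${date} renders local time by default, so pass a local timestamp.
// Elasticsearch rejects index names with uppercase letters, hence ToLowerInvariant().
static string DailyIndexName(string serviceName, DateTime timestamp) =>
    $"{serviceName}-{timestamp.ToString("yyyy.MM.dd", CultureInfo.InvariantCulture)}"
        .ToLowerInvariant();

// Logs from the same day share an index; the next day starts a new one.
Console.WriteLine(DailyIndexName("MyServiceName", new DateTime(2021, 8, 3, 9, 15, 0)));
Console.WriteLine(DailyIndexName("MyServiceName", new DateTime(2021, 8, 4, 0, 0, 1)));
```

Running it prints myservicename-2021.08.03 and then myservicename-2021.08.04.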

The rules element defines which loggers write to which target and the minimum level that is written; here, every logger writes Debug and higher to the elastic target.

You can read more about the NLog configuration file here.
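If you prefer to configure NLog in code (for example, to read the URI from your own settings), the same target and rule can be set up programmatically. This is a sketch assuming the NLog and NLog.Targets.ElasticSearch packages; the layout is shortened and leaves out the ${aspnet-*} renderers, which need NLog.Web.AspNetCore, and the wrapper name elastic_async is an arbitrary choice.

```csharp
using NLog;
using NLog.Config;
using NLog.Targets.ElasticSearch;
using NLog.Targets.Wrappers;

// Same settings as the <target> element in nlog.config.
var elastic = new ElasticSearchTarget
{
    Name = "elastic",
    Uri = "http://localhost:9200",
    Index = "MyServiceName-${date:format=yyyy.MM.dd}",
    // Shortened layout: the ${aspnet-*} renderers are omitted in this sketch.
    Layout = "API:MyServiceName|${longdate}|${uppercase:${level}}|${logger}|${message} ${exception:format=tostring}"
};

// <targets async="true"> corresponds to wrapping the target in an AsyncTargetWrapper.
var asyncElastic = new AsyncTargetWrapper("elastic_async", elastic);

var config = new LoggingConfiguration();
// Equivalent of <logger name="*" minlevel="Debug" writeTo="elastic" />.
config.AddRule(LogLevel.Debug, LogLevel.Fatal, asyncElastic, "*");
LogManager.Configuration = config;

LogManager.GetCurrentClassLogger().Info("NLog configured in code");
LogManager.Shutdown(); // flush pending async writes before exit
```

Keeping the XML file is usually simpler because autoReload="true" lets you change levels without rebuilding; the code route is mainly useful when the settings come from elsewhere.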

Now that we understand the structure and meaning of the config file, the next step is to set up NLog in place of the built-in .NET logger. To do this, open Program.cs and change the Main method as follows:

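```csharp
// Program.cs: add "using NLog.Web;" at the top of the file
public static void Main(string[] args)
{
    // Load nlog.config first so that errors during startup are logged too
    var logger = NLogBuilder.ConfigureNLog("nlog.config").GetCurrentClassLogger();
    try
    {
        logger.Debug("init main");
        CreateHostBuilder(args).Build().Run();
    }
    catch (Exception exception)
    {
        // Record startup failures before the process exits
        logger.Error(exception, "Stopped program because of exception");
        throw;
    }
    finally
    {
        // Flush pending log events and stop NLog's internal timers and threads
        NLog.LogManager.Shutdown();
    }
}
```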
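The NLogBuilder class used above comes from NLog.Web.AspNetCore 4.x, which was current for .NET 5.0. In later releases (NLog 5.x with NLog.Web.AspNetCore 5.x) NLogBuilder is marked obsolete in favor of the LogManager.Setup() fluent API. A minimal sketch of the replacement for the first line of Main, assuming NLog 5.x:

```csharp
using NLog;

// Assumes NLog 5.x, where LogManager.Setup() replaces NLogBuilder.ConfigureNLog.
var logger = LogManager.Setup()
    .LoadConfigurationFromFile("nlog.config") // same file as above
    .GetCurrentClassLogger();

try
{
    logger.Debug("init main");
    // In Program.cs, CreateHostBuilder(args).Build().Run(); goes here.
}
finally
{
    LogManager.Shutdown(); // flush pending log events
}
```

The rest of Main, including the catch block, stays the same.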

Change CreateHostBuilder in Program.cs as well:

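```csharp
// Also in Program.cs; UseNLog() comes from the NLog.Web namespace
public static IHostBuilder CreateHostBuilder(string[] args) =>
    Host.CreateDefaultBuilder(args)
        .ConfigureWebHostDefaults(webBuilder =>
        {
            webBuilder.UseStartup<Startup>();
        })
        .ConfigureLogging(logging =>
        {
            // Remove the default providers (console, debug, and so on) so NLog handles all logging
            logging.ClearProviders();
            // Pass every level on to NLog; the rules in nlog.config decide what is written
            logging.SetMinimumLevel(LogLevel.Trace);
        })
        .UseNLog(); // NLog: set up NLog for dependency injection
```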

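Next, open Startup.cs and add the following line to the ConfigureServices method:

```csharp
services.AddLogging();
```

Strictly speaking, the generic host already registers the logging services, so this call is optional, but it does no harm.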

You can now write logs to Elasticsearch by injecting ILogger<T> into any class constructor, for example:

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Logging;

[ApiController]
[Route("[controller]")]
public class WeatherForecastController : ControllerBase
{
    private readonly ILogger<WeatherForecastController> _logger;

    // ILogger<T> is supplied by dependency injection and backed by NLog
    public WeatherForecastController(ILogger<WeatherForecastController> logger)
    {
        _logger = logger;
    }

    [HttpGet]
    public IActionResult Get()
    {
        _logger.LogDebug("Debug message");
        _logger.LogTrace("Trace message"); // below minlevel="Debug", so NLog drops it
        _logger.LogError("Error message");
        _logger.LogWarning("Warning message");
        _logger.LogCritical("Critical message");
        _logger.LogInformation("Information message");
        return Ok();
    }
}
```
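Besides plain strings, NLog captures the named placeholders of message templates and the EventId as event properties, which is what ${event-properties:item=EventId_Id} in the layout reads. The sketch below assumes NLog.Extensions.Logging (a dependency of NLog.Web.AspNetCore) and uses a hypothetical event id 1001 and a hypothetical City property; to see the city in Elasticsearch you could add ${event-properties:item=City} to the layout. Depending on the NLog.Extensions.Logging version, the event id property may be exposed as EventId rather than EventId_Id.

```csharp
using Microsoft.Extensions.Logging;
using NLog.Extensions.Logging;

// Assumes nlog.config (from above) is next to the executable.
using var loggerFactory = LoggerFactory.Create(builder => builder.AddNLog());
ILogger logger = loggerFactory.CreateLogger("WeatherForecastController");

// Hypothetical event id; NLog exposes it through event properties.
var forecastRequested = new EventId(1001, "ForecastRequested");

// {City} is a named placeholder; NLog stores "Berlin" as the event property "City".
logger.LogInformation(forecastRequested, "Forecast requested for {City}", "Berlin");

NLog.LogManager.Shutdown(); // flush pending async writes
```

Inside the controller the call looks the same, using the injected _logger instead of a logger created from a factory.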

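If you now run the application and send a GET request to /WeatherForecast with Postman (or the Swagger UI), the logs are written into Elasticsearch. Every level from Debug upward is stored; the Trace message is dropped because the rule in nlog.config uses minlevel="Debug".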

We have successfully configured the application to write its logs into Elasticsearch, but how can we monitor and search them? For this purpose, the Elastic Stack includes Kibana, a tool that lets users visualize Elasticsearch data with charts and graphs.

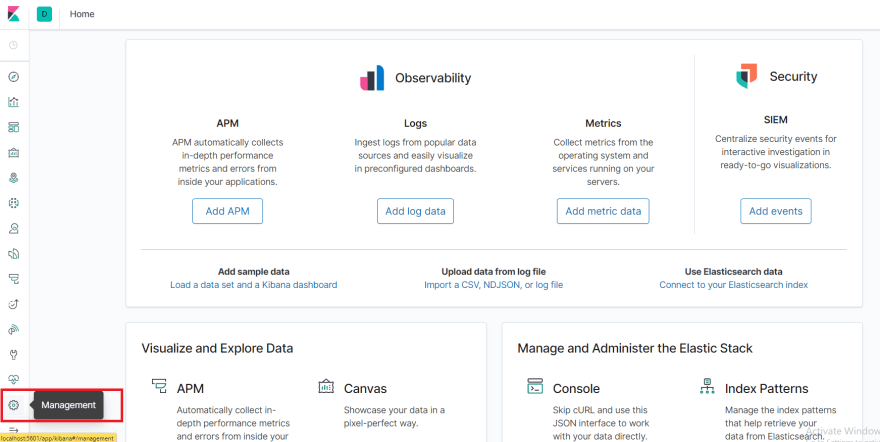

Once Elasticsearch and Kibana are installed and running, open Kibana in your browser (by default at http://localhost:5601).

First, we need to create an index pattern that Kibana uses to fetch our application's logs. To do this, select the Management option in the left panel.

In the Management panel, select the Index Patterns link on the left side.

In the panel that appears, click the Create index pattern button. The Create index pattern panel contains an Index pattern input box where you enter your index name (in our example, the index name is set in nlog.config as MyServiceName-${date:format=yyyy.MM.dd}).

To match every day's index, use a wildcard instead of the date and enter myservicename-* in the input box. This pattern loads the logs from all indices whose names start with myservicename-.

If any indices match the pattern, a success message appears beneath the input box. Select the pattern and click Next step.

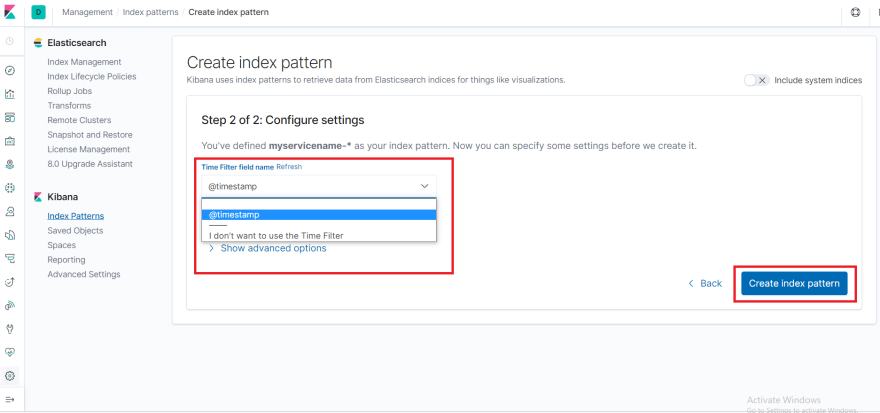

In the next panel, you can choose a time field for the pattern, which lets you filter logs by the time they were written. Select @timestamp, then click the Create index pattern button to complete the process.

Now click the Discover link in the left panel to open the log view.

As you can see, there are several logs of different levels from our application. If you do not see any logs, make sure you have selected the newly created index pattern on the left side of the page and that the selected time range covers the period when the logs were written.

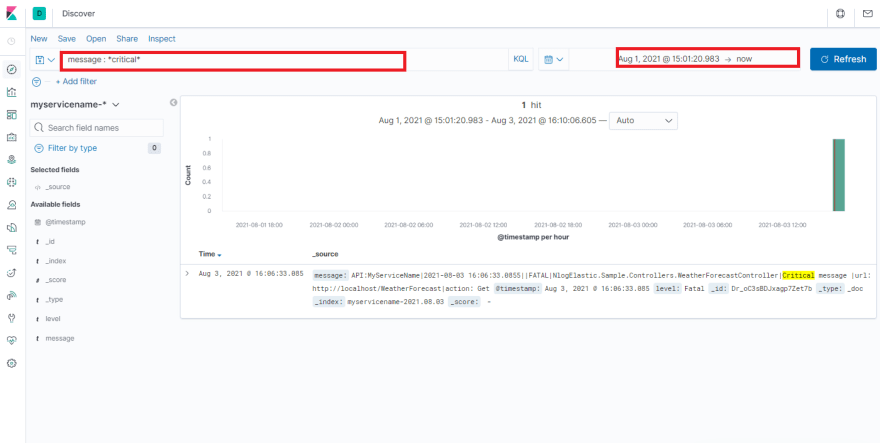

To filter logs by message text or by date, use the filter bar located beneath the menu bar.

For example, in the screenshot above I searched for logs whose messages contain the word critical.

After setting the filter, click the green Update button on the right side.
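Kibana's searches are ultimately answered by Elasticsearch, so a similar search can also be sent straight to its REST API, which is handy in scripts or when Kibana is unavailable. This sketch uses the URI search endpoint (_search?q=) and assumes Elasticsearch at http://localhost:9200 and that the NLog ElasticSearch target stores the rendered layout in a field named message; check the field names in Discover if yours differ. BuildSearchUri is a hypothetical helper.

```csharp
using System;
using System.Net.Http;

// Hypothetical helper: builds a URI search across all daily indices.
static Uri BuildSearchUri(string elasticUrl, string indexPattern, string query) =>
    new Uri($"{elasticUrl.TrimEnd('/')}/{indexPattern}/_search?q={Uri.EscapeDataString(query)}");

// Search every myservicename-* index for messages containing "critical".
var uri = BuildSearchUri("http://localhost:9200", "myservicename-*", "message:critical");
Console.WriteLine($"GET {uri}");

using var client = new HttpClient();
try
{
    // The response is the raw JSON search result, including the matching hits.
    string json = await client.GetStringAsync(uri);
    Console.WriteLine(json);
}
catch (HttpRequestException ex)
{
    // Thrown when Elasticsearch is unreachable or returns an error status.
    Console.WriteLine($"Search failed: {ex.Message}");
}
```

Note that Kibana's search bar may apply its own query language and the selected time range, so its results are not guaranteed to match this raw query one for one.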

That’s it. Now you can easily filter your application's logs with different patterns.

For more information about Kibana's features, see this link.


Majid Qafouri | Sciencx (2021-08-04T10:25:58+00:00) Writing logs into Elastic with NLog , ELK and .Net 5.0. Retrieved from https://www.scien.cx/2021/08/04/writing-logs-into-elastic-with-nlog-elk-and-net-5-0/
