This content originally appeared on Level Up Coding - Medium and was authored by Andreas Schallwig

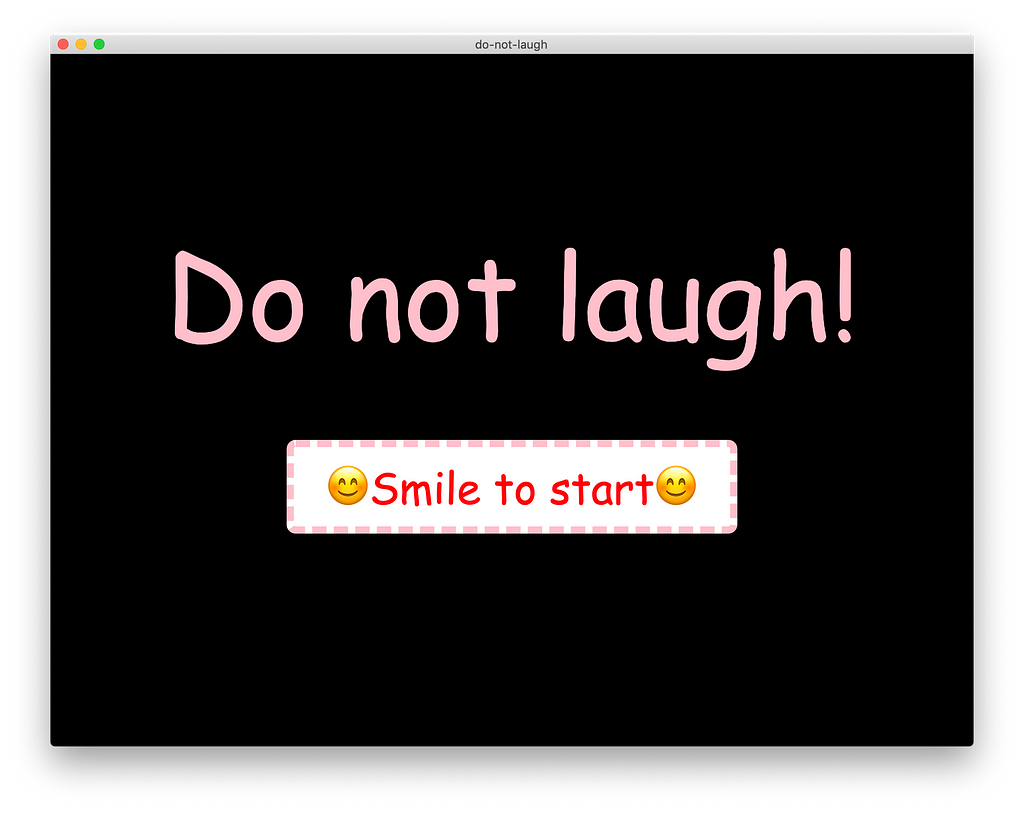

Do not laugh — A simple AI powered game using TensorFlow.js and Electron

For quite a while now I’ve been evaluating how AI technology can be used to improve the user experience of digital applications or even enable completely new UI / UX concepts.

Recently I stumbled upon several articles describing how to train a CNN (Convolutional Neural Network) to recognize a person’s emotion from their facial expressions. This sounded like an interesting idea for creating a user interface, so in order to test it I came up with a simple game called “Do not laugh”.

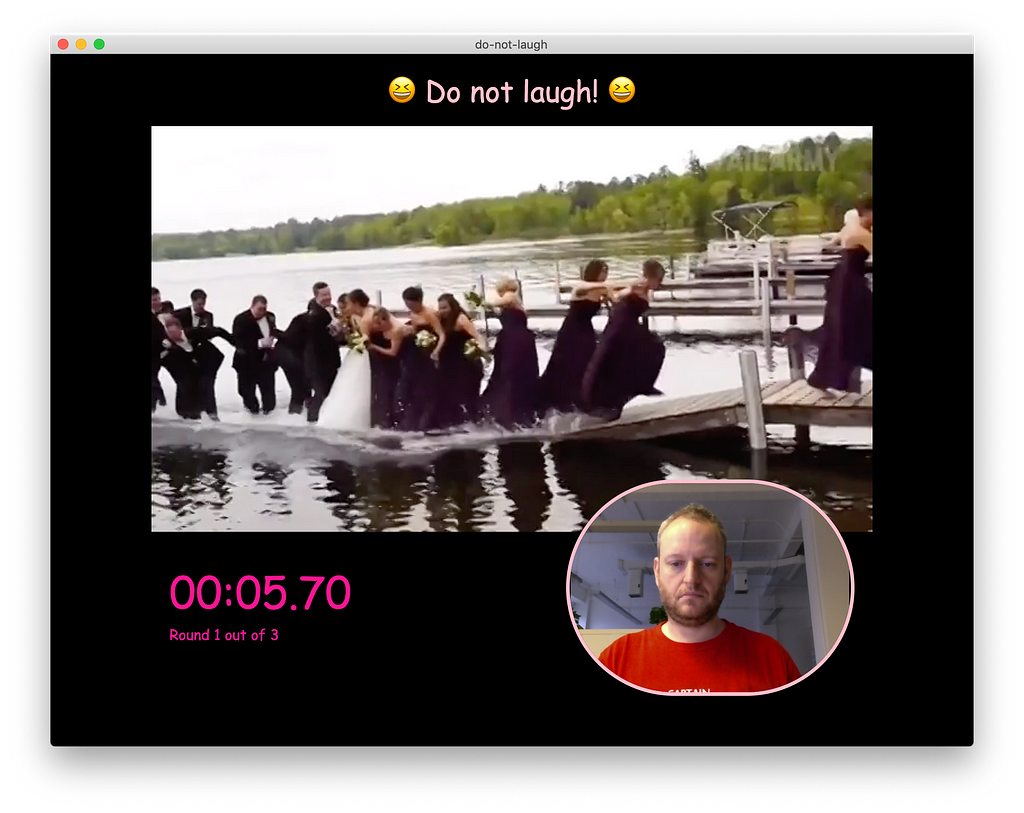

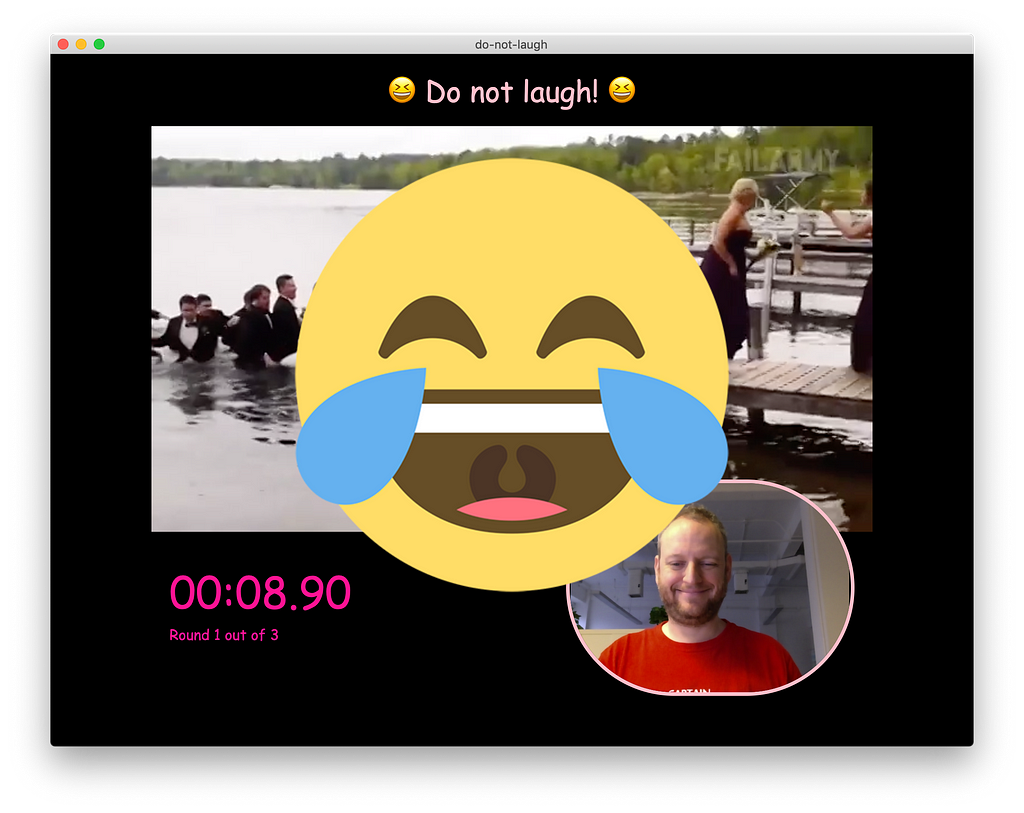

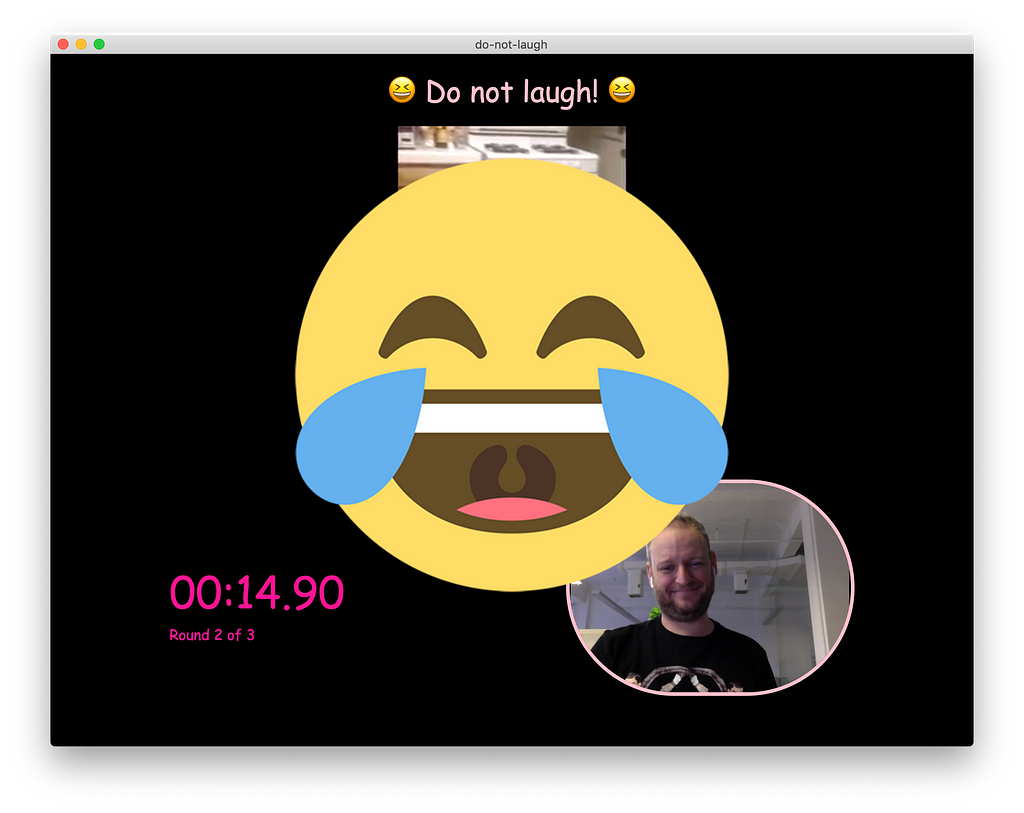

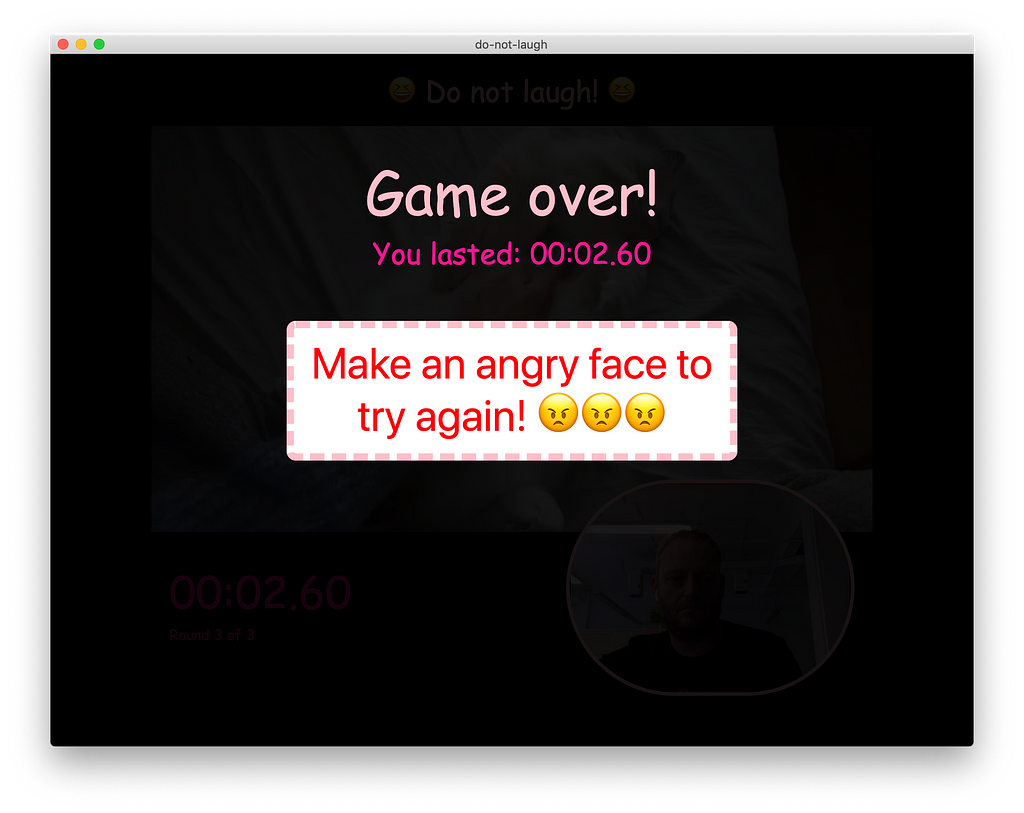

The game itself is dead simple. It will start playing a random funny video from YouTube and your only job is: Do not laugh! If the AI catches you laughing it’s game over. So I set out to create this game using Vue.js and Electron, which have recently become my frontend frameworks of choice.

Using AI and machine learning technology inside HTML5 / JavaScript based applications is a rather new thing, so I decided to write down my experiences in this article and share some best practices with you. If you’re not interested in all the details you can also just download all the source code from here.

How to add TensorFlow to a Vue.js + Electron app

If you have previously played around a bit with AI or machine learning code, chances are you have already seen a library called TensorFlow in action. TensorFlow is Google’s open source framework for machine learning (ML) and it is widely used for tasks like image classification, which is just what we need for our little game. The only drawbacks are that it is a Python library and that it can only use NVIDIA GPUs for acceleration. And yes, you absolutely want that GPU acceleration when doing anything ML related.

Enter TensorFlow.js and WebGL GPU acceleration

Luckily the TensorFlow team has ported the library to JavaScript and released TensorFlow.js (tfjs), which lets us use it inside an Electron app. Even better, they went the extra mile and added WebGL-based GPU acceleration that supports any modern GPU, so we are no longer restricted to NVIDIA and CUDA-enabled hardware 👋 👋 👋. Cheers to that 🍺!

Making things even more simple with face-api.js

At this point we’re basically all set. We have a powerful library at hand and there are pre-trained models available for emotion detection. This means we can save ourselves the time and hassle of training our own model.

While I was writing some initial code I found that Vincent Mühler had already created a high-level API called face-api.js built upon TensorFlow.js which wraps all the low-level operations and provides a convenient API for common tasks like face recognition and emotion detection. He also provides lots of example code on his GitHub repository so I was able to build my first emotion recognizer within a matter of minutes.

Putting the application together

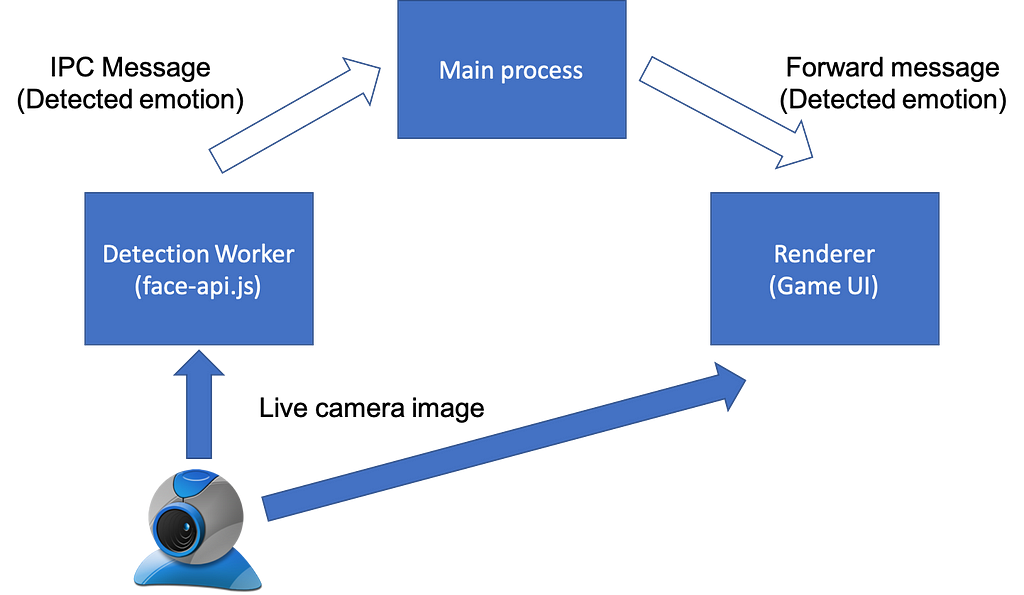

Let’s start with the overall game architecture. Using Electron means we have a Chromium renderer process which will become our game UI. It will play back the video file, display your live camera feed and of course run the game logic — You laugh, you lose.

So where does the actual emotion detection take place in this scenario? To answer this question you need to keep in mind two things:

- Real-time emotion detection is a very resource-intensive task. Even a good GPU will probably just yield you around 20–30 FPS.

- Running resource-intensive tasks inside an Electron renderer will cause the UI of this renderer to become unresponsive.

To keep things running smoothly we need a way to move the heavy lifting into a separate process. Luckily Electron can do just that using hidden renderers. Therefore our final game architecture looks like this:

In this scenario we have face-api running inside a hidden renderer (“Detection Worker”), continuously evaluating emotions in the live camera stream. If an emotion is detected the worker will send an IPC message with the detected emotion to the game. Inside the game we can simply treat such messages as events and react accordingly.
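To make this message flow a bit more tangible, here is a minimal sketch that simulates it in plain JavaScript. The createEndpoint helper is a hypothetical in-memory stand-in and not Electron's IPC API; the channel names and the { command, payload } message shape are the ones used later in this article.

```javascript
// Minimal in-memory stand-in for an IPC endpoint (NOT Electron's API):
// handlers are registered per channel and called synchronously on send.
function createEndpoint() {
  const handlers = new Map();
  return {
    on(channel, handler) {
      if (!handlers.has(channel)) handlers.set(channel, []);
      handlers.get(channel).push(handler);
    },
    send(channel, message) {
      (handlers.get(channel) || []).forEach((handler) => handler(message));
    }
  };
}

// One endpoint for the main process, one for the visible game window.
const mainProcess = createEndpoint();
const gameWindow = createEndpoint();

// Main process: relay everything coming from the worker to the game window.
mainProcess.on('window-message-from-worker', (message) => {
  gameWindow.send('message-from-worker', message);
});

// Game UI: treat relayed messages as events.
const receivedByGame = [];
gameWindow.on('message-from-worker', (message) => {
  receivedByGame.push(message);
});

// Detection worker: report a detected expression.
mainProcess.send('window-message-from-worker', {
  command: 'expression',
  payload: { type: 'happy' }
});

console.log(receivedByGame);
```

The point of the sketch is only the direction of travel: the worker never talks to the game directly, it always goes through the main process.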

Creating a new Vue.js / Electron App

To create your App boilerplate you can follow my instructions here. Start at the section “Getting your environment ready” and follow the instructions until you can successfully run the blank App using npm run electron:serve.

Next install face-api.js:

npm i --save face-api.js

The background detection worker process

First we create the background worker process which will handle all the detection work (aka. “heavy lifting”). Go ahead and create a file worker.html inside the public directory:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Worker</title>

</head>

<body>

<video id="cam" autoplay muted playsinline></video>

</body>

</html>

Note the <video> tag here. We will refer to this element in our worker to retrieve the image data from the camera stream.

Next create worker.js inside the src directory. This is a longer file and you can see the full version here. I will break it down and explain the most important parts to you:

import * as faceapi from 'face-api.js';

// init detection options

const minConfidenceFace = 0.5;

const faceapiOptions = new faceapi.SsdMobilenetv1Options({ minConfidenceFace });

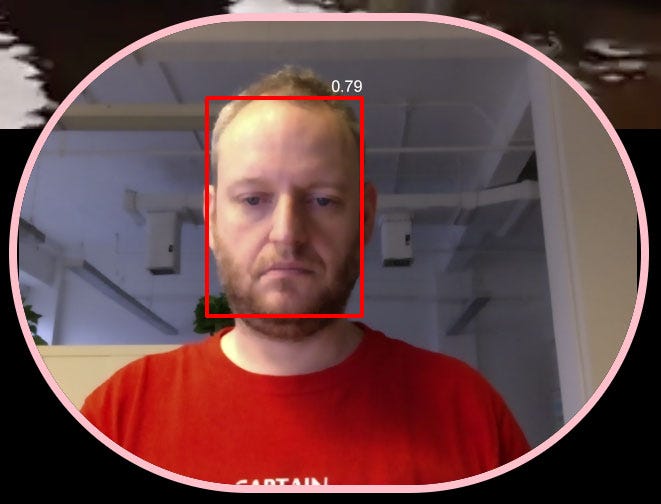

Here we include and configure face-api.js. Internally face-api.js uses the SSD MobileNet v1 model to identify the face inside the picture therefore we need to provide a minConfidenceFace configuration parameter which configures the model to identify a face if it is at least 50% confident.
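Conceptually, a minimum confidence works like a simple filter over candidate detections. The following sketch illustrates that idea with made-up scores; it is not how face-api.js is implemented internally, and treating the boundary value as accepted is an assumption of this example.

```javascript
// Hypothetical candidate detections with a confidence score between 0 and 1.
const candidates = [
  { label: 'face', score: 0.92 },
  { label: 'face', score: 0.48 },
  { label: 'face', score: 0.5 }
];

// Keep only candidates that reach the minimum confidence.
// Assumption: a score exactly equal to the threshold is accepted.
function filterByConfidence(detections, minConfidence) {
  return detections.filter((detection) => detection.score >= minConfidence);
}

const accepted = filterByConfidence(candidates, 0.5);
console.log(accepted.map((detection) => detection.score));
```

A higher threshold gives fewer false positives but may miss faces in poor lighting, so 0.5 is a reasonable starting point to tune from.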

// configure face API

faceapi.env.monkeyPatch({

Canvas: HTMLCanvasElement,

Image: HTMLImageElement,

ImageData: ImageData,

Video: HTMLVideoElement,

createCanvasElement: () => document.createElement('canvas'),

createImageElement: () => document.createElement('img')

});

This part is a workaround to make face-api.js work properly inside an Electron app. In a normal browser environment this would not be required. However, we enable nodeIntegration inside the hidden renderer, which causes TensorFlow.js to believe we are inside a Node.js environment. That’s why we need to manually monkey patch the environment back to a browser environment. If you skip this step you will receive the error Uncaught (in promise) TypeError: Illegal constructor at createCanvasElement [...].

let loadNet = async () => {

let detectionNet = faceapi.nets.ssdMobilenetv1;

await detectionNet.load('/data/weights');

await faceapi.loadFaceExpressionModel('/data/weights');

return detectionNet;

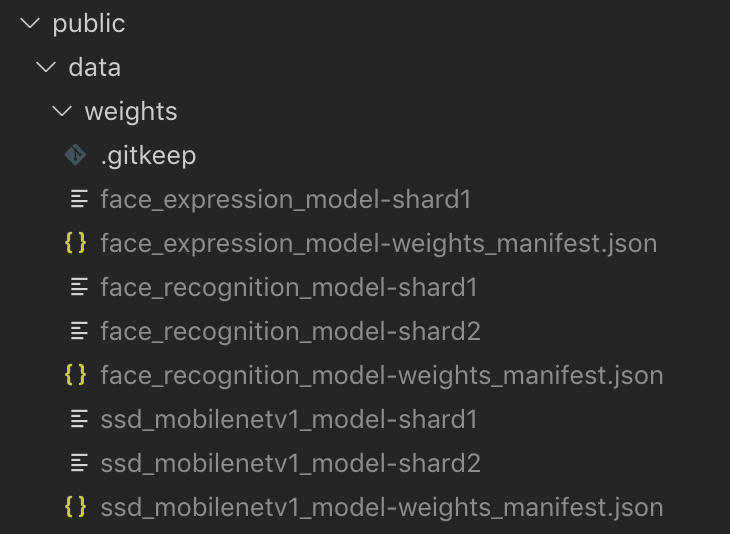

};Next we load the pre-trained model weights for the MobileNet V1 network and also the face expression model. As I wanted to make my App work offline I load them from the local URL /data/weights which translates to the /public/data/weights folder inside your project directory. You can download the required files from Vincent Mühler’s GitHub repository.

let cam;

let initCamera = async (width, height) => {

cam = document.getElementById('cam');

cam.width = width;

cam.height = height;

const stream = await navigator.mediaDevices.getUserMedia({

audio: false,

video: {

facingMode: "user",

width: width,

height: height

}

});

cam.srcObject = stream;

return new Promise((resolve) => {

cam.onloadedmetadata = () => {

resolve(cam);

};

});

};

The above code is pretty much the standard code for getting the video stream from a camera connected to your computer. We just wrap it inside a promise for convenience.

Now with everything in place we could continue directly with the detection part. First I just add some convenience methods for sending the event messages to the game UI renderer:

// ipcRenderer is provided by Electron; requiring it like this is an assumption that relies on nodeIntegration being enabled for the worker window

const { ipcRenderer } = require('electron');

let onReady = () => {

notifyRenderer('ready', {});

};

let onExpression = (type) => {

notifyRenderer('expression', { type: type });

};

let notifyRenderer = (command, payload) => {

ipcRenderer.send('window-message-from-worker', {

command: command, payload: payload

});

};

onReady is triggered once the model has initialized (“warmed up”) and is ready for detections. Afterwards, whenever an expression is detected, onExpression forwards the detected expression to the main process via IPC.

And now for the actual detection part:

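// state flags used by the detection loop (their declarations are not part of the excerpt shown here; these initial values are assumptions)

let isReady = false;

let isRunning = true;

let detectExpressions = async () => {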

// detect expression

let result = await faceapi.detectSingleFace(cam, faceapiOptions)

.withFaceExpressions();

if(!isReady) {

isReady = true;

onReady();

}

if(typeof result !== 'undefined') {

let happiness = 0, anger = 0;

if(result.expressions.hasOwnProperty('happy')) {

happiness = result.expressions.happy;

}

if(result.expressions.hasOwnProperty('angry')) {

anger = result.expressions.angry;

}

if(happiness > 0.7) {

onExpression('happy');

} else if(anger > 0.7) {

onExpression('angry');

}

}

if(isRunning) {

detectExpressions();

}

};

This function is basically an infinite loop: each pass first detects a single face in the camera picture and then tries to determine the facial expression (= emotion) on that face.

detectSingleFace().withFaceExpressions() resolves to a result object with an expressions dictionary, containing the probabilities (0–1) of a given expression like “angry” or “happy”. If no face is found the result is undefined, which is why the code checks for that first. In my example I decided to set the probability threshold to 0.7 (70%) for triggering an onExpression event.
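The threshold logic can be isolated into a small pure function, which makes it easy to try out different values without a camera. This is a minimal sketch: classifyExpression is a hypothetical helper name and the probability dictionaries below are invented sample values.

```javascript
// Map a dictionary of expression probabilities to the event the game cares about.
// Returns 'happy', 'angry', or null when neither passes the threshold.
function classifyExpression(expressions, threshold = 0.7) {
  const happiness = expressions.happy || 0;
  const anger = expressions.angry || 0;
  if (happiness > threshold) return 'happy';
  if (anger > threshold) return 'angry';
  return null;
}

// Invented sample dictionaries, shaped like the expressions object described above.
console.log(classifyExpression({ happy: 0.91, angry: 0.01, neutral: 0.08 })); // 'happy'
console.log(classifyExpression({ happy: 0.10, angry: 0.85 }));                // 'angry'
console.log(classifyExpression({ happy: 0.40, angry: 0.30, neutral: 0.30 })); // null
```

As in the worker code, "happy" is checked first, so it wins in the unlikely case that both values exceed the threshold.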

That’s it! We can now run the detection worker with this code:

loadNet()

.then(net => { return initCamera(640, 480); })

.then(video => { detectExpressions(); });

Configuring Vue.js to use hidden background renderers

With your detection worker in place the next step is to configure both Vue.js and Electron to run your hidden renderer. Open up (or create) the file vue.config.js in your app’s root directory and insert / append the following configuration:

module.exports = {

pages: {

index: {

entry: 'src/main.js', //entry for the public page

template: 'public/index.html', // source template

filename: 'index.html' // output as dist/*

},

worker: {

entry: 'src/worker.js',

template: 'public/worker.html',

filename: 'worker.html'

}

},

devServer: {

historyApiFallback: {

rewrites: [

{ from: /\/index/, to: '/index.html' },

{ from: /\/worker/, to: '/worker.html' }

]

}

}

};

This configuration adds a second entry point for the worker to Vue’s webpack configuration and also creates an alias to make it work during development.
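To see what the two rewrite rules do with a request path, here is a simplified model of the matching step. It is only a sketch: the real dev-server middleware does more than this (for example, it decides which requests are rewritten at all), and applyRewrites is a hypothetical helper written for this illustration.

```javascript
// The rewrite rules from the devServer configuration above.
const rewrites = [
  { from: /\/index/, to: '/index.html' },
  { from: /\/worker/, to: '/worker.html' }
];

// Simplified model: the first rule whose pattern matches the path wins.
// Paths without a matching rule are returned unchanged in this sketch.
function applyRewrites(pathname, rules) {
  const rule = rules.find((candidate) => candidate.from.test(pathname));
  return rule ? rule.to : pathname;
}

console.log(applyRewrites('/worker', rewrites)); // '/worker.html'
console.log(applyRewrites('/index', rewrites));  // '/index.html'
console.log(applyRewrites('/about', rewrites));  // '/about'
```

This is why the hidden window can later be pointed at the dev server URL plus the path worker during development.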

Finally make the following modifications to background.js:

import { app, protocol, BrowserWindow, ipcMain } from 'electron'

import {

createProtocol,

installVueDevtools

} from 'vue-cli-plugin-electron-builder/lib';

const isDevelopment = process.env.NODE_ENV !== 'production';

let win;

let workerWin;

// check if the "App" protocol has already been created

let createdAppProtocol = false;

// Scheme must be registered before the app is ready

protocol.registerSchemesAsPrivileged([{

scheme: 'app', privileges: {

secure: true,

standard: true,

corsEnabled: true,

supportFetchAPI: true

}

}])

function createWindow () {

// create the game UI window

win = new BrowserWindow({

width: 1024, height: 790,

webPreferences: { nodeIntegration: true }

});

if (process.env.WEBPACK_DEV_SERVER_URL) {

win.loadURL(process.env.WEBPACK_DEV_SERVER_URL)

} else {

win.loadURL('app://./index.html');

}

win.on('closed', () => {

// closing the main (visible) window should quit the App

app.quit();

});

}

function createWorker(devPath, prodPath) {

// create hidden worker window

workerWin = new BrowserWindow({

show: false,

webPreferences: { nodeIntegration: true }

});

if(process.env.WEBPACK_DEV_SERVER_URL) {

workerWin.loadURL(process.env.WEBPACK_DEV_SERVER_URL + devPath);

} else {

workerWin.loadURL(`app://./${prodPath}`)

}

workerWin.on('closed', () => { workerWin = null; });

}

function sendWindowMessage(targetWindow, message, payload) {

// a falsy check also covers null, e.g. workerWin after its window has been closed

if(!targetWindow) {

console.log('Target window does not exist');

return;

}

targetWindow.webContents.send(message, payload);

}

[...]

app.on('ready', async () => {

if (isDevelopment && !process.env.IS_TEST) {

// Install Vue Devtools

try {

await installVueDevtools()

} catch (e) {

console.error('Vue Devtools failed to install:', e.toString())

}

}

if(!createdAppProtocol) {

createProtocol('app');

createdAppProtocol = true;

}

// create the main application window

createWindow();

// create the background worker window

createWorker('worker', 'worker.html');

// setup message channels

ipcMain.on('window-message-from-worker', (event, arg) => {

sendWindowMessage(win, 'message-from-worker', arg);

});

})

[...]

Let’s look at the changes and additions I made here. The most obvious one is the second window workerWin which will be our hidden renderer. To make things more manageable I’ve created the function createWorker which kind of mirrors the default createWindow function, just with the specific requirements of the hidden worker.
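Both createWindow and createWorker make the same decision about which URL to load. That decision can be sketched as a small pure function; resolveWindowUrl is a hypothetical helper and the localhost address is only an assumed example of a dev server URL.

```javascript
// Decide which URL a window should load.
// devServerUrl: value of WEBPACK_DEV_SERVER_URL (undefined in production builds).
function resolveWindowUrl(devServerUrl, devPath, prodPath) {
  if (devServerUrl) {
    // Development: pages are served by the dev server.
    return devServerUrl + devPath;
  }
  // Production: pages are served through the custom "app" protocol.
  return `app://./${prodPath}`;
}

// Assumed example dev server URL; the real value is provided by the build tooling.
console.log(resolveWindowUrl('http://localhost:8080/', 'worker', 'worker.html'));
console.log(resolveWindowUrl(undefined, 'worker', 'worker.html'));
```

The first call yields the dev server address of the worker page, the second one the app:// URL used in a packaged build.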

Next I modified the app protocol to enable CORS and support for the fetch API. This is necessary to allow loading the model weights from the local /public folder.

Finally I added an IPC listener for the window-message-from-worker channel to relay incoming messages from the detection worker to the game via the sendWindowMessage method.
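Because windows can be closed at any time, it can be worth guarding the relay a little more defensively. The following is a sketch of one possible variant, not the code from the project: safeSendWindowMessage and createFakeWindow are hypothetical names, and the fake window only mimics the few members used here (isDestroyed and webContents.send).

```javascript
// Send a message only if the target window still exists and is not destroyed.
// Returns true when the message was handed to the window.
function safeSendWindowMessage(targetWindow, message, payload) {
  if (!targetWindow || targetWindow.isDestroyed()) {
    return false;
  }
  targetWindow.webContents.send(message, payload);
  return true;
}

// Fake window for illustration only; it records what was sent to it.
function createFakeWindow(destroyed = false) {
  const sent = [];
  return {
    sent,
    isDestroyed: () => destroyed,
    webContents: { send: (channel, data) => sent.push({ channel, data }) }
  };
}

const fakeWindow = createFakeWindow();
console.log(safeSendWindowMessage(fakeWindow, 'message-from-worker', { command: 'ready', payload: {} })); // true
console.log(safeSendWindowMessage(null, 'message-from-worker', {})); // false
```

Returning a boolean instead of only logging makes it easier for the caller to decide whether a dropped message matters.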

Adding the game user interface

I won’t go too much into detail on how to build the HTML / CSS and will focus instead on how to receive and process the “emotion” messages sent by your detection worker. As a reminder, all the code is available on my GitHub repository for you to review.

Let’s look at this part of the source inside src/views/Play.vue:

this.$electron.ipcRenderer.on('message-from-worker', (ev, data) => {

if(typeof data.command === 'undefined') {

console.error('IPC message is missing command string');

return;

}

if(data.command == 'expression') {

if(data.payload.type == 'happy') {

this.onLaugh();

return;

}

if(data.payload.type == 'angry') {

this.onAngry();

return;

}

}

});

Here we start listening for incoming IPC messages via the message-from-worker channel. If the message contains an expression command we trigger a game event related to the message. In my game onLaugh contains the logic for when you get caught laughing, while onAngry restarts the game once it’s game over.
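The dispatch logic itself does not depend on Vue or Electron, so it can be pulled into a plain function and tested in isolation. This is a minimal sketch under that assumption: handleWorkerMessage and its return values are hypothetical, while the message shape and the onLaugh / onAngry handler names follow the listener shown above.

```javascript
// Dispatch a worker message to the matching game handler.
// Returns a short label describing what happened, which is handy for testing.
function handleWorkerMessage(data, handlers) {
  if (!data || typeof data.command === 'undefined') {
    return 'invalid';
  }
  if (data.command === 'expression') {
    const type = data.payload && data.payload.type;
    if (type === 'happy') {
      handlers.onLaugh();
      return 'laugh';
    }
    if (type === 'angry') {
      handlers.onAngry();
      return 'angry';
    }
  }
  return 'ignored';
}

// Example handlers that only count how often they were called.
const counters = { laughs: 0, restarts: 0 };
const handlers = {
  onLaugh: () => { counters.laughs += 1; },
  onAngry: () => { counters.restarts += 1; }
};

console.log(handleWorkerMessage({ command: 'expression', payload: { type: 'happy' } }, handlers)); // 'laugh'
console.log(handleWorkerMessage({ command: 'ready', payload: {} }, handlers)); // 'ignored'
console.log(handleWorkerMessage({}, handlers)); // 'invalid'
```

Inside the component the IPC listener would then shrink to a single call that passes the received data and the component's own methods.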

That’s it! If you’re interested in the finished project go ahead and download it from my GitHub page. Feel free to modify it or use it as a base for your own game — and I’d love to see all the cool stuff you come up with!

Thank you very much for reading!

Do not laugh — A simple AI powered game was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Andreas Schallwig | Sciencx (2021-11-17T14:06:40+00:00) Do not laugh — A simple AI powered game. Retrieved from https://www.scien.cx/2021/11/17/do-not-laugh%e2%80%8a-%e2%80%8aa-simple-ai-powered-game/
