This content originally appeared on DEV Community and was authored by Nilay Jayswal

It has become very clear that the future of work will be either fully remote or hybrid. Many companies will have to use or develop tools to improve their communication and serve their customers remotely.


In this article we will demonstrate how easy it is to build a video chat application with 100ms SDKs in VueJs3 (using TypeScript) and Netlify functions in Golang. Tailwindcss will be used for the styles.

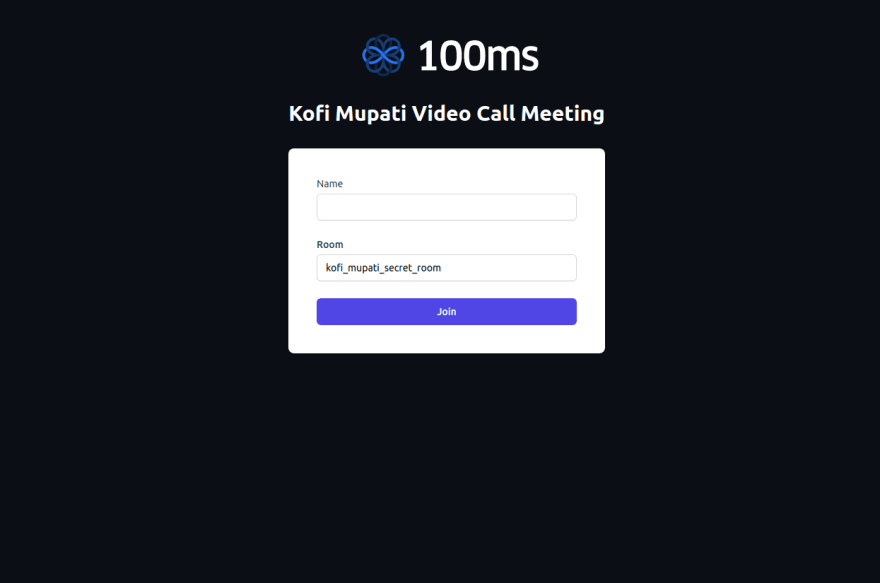

At the end of the tutorial, this is how our application will look like:

Features

- Creating a new room where conversation can take place

- Joining a room after generating an authentication token

- Muting and unmuting the audio and video for both Local and Remote Peers.

- Displaying appropriate user interface for the on and off states of the audio and video.

Prerequisites

- A 100ms.live account. You'll need to get the APP_ACCESS_KEY and APP_SECRET from the developer section in the dashboard.

- Familiarity with Golang, which we will be using to create new rooms and generate auth tokens.

- A fair understanding of VueJs3 and its composition API.

- Serverless functions. We'll be using Netlify functions in this blog to host our Golang backend. Make sure to install the Netlify CLI.

Project Setup

- Create a new VueJs3 application

npm init vite@latest vue-video-chat -- --template vue-ts

cd vue-video-chat

npm install

- Initialize a new Netlify app inside the application. Follow the prompts after running the following command:

ntl init

- Install 100ms JavaScript SDK and project dependencies. For Tailwindcss follow this installation guide.

# 100ms SDKs for conferencing

npm install @100mslive/hms-video-store

# Axios for making API calls

npm install axios

# Setup tailwindcss for styling.(https://tailwindcss.com/docs/guides/vite)

# A tailwind plugin for forms

npm install @tailwindcss/forms

- Add a netlify.toml file and set the path to the functions directory.

# Let's tell Netlify about the directory where we'll

# keep the serverless functions

[functions]

directory = "hms-functions/"

- Create 2 Netlify functions, createRoom and generateAppToken, inside a directory named hms-functions.

Run the following inside the root directory of the project, i.e. vue-video-chat:

mkdir hms-functions

cd hms-functions

ntl functions:create --name="createRoom"

ntl functions:create --name="generateAppToken"

REST APIs for Room and Token

We need APIs for two things. The first creates a room and is invoked when a user wants to create a new room. The second generates an auth token and is invoked when a user wants to join a room. 100ms requires the auth token to allow the join.

Let's start with the Room Creation Endpoint

Navigate to the createRoom directory and install the following libraries.

cd hms-functions/createRoom

go get github.com/golang-jwt/jwt/v4@v4.2.0

go get github.com/google/uuid@v1.3.0

go mod tidy

This endpoint takes the room name as input and uses it when creating the room. 100ms allows only one room per name, so if we try to create a room with a name that already exists, we get the existing room back. We'll take advantage of this by calling the same creation endpoint from our UI both when creating a room and when joining an existing one.

The endpoint does the following:

- Generates a management token in the generateManagementToken function, which is used for authorisation while creating the room.

- Creates a room using the management token and the passed in room name.
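
To make the second step more concrete, here is a rough TypeScript sketch of the HTTP request that the endpoint assembles before calling 100ms. The names `RoomRequest` and `buildCreateRoomRequest` are hypothetical and exist only for illustration; the real implementation in this tutorial is the Go handler shown next.

```typescript
// Illustrative only: mirrors the request the Go handler sends to the 100ms room API.
// `RoomRequest` and `buildCreateRoomRequest` are hypothetical names, not part of any SDK.
interface RoomRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildCreateRoomRequest(
  roomUrl: string,
  managementToken: string,
  roomName: string
): RoomRequest {
  return {
    url: roomUrl,
    method: "POST",
    headers: {
      // The management token authorises the room creation call
      Authorization: `Bearer ${managementToken}`,
      "Content-Type": "application/json",
    },
    // The room name is lower-cased, as in the Go handler
    body: JSON.stringify({ name: roomName.toLowerCase(), active: true }),
  };
}

const sampleRequest = buildCreateRoomRequest(
  "https://prod-in2.100ms.live/api/v2/rooms",
  "<management-token>",
  "Team-Standup"
);
console.log(sampleRequest.body); // {"name":"team-standup","active":true}
```

Building the request in one place like this makes it easy to see that the room name is the only user-supplied input.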

Add the following to hms-functions/createRoom/main.go

package main

import (

"bytes"

"context"

"encoding/json"

"errors"

"io/ioutil"

"net/http"

"strings"

"time"

"os"

"github.com/aws/aws-lambda-go/events"

"github.com/aws/aws-lambda-go/lambda"

"github.com/golang-jwt/jwt/v4"

"github.com/google/uuid"

)

type RequestBody struct {

Room string `json:"room"`

}

// https://docs.100ms.live/server-side/v2/foundation/authentication-and-tokens#management-token

func generateManagementToken() string {

appAccessKey := os.Getenv("APP_ACCESS_KEY")

appSecret := os.Getenv("APP_SECRET")

mySigningKey := []byte(appSecret)

expiresIn := uint32(24 * 3600)

now := uint32(time.Now().UTC().Unix())

exp := now + expiresIn

token := jwt.NewWithClaims(jwt.SigningMethodHS256, jwt.MapClaims{

"access_key": appAccessKey,

"type": "management",

"version": 2,

"jti": uuid.New().String(),

"iat": now,

"exp": exp,

"nbf": now,

})

// Sign and get the complete encoded token as a string using the secret

signedToken, _ := token.SignedString(mySigningKey)

return signedToken

}

func handleInternalServerError(errMessage string) (*events.APIGatewayProxyResponse, error) {

err := errors.New(errMessage)

return &events.APIGatewayProxyResponse{

StatusCode: http.StatusInternalServerError,

Headers: map[string]string{"Content-Type": "application/json"},

Body: "Internal server error",

}, err

}

func handler(ctx context.Context, request events.APIGatewayProxyRequest) (*events.APIGatewayProxyResponse, error) {

var f RequestBody

managementToken := generateManagementToken()

b := []byte(request.Body)

err1 := json.Unmarshal(b, &f)

if err1 != nil {

return &events.APIGatewayProxyResponse{

StatusCode: http.StatusUnprocessableEntity,

}, errors.New("Provide room name in the request body")

}

postBody, _ := json.Marshal(map[string]interface{}{

"name": strings.ToLower(f.Room),

"active": true,

})

payload := bytes.NewBuffer(postBody)

roomUrl := os.Getenv("ROOM_URL")

method := "POST"

client := &http.Client{}

req, err := http.NewRequest(method, roomUrl, payload)

if err != nil {

return handleInternalServerError(err.Error())

}

// Add Authorization header

req.Header.Add("Authorization", "Bearer "+managementToken)

req.Header.Add("Content-Type", "application/json")

// Send HTTP request

res, err := client.Do(req)

if err != nil {

return handleInternalServerError(err.Error())

}

defer res.Body.Close()

resp, err := ioutil.ReadAll(res.Body)

if err != nil {

return handleInternalServerError(err.Error())

}

return &events.APIGatewayProxyResponse{

StatusCode: res.StatusCode,

Headers: map[string]string{"Content-Type": "application/json"},

Body: string(resp),

IsBase64Encoded: false,

}, nil

}

func main() {

// start the serverless lambda function for the API calls

lambda.Start(handler)

}

Token Generation Endpoint

Now that we have an API to create a room, we also need to allow users to join it. 100ms requires an app token to authorise a valid join. Navigate to the generateAppToken directory and install the following libraries.

cd hms-functions/generateAppToken

go get github.com/golang-jwt/jwt/v4@v4.2.0

go get github.com/google/uuid@v1.3.0

go mod tidy

This endpoint accepts the following params:

- user_id: This is meant to store the reference user id from our system, but as we don't have one, we'll simply use the name as user_id in our UI.

- room_id: The id of the room which the user wants to join.

- role: The role you want to assign to a user while joining the video chat, e.g. host or guest. This decides which permissions they'll have after joining.

The following code accepts the params listed above and returns a JWT token with a 1-day expiration period which will be used when joining a video call.
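
As a quick illustration of what ends up inside that token, the sketch below builds the same claim set in TypeScript and checks the 1-day expiry. It does not sign anything; `AppTokenClaims`, `buildAppTokenClaims` and `isTokenExpired` are hypothetical names used only for this example, and the claim names are taken from the Go code that follows.

```typescript
// Illustrative only: the claim set that the Go handler signs into the app token.
// `AppTokenClaims`, `buildAppTokenClaims` and `isTokenExpired` are hypothetical names.
interface AppTokenClaims {
  access_key: string;
  type: "app";
  version: 2;
  room_id: string;
  user_id: string;
  role: string;
  jti: string;
  iat: number;
  nbf: number;
  exp: number;
}

const ONE_DAY_IN_SECONDS = 24 * 3600;

function buildAppTokenClaims(
  accessKey: string,
  roomId: string,
  userId: string,
  role: string,
  tokenId: string, // unique per token; the Go code uses a UUID here
  nowSeconds: number // Unix time in seconds
): AppTokenClaims {
  return {
    access_key: accessKey,
    type: "app",
    version: 2,
    room_id: roomId,
    user_id: userId,
    role: role,
    jti: tokenId,
    iat: nowSeconds,
    nbf: nowSeconds,
    exp: nowSeconds + ONE_DAY_IN_SECONDS,
  };
}

// A token is no longer usable once the current time reaches `exp`
function isTokenExpired(claims: AppTokenClaims, nowSeconds: number): boolean {
  return nowSeconds >= claims.exp;
}

const issuedAt = 1700000000;
const sampleClaims = buildAppTokenClaims(
  "<app-access-key>",
  "<room-id>",
  "alice",
  "host",
  "<token-id>",
  issuedAt
);
console.log(sampleClaims.exp - sampleClaims.iat); // 86400
console.log(isTokenExpired(sampleClaims, issuedAt + 3600)); // false
```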

Add the following code to hms-functions/generateAppToken/main.go:

package main

import (

"context"

"encoding/json"

"errors"

"net/http"

"os"

"time"

"github.com/aws/aws-lambda-go/events"

"github.com/aws/aws-lambda-go/lambda"

"github.com/golang-jwt/jwt/v4"

"github.com/google/uuid"

)

type RequestBody struct {

UserId string `json:"user_id"`

RoomId string `json:"room_id"`

Role string `json:"role"`

}

func handler(ctx context.Context, request events.APIGatewayProxyRequest) (*events.APIGatewayProxyResponse, error) {

var f RequestBody

b := []byte(request.Body)

err1 := json.Unmarshal(b, &f)

if err1 != nil {

return &events.APIGatewayProxyResponse{

StatusCode: http.StatusUnprocessableEntity,

}, errors.New("Provide user_id, room_id and role in the request body")

}

appAccessKey := os.Getenv("APP_ACCESS_KEY")

appSecret := os.Getenv("APP_SECRET")

mySigningKey := []byte(appSecret)

expiresIn := uint32(24 * 3600)

now := uint32(time.Now().UTC().Unix())

exp := now + expiresIn

token := jwt.NewWithClaims(jwt.SigningMethodHS256, jwt.MapClaims{

"access_key": appAccessKey,

"type": "app",

"version": 2,

"room_id": f.RoomId,

"user_id": f.UserId,

"role": f.Role,

"jti": uuid.New().String(),

"iat": now,

"exp": exp,

"nbf": now,

})

// Sign and get the complete encoded token as a string using the secret

signedToken, err := token.SignedString(mySigningKey)

if err != nil {

return &events.APIGatewayProxyResponse{

StatusCode: http.StatusInternalServerError,

Headers: map[string]string{"Content-Type": "application/json"},

Body: "Internal server error",

}, err

}

// return the app token so the UI can join

return &events.APIGatewayProxyResponse{

StatusCode: http.StatusOK,

Headers: map[string]string{"Content-Type": "application/json"},

Body: signedToken,

IsBase64Encoded: false,

}, nil

}

func main() {

lambda.Start(handler)

}

The UI

The UI consists of a form where users enter their details to join a room, and a conference view where their video and audio streams are displayed once they have joined the same room for the video chat.

Utility functions to make the API requests.

- Create types.ts to contain our type definitions

// Inside the project's root directory

touch src/types.ts

// Add the following code to types.ts

export type HmsTokenResponse = {

user_id?: string;

room_id?: string;

token: string;

};

- Create hms.ts, which will contain the utility functions and initiate the 100ms SDK instances.

We initialise the HMSReactiveStore instance and create the following:

- hmsStore: For accessing the current room state, such as who is in the room and whether their audio/video is on.

- hmsActions: For performing actions in the room like muting and unmuting.

The FUNCTION_BASE_URL is the base url for hitting the Netlify functions.

fetchToken: This function is used for creating the room followed by generating the authToken which will be used when joining the video chat. We'll set the role to "host" in all cases for simplicity. Roles can be used to decide the set of permissions a user will have if required.

// this code will be in src/hms.ts

import axios from "axios";

import { HMSReactiveStore } from "@100mslive/hms-video-store";

import { HmsTokenResponse } from "./types";

const FUNCTION_BASE_URL = "/.netlify/functions";

const hmsManager = new HMSReactiveStore();

// store will be used to get any state of the room

// actions will be used to perform an action in the room

export const hmsStore = hmsManager.getStore();

export const hmsActions = hmsManager.getActions();

export const fetchToken = async (

userName: string,

roomName: string

): Promise<HmsTokenResponse | any> => {

try {

// create or fetch the room_id for the passed in room

const { data: room } = await axios.post(

`${FUNCTION_BASE_URL}/createRoom`,

{ room: roomName },

{

headers: {

"Content-Type": "application/json",

},

}

);

// Generate the app/authToken

const { data:token } = await axios.post(

`${FUNCTION_BASE_URL}/generateAppToken`,

{

user_id: userName,

room_id: room.id,

role: "host",

},

{

headers: {

"Content-Type": "application/json",

},

}

);

return token;

} catch (error: any) {

throw error;

}

};

Add a form where users enter their details to join the video chat in a file named Join.vue

This is a simple form where users enter their username and the room they want to join for the video call.

joinHmsRoom: This function calls the fetchToken method and uses the response to join the room with hmsActions.join method. All users who join will have their audio muted by default as we have set isAudioMuted: true.

// Add the following to src/components/Join.vue

<script setup lang="ts">

import { reactive, ref } from "vue";

import { fetchToken, hmsActions } from "../hms";

const defaultRoomName = import.meta.env.VITE_APP_DEFAULT_ROOM;

const isLoading = ref(false);

const formData = reactive({

name: "",

room: `${defaultRoomName}`,

});

const joinHmsRoom = async () => {

try {

isLoading.value = true;

const authToken = await fetchToken(formData.name, formData.room);

hmsActions.join({

userName: formData.name,

authToken: authToken,

settings: {

isAudioMuted: true, // Join with audio muted

},

});

} catch (error) {

alert(error);

}

isLoading.value = false;

};

</script>

<template>

<div class="mt-8 sm:mx-auto sm:w-full sm:max-w-md">

<div class="bg-white py-10 px-5 shadow sm:rounded-lg sm:px-10">

<form class="space-y-6" @submit.prevent="joinHmsRoom">

<div>

<label for="name" class="block text-sm font-medium text-gray-700">

Name

</label>

<div class="mt-1">

<input

id="name"

name="name"

type="text"

autocomplete="username"

required

v-model="formData.name"

class="

appearance-none

block

w-full

px-3

py-2

border border-gray-300

rounded-md

shadow-sm

placeholder-gray-400

focus:outline-none focus:ring-indigo-500 focus:border-indigo-500

sm:text-sm

"

/>

</div>

</div>

<div>

<label for="room" class="block text-sm font-medium text-gray-700">

Room

</label>

<div class="mt-1">

<input

id="room"

name="room"

type="text"

required

disabled

v-model="formData.room"

class="

appearance-none

block

w-full

px-3

py-2

border border-gray-300

rounded-md

shadow-sm

placeholder-gray-400

focus:outline-none focus:ring-indigo-500 focus:border-indigo-500

sm:text-sm

disabled:cursor-not-allowed

"

/>

</div>

</div>

<div>

<button

type="submit"

:disabled="formData.name === '' || isLoading"

:class="{ 'cursor-not-allowed': isLoading }"

class="

w-full

flex

justify-center

py-2

px-4

border border-transparent

rounded-md

shadow-sm

text-sm

font-medium

text-white

bg-indigo-600

hover:bg-indigo-700

focus:outline-none

focus:ring-2

focus:ring-offset-2

focus:ring-indigo-500

"

>

<svg

class="animate-spin mr-3 h-5 w-5 text-white"

xmlns="http://www.w3.org/2000/svg"

fill="none"

viewBox="0 0 24 24"

v-if="isLoading"

>

<circle

class="opacity-25"

cx="12"

cy="12"

r="10"

stroke="currentColor"

stroke-width="4"

></circle>

<path

class="opacity-75"

fill="currentColor"

d="M4 12a8 8 0 018-8V0C5.373 0 0 5.373 0 12h4zm2 5.291A7.962 7.962 0 014 12H0c0 3.042 1.135 5.824 3 7.938l3-2.647z"

></path>

</svg>

{{ isLoading ? "Joining..." : "Join" }}

</button>

</div>

</form>

</div>

</div>

</template>

Create the component where the video streams will be displayed, named Conference.vue

The hmsStore, as I mentioned earlier, contains the various states provided by 100ms for a video chat.

The subscribe method provides a very easy way to get the value of the various states. All you need to do is subscribe to a state and attach a handler function to process the state changes from the given selector.

hmsStore.getState also accepts a state selector to get the value at a point in time. We'll be using it at places where reactivity is not required.

We use selectors to determine the audio and video states for the Local and Remote Peers.
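
To show the difference between subscribing and reading a value once, here is a toy store written in TypeScript. It is not the 100ms implementation and it simplifies details (for example, when exactly handlers fire); `TinyStore`, `DemoRoomState` and `selectDemoAudio` are hypothetical names that only mimic the subscribe(handler, selector) and getState(selector) pattern.

```typescript
// A toy store, NOT the 100ms implementation: it only illustrates the
// subscribe(handler, selector) / getState(selector) pattern.
type Selector<S, T> = (state: S) => T;

class TinyStore<S extends object> {
  private state: S;
  private listeners = new Set<(state: S) => void>();

  constructor(initial: S) {
    this.state = initial;
  }

  // Read a value once, at a point in time (no reactivity)
  getState<T>(selector: Selector<S, T>): T {
    return selector(this.state);
  }

  // Call `handler` whenever the selected value changes; returns a stop function
  subscribe<T>(handler: (value: T) => void, selector: Selector<S, T>): () => void {
    let previous = selector(this.state);
    const listener = (next: S): void => {
      const current = selector(next);
      if (current !== previous) {
        previous = current;
        handler(current);
      }
    };
    this.listeners.add(listener);
    return () => {
      this.listeners.delete(listener);
    };
  }

  setState(partial: Partial<S>): void {
    this.state = { ...this.state, ...partial };
    this.listeners.forEach((listener) => listener(this.state));
  }
}

interface DemoRoomState {
  isLocalAudioEnabled: boolean;
  isLocalVideoEnabled: boolean;
}

const selectDemoAudio = (s: DemoRoomState): boolean => s.isLocalAudioEnabled;

const demoStore = new TinyStore<DemoRoomState>({
  isLocalAudioEnabled: false,
  isLocalVideoEnabled: true,
});

const audioEvents: boolean[] = [];
const stopListening = demoStore.subscribe((enabled: boolean) => {
  audioEvents.push(enabled);
}, selectDemoAudio);

demoStore.setState({ isLocalAudioEnabled: true }); // handler receives true
demoStore.setState({ isLocalVideoEnabled: false }); // audio unchanged, handler not called
stopListening();
demoStore.setState({ isLocalAudioEnabled: false }); // no longer subscribed

console.log(audioEvents); // [ true ]
console.log(demoStore.getState(selectDemoAudio)); // false
```

The handler only runs when the selected slice changes, which is why subscribing per selector keeps UI updates narrow.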

Explanation of the various methods used:

- onAudioChange: A handler for when the local peer mutes/unmutes audio

- onVideoChange: A handler for when the local peer mutes/unmutes video

- onPeerAudioChange: A handler for when the remote peer mutes/unmutes audio

- onPeerVideoChange: A handler for when the remote peer mutes/unmutes video

- toggleAudio & toggleVideo: Function to mute/unmute local audio and video

- renderPeers: This is a handler that detects Peer addition and removal via the selectPeers selector. For every peer that connects, their video stream is displayed with the hmsActions.attachVideo method.

For a RemotePeer, we subscribe to their audio and video's muted states with the selectIsPeerAudioEnabled and selectIsPeerVideoEnabled selectors. The detected changes trigger the respective UI change.

// Add the following to src/components/Conference.vue

<script setup lang="ts">

import { ref, reactive, onUnmounted } from "vue";

import {

selectPeers,

HMSPeer,

HMSTrackID,

selectIsLocalAudioEnabled,

selectIsLocalVideoEnabled,

selectIsPeerAudioEnabled,

selectIsPeerVideoEnabled,

} from "@100mslive/hms-video-store";

import { hmsStore, hmsActions } from "../hms";

const videoRefs: any = reactive({});

const remotePeerProps: any = reactive({});

const allPeers = ref<HMSPeer[]>([]);

const isAudioEnabled = ref(hmsStore.getState(selectIsLocalAudioEnabled));

const isVideoEnabled = ref(hmsStore.getState(selectIsLocalVideoEnabled));

enum MediaState {

isAudioEnabled = "isAudioEnabled",

isVideoEnabled = "isVideoEnabled",

}

onUnmounted(() => {

if (allPeers.value.length) leaveMeeting();

});

const leaveMeeting = () => {

hmsActions.leave();

};

const onAudioChange = (newAudioState: boolean) => {

isAudioEnabled.value = newAudioState;

};

const onVideoChange = (newVideoState: boolean) => {

isVideoEnabled.value = newVideoState;

};

const onPeerAudioChange = (isEnabled: boolean, peerId: string) => {

if (videoRefs[peerId]) {

remotePeerProps[peerId][MediaState.isAudioEnabled] = isEnabled;

}

};

const onPeerVideoChange = (isEnabled: boolean, peerId: string) => {

if (videoRefs[peerId]) {

remotePeerProps[peerId][MediaState.isVideoEnabled] = isEnabled;

}

};

const renderPeers = (peers: HMSPeer[]) => {

allPeers.value = peers;

peers.forEach((peer: HMSPeer) => {

if (videoRefs[peer.id]) {

hmsActions.attachVideo(peer.videoTrack as HMSTrackID, videoRefs[peer.id]);

// If the peer is a remote peer, attach a listener to get video and audio states

if (!peer.isLocal) {

// Set up a property to track the audio and video states of the remote peer

if (!remotePeerProps[peer.id]) {

remotePeerProps[peer.id] = {};

}

remotePeerProps[peer.id][MediaState.isAudioEnabled] = hmsStore.getState(

selectIsPeerAudioEnabled(peer.id)

);

remotePeerProps[peer.id][MediaState.isVideoEnabled] = hmsStore.getState(

selectIsPeerVideoEnabled(peer.id)

);

// Subscribe to the audio and video changes of the remote peer

hmsStore.subscribe(

(isEnabled) => onPeerAudioChange(isEnabled, peer.id),

selectIsPeerAudioEnabled(peer.id)

);

hmsStore.subscribe(

(isEnabled) => onPeerVideoChange(isEnabled, peer.id),

selectIsPeerVideoEnabled(peer.id)

);

}

}

});

};

const toggleAudio = async () => {

const enabled = hmsStore.getState(selectIsLocalAudioEnabled);

await hmsActions.setLocalAudioEnabled(!enabled);

};

const toggleVideo = async () => {

const enabled = hmsStore.getState(selectIsLocalVideoEnabled);

await hmsActions.setLocalVideoEnabled(!enabled);

// rendering again is required for the local video to show after turning off

renderPeers(hmsStore.getState(selectPeers));

};

// HMS Listeners

hmsStore.subscribe(renderPeers, selectPeers);

hmsStore.subscribe(onAudioChange, selectIsLocalAudioEnabled);

hmsStore.subscribe(onVideoChange, selectIsLocalVideoEnabled);

</script>

<template>

<main class="mx-10 min-h-[80vh]">

<div class="grid grid-cols-2 gap-2 sm:grid-cols-3 lg:grid-cols-3 my-6">

<div v-for="peer in allPeers" :key="peer.id" class="relative">

<video

autoplay

:muted="peer.isLocal"

playsinline

class="h-full w-full object-cover"

:ref="

(el) => {

if (el) videoRefs[peer.id] = el;

}

"

></video>

<p

class="

flex

justify-center

items-center

py-1

px-2

text-sm

font-medium

bg-black bg-opacity-80

text-white

pointer-events-none

absolute

bottom-0

left-0

"

>

<span

class="inline-block w-6"

v-show="

(peer.isLocal && isAudioEnabled) ||

(!peer.isLocal &&

remotePeerProps?.[peer.id]?.[MediaState.isAudioEnabled])

"

>

<svg viewBox="0 0 32 32" xmlns="http://www.w3.org/2000/svg">

<path

stroke="#FFF"

fill="#FFF"

d="m23 14v3a7 7 0 0 1 -14 0v-3h-2v3a9 9 0 0 0 8 8.94v2.06h-4v2h10v-2h-4v-2.06a9 9 0 0 0 8-8.94v-3z"

/>

<path

stroke="#FFF"

fill="#FFF"

d="m16 22a5 5 0 0 0 5-5v-10a5 5 0 0 0 -10 0v10a5 5 0 0 0 5 5z"

/>

<path d="m0 0h32v32h-32z" fill="none" />

</svg>

</span>

<span

class="inline-block w-6"

v-show="

(peer.isLocal && !isAudioEnabled) ||

(!peer.isLocal &&

!remotePeerProps?.[peer.id]?.[MediaState.isAudioEnabled])

"

>

<svg viewBox="0 0 32 32" xmlns="http://www.w3.org/2000/svg">

<path

fill="#FFF"

d="m23 17a7 7 0 0 1 -11.73 5.14l1.42-1.41a5 5 0 0 0 8.31-3.73v-4.58l9-9-1.41-1.42-26.59 26.59 1.41 1.41 6.44-6.44a8.91 8.91 0 0 0 5.15 2.38v2.06h-4v2h10v-2h-4v-2.06a9 9 0 0 0 8-8.94v-3h-2z"

/>

<path

fill="#FFF"

d="m9 17.32c0-.11 0-.21 0-.32v-3h-2v3a9 9 0 0 0 .25 2.09z"

/>

<path fill="#FFF" d="m20.76 5.58a5 5 0 0 0 -9.76 1.42v8.34z" />

<path d="m0 0h32v32h-32z" fill="none" />

</svg>

</span>

<span class="inline-block">

{{ peer.isLocal ? `You (${peer.name})` : peer.name }}</span

>

</p>

<p

class="text-white text-center absolute top-1/2 right-0 left-0"

v-show="

(peer.isLocal && !isVideoEnabled) ||

(!peer.isLocal &&

!remotePeerProps?.[peer.id]?.[MediaState.isVideoEnabled])

"

>

Camera Off

</p>

</div>

</div>

<div

class="mx-auto mt-10 flex items-center justify-center"

v-if="allPeers.length"

>

<button

class="bg-teal-800 text-white rounded-md p-3 block"

@click="toggleAudio"

>

{{ isAudioEnabled ? "Mute" : "Unmute" }} Microphone

</button>

<button

class="bg-indigo-400 text-white rounded-md p-3 block mx-5"

@click="toggleVideo"

>

{{ isVideoEnabled ? "Mute" : "Unmute" }} Camera

</button>

<button

class="bg-rose-800 text-white rounded-md p-3 block"

@click="leaveMeeting"

>

Leave Meeting

</button>

</div>

<div v-else>

<p class="text-white text-center font-bold text-2xl">

Hold On!, Loading Video Tiles...

</p>

</div>

</main>

</template>
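
One thing to watch for in the component above: renderPeers runs every time the peer list changes, so the per-peer hmsStore.subscribe calls inside it may register the same handlers more than once. A possible way around that, sketched below, is to remember which peers already have listeners and to drop them when a peer leaves. The sketch assumes that subscribe returns a function that stops the subscription (check the 100ms SDK documentation for your version); `PeerSubscriptions`, `ensure` and `prune` are hypothetical names.

```typescript
// Hypothetical helper for tracking per-peer subscriptions; not part of the 100ms SDK.
type StopFn = () => void;

class PeerSubscriptions {
  private byPeer = new Map<string, StopFn[]>();

  // Register listeners for a peer only the first time we see it
  ensure(peerId: string, register: () => StopFn[]): void {
    if (!this.byPeer.has(peerId)) {
      this.byPeer.set(peerId, register());
    }
  }

  // Stop and forget listeners of peers that are no longer in the room
  prune(activePeerIds: string[]): void {
    const active = new Set(activePeerIds);
    Array.from(this.byPeer.keys()).forEach((peerId) => {
      if (!active.has(peerId)) {
        (this.byPeer.get(peerId) ?? []).forEach((stop) => stop());
        this.byPeer.delete(peerId);
      }
    });
  }

  get size(): number {
    return this.byPeer.size;
  }
}

// Usage with a fake register function that counts calls
let registrations = 0;
let stopped = 0;
const fakeRegister = (): StopFn[] => {
  registrations += 1;
  return [
    () => {
      stopped += 1;
    },
  ];
};

const tracker = new PeerSubscriptions();
tracker.ensure("peer-a", fakeRegister);
tracker.ensure("peer-a", fakeRegister); // ignored: peer-a is already tracked
tracker.ensure("peer-b", fakeRegister);
tracker.prune(["peer-b"]); // peer-a left the room

console.log(registrations, stopped, tracker.size); // 2 1 1
```

In renderPeers, the register callback would wrap the two hmsStore.subscribe calls for a remote peer and return whatever they return.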

Add the components to App.vue

We subscribe to the selectRoomStarted state to know when the join has completed and show the Conference component. If the room has not started, we'll show the Join component.

<script setup lang="ts">

import { ref } from "vue";

import { selectRoomStarted } from "@100mslive/hms-video-store";

import { hmsStore } from "./hms";

import Join from "./components/Join.vue";

import Conference from "./components/Conference.vue";

const isConnected = ref(false);

const onConnection = (connectionState: boolean | undefined) => {

isConnected.value = Boolean(connectionState);

};

hmsStore.subscribe(onConnection, selectRoomStarted);

</script>

<template>

<div class="min-h-full flex flex-col justify-center py-12 sm:px-6 lg:px-8">

<div class="sm:mx-auto sm:w-full sm:max-w-md">

<img

class="mx-auto block h-20 w-auto"

src="https://www.100ms.live/assets/logo.svg"

alt="100ms"

/>

<h2 class="mt-6 text-center text-3xl font-extrabold text-white">

Kofi Mupati Video Call Meeting

</h2>

</div>

<Conference v-if="isConnected" />

<Join v-else />

</div>

</template>

Add Environment variables

Update the following environment variables in the .env file.

Note that I've set a default room name to prevent room creation every time we try to join a video chat.

For other people to join the video chat, they must use the same room name.

ROOM_URL=https://prod-in2.100ms.live/api/v2/rooms

APP_ACCESS_KEY=your_hms_app_access_key_from_dashboard

APP_SECRET=your_hms_app_secret_from_dashboard

VITE_APP_DEFAULT_ROOM=kofi_mupati_secret_room
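
Vite only exposes variables prefixed with VITE_ to client code, and in Join.vue the template literal around defaultRoomName turns a missing variable into the string "undefined". A small guard like the following sketch avoids that; `resolveDefaultRoom` and the fallback name "demo-room" are hypothetical and only meant as an illustration.

```typescript
// Hypothetical helper: pick the room name from the env value, falling back to a default
// when the variable is missing or blank.
function resolveDefaultRoom(
  envValue: string | undefined,
  fallbackRoom: string = "demo-room"
): string {
  const trimmed = (envValue ?? "").trim();
  return trimmed.length > 0 ? trimmed : fallbackRoom;
}

// In Join.vue this could be called as:
//   resolveDefaultRoom(import.meta.env.VITE_APP_DEFAULT_ROOM)
console.log(resolveDefaultRoom("kofi_mupati_secret_room")); // kofi_mupati_secret_room
console.log(resolveDefaultRoom(undefined)); // demo-room
```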

Testing the Application

- Run the application locally with the Netlify-cli. The application will open on the following port: http://localhost:8888/

ntl dev

Open two browser windows, one in regular mode and the other in incognito mode, and open the application URL in both.

Enter your username and join the video chat.

Visual learners can watch the application demonstration on YouTube

Conclusion

You can find the complete project repository here.

For me, the ability to simply subscribe to specific states makes the 100ms SDKs very easy to use. The type definitions are great, and the documentation is simple and provides a very good developer experience.

I hope this tutorial is a very welcoming introduction to the 100ms.live platform and I look forward to the amazing applications you are going to build.


Nilay Jayswal | Sciencx (2022-01-25T12:43:35+00:00) Building Video Chat App with VueJs and Golang. Retrieved from https://www.scien.cx/2022/01/25/building-video-chat-app-with-vuejs-and-golang/
