This content originally appeared on DEV Community and was authored by Kevin Wan

Did you feel the same?

When you are developing backend services, do you often have the following questions?

- How many connections does the database connection pool have?

- How long does the lifetime of each connection last?

- When a connection is abnormally disconnected, is it the server side or the client side that actively disconnected?

- Does the underlying library have KeepAlive requests when there are no requests for a long time?

Handling complex network situations is always one of the key points and difficulties of backend development. Are you also suffering from debugging various network situations?

Why I wrote tproxy?

When I was doing backend development and writing go-zero, I would often need to monitor network connections and analyze the request packets. For example.

- Analyzing when a gRPC connection connects and reconnects, and adjusting various parameters accordingly, e.g. MaxConnectionIdle

- Analyze the MySQL connection pool, how many connections are currently available, and what the connection lifetime policy is

- It can also be used to observe and analyze any TCP connection to see if the server is actively disconnecting or the client is actively disconnecting, etc.

Installation of tproxy

$ GOPROXY=https://goproxy.cn/,direct go install github.com/kevwan/tproxy@latest

Or use the docker image: ``Shell

`Shell

$ docker run --rm -it -p <listen-port>:<listen-port> -p <remote-port>:<remote-port> kevinwan/tproxy:v1 tproxy -l 0.0.0.0 -p <listen-port> -r host. docker.internal:<remote-port>

`

arm64 system:

`Shell

$ docker run --rm -it -p <listen-port>:<listen-port> -p <remote-port>:<remote-port> kevinwan/tproxy:v1-arm64 tproxy -l 0.0.0.0 -p <listen-port> - r host.docker.internal:<remote-port>

`

Usage of tproxy

`Shell

$ tproxy --help

Usage of tproxy:

-d duration

the delay to relay packets

-l string

Local address to listen on (default "localhost")

-p int

Local port to listen on

-q Quiet mode, only prints connection open/close and stats, default false

-r string

Remote address (host:port) to connect

-t string

The type of protocol, currently support grpc

`

Analyze gRPC connections

`Shell

tproxy -p 8088 -r localhost:8081 -t grpc -d 100ms

`

- Listening on localhost and port 8088

- Redirect requests to

localhost:8081 - Analyze packet format as gRPC

- Packet delay of 100ms

where we can see the initialization and back and forth of a request from gRPC, and we can see the first request with the stream id as 1.

For example, gRPC has a MaxConnectionIdle parameter that sets how long the connection will be closed after idle, and we can directly observe that the server will send an http2 GoAway packet after the time is up.

For example, if I set MaxConnectioinIdle to 5 minutes, the connection is automatically closed after 5 minutes of no requests, and then a new connection is created.

Analyzing MySQL Connections

Let's analyze the impact of MySQL connection pooling settings on the connection pool. For example, if I set the parameters to

`Go

maxIdleConns = 5

maxOpenConns = 8

maxLifetime = time.Minute

...

conn.SetMaxIdleConns(maxIdleConns)

conn.SetMaxOpenConns(maxOpenConns)

conn.SetConnMaxLifetime(maxLifetime)

`

We set MaxIdleConns and MaxOpenConns to different values, then we do a benchmark test with hey

`Shell

hey -c 10 -z 10s "http://localhost:8888/lookup?url=go-zero.dev"

`

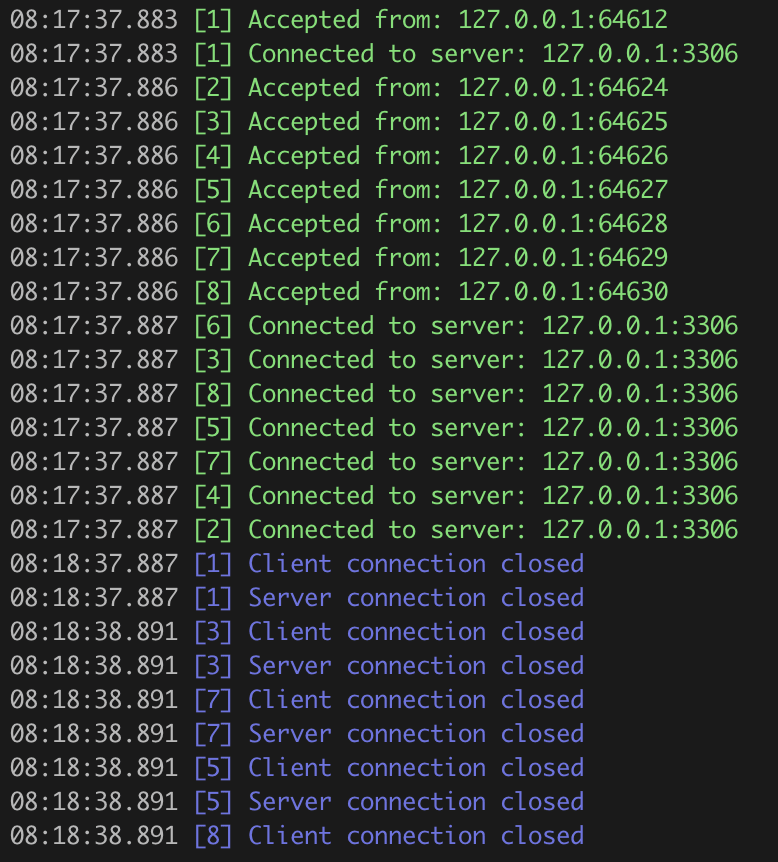

We did a benchmark test with a concurrency of 10QPS and a duration of 10 seconds, and the connection result is as follows.

As we can see.

- 2000+ connections were established in 10 seconds

- The service constantly closing existing connections and reopening new ones

- Each time the connection is used and put back, it may exceed MaxIdleConns, and then the connection will be closed

- When a new request comes to get the connection, it is found that the number of connections is less than MaxOpenConns, but there are no more connections available, so a new connection is created.

This is the reason why we often see a lot of TIME_WAIT connections for MySQL.

Then we set MaxIdleConns and MaxOpenConns to the same value and do the same benchmark test again:

We can see that.

- 8 connections have been maintained constant

- After a minute of idle (ConnMaxLifetime), all connections are closed

The ConnMaxLifetime here must be set smaller than the wait_timeout, you can get the wait_timeout value from the following method.

I recommend setting a value of less than 5 minutes, because some switches will clean up idle connections for 5 minutes, for example, when we are doing social apps, the heartbeat packets usually do not exceed 5 minutes. The specific reasons can be seen in

https://github.com/zeromicro/go-zero/blob/master/core/stores/sqlx/sqlmanager.go#L65

There is a paragraph in issue #257 of go-sql-driver that also talks about ConnMaxLifetime, as follows.

14400 sec is too long. one minutes is enough for most use cases.

Even if you configure entire your DC (OS, switch, router, etc...) , TCP connection may be lost from various reasons. (bugs in router firmware, unstable power voltage, electric nose, etc...)

So if you don't know how to set MySQL connection pool parameters, you can refer to go-zero's settings.

Also, ConnMaxIdleTime has no effect on the above benchmark test results, so you don't really need to set it.

If you have any questions about the above settings, or if you think something is wrong, please feel free to leave comments and let's discuss.

Project address

tproxy: https://github.com/kevwan/tproxy

go-zero: https://github.com/zeromicro/go-zero

Welcome to use and star to support us!

This content originally appeared on DEV Community and was authored by Kevin Wan

Kevin Wan | Sciencx (2022-06-21T05:12:21+00:00) Understanding and tuning parameters of connection pools. Retrieved from https://www.scien.cx/2022/06/21/understanding-and-tuning-parameters-of-connection-pools/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.