This content originally appeared on Level Up Coding - Medium and was authored by Anas Anjaria

Databases

Incrementally rolling-out migration from MongoDB to PostgreSQL

There could be various reasons to move from one database to another—for instance, cost reduction, maintenance overhead, performance optimization, etc.

Migrating a smoothly running production database is always challenging. Challenges may include but are not limited to the following:

- Enabling a new database to work under the same production load.

- To have the better or at least the performance as that of the currently running production database.

This post aims to explain a strategy & process to facilitate migration in our production system.

Let’s assume we would like to migrate from MongoDB to PostgreSQL.

High-level Overview

The strategy is:

Migrate a database incrementally

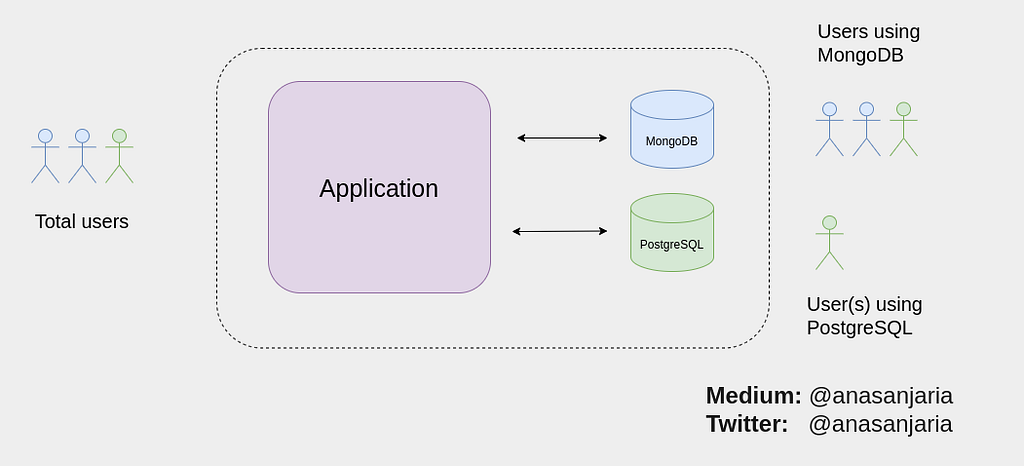

It means that we start using PostgresSQL for a small percentage of users and observe the performance & resource utilization of our system.

When it looks good and working as expected, we will incrementally increase the percentage of users for PostgreSQLdatabase. Otherwise, we need to troubleshoot the reason(s) (discussed later in this post under Troubleshooting section).

As you may have noticed that the percentage of a user(s) using PostgreSQL is also using MongoDB.

This redundancy is a design decision. We cannot fully rely on our new database system until complete migration.

So, the migration process is divided into two parts:

Stabilize writing operations first

This enables us to have the same data in both databases.

- Incrementally increase the percentage of users using a new database.

- It’s okay to have failures at the PostgreSQL side. But don’t let the whole incoming HTTP request and/or process fail due to this failure.

- Focus on stabilizing the write operations.

- Data will be available on both databases as soon as write operations are stabilized.

- Now, fail the whole incoming HTTP request and/or process when failures occur in any of the databases. This enables us to act accordingly upon failures.

Stabilize reading now

As data is now available on both databases, we will enable a small portion of users to read from PostgreSQL.

We will follow the same approach as mentioned above in the Stabilize writing operation first section but now it applies to reading.

Proof of concept

The source code is written in Scala: https://github.com/anasanjaria/incremental-db-service-migration

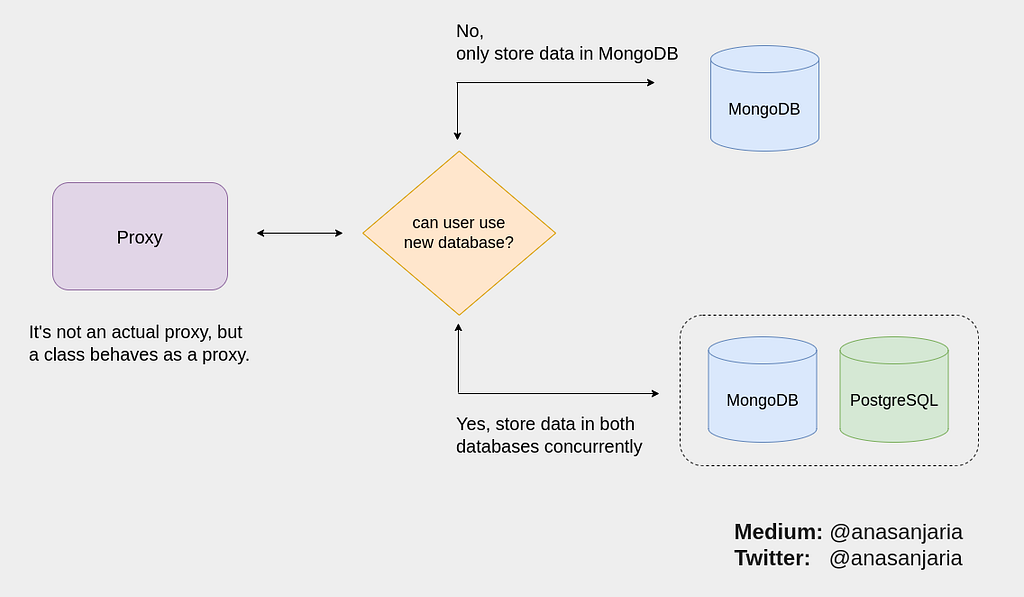

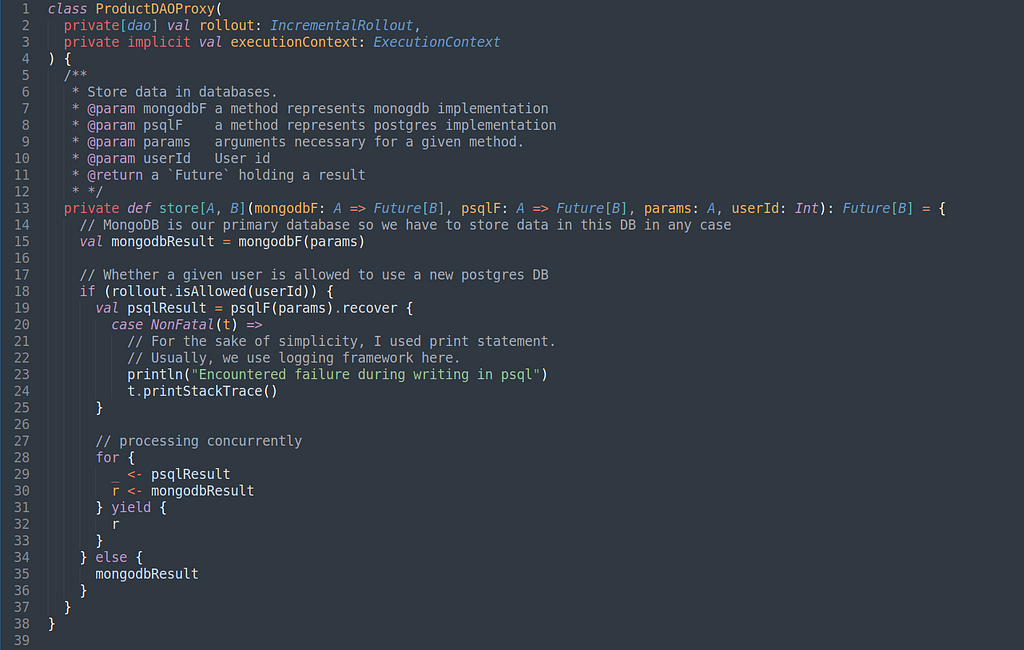

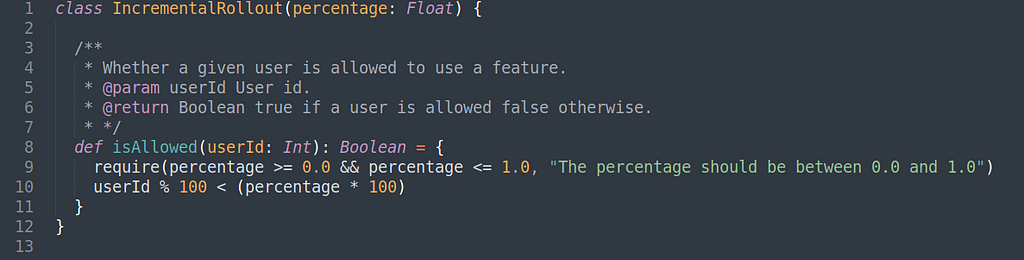

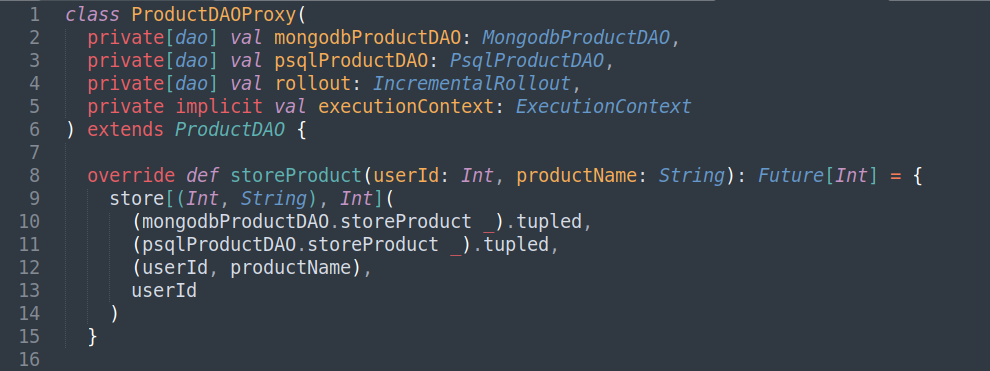

We will implement this concept using a proxy. However, it’s not an actual proxy, but a class behaves as a proxy as shown in the following piece of code.

A rollout argument in the proxy determines whether a user is allowed to use a new database or not.

Please note that it's just a very simple and basic implementation. You can adjust it as per your need.

As we always communicate with our databases using DAO hence proxy needs DAOs to communicate with respective databases.

MongodbProductDAO & PsqlProductDAO manage products CRUD operations for mongodb & postgesql respectively.

storeProduct stores a product name in respective databases.

Unit tests

I have written unit tests explaining the high-level concepts mentioned in this post earlier. Have a look at the proxy test here

ProductDAOProxy

should fail whole product saving such that user can use both databases

- when it fails to store product in a mongodb

should not fail whole product saving such that user can use both databases

- when it fails to store product in a postgres

should only store products in monogdb when user is not allowed to use postgres

We would like to fail the whole operation if there is a failure at the primary database but we should not fail in the case of a secondary database.

Similarly, unit tests for an incremental rollout concept can be found here.

IncrementalRollout

should allow a user to use a feature

- when a feature is enabled completely for all users

- when a feature is enabled 50%

should not allow a user to use a feature

- when a feature is disabled completely for all users

- when a feature is enabled 50%

Troubleshooting

It’s very likely that our new production database will not work as expected. Maybe it will have high resource consumption (memory or CPU) or a query takes too long to respond to.

Both of these problems are an indication of query optimization. Maybe we need to tune our new database by adding indices.

For a precise reason, we need to enable logging for slow queries. And this can be done by adjusting the Postgres configuration. Here is a nice tutorial on this topic — 3 WAYS TO DETECT SLOW QUERIES IN POSTGRESQL.

Once you determined your slow query, you can check the query execution plan and improve it accordingly.

Thanks for reading.

If you have any questions, please feel free to ask me.

If you enjoy this post, you might also like the following post.

Want to connect?

Facebook | LinkedIn | Twitter

Subscribe to get my work directly into your inbox.

https://medium.com/subscribe/@anasanjaria

Strategy to Migrate from one Database to Another Incrementally was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

This content originally appeared on Level Up Coding - Medium and was authored by Anas Anjaria

Anas Anjaria | Sciencx (2022-10-23T16:28:46+00:00) Strategy to Migrate from one Database to Another Incrementally. Retrieved from https://www.scien.cx/2022/10/23/strategy-to-migrate-from-one-database-to-another-incrementally/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.