This content originally appeared on Level Up Coding - Medium and was authored by Mehran

One of the most useful features of Node.js is streaming, which pays off when you deal with a big file. In this post, I will show you how to stream a big JSON file from an S3 bucket and perform specific actions while streaming it.

Here is the TypeScript module that I will explain in detail.

I used TypeScript to make the code clearer, but of course, you can use vanilla JavaScript.

Scenario

Imagine a backend service that receives events from various sources and stores them as a gzipped JSON file in an S3 bucket. This is a pretty standard setup, and a good example is user events collected from web and mobile applications.

Let's assume that you want to look into the collected events, filter those named cancelled_purchase, and store them separately in a Redshift or Postgres table that the data team can access later.

You have two options:

- Download the files from the S3 bucket, unzip them, read each file separately, then filter and process the cancelled_purchase events.

- Unzip, filter, and process the file while it is streamed from the S3 bucket.

The first approach needs local storage and most probably a lot of processing power and RAM; you also have to clean up after you're done with the file.

The second approach is cleaner for a few reasons:

- The service uses a roughly constant amount of memory at any given time, no matter how big the file is, because only a small chunk of it is held in memory at once.

- There is no need for cleanup as no physical file is downloaded.

Let's jump to the code to understand it better.

Here is the list of npm packages needed for this project.

- aws-sdk: used to communicate with AWS, and specifically S3 in our case.

- jsonstream: used to access a stream of JSON.

- zlib: used to unzip and stream a file.

- rxjs: used to perform actions like filter and map on a stream of JSON.

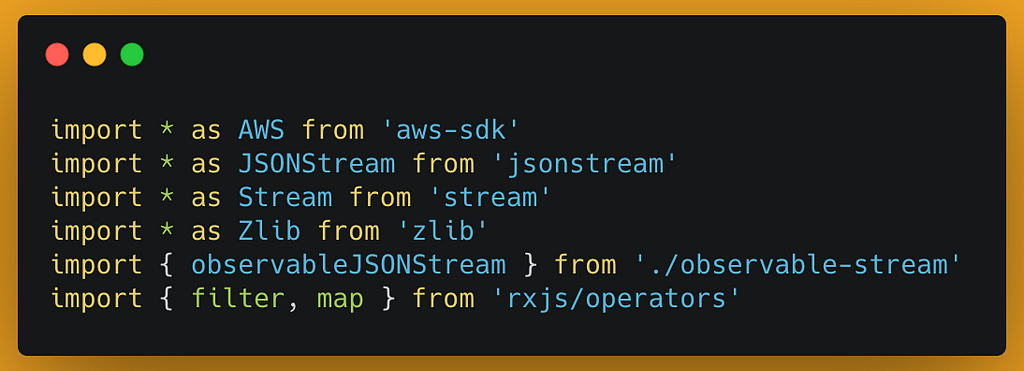

Importing npm packages

Here is the list of packages you have to import

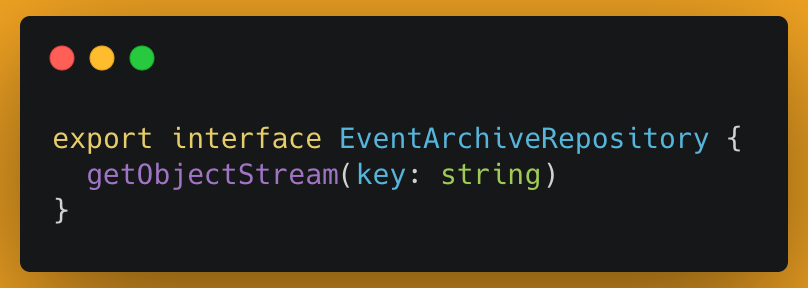

Interface for an exported function

It is always good to create an interface that defines the exported module and lets the client know the contract in advance.
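As a rough illustration of such a contract, here is a minimal sketch. The interface name `ObjectStreamer`, the method signature, and the in-memory stand-in are all assumptions made for this example, not the module's real API.

```typescript
import { Readable } from "node:stream";

// Hypothetical contract; the names are illustrative, not the author's module.
interface ObjectStreamer {
  // Returns a readable stream for the object stored under bucket/key.
  getObjectStream(bucket: string, key: string): Readable;
}

// An in-memory stand-in that satisfies the same contract, handy for tests.
const inMemoryStreamer: ObjectStreamer = {
  getObjectStream: (bucket, key) => Readable.from([`${bucket}/${key}`]),
};
```

Because callers depend only on the interface, an S3-backed implementation and a test double like this one can be swapped without touching the calling code.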

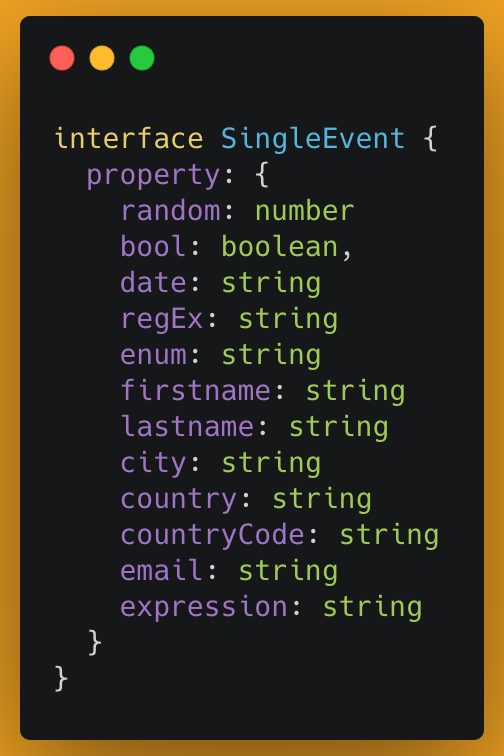

Define a type for the expected event

As explained earlier, the service streams an array of events stored as a gzip file in an S3 bucket.

A type for the event makes accessing the event properties easier. This type is not mandatory if you decide to go with vanilla JavaScript.
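A sketch of what such a type might look like is shown here. Only the event name cancelled_purchase and the `random` property appear in this post; the `payload` field and the `isUserEvent` guard are assumptions added for illustration.

```typescript
// Hypothetical event shape; `payload` is an illustrative extra field.
type UserEvent = {
  name: string;
  random: number;
  payload?: Record<string, unknown>;
};

// Runtime guard: parsed JSON is untyped, so check it before trusting it.
function isUserEvent(value: unknown): value is UserEvent {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate["name"] === "string" && typeof candidate["random"] === "number";
}
```

A guard like this is optional, but it keeps malformed records from reaching later stages of the pipeline.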

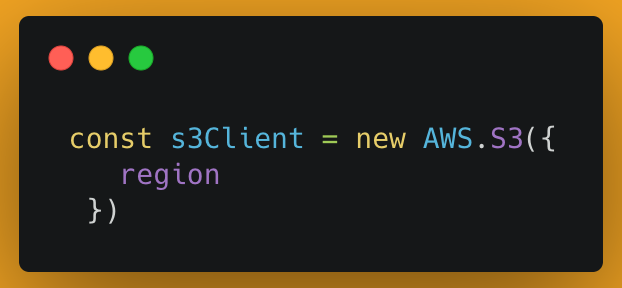

S3 Client

Create an S3 client that communicates with AWS S3

getObjectStream

getObjectStream is the function that holds the streaming logic.

Stream events

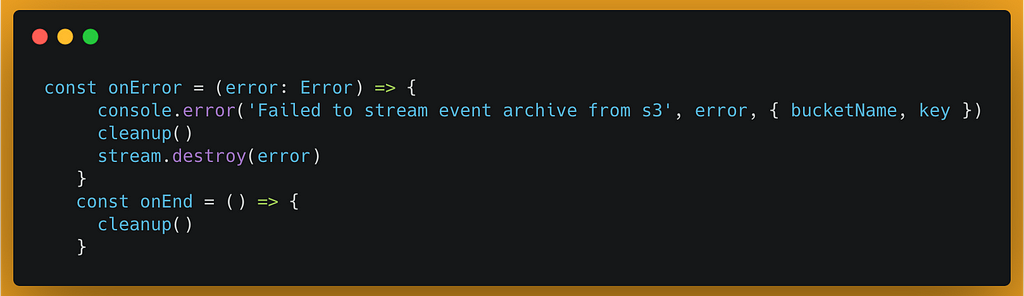

The stream is an ongoing process, and it could be interrupted by many events, like an error. A few functions must be created to respond to these events.
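One possible way to wire up such functions is sketched below. The handler names and the `attachHandlers` helper are assumptions for this example; the events themselves (`error`, `end`, `close`) are standard Node.js stream events.

```typescript
import { Readable } from "node:stream";

// Hypothetical handler bundle; the post only says such functions are needed.
type StreamHandlers = {
  onError: (error: Error) => void;
  onEnd: () => void;
  onClose: () => void;
};

// Attaches the handlers to any Readable, such as one returned by the S3 client.
function attachHandlers(stream: Readable, handlers: StreamHandlers): Readable {
  return stream
    .on("error", handlers.onError) // something went wrong mid-stream
    .on("end", handlers.onEnd) // no more data will arrive
    .on("close", handlers.onClose); // underlying resources were released
}
```

Registering an `error` handler matters most: a stream that emits `error` with no listener attached crashes the process.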

Start the stream

The S3 client can stream a file from the S3 bucket with a single call.

Once the stream is ready, you can pipe it, and in each pipe, you could perform a specific action.
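A minimal sketch of starting the stream follows, assuming the v2 aws-sdk client, where `getObject(params).createReadStream()` returns a Node.js Readable. To keep the example self-contained, the client is described by a small structural type, `S3Like`, which is an assumption of this sketch; the bucket and key names in the comment are placeholders.

```typescript
import { Readable } from "node:stream";

// Structural stand-in for the one aws-sdk v2 call used here. An instance of
// the real client (`new S3()` from "aws-sdk") is expected to fit this shape.
interface S3Like {
  getObject(params: { Bucket: string; Key: string }): { createReadStream(): Readable };
}

// Starts streaming the object; no data is buffered up front.
function getObjectStream(s3: S3Like, bucket: string, key: string): Readable {
  return s3.getObject({ Bucket: bucket, Key: key }).createReadStream();
}

// Usage with the real client (placeholder names):
//   getObjectStream(new S3(), "my-bucket", "events.json.gz").pipe(nextStep);
```

Taking the client as a parameter also makes the function easy to exercise with a fake that returns an in-memory stream.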

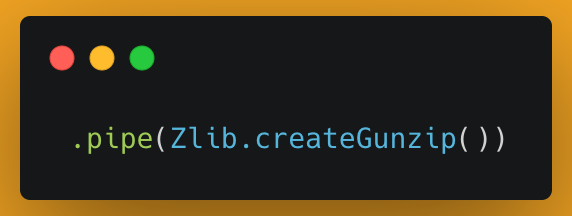

Unzip the file

The first action to perform is unzipping the file's content as it arrives; the zlib package is used for this purpose.
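The unzip step can be sketched with `createGunzip` from Node's zlib module. The helper names `gunzipStream` and `readAll` are assumptions for this example, and the in-memory input stands in for the S3 stream.

```typescript
import { Readable } from "node:stream";
import { createGunzip, gzipSync } from "node:zlib";

// Wraps a gzip-compressed byte stream and yields the decompressed bytes.
function gunzipStream(source: Readable): Readable {
  const gunzip = createGunzip();
  // pipe() does not forward errors, so pass source failures along manually.
  source.on("error", (error) => gunzip.destroy(error));
  return source.pipe(gunzip);
}

// Collects a stream into a string. For demos only: it buffers everything.
async function readAll(stream: Readable): Promise<string> {
  const chunks: Buffer[] = [];
  for await (const chunk of stream) chunks.push(Buffer.from(chunk));
  return Buffer.concat(chunks).toString("utf8");
}

// Usage: compress a small JSON array in memory, then stream it back out.
const compressed = gzipSync(JSON.stringify([{ name: "cancelled_purchase" }]));
const roundTrip = readAll(gunzipStream(Readable.from([compressed])));
```

In the real pipeline the decompressed stream would be piped onward rather than collected, so memory use stays bounded.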

Access to the right property of the event

Events are stored as an array, and JSONStream makes it possible to access each item of the array as it is parsed. JSONStream does more than this, and you can read about it in its documentation.
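Here is a small sketch of that step, assuming the package is installed under the name `JSONStream` and that the events sit in a top-level array, so the pattern `"*"` selects each element. The helper name `parseArrayItems` is made up for this example.

```typescript
import { Readable } from "node:stream";
import * as JSONStream from "JSONStream";

// Emits one "data" event per element of the top-level JSON array.
function parseArrayItems(source: Readable): NodeJS.ReadableStream {
  // "*" matches every element of the root array, one at a time.
  return source.pipe(JSONStream.parse("*"));
}

// Usage: the JSON text may arrive split across arbitrary chunk boundaries.
const items: unknown[] = [];
const parsed = parseArrayItems(Readable.from(['[{"id":1},', '{"id":2}]']));
parsed.on("data", (item: unknown) => items.push(item));
```

Each item reaches the listener as an already-parsed object, so the full array never has to be held in memory.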

Creating Observable Stream

To benefit from the functionality that rxjs offers, the standard stream must be converted to an observable stream. The module below helps with this.

This module accepts a Readable stream and then returns an Rx.Observable stream.
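One way such a conversion could look is sketched below. It is not the original observableJSONStream module; the function name `observableFromStream` and the choice to destroy the stream on teardown are assumptions of this example.

```typescript
import { Readable } from "node:stream";
import { Observable } from "rxjs";

// Bridges Node stream events to the Observable contract:
// "data" -> next, "error" -> error, "end" -> complete.
function observableFromStream<T>(stream: Readable): Observable<T> {
  return new Observable<T>((subscriber) => {
    stream
      .on("data", (item: T) => subscriber.next(item))
      .on("error", (error: Error) => subscriber.error(error))
      .on("end", () => subscriber.complete());

    // Teardown: stop reading when the subscriber unsubscribes or finishes.
    return () => {
      stream.destroy();
    };
  });
}

// Usage with an in-memory object stream standing in for the parsed events.
const numbers$ = observableFromStream<number>(Readable.from([1, 2, 3]));
```

The stream only starts flowing once something subscribes, because the `data` listener is attached inside the subscribe callback.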

Use the Observable stream

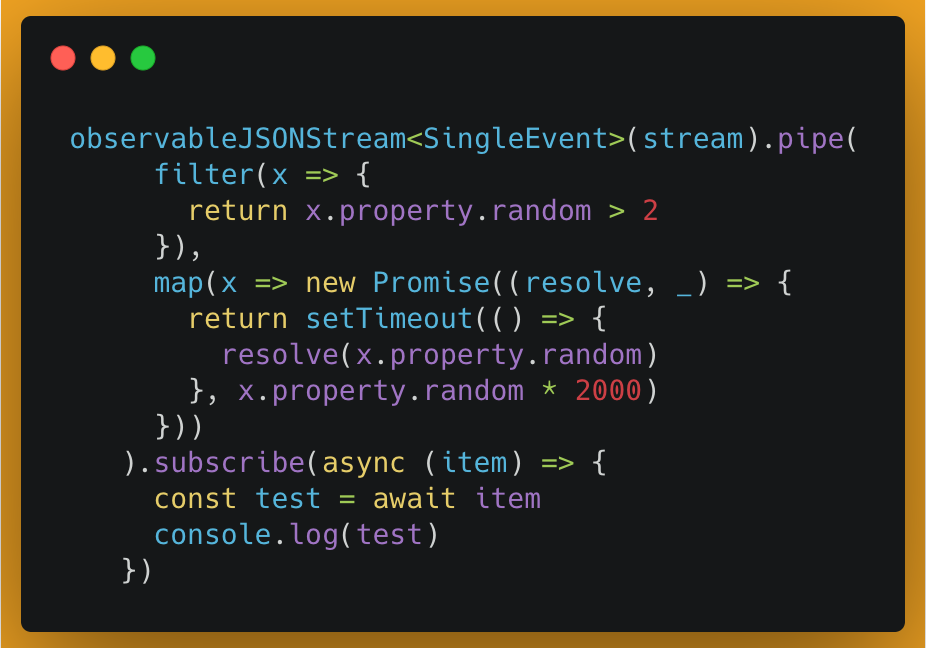

The code below uses the observableJSONStream module to create an observable stream.

Functions like filter or map can be used after converting a stream to an observable.

Filter

The filter function works like the JavaScript array filter; the main difference is that the observable filter operates on a stream of data as items arrive, which is not possible with a standard filter. This example uses the filter function to select events by their `random` property, checking whether it is smaller than 3.

Map

Maybe the most interesting part of this module is the map function. It is similar to the JavaScript map and, like the observable filter, it works on a stream.

The map function is the best place for performing an asynchronous action like calling an API per event.

subscribe

As with any other observable, a subscription is needed to get the final result. In this example, the subscriber receives the promise returned by the map function.
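The filter, map, and subscribe steps can be sketched together as follows. The sample events, the `sendToApi` function, and the decision to keep events whose `random` value is below 3 are assumptions for illustration; in the real pipeline the source would be the observable built from the S3 stream.

```typescript
import { from } from "rxjs";
import { filter, map } from "rxjs/operators";

type UserEvent = { name: string; random: number };

// Hypothetical stand-in for a per-event API call.
async function sendToApi(event: UserEvent): Promise<string> {
  return `stored ${event.name} (${event.random})`;
}

const sample: UserEvent[] = [
  { name: "cancelled_purchase", random: 1 },
  { name: "cancelled_purchase", random: 5 },
  { name: "page_view", random: 2 },
];

const pending: Promise<string>[] = [];

from(sample)
  .pipe(
    // Assumption: keep only events whose `random` value is below 3.
    filter((event) => event.random < 3),
    // map does not await; each event becomes a promise for its API result.
    map((event) => sendToApi(event)),
  )
  .subscribe({
    next: (result) => pending.push(result),
    error: (error) => console.error("pipeline failed:", error),
    complete: () => console.log("all events dispatched"),
  });
```

Since map does not wait for each promise, several calls can be in flight at once. If that is a concern, rxjs also provides `mergeMap`, which accepts a promise-returning function, emits the resolved values, and takes an optional concurrency limit.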


Streaming gzip JSON file from S3. was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Mehran | Sciencx (2023-04-18T23:05:41+00:00) Streaming gzip JSON file from S3.. Retrieved from https://www.scien.cx/2023/04/18/streaming-gzip-json-file-from-s3/
