This content originally appeared on DEV Community and was authored by Athreya aka Maneshwar

We're a lean team of 10 managing a relatively simple infrastructure.

Our setup includes a Kubernetes master node running v1.24.4, two worker nodes running v1.25.1, and a self-hosted GitLab for version control.

Our CI/CD pipeline relies on GitLab runners to build images and deploy Docker containers using Kubernetes.

Kubernetes: The Goliath We Couldn't Tame

For a while, our infrastructure was running smoothly with eight services deployed across our Kubernetes cluster.

However, a few months ago, we hit a snag.

Nodes became unreachable, deployments stalled, and pods started crashing.

This instability diverted our energy into firefighting instead of building our product.

As a temporary fix, we decided to host four of our deployments on a separate node using Docker.

This provided some stability, but the underlying issue persisted: the nodes' connections kept going offline.

Drowning in YAML: Our Kubernetes Nightmare

Maintaining Kubernetes for our small team felt manageable at first.

Helm templates streamlined our deployments, but downtime issues persisted.

Over time, though, it became increasingly burdensome for a team our size.

The constant troubleshooting and firefighting were taking a toll on our productivity.

It felt like we were spending more time managing the infrastructure than building our product.

Nomad: The Oasis in Our Infrastructure Desert


After careful consideration, we decided to explore Nomad.

Large organizations like Cloudflare and Trivago have been running Nomad for some time now.

Their endorsement of Nomad's simplicity and reduced operational overhead increased our interest.

We were eager to explore how this platform could benefit our smaller team.

What we wanted was something like a supercharged pm2: multi-node, with good support for both Docker containers and plain binaries.

Nomad seemed like the perfect fit, so we decided to give it a shot.

Our next steps involve diving into Nomad's capabilities and potentially conducting a proof of concept to assess its suitability for our environment. Before that, let's look at the key differences between Kubernetes and Nomad.

Kubernetes vs. Nomad: A Clash of Titans

Kubernetes is like a big, complex city. It's got everything, but it can be hard to navigate and manage. It is a collection of many services and tools working together.

Nomad is more like a cozy town. It's simpler, easier to get around, and still gets the job done. It is a single binary: you run it and you're done.

Here's the breakdown:

- Simplicity: Nomad wins. It's like comparing a bike to a car.

- Nomad is simple to learn and use, making it accessible even for teams with limited experience in managing complex container orchestration systems.

- Flexibility: Nomad can handle different types of workloads, while Kubernetes is mostly focused on containers.

- It can schedule, deploy, and manage various workload types (virtualized, containerized, and standalone applications), including both Windows- and Linux-based containers.

- Consistency: Nomad is the same everywhere, while Kubernetes can vary depending on how you set it up.

- Setting up Kubernetes in production can be complex and resource-intensive. Kubernetes, while versatile, can introduce complexities due to its various deployment options (like minikube, kubeadm, and k3s). This can lead to differences in setup and management.

- Nomad stands out with its consistent deployment process. As a single binary, it works seamlessly across different environments, from development to production. This unified approach simplifies management and reduces potential issues.

- Scaling: Both can handle big jobs, but Nomad seems to do it more smoothly.

According to Kubernetes documentation, it supports clusters with up to 5,000 nodes and 300,000 total containers. However, as the environment grows, managing the system at scale can become increasingly challenging.

Nomad is built for scale. It effortlessly handles tens of thousands of nodes and can be spread across multiple regions without added complexity. Rigorous testing, including the 2 million container challenge, has proven Nomad's ability to manage massive workloads efficiently.

So, if you want something easy to manage and versatile, Nomad might be your jam.

But if you need a full-featured platform and don't mind the complexity, Kubernetes could be the way to go.

Image source: This image is extracted from a presentation included in the Internet Archive article "Migrating from Kubernetes to Nomad and Consul" which details the migration process.

Nomad has a pretty solid UI for real-time insights.

From YAML to HCL: A Developer-Friendly Shift

Thankfully, getting started with Nomad was a breeze.

In Kubernetes, we define deployments via YAML files, whereas Nomad uses HCL (HashiCorp Configuration Language).

HCL, whose reference implementation is written in Go, proved to be surprisingly user-friendly for developers like ourselves.

We were able to quickly grasp the syntax and start building deployments with minimal learning curve.

Nomad 101: Your Quick Start Guide

1. Installing Nomad for Production

I've set up Nomad with a single server and multiple clients.

If you wish to deploy services on the server machine as well, you can register it as a client.

This way, one node can act as a coordinator/leader while also deploying services, making efficient use of compute resources.
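As a rough illustration of that combined setup, an agent configuration might look like the sketch below. The file path, `data_dir`, and `bind_addr` values are assumptions for illustration, not our actual settings. HashiCorp's documentation generally advises against running the server and client roles on the same node in production, so treat this as a small-cluster convenience.

```hcl
# Hypothetical /etc/nomad.d/nomad.hcl for a node acting as both server and client.
# Paths and addresses below are illustrative assumptions.
datacenter = "dc1"
data_dir   = "/opt/nomad/data"
bind_addr  = "0.0.0.0"

server {
  enabled          = true
  bootstrap_expect = 1 # a single server, matching the setup described above
}

client {
  enabled = true # lets this same node receive job allocations
}
```

With only one server there is no redundancy for the scheduler state, which is a trade-off to weigh before relying on this layout.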

The official HashiCorp installation instructions for Linux are clear and easy to follow.

We found them to be much simpler than the sometimes cumbersome setup process experienced with Kubernetes years earlier.

Note: To run your services that depend on Docker using Nomad, Docker must be installed on the clients.

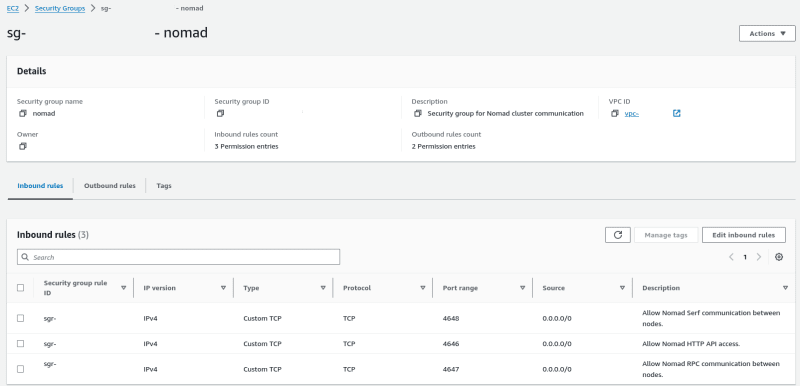

2. Exposing Nomad Ports

To ensure your Nomad servers and clients can communicate (join), we need to open specific ports on your network.

Create Security Group

A security group acts as a firewall, controlling incoming and outgoing traffic for your instances.

In our AWS setup, we'll create a security group to allow access to the following ports:

- Port 4648: Used for Serf (gossip) communication between Nomad servers. Both a TCP and a UDP listener are exposed on this port. This protocol is responsible for cluster membership and failure detection.

- Port 4647: Dedicated to Nomad RPC communication between nodes. Servers advertise this address so that Nomad clients can connect to them for RPC, including from behind a NAT gateway, so it must be reachable by all Nomad client nodes. When an RPC advertise address is set, the Nomad servers use the advertise.serf address for RPC connections amongst themselves. This is the primary channel for task scheduling and status updates.

- Port 4646: Serves the HTTP API, which the Nomad CLI and the web UI use to talk to agents. The HTTP server binds to this port, and the agent advertises it for the HTTP interface.
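For reference, these three ports are Nomad's defaults, and they correspond to the `ports` and `advertise` blocks of the agent configuration. The sketch below spells them out explicitly; the IP address `10.0.0.10` is a hypothetical private address, not one from our cluster.

```hcl
# Illustrative agent configuration fragment; 10.0.0.10 is a placeholder address.
ports {
  http = 4646 # HTTP API and web UI
  rpc  = 4647 # RPC between clients and servers
  serf = 4648 # gossip between servers (TCP and UDP)
}

advertise {
  http = "10.0.0.10"
  rpc  = "10.0.0.10"
  serf = "10.0.0.10"
}
```

If you leave these blocks out, Nomad falls back to the same default port numbers, so they are only needed when the defaults or the advertised addresses have to change.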

Attach Security Group to Server and Client Nodes

We need to associate the created security group with both your Nomad server and client instances.

By doing this, you allow incoming traffic on the specified ports, enabling communication within the Nomad cluster.

EC2 -> Instance -> Actions -> Security -> Change Security Groups

3. Joining Forces: Connecting Clients to Your Nomad Cluster

Assuming Nomad is already installed on your client machines, follow these steps to join them to the Nomad server:

- Edit client.hcl: Open the client.hcl file located at /etc/nomad.d/client.hcl on each client machine. Add the following configuration, replacing 152.21.12.71 with the actual IP address of your Nomad server:

    client {
      enabled = true

      server_join {
        retry_join = ["152.21.12.71:4647"]
      }
    }

- Restart Nomad: To apply the configuration changes, restart the Nomad service on each client machine:

    sudo systemctl restart nomad

- Verify Client Status: Check the Nomad logs on each client to ensure they have joined the cluster successfully:

    sudo journalctl -u nomad -f
    # or
    sudo systemctl status nomad

- Look for messages indicating that the client has connected to the server:

    node4 nomad[1441379]: [INFO] agent.joiner: starting retry join: servers=152.21.12.71:4647
    node4 nomad[1441379]: [INFO] agent.joiner: retry join completed: initial_servers=1 agent_mode=client
    node4 nomad[1441379]: [INFO] client: node registration complete

Your Nomad clients will now join the cluster and start receiving job allocations from the server.

4. Listing the Node Status

nomad node status

You can view the clients and servers with their status from the dashboard, which is pretty great.

5. Deploying with Ease: Your First Nomad Job

Before deploying a job, let's create an HCL configuration file to define the job's specifications, including where it runs, how many instances to launch, and resource requirements.

Generating an Example Job File

Nomad offers a handy command to generate an example job file as a starting point.

    nomad job init

Example Job File (fw-parse/fw-parse.hcl)

This example showcases a job that runs a Node.js backend service within a Docker container.

Datacenters:

A datacenter in Nomad is a named group of client nodes within a region, often mapped to a physical location or an availability zone. The datacenters list tells Nomad which of them are allowed to run your job.

    job "fw-parse" {
      datacenters = ["dc1"]
      # ... rest of the configuration
    }

In this example, dc1 is the name of the datacenter. You can have multiple datacenters in your Nomad cluster.
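As a small sketch of the multi-datacenter case, a job can list more than one datacenter and Nomad may then place it in any of them. The name `dc2` is hypothetical here; it would need to match the `datacenter` value set in the agent configuration of at least one client. The image is the one used later in this article.

```hcl
# Illustrative only: "dc2" is an assumed second datacenter name.
job "fw-parse" {
  # Nomad may schedule the job on clients in either datacenter.
  datacenters = ["dc1", "dc2"]

  group "servers" {
    task "fw-parse" {
      driver = "docker"

      config {
        image = "gitlab.hexmos.site:5050/backend/fw-parse/main:latest"
      }
    }
  }
}
```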

Groups:

A group is a logical container for one or more tasks. You can use groups to organize your job and define constraints.

    job "fw-parse" {
      datacenters = ["dc1"]

      group "servers" {
        # Specifies the number of instances of this group that should be running.
        # Use this to scale or parallelize your job.
        # This can be omitted and it will default to 1.
        count = 1

        network {
          port "http" {
            static = 1337 # Host port to reserve; with no "to" set, the container port is the same
          }
        }

        # ... task configuration
      }

      # ... rest of the configuration
    }

Task Configuration: Docker Driver

For our Node.js backend, we'll use the Docker driver.

    job "fw-parse" {
      datacenters = ["dc1"]

      group "servers" {
        task "fw-parse" {
          driver = "docker"

          config {
            image = "gitlab.site:5050/fw-parse/main:latest"
            # ... other config options
          }
        }
      }
    }

The image attribute specifies the Docker image to use.

fw-parse/fw-parse.hcl

    job "fw-parse" {
      # Specifies the datacenter where this job should be run
      datacenters = ["dc1"]

      group "servers" {
        # Specifies the number of instances of this group that should be running.
        # Use this to scale or parallelize your job.
        # This can be omitted and it will default to 1.
        count = 1

        network {
          port "http" {
            static = 1337 # Host port to reserve; with no "to" set, the container port is the same
          }
        }

        # This particular task starts a simple web server within a Docker container
        task "fw-parse" {
          driver = "docker"

          config {
            image = "gitlab.hexmos.site:5050/backend/fw-parse/main:latest"

            auth {
              username = "gitlab@hexmos.site"
              password = "password"
            }

            ports = ["http"]
          }

          # Resources reserved for the task: cpu in MHz, memory in MB
          resources {
            cpu    = 500
            memory = 256
          }
        }
      }
    }
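One possible refinement, which is not part of our current setup, is to keep the registry password out of the job file by using an HCL2 input variable. The variable name `registry_password` is an assumption for illustration. The value could then be supplied at submit time, for example with the `-var` flag of `nomad job run` or a `NOMAD_VAR_registry_password` environment variable.

```hcl
# Sketch under assumptions: "registry_password" is a hypothetical variable name.
variable "registry_password" {
  type        = string
  description = "Password for the private GitLab container registry"
}

job "fw-parse" {
  datacenters = ["dc1"]

  group "servers" {
    task "fw-parse" {
      driver = "docker"

      config {
        image = "gitlab.hexmos.site:5050/backend/fw-parse/main:latest"

        auth {
          username = "gitlab@hexmos.site"
          password = var.registry_password # supplied when the job is submitted
        }
      }
    }
  }
}
```

This keeps the secret out of version control, but the value is still part of the submitted job, so it is a stopgap rather than a replacement for a proper secret store.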

More about ports:

If your service exposes multiple ports, you can configure Nomad to handle them by declaring each port in the network block and listing them in the task's config. This is particularly useful when your application needs several ports for different purposes, such as HTTP, HTTPS, and an admin interface.

For example, if your service is exposing ports 1337, 1339, and 3000, you would define them in your Nomad job file like this:

    job "fw-parse" {
      datacenters = ["dc1"]

      group "servers" {
        network {
          port "http" {
            static = 1337 # Host port to reserve; with no "to" set, the container port is the same
          }

          port "https" {
            static = 1339 # Another port used by your container
          }

          port "admin" {
            static = 3000 # Port for an admin interface or additional service
          }
        }

        task "fw-parse" {
          driver = "docker"

          config {
            image = "gitlab.hexmos.site:5050/backend/fw-parse/main:latest"

            auth {
              username = "gitlab@hexmos.site"
              password = "password"
            }

            ports = ["http", "https", "admin"] # List all the ports that the container will use
          }
        }
      }
    }
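Static ports tie each instance to a fixed host port, so two instances cannot share a node. As an alternative sketch (not what we run today), you can omit `static` and set `to` instead: Nomad then picks a free host port dynamically and maps it to the given port inside the container. The port numbers reuse the ones above; the `auth` block is left out for brevity.

```hcl
# Sketch of dynamic host ports mapped to fixed container ports.
job "fw-parse" {
  datacenters = ["dc1"]

  group "servers" {
    network {
      port "http" {
        to = 1337 # container port; the host port is chosen by Nomad
      }

      port "admin" {
        to = 3000 # container port for the admin interface
      }
    }

    task "fw-parse" {
      driver = "docker"

      config {
        image = "gitlab.hexmos.site:5050/backend/fw-parse/main:latest"
        ports = ["http", "admin"]
      }
    }
  }
}
```

Inside the task, the assigned host ports are exposed through environment variables such as `NOMAD_HOST_PORT_http`, which helps when something else needs to discover where the service is listening.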

6. Running and Stopping Deployments

Running a Deployment

Nomad offers two ways to kickstart your deployment:

- Command Line: Use the nomad job run command.

    nomad job run fw-parse/fw-parse.hcl

- Nomad UI: For a more visual experience, leverage Nomad's intuitive user interface.

Stopping a Deployment

When you need to halt a running deployment, execute the command nomad job stop <job_name>, where <job_name> is the name assigned to your job.

    nomad job stop fw-parse

Extras

- Format hcl files: hclfmt

- Config generator: nomcfg

- Guides for getting started: Nomad tutorial

Our Roadmap: Expanding Nomad's Potential

We've recently embarked on our Nomad journey, setting up a foundational environment with a single server and three clients.

After a week of running a basic Docker-based Node.js backend, we're pleased with the initial results.

Our vision for Nomad extends far beyond this initial proof of concept.

We're eager to integrate GitLab CI/CD for streamlined deployments and explore the use of HashiCorp Vault for robust secret management.

Given our team's shared ownership model, Nomad's developer-centric approach aligns perfectly with our goals of rapid iteration and efficient deployment.

We expect Nomad to improve our operational efficiency and are excited to share our experiences and learnings as we progress.

We're open to feedback and suggestions from the Nomad community as we continue to refine our setup.

If you have any recommendations based on your experiences, we'd love to hear them.

Want to dive deeper into the Kubernetes world? Check out our other blog posts:

- Demystifying Kubernetes Port Forwarding

- 6 Kubernetes Ports: A Definitive Look - Expose, NodePort, TargetPort, & More

- Spotting Silent Pod Failures in Kubernetes with Grafana



Athreya aka Maneshwar | Sciencx (2024-08-11T17:36:23+00:00) Faster, Easier Deployments: How We Simplified Our Infrastructure with Nomad in 15 Hours (Goodbye, Kubernetes!). Retrieved from https://www.scien.cx/2024/08/11/faster-easier-deployments-how-we-simplified-our-infrastructure-with-nomad-in-15-hours-goodbye-kubernetes/
