This content originally appeared on HackerNoon and was authored by Tech Media Bias [Research Publication]

:::info Authors:

(1) Jinhao Pan [0009-0006-1574-6376], Texas A&M University, College Station, TX, USA;

(2) Ziwei Zhu [0000-0002-3990-4774], George Mason University, Fairfax, VA, USA;

(3) Jianling Wang [0000-0001-9916-0976], Texas A&M University, College Station, TX, USA;

(4) Allen Lin [0000-0003-0980-4323], Texas A&M University, College Station, TX, USA;

(5) James Caverlee [0000-0001-8350-8528], Texas A&M University, College Station, TX, USA.

:::

Abstract. Collaborative filtering (CF) based recommendations suffer from mainstream bias – where mainstream users are favored over niche users, leading to poor recommendation quality for many long-tail users. In this paper, we identify two root causes of this mainstream bias: (i) discrepancy modeling, whereby CF algorithms focus on modeling mainstream users while neglecting niche users with unique preferences; and (ii) unsynchronized learning, where niche users require more training epochs than mainstream users to reach peak performance. Targeting these causes, we propose a novel end-To-end Adaptive Local Learning (TALL) framework to provide high-quality recommendations to both mainstream and niche users. TALL uses a loss-driven Mixture-of-Experts module to adaptively ensemble experts to provide customized local models for different users. Further, it contains an adaptive weight module to synchronize the learning paces of different users by dynamically adjusting weights in the loss. Extensive experiments demonstrate the state-of-the-art performance of the proposed model. Code and data are provided at https://github.com/JP-25/end-To-end-Adaptive-Local-Leanring-TALL

1 Introduction

The detrimental effects of algorithmic bias in collaborative filtering (CF) recommendations have been widely acknowledged [10,11,33,39]. Among the different types of recommendation bias, an especially critical one is Mainstream Bias (also called the “grey-sheep” problem) [4,18,24,39], which refers to the phenomenon that a CF-based algorithm delivers recommendations of higher utility to users with mainstream interests at the cost of poor recommendation performance for users with niche or minority interests. For example, on a social media platform, mainstream users interested in prevalent social topics receive highly accurate recommendations, while the system struggles to provide precise recommendations for niche users who focus on less common, yet equally important, topics. As a result, the platform serves users with distinct interests unfairly, which ultimately undermines its long-term prosperity.

In this work, we identify two root causes of such mainstream bias: the discrepancy modeling problem and the unsynchronized learning problem.

Discrepancy Modeling Problem: CF algorithms estimate a target user's preferences from other users with similar tastes. Consequently, data from users with different preferences cannot help predict recommendations for that user, and may even hurt. This issue cuts both ways: it affects niche users, who differ from the majority, and also mainstream users, whose predictions are influenced by data from niche users. While prior studies [12,39] have used heuristic-based local learning to craft customized models for different user types (e.g., mainstream vs. niche), their efficacy is often bounded by the quality of the underlying heuristics. This underscores the need for adaptive approaches that learn to generate locally customized models for different user types in an end-to-end fashion.
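
To make the discrepancy concrete, the following minimal sketch scores each user by their average cosine similarity to every other user in a toy interaction matrix. A user with a low score has few like-minded neighbors from whom a CF model can borrow signal. This similarity-based score, the helper names, and the toy matrix are illustrative assumptions, not the paper's definition of mainstreamness.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length interaction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # a user with no interactions shares nothing with anyone
    return dot / (norm_u * norm_v)


def mainstream_scores(interactions):
    """Hypothetical proxy: average cosine similarity of each user to all others.

    A low score suggests a niche user whose neighbors share little of their taste.
    """
    n = len(interactions)
    scores = []
    for i in range(n):
        sims = [cosine(interactions[i], interactions[j]) for j in range(n) if j != i]
        scores.append(sum(sims) / len(sims))
    return scores


# Toy binary user-item matrix (rows = users, columns = items); values are made up.
R = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [1, 0, 1, 0, 0],
    [0, 0, 0, 1, 1],  # user 3 only interacts with items no other user touches
]
print([round(s, 3) for s in mainstream_scores(R)])
```

User 3 ends up with a score of zero: none of the other users' data says anything about their taste, which is the situation the discrepancy modeling problem describes.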

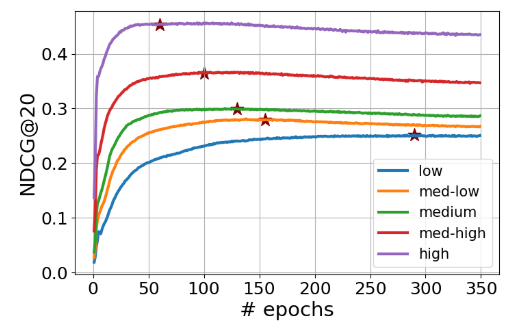

Unsynchronized Learning Problem: Another factor contributing to mainstream bias is the difference in learning pace between mainstream and niche users. Intuitively, mainstream users, with abundant training signals, tend to reach their peak performance sooner. For instance, in Figure 1, the high-mainstream subgroup peaks at around 60 epochs, whereas the low-mainstream subgroup takes nearly 300 epochs. Training a single model for all users without accounting for these disparities in learning pace often yields models that cater primarily to mainstream users and prevent niche users from reaching their optimal utility. Addressing this requires a method that synchronizes the learning process across users, irrespective of their mainstreamness.

To address these problems, we propose an end-To-end Adaptive Local Learning (TALL) framework. To tackle the discrepancy modeling problem affecting both niche and mainstream users, we devise a loss-driven Mixture-of-Experts structure as the backbone. This structure achieves local learning via an end-to-end neural network and adaptively assembles expert models using a loss-driven gate model, offering tailored local models for different users. Further, we develop an adaptive weight module that dynamically adjusts learning paces through weights in the loss, ensuring optimal learning for all user types. With these two complementary and adaptive modules, TALL can effectively improve utility for niche users while preserving or even elevating utility for mainstream users, yielding a significant debiasing effect under the **Rawlsian Max-Min fairness principle** [29].
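
As a rough illustration of what a loss-driven gate could look like, this sketch weights each expert by a softmax over the negated losses the experts incur on one user, so experts that fit that user better contribute more to the user's final item scores. The softmax rule, the `temperature` parameter, the helper names, and all numbers are assumptions for illustration. In TALL the gate is part of an end-to-end neural network, which this fixed rule does not reproduce.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def loss_driven_gate(expert_losses, temperature=1.0):
    """Hypothetical gate: experts with lower loss on this user get more weight."""
    return softmax([-loss / temperature for loss in expert_losses])


def ensemble_scores(expert_scores, gate_weights):
    """Combine per-expert item scores into one customized score vector."""
    n_items = len(expert_scores[0])
    return [
        sum(w * scores[i] for w, scores in zip(gate_weights, expert_scores))
        for i in range(n_items)
    ]


# Three hypothetical experts scoring four items for a single user.
expert_scores = [
    [0.9, 0.1, 0.2, 0.0],
    [0.2, 0.8, 0.1, 0.3],
    [0.4, 0.4, 0.4, 0.4],
]
expert_losses = [0.3, 1.2, 0.9]  # this user's loss under each expert (made up)
weights = loss_driven_gate(expert_losses, temperature=0.5)
print([round(w, 3) for w in weights])
print([round(s, 3) for s in ensemble_scores(expert_scores, weights)])
```

A lower `temperature` makes the gate closer to picking the single best-fitting expert, while a higher one blends experts more evenly; each user therefore gets a differently weighted local model from the same shared experts.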

In sum, our contributions are: (1) we propose a loss-driven Mixture-of-Experts structure to tackle the discrepancy modeling problem, highlighted by an adaptive loss-driven gate module for customized local models; (2) we introduce an adaptive weight module to synchronize learning paces, augmented by a loss change and a gap mechanism for better debiasing; and (3) extensive experiments demonstrate TALL’s superior debiasing capabilities compared to leading alternatives, enhancing utility for niche users by 6.1% over the best baseline with equal model complexity. Data and code are available at https://github.com/JP-25/end-To-end-Adaptive-Local-Learning-TALL-.
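
The idea of synchronizing learning paces by reweighting the loss can be sketched as follows, assuming that a user whose loss is still high has not yet reached peak performance. Each user's weight is their current loss divided by the mean loss, so lagging users (often niche users) receive weights above 1 while the average weight stays at 1. This rule and the helper names are a hypothetical stand-in; the sketch does not implement the loss change and gap mechanisms the paper describes.

```python
def adaptive_weights(user_losses):
    """Hypothetical weighting: users whose loss is still high get larger weights.

    Weights are normalized to average 1, so the overall loss scale is unchanged.
    """
    mean = sum(user_losses) / len(user_losses)
    if mean == 0:
        return [1.0] * len(user_losses)  # every user is fit perfectly; no reweighting
    return [loss / mean for loss in user_losses]


def weighted_loss(user_losses, weights):
    """Average of per-user losses after applying the per-user weights."""
    return sum(w * loss for w, loss in zip(weights, user_losses)) / len(user_losses)


# Two mainstream users that already fit well and one lagging niche user (made up).
losses = [0.2, 0.25, 0.9]
w = adaptive_weights(losses)
print([round(x, 3) for x in w])
print(round(weighted_loss(losses, w), 3))
```

In a gradient-based training loop, such weights would typically be recomputed at each step and treated as constants (not differentiated through), so they only rescale each user's gradient and shift training effort toward users who are still far from their best performance.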

:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::

Tech Media Bias [Research Publication] | Sciencx (2024-08-21T11:00:18+00:00) Countering Mainstream Bias via End-to-End Adaptive Local Learning: Abstract and Introduction. Retrieved from https://www.scien.cx/2024/08/21/countering-mainstream-bias-via-end-to-end-adaptive-local-learning-abstract-and-introduction/