This content originally appeared on HackerNoon and was authored by Writings, Papers and Blogs on Text Models

:::info Authors:

(1) Rafael Rafailov, Stanford University (equal contribution; more junior authors listed earlier);

(2) Archit Sharma, Stanford University (equal contribution; more junior authors listed earlier);

(3) Eric Mitchell, Stanford University (equal contribution; more junior authors listed earlier);

(4) Stefano Ermon, CZ Biohub;

(5) Christopher D. Manning, Stanford University;

(6) Chelsea Finn, Stanford University.

:::

Table of Links

4 Direct Preference Optimization

7 Discussion, Acknowledgements, and References

A Mathematical Derivations

A.1 Deriving the Optimum of the KL-Constrained Reward Maximization Objective

A.2 Deriving the DPO Objective Under the Bradley-Terry Model

A.3 Deriving the DPO Objective Under the Plackett-Luce Model

A.4 Deriving the Gradient of the DPO Objective and A.5 Proof of Lemma 1 and 2

B DPO Implementation Details and Hyperparameters

C Further Details on the Experimental Set-Up and C.1 IMDb Sentiment Experiment and Baseline Details

C.2 GPT-4 prompts for computing summarization and dialogue win rates

D Additional Empirical Results

D.1 Performance of Best of N baseline for Various N and D.2 Sample Responses and GPT-4 Judgments

5 Theoretical Analysis of DPO

In this section, we give further interpretation of the DPO method, provide theoretical backing, and relate the advantages of DPO to issues with the actor-critic algorithms used for RLHF (such as PPO [37]).

5.1 Your Language Model Is Secretly a Reward Model

Definition 1. We say that two reward functions $r(x, y)$ and $r'(x, y)$ are equivalent iff $r(x, y) - r'(x, y) = f(x)$ for some function $f$.

It is easy to see that this is indeed an equivalence relation, which partitions the set of reward functions into classes. We can state the following two lemmas:

Lemma 1. Under the Plackett-Luce, and in particular the Bradley-Terry, preference framework, two reward functions from the same class induce the same preference distribution.

Lemma 2. Two reward functions from the same equivalence class induce the same optimal policy under the constrained RL problem.
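Both lemmas can be checked numerically on a toy problem. The sketch below is illustrative only and is not taken from the paper: it assumes the Bradley-Terry form in which the preference probability is a sigmoid of the reward difference, and the closed-form optimum of the KL-constrained objective (derived in Appendix A.1) in which the policy is proportional to the reference policy times `exp(r / beta)`. The reward values, reference probabilities, `beta`, and the shift `f(x)` are arbitrary made-up numbers.

```python
import math

def bradley_terry(r_w, r_l):
    """P(y_w preferred over y_l): sigmoid of the reward difference (assumed form)."""
    return 1.0 / (1.0 + math.exp(-(r_w - r_l)))

def optimal_policy(rewards, pi_ref, beta):
    """Assumed closed form: pi(y|x) proportional to pi_ref(y|x) * exp(r(x, y) / beta)."""
    weights = [p * math.exp(r / beta) for p, r in zip(pi_ref, rewards)]
    z = sum(weights)  # partition function for this prompt
    return [w / z for w in weights]

# One prompt x with three candidate completions (hypothetical values).
rewards = [1.0, 0.2, -0.5]
pi_ref = [0.5, 0.3, 0.2]
beta = 0.1
shift = 3.7  # f(x): depends on the prompt only, not on the completion

# An equivalent reward function: r'(x, y) = r(x, y) + f(x).
shifted = [r + shift for r in rewards]

# Lemma 1: the shift cancels in the reward difference.
p_original = bradley_terry(rewards[0], rewards[1])
p_shifted = bradley_terry(shifted[0], shifted[1])

# Lemma 2: the factor exp(f(x) / beta) cancels in the normalization.
policy_original = optimal_policy(rewards, pi_ref, beta)
policy_shifted = optimal_policy(shifted, pi_ref, beta)

print(p_original, p_shifted)
print(policy_original)
print(policy_shifted)
```

In both cases the prompt-dependent term `f(x)` drops out, either in a difference (Lemma 1) or in a ratio (Lemma 2), which is why the two printed pairs agree up to floating-point error.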

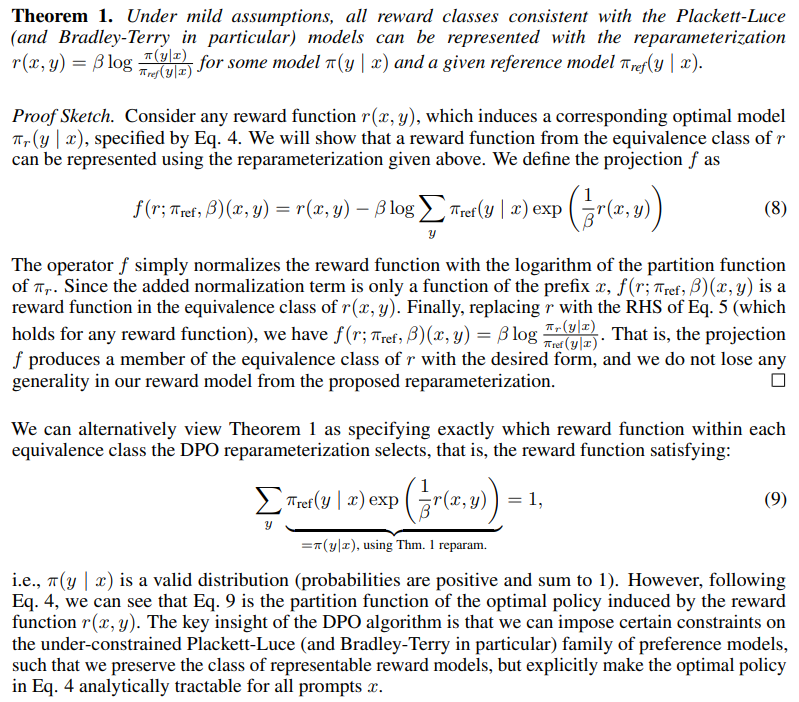

The proofs are straightforward and we defer them to Appendix A.5. The first lemma is a well-known under-specification issue with the Plackett-Luce family of models [30]. Due to this under-specification, we usually have to impose additional identifiability constraints to achieve any guarantees on the MLE estimates from Eq. 2 [4]. The second lemma states that all reward functions from the same class yield the same optimal policy; hence, for our final objective, we are only interested in recovering an arbitrary reward function from the optimal class. We prove the following theorem in Appendix A.6:


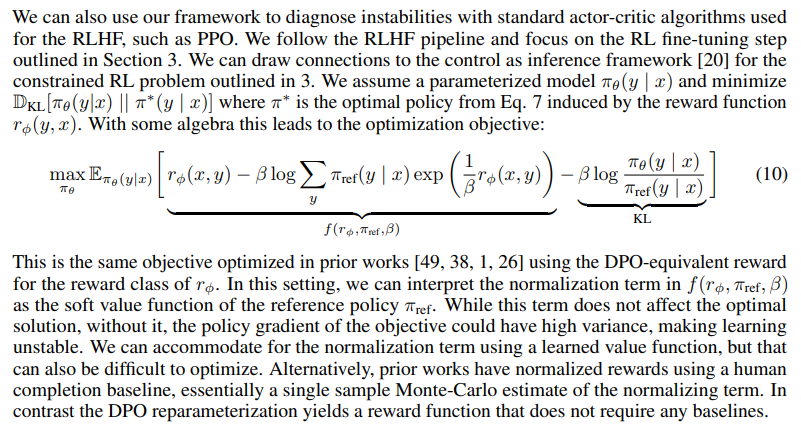
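As a rough numerical illustration of the idea behind this subsection's title, the sketch below assumes (the text above does not spell this out) that the reparameterization in question is the one used by DPO, where a policy defines an implicit reward `beta * log(pi(y|x) / pi_ref(y|x))`. Starting from an arbitrary reward, it computes the optimal policy under the closed form from Appendix A.1, reads off the implicit reward, and checks that the two rewards differ only by a prompt-level constant, so they fall in the same equivalence class. All numbers and function names are hypothetical.

```python
import math

def optimal_policy(rewards, pi_ref, beta):
    """Assumed closed form: pi(y|x) proportional to pi_ref(y|x) * exp(r(x, y) / beta)."""
    weights = [p * math.exp(r / beta) for p, r in zip(pi_ref, rewards)]
    z = sum(weights)  # partition function Z(x)
    return [w / z for w in weights], z

def implicit_reward(policy, pi_ref, beta):
    """Reward implied by a policy (assumed form): beta * log(pi / pi_ref)."""
    return [beta * math.log(p / q) for p, q in zip(policy, pi_ref)]

# One prompt x with three candidate completions (hypothetical values).
rewards = [1.0, 0.2, -0.5]
pi_ref = [0.5, 0.3, 0.2]
beta = 0.5

policy, z = optimal_policy(rewards, pi_ref, beta)
r_hat = implicit_reward(policy, pi_ref, beta)

# If r and r_hat are equivalent, the gap is the same for every completion.
gaps = [r - rh for r, rh in zip(rewards, r_hat)]

# The implicit reward should induce the same optimal policy as the original.
policy_from_r_hat, _ = optimal_policy(r_hat, pi_ref, beta)

print(gaps)
print(policy)
print(policy_from_r_hat)
```

Under these assumptions every entry of `gaps` equals `beta * log(Z(x))`, a quantity that depends on the prompt alone, which matches the form of equivalence in Definition 1.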

5.2 Instability of Actor-Critic Algorithms


:::info This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.

:::


Writings, Papers and Blogs on Text Models | Sciencx (2024-08-25T21:12:27+00:00) Theoretical Analysis of Direct Preference Optimization. Retrieved from https://www.scien.cx/2024/08/25/theoretical-analysis-of-direct-preference-optimization/
