This content originally appeared on HackerNoon and was authored by Auto Encoder: How to Ignore the Signal Noise

:::info Authors:

(1) Tony Lee, Stanford with Equal contribution;

(2) Michihiro Yasunaga, Stanford with Equal contribution;

(3) Chenlin Meng, Stanford with Equal contribution;

(4) Yifan Mai, Stanford;

(5) Joon Sung Park, Stanford;

(6) Agrim Gupta, Stanford;

(7) Yunzhi Zhang, Stanford;

(8) Deepak Narayanan, Microsoft;

(9) Hannah Benita Teufel, Aleph Alpha;

(10) Marco Bellagente, Aleph Alpha;

(11) Minguk Kang, POSTECH;

(12) Taesung Park, Adobe;

(13) Jure Leskovec, Stanford;

(14) Jun-Yan Zhu, CMU;

(15) Li Fei-Fei, Stanford;

(16) Jiajun Wu, Stanford;

(17) Stefano Ermon, Stanford;

(18) Percy Liang, Stanford.

:::

A Datasheet

A.1 Motivation

Q1 For what purpose was the dataset created? Was there a specific task in mind? Was there a specific gap that needed to be filled? Please provide a description.

\ • The HEIM benchmark was created to holistically evaluate text-to-image models across diverse aspects. Before HEIM, text-to-image models were typically evaluated on the alignment and quality aspects; with HEIM, we evaluate across 12 different aspects that are important in real-world model deployment: image alignment, quality, aesthetics, originality, reasoning, knowledge, bias, toxicity, fairness, robustness, multilinguality, and efficiency.

\ Q2 Who created the dataset (e.g., which team, research group) and on behalf of which entity (e.g., company, institution, organization)?

\ • This benchmark is presented by the Center for Research on Foundation Models (CRFM), an interdisciplinary initiative born out of the Stanford Institute for Human-Centered Artificial Intelligence (HAI) that aims to make fundamental advances in the study, development, and deployment of foundation models. https://crfm.stanford.edu/.

\ Q3 Who funded the creation of the dataset? If there is an associated grant, please provide the name of the grantor and the grant name and number.

\ • This work was supported in part by the AI2050 program at Schmidt Futures (Grant G-22-63429).

\ Q4 Any other comments?

\ • No.

A.2 Composition

Q5 What do the instances that comprise the dataset represent (e.g., documents, photos, people, countries)? Are there multiple types of instances (e.g., movies, users, and ratings; people and interactions between them; nodes and edges)? Please provide a description.

\ • The HEIM benchmark provides prompts/captions covering 62 scenarios. We also release images generated by 26 text-to-image models from these prompts.

\ Q6 How many instances are there in total (of each type, if appropriate)?

\ • HEIM contains 500K prompts in total covering 62 scenarios. The detailed statistics for each scenario can be found at https://crfm.stanford.edu/heim/v1.1.0.

\ Q7 Does the dataset contain all possible instances or is it a sample (not necessarily random) of instances from a larger set? If the dataset is a sample, then what is the larger set? Is the sample representative of the larger set (e.g., geographic coverage)? If so, please describe how this representativeness was validated/verified. If it is not representative of the larger set, please describe why not (e.g., to cover a more diverse range of instances, because instances were withheld or unavailable).

\ • Yes. The scenarios in our benchmark are sourced from existing datasets such as MS-COCO, DrawBench, and PartiPrompts, and we use all possible instances from these datasets.

\ Q8 What data does each instance consist of? “Raw” data (e.g., unprocessed text or images) or features? In either case, please provide a description.

\ • Input prompt and generated images.

\ Q9 Is there a label or target associated with each instance? If so, please provide a description.

\ • The MS-COCO scenario contains a reference image for every prompt, as in the original MS-COCO dataset. Other scenarios do not have reference images.

\ Q10 Is any information missing from individual instances? If so, please provide a description, explaining why this information is missing (e.g., because it was unavailable). This does not include intentionally removed information, but might include, e.g., redacted text.

\ • No.

\ Q11 Are relationships between individual instances made explicit (e.g., users’ movie ratings, social network links)? If so, please describe how these relationships are made explicit.

\ • Every prompt belongs to a scenario.

\ Q12 Are there recommended data splits (e.g., training, development/validation, testing)? If so, please provide a description of these splits, explaining the rationale behind them.

\ • No.

\ Q13 Are there any errors, sources of noise, or redundancies in the dataset? If so, please provide a description.

\ • No.

\ Q14 Is the dataset self-contained, or does it link to or otherwise rely on external resources (e.g., websites, tweets, other datasets)? If it links to or relies on external resources, a) are there guarantees that they will exist, and remain constant, over time; b) are there official archival versions of the complete dataset (i.e., including the external resources as they existed at the time the dataset was created); c) are there any restrictions (e.g., licenses, fees) associated with any of the external resources that might apply to a future user? Please provide descriptions of all external resources and any restrictions associated with them, as well as links or other access points, as appropriate.

\ • The dataset is self-contained. Everything is available at https://crfm.stanford.edu/heim/v1.1.0.

\ Q15 Does the dataset contain data that might be considered confidential (e.g., data that is protected by legal privilege or by doctor–patient confidentiality, data that includes the content of individuals’ non-public communications)? If so, please provide a description.

\ • No. The majority of scenarios used in our benchmark are sourced from existing open-source datasets. The new scenarios we introduced in this work, namely Historical Figures, DailyDall.e, Landing Pages, Logos, Magazine Covers, and Mental Disorders, were also constructed using public resources.

\ Q16 Does the dataset contain data that, if viewed directly, might be offensive, insulting, threatening, or might otherwise cause anxiety? If so, please describe why.

\ • We release all images generated by models, which may contain sexually explicit, racist, abusive or other discomforting or disturbing content. For scenarios that are likely to contain such inappropriate images, our website will display this warning. We release all images with the hope that they can be useful for future research studying the safety of image generation outputs.

\ Q17 Does the dataset relate to people? If not, you may skip the remaining questions in this section.

\ • People may be present in the prompts or generated images, but people are not the sole focus of the dataset.

\ Q18 Does the dataset identify any subpopulations (e.g., by age, gender)?

\ • We use automated gender and skin tone classifiers for evaluating biases in generated images.

Q19 Is it possible to identify individuals (i.e., one or more natural persons), either directly or indirectly (i.e., in combination with other data) from the dataset? If so, please describe how.

\ • Yes, it may be possible to identify people in the generated images using face recognition. Similarly, people may be identified through the associated prompts.

\ Q20 Does the dataset contain data that might be considered sensitive in any way (e.g., data that reveals racial or ethnic origins, sexual orientations, religious beliefs, political opinions or union memberships, or locations; financial or health data; biometric or genetic data; forms of government identification, such as social security numbers; criminal history)? If so, please provide a description.

\ • Yes, the model-generated images contain sensitive content. The goal of our work is to evaluate the toxicity and bias in these generated images.

\ Q21 Any other comments?

\ • We urge users to exercise discretion and call for responsible usage of the benchmark for research purposes only.

A.3 Collection Process

Q22 How was the data associated with each instance acquired? Was the data directly observable (e.g., raw text, movie ratings), reported by subjects (e.g., survey responses), or indirectly inferred/derived from other data (e.g., part-of-speech tags, model-based guesses for age or language)? If data was reported by subjects or indirectly inferred/derived from other data, was the data validated/verified? If so, please describe how.

\ • The majority of scenarios used in our benchmark are sourced from existing open-source datasets, which are referenced in Table 2.

\ • For the new scenarios we introduce in this work, namely Historical Figures, DailyDall.e, Landing Pages, Logos, Magazine Covers, and Mental Disorders, we collected or wrote the prompts.

\ Q23 What mechanisms or procedures were used to collect the data (e.g., hardware apparatus or sensor, manual human curation, software program, software API)? How were these mechanisms or procedures validated?

\ • The existing scenarios were downloaded by us.

\ • Prompts for the new scenarios were collected or written by us manually. For further details, please refer to §B.

\ Q24 If the dataset is a sample from a larger set, what was the sampling strategy (e.g., deterministic, probabilistic with specific sampling probabilities)?

\ • We use the whole datasets.

\ Q25 Who was involved in the data collection process (e.g., students, crowdworkers, contractors) and how were they compensated (e.g., how much were crowdworkers paid)?

\ • The authors of this paper collected the scenarios.

\ • Crowdworkers were involved only when we evaluated images generated by models from these scenarios.

\ Q26 Over what timeframe was the data collected? Does this timeframe match the creation timeframe of the data associated with the instances (e.g., recent crawl of old news articles)? If not, please describe the timeframe in which the data associated with the instances was created.

\ • The data was collected from December 2022 to June 2023.

\ Q27 Were any ethical review processes conducted (e.g., by an institutional review board)? If so, please provide a description of these review processes, including the outcomes, as well as a link or other access point to any supporting documentation.

\ • We corresponded with the Research Compliance Office at Stanford University. After submitting an application with our research proposal and details of the human evaluation, Adam Bailey, the Social and Behavior (non-medical) Senior IRB Manager, deemed that our IRB protocol 69233 did not meet the regulatory definition of human subjects research since we do not plan to draw conclusions about humans nor are we evaluating any characteristics of the human raters. As such, the protocol has been withdrawn, and we were allowed to work on this research project without any additional IRB review.

\ Q28 Does the dataset relate to people? If not, you may skip the remaining questions in this section.

\ • People may appear in the images and descriptions, although they are not the exclusive focus of the dataset.

\ Q29 Did you collect the data from the individuals in question directly, or obtain it via third parties or other sources (e.g., websites)?

\ • Our scenarios were collected from third-party websites. Our human evaluation was conducted via crowdsourcing.

\ Q30 Were the individuals in question notified about the data collection? If so, please describe (or show with screenshots or other information) how notice was provided, and provide a link or other access point to, or otherwise reproduce, the exact language of the notification itself.

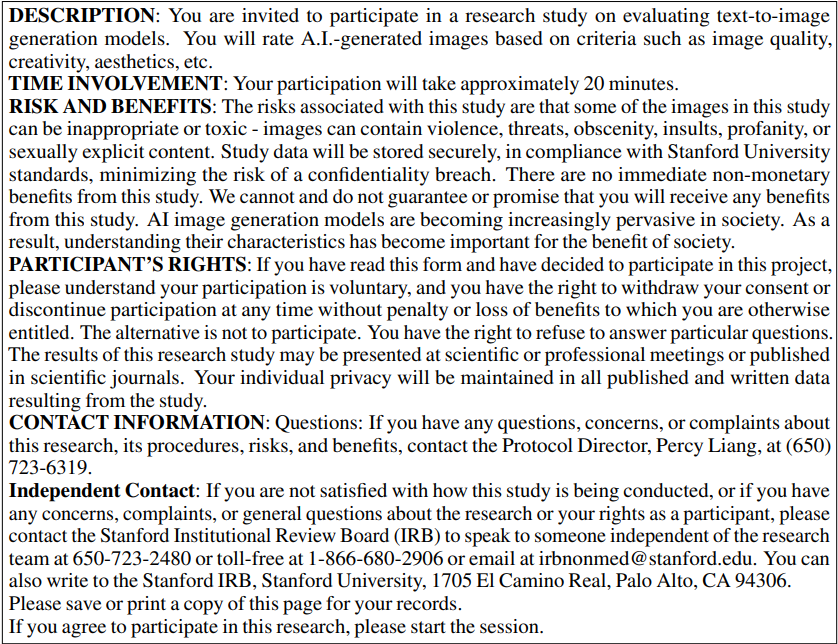

\ • Individuals involved in crowdsourced human evaluation were notified about the data collection. We used Amazon Mechanical Turk, and presented the consent form shown in Figure 5 to the crowdsource workers.

\

\ Q31 Did the individuals in question consent to the collection and use of their data? If so, please describe (or show with screenshots or other information) how consent was requested and provided, and provide a link or other access point to, or otherwise reproduce, the exact language to which the individuals consented.

\ • Yes, consent was obtained from crowdsource workers for human evaluation. Please refer to Figure 5.

\ Q32 If consent was obtained, were the consenting individuals provided with a mechanism to revoke their consent in the future or for certain uses? If so, please provide a description, as well as a link or other access point to the mechanism (if appropriate).

\ • Yes. Please refer to Figure 5.

\ Q33 Has an analysis of the potential impact of the dataset and its use on data subjects (e.g., a data protection impact analysis) been conducted? If so, please provide a description of this analysis, including the outcomes, as well as a link or other access point to any supporting documentation.

\ • We discuss the limitations of our current work in §10, and we plan to further investigate and analyze the impact of our benchmark in future work.

\ Q34 Any other comments?

\ • No.

A.4 Preprocessing, Cleaning, and/or Labeling

Q35 Was any preprocessing/cleaning/labeling of the data done (e.g., discretization or bucketing, tokenization, part-of-speech tagging, SIFT feature extraction, removal of instances, processing of missing values)? If so, please provide a description. If not, you may skip the remainder of the questions in this section.

\ • No preprocessing or labelling was done for creating the scenarios.

\ Q36 Was the “raw” data saved in addition to the preprocessed/cleaned/labeled data (e.g., to support unanticipated future uses)? If so, please provide a link or other access point to the “raw” data.

\ • N/A. No preprocessing or labelling was done for creating the scenarios.

\ Q37 Is the software used to preprocess/clean/label the instances available? If so, please provide a link or other access point.

\ • N/A. No preprocessing or labelling was done for creating the scenarios.

\ Q38 Any other comments?

\ • No.

A.5 Uses

Q39 Has the dataset been used for any tasks already? If so, please provide a description.

\ • Not yet. HEIM is a new benchmark.

\ Q40 Is there a repository that links to any or all papers or systems that use the dataset? If so, please provide a link or other access point.

\ • We will provide links to works that use our benchmark at https://crfm.stanford.edu/heim/v1.1.0.

\ Q41 What (other) tasks could the dataset be used for?

\ • The primary use case of our benchmark is text-to-image generation.

\ • While we did not explore this direction in the present work, the prompt-image pairs available in our benchmark may be used for image-to-text generation research in the future.

\ Q42 Is there anything about the composition of the dataset or the way it was collected and preprocessed/cleaned/labeled that might impact future uses? For example, is there anything that a future user might need to know to avoid uses that could result in unfair treatment of individuals or groups (e.g., stereotyping, quality of service issues) or other undesirable harms (e.g., financial harms, legal risks) If so, please provide a description. Is there anything a future user could do to mitigate these undesirable harms?

\ • Our benchmark contains images generated by models, which may exhibit demographic biases and contain toxic content such as violence and nudity. The images released with this benchmark should not be used to make decisions about people.

\ Q43 Are there tasks for which the dataset should not be used? If so, please provide a description.

\ • Because the model-generated images in this benchmark may contain bias and toxic content, under no circumstances should these images or models trained on them be put into production. It is neither safe nor responsible. As it stands, the images should be used solely for research purposes.

\ • Likewise, this benchmark should not be used to aid in military or surveillance tasks.

\ Q44 Any other comments?

\ • No.

A.6 Distribution and License

Q45 Will the dataset be distributed to third parties outside of the entity (e.g., company, institution, organization) on behalf of which the dataset was created? If so, please provide a description.

\ • Yes, this benchmark will be open-source.

\ Q46 How will the dataset be distributed (e.g., tarball on website, API, GitHub)? Does the dataset have a digital object identifier (DOI)?

\ • Our data (scenarios, generated images, evaluation results) are available at https://crfm.stanford.edu/heim/v1.1.0.

\ • Our code used for evaluation is available at https://github.com/stanford-crfm/helm.

\ Q47 When will the dataset be distributed?

\ • June 7, 2023 and onward.

\ Q48 Will the dataset be distributed under a copyright or other intellectual property (IP) license, and/or under applicable terms of use (ToU)? If so, please describe this license and/or ToU, and provide a link or other access point to, or otherwise reproduce, any relevant licensing terms or ToU, as well as any fees associated with these restrictions.

\ • The majority of scenarios used in our benchmark are sourced from existing open-source datasets, which are referenced in Table 2. We follow the licenses associated with those datasets.

\ • We release the new scenarios, namely Historical Figures, DailyDall.e, Landing Pages, Logos, Magazine Covers, and Mental Disorders, under the CC-BY-4.0 license.

\ • Our code is released under the Apache-2.0 license.

\ Q49 Have any third parties imposed IP-based or other restrictions on the data associated with the instances? If so, please describe these restrictions, and provide a link or other access point to, or otherwise reproduce, any relevant licensing terms, as well as any fees associated with these restrictions.

\ • We own the metadata and release it under the CC-BY-4.0 license.

\ • We do not own the copyright of the images or text.

\ Q50 Do any export controls or other regulatory restrictions apply to the dataset or to individual instances? If so, please describe these restrictions, and provide a link or other access point to, or otherwise reproduce, any supporting documentation.

\ • No.

\ Q51 Any other comments?

\ • No.

A.7 Maintenance

Q52 Who will be supporting/hosting/maintaining the dataset?

\ • Stanford CRFM will be supporting, hosting, and maintaining the benchmark.

\ Q53 How can the owner/curator/manager of the dataset be contacted (e.g., email address)?

\ • https://crfm.stanford.edu

\ Q54 Is there an erratum? If so, please provide a link or other access point.

\ • There is no erratum for our initial release. Errata will be documented as future releases on the benchmark website.

\ Q55 Will the dataset be updated (e.g., to correct labeling errors, add new instances, delete instances)? If so, please describe how often, by whom, and how updates will be communicated to users (e.g., mailing list, GitHub)?

\ • HEIM will be updated. We plan to expand scenarios, metrics, and models to be evaluated.

\ Q56 If the dataset relates to people, are there applicable limits on the retention of the data associated with the instances (e.g., were individuals in question told that their data would be retained for a fixed period of time and then deleted)? If so, please describe these limits and explain how they will be enforced.

\ • People may contact us at https://crfm.stanford.edu to add specific samples to a blacklist.

\ Q57 Will older versions of the dataset continue to be supported/hosted/maintained? If so, please describe how. If not, please describe how its obsolescence will be communicated to users.

\ • We will host other versions.

\ Q58 If others want to extend/augment/build on/contribute to the dataset, is there a mechanism for them to do so? If so, please provide a description. Will these contributions be validated/verified? If so, please describe how. If not, why not? Is there a process for communicating/distributing these contributions to other users? If so, please provide a description.

\ • People may contact us at https://crfm.stanford.edu to request adding new scenarios, metrics, or models.

\ Q59 Any other comments?

\ • No.

\

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

\

Auto Encoder: How to Ignore the Signal Noise | Sciencx (2024-10-12T22:45:24+00:00) Holistic Evaluation of Text-to-Image Models: Datasheet. Retrieved from https://www.scien.cx/2024/10/12/holistic-evaluation-of-text-to-image-models-datasheet/
