This content originally appeared on HackerNoon and was authored by Robert Scoble

In the dynamic political environment of the United States, the rapidly approaching 2024 presidential election is becoming the arena for a new kind of battle: one against the insidious rise of AI-generated deepfakes. These sophisticated synthetic constructs can distort reality. They have set off a legislative marathon across the nation as state leaders press forward with decisive measures to protect the integrity of the information voters receive. No federal law deals with deepfakes, so legislating is left to individual states. The top official at the Federal Election Commission (FEC) has signaled that the agency expects to resolve the issue later this year. That timeline suggests much of the 2024 election period could unfold with this brand of misinformation unchecked.

Recently, bridging the partisan divide with a shared vision for election integrity, Sens. Amy Klobuchar of Minnesota and Lisa Murkowski of Alaska joined forces on a forward-thinking bill aimed squarely at the digital frontier of political advertising. The proposed legislation would bring much-needed clarity to the murky waters of AI-generated political ads by requiring disclaimers. It goes further, too: the bill would empower the FEC to act as a digital watchdog, ensuring that violations are noticed and addressed. It's a bold step toward safeguarding transparency as the next wave of electoral campaigns gets underway.

What will happen this election year if, as expected, a slew of politically motivated deepfakes appears? How can people be protected from being influenced by them? There is no easy answer.

Deepfakes Decoded: Understanding the Synthetic Threat

Deepfakes (a term blending 'deep learning' with 'fake') are artificially generated audio, images, or videos designed to replicate a person's likeness with unnerving accuracy. The robocall sent to New Hampshire voters ahead of the state's January primary is a harrowing example: an AI-generated voice, eerily mimicking President Joe Biden, reached thousands of voters with false voting information. The incident underscores the pressing need for informed, proactive legislation.

The voice on the call declared, "Your vote today is a step towards bringing Donald Trump back into office." An associate of the campaign of Rep. Dean Phillips, D-Minn., an underdog challenging Biden, later told NBC News in an interview that he had orchestrated the call on his own, without the campaign's knowledge or approval.

On the Republican side of the campaign trail, a striking audio piece surfaced from the Trump camp. The clip was artfully edited to create the illusion that Florida Gov. Ron DeSantis was in conversation with none other than Adolf Hitler.

In a retaliatory move, the DeSantis campaign then distributed a doctored photo showing Trump in a warm embrace with Anthony Fauci, sending ripples through social media platforms.


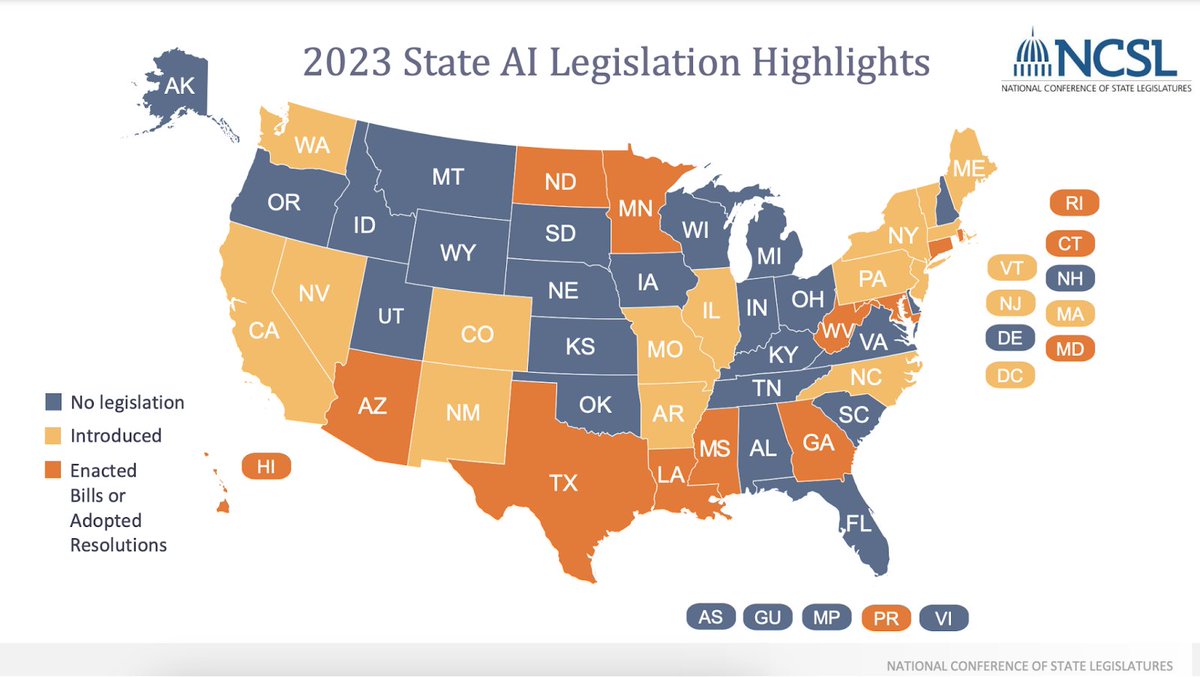

The Legislative Surge: Over 100 Bills to Protect Voters

As a guardian of electoral integrity, the Voting Rights Lab vigilantly tracks an ever-growing wave of legislative initiatives. With more than 100 bills introduced across 40 states, the commitment to combating AI-generated election disinformation appears unwavering. Each bill introduced or passed reflects an unmistakable sense of urgency among legislators to reinforce the foundations of democracy. It's a legislative crusade that is rapidly gaining traction and shows a collective resolve to shield our democratic processes from the deceptive shadows cast by digital manipulation.

Emerging Legislative Frameworks

The robocall incident set off a flurry of legislative activity. States such as Arizona, Florida, and Wisconsin are now at the forefront, each illustrating a different regulatory approach to combating these technological ploys.

Wisconsin recently enacted a law requiring political affiliates to disclose AI-generated content, while Florida awaits the governor's signature on a similar bill. Arizona, on the other hand, is threading the needle between regulation and constitutional rights with bills that could, under certain conditions, make it a felony to omit labels on AI-generated political media. Another Arizona bill under consideration would allow people harmed by such content to sue its creators for monetary compensation.

California and Texas have both had laws addressing election deepfakes in place since 2019.

In Indiana, a bill with bipartisan backing is making its way to the governor's desk. If enacted, it would require advertisements that use deepfake technology to state clearly, "Parts of this media have been digitally modified or synthetically produced." The requirement would apply to images, video, and audio. Candidates for state and federal office who are affected by such content would have legal standing to seek compensation through civil litigation.

Inspired by the deceptive robocall mimicking President Biden, lawmakers in Kansas launched a bipartisan effort of their own. Republican state Rep. Pat Proctor and a Democratic leader pushed forward a proposal that tackled the deepfake dilemma head-on. The bill would have required disclosures on deepfake advertisements and imposed stringent penalties on anyone who impersonates election officials, such as secretaries of state, with the malicious aim of dissuading voters from casting their ballots. The bill is currently dead, but Proctor says it could be revived.

Tackling the Disinformation Threat Beyond Legislation

Legislation is just one facet of the multi-pronged strategy needed to address AI-generated deepfakes. Entities like NewDeal are spearheading initiatives that equip election administrators with tools to counter disinformation campaigns effectively. Incident response preparation and public awareness campaigns, such as those launched by secretaries of state in New Mexico and Michigan, are vital components in educating voters and reinforcing the democratic infrastructure.

Big Tech Transparency Moves

Major tech companies are proactively setting new standards for authenticity in online content, with a sharp focus on AI-influenced advertisements. Meta, the parent company of Facebook, Instagram, and Threads, is removing ads that are misleading or lack context, particularly those that could undermine the electoral process or make premature claims about election results. All AI-generated ads are subject to this scrutiny.

In step with these efforts, Google has announced that AI-altered images, video, or audio used in election advertising must be accompanied by explicit disclosures, a requirement in effect as of November. YouTube, which Google owns, is committing to transparency by making sure its users can recognize when content has been digitally manipulated. These moves by Meta and Google signal a pivotal shift toward clarity and honesty in the digital content we consume, especially in the political sphere.

As we navigate the complexities of an election year increasingly influenced by AI technology, the question remains: Are state laws robust enough to control the spread of deepfakes? With the FEC indicating that a national resolution may not arrive until later in the year, the responsibility falls on state legislatures, tech companies, and the public to build a bulwark against these digital deceptions.

The legislative efforts, both proposed and enacted across the states, represent a patchwork of defenses, with some offering a glimpse of the rigorous measures needed to ensure fair play in our political arenas. The commitment to clarity and integrity from tech companies adds a layer of much-needed oversight. Yet, as sophisticated as these measures may be, they are not impermeable. There will always be new challenges to meet and loopholes to close, especially as technology advances at a breakneck pace.

In this environment, voter education and awareness become as crucial as the laws themselves. Spreading information on how to tell real media from synthetic media, along with a critical eye for what we consume and share, is paramount. It's a collective effort, a blend of legal, corporate, and individual actions, that shapes our resilience against the rising tide of AI-generated misinformation.

As the 2024 election approaches, it's evident that there is no panacea for the deepfake phenomenon. Yet, the burgeoning legislative efforts, combined with proactive measures from tech companies and the vigilant participation of the public, set the stage for a robust defense. These are the foundations upon which we can hope to preserve the sanctity of our elections, fostering a well-informed electorate that is equipped to navigate the murky waters of digital propaganda.

In conclusion, while the legislative landscape is still forming and the digital battleground keeps changing, our collective vigilance and adaptability are key. The actions that lawmakers, technologists, and voters take today will determine how effective our defenses against deepfakes are. In this election year and beyond, safeguarding the truth remains a shared responsibility, one that we must continuously strive to uphold.


Robert Scoble | Sciencx (2024-10-22T11:15:12+00:00) Can The Government, The Technology Platforms, or Anyone Else Control the Spread of Deepfakes?. Retrieved from https://www.scien.cx/2024/10/22/can-the-government-the-technology-platforms-or-anyone-else-control-the-spread-of-deepfakes/
