This content originally appeared on HackerNoon and was authored by Ilia Kuznetsov

Introduction

ARKit is Apple's powerful augmented reality framework that allows developers to craft immersive, interactive AR experiences specifically designed for iOS devices.

For devices equipped with LiDAR, ARKit takes full advantage of depth sensing, which significantly improves the accuracy of environment scanning. Unlike many traditional LiDAR systems, which can be bulky and expensive, the iPhone’s LiDAR is compact, cost-effective, and built into a consumer device, making advanced depth sensing accessible to a much wider range of developers and applications.

With LiDAR we can build a point cloud: a collection of data points that represents the surfaces of objects in 3D space.

In the first part of this article, we will build an application that demonstrates how to extract LiDAR data and convert it into individual points within an AR 3D environment.

The second part will explain how to merge the points continuously received from the LiDAR sensor into a unified point cloud. Finally, we will cover how to export this data to the widely used .PLY file format, enabling further analysis and use in other applications.

Prerequisites

We will be using:

- Xcode 16 with Swift 6
- SwiftUI for the app’s user interface
- Swift Concurrency for efficient multithreading

Please ensure you have access to an iPhone or iPad equipped with a LiDAR sensor to follow along.

Setting up and creating a UI

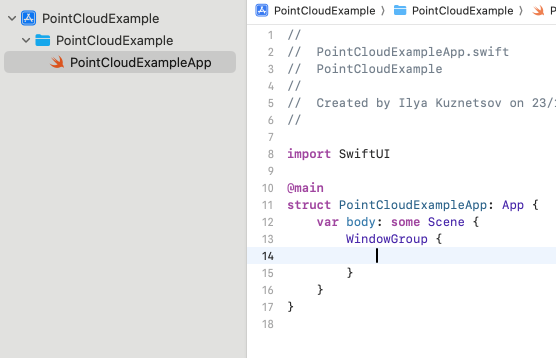

Create a new project named ProjectCloudExample and remove the template files we won’t use, keeping only ProjectCloudExampleApp.swift.

Next, let’s create ARManager.swift with an actor that owns the ARSCNView and handles the associated AR session. Since SwiftUI currently has no native counterpart to ARSCNView, we’ll bridge it from UIKit.

In the initializer of ARManager, we make it the delegate of the ARSession and start the session with an ARWorldTrackingConfiguration. Because we are targeting LiDAR-equipped devices, we must also add .sceneDepth to the configuration’s frameSemantics; without it, frames do not carry depth data.

import Foundation
import ARKit

actor ARManager: NSObject, ARSessionDelegate, ObservableObject {
    @MainActor let sceneView = ARSCNView()

    @MainActor
    override init() {
        super.init()

        sceneView.session.delegate = self

        // start the session with scene depth enabled
        let configuration = ARWorldTrackingConfiguration()
        configuration.frameSemantics = .sceneDepth
        sceneView.session.run(configuration)
    }
}

Now let’s open the main ProjectCloudExampleApp.swift, create an instance of ARManager as a state object, and present our AR view in SwiftUI. For the latter, we’ll use a small generic UIViewWrapper that conforms to UIViewRepresentable.

import SwiftUI

// wraps any UIKit view so it can be placed in a SwiftUI hierarchy
struct UIViewWrapper<V: UIView>: UIViewRepresentable {
    let view: V

    func makeUIView(context: Context) -> V { view }

    func updateUIView(_ uiView: V, context: Context) { }
}

@main
struct PointCloudExampleApp: App {
    @StateObject var arManager = ARManager()

    var body: some Scene {
        WindowGroup {
            UIViewWrapper(view: arManager.sceneView).ignoresSafeArea()
        }
    }
}


Obtaining LiDAR depth data

Let’s return to ARManager.swift.

The AR session continuously produces frames containing depth and camera image data, which we receive through ARSessionDelegate callbacks.

To maintain real-time performance, we can’t afford to process every frame. Instead, we’ll skip any frames that arrive while one is still being processed. Additionally, the delegate callback is nonisolated and only schedules a Task, and the heavy per-pixel work will run in a separate actor rather than on the main thread, so the UI does not freeze during intensive operations.

Add an isProcessing property to track whether a frame is currently being processed, an ARSessionDelegate method to receive incoming frames, and a dedicated function for processing a frame.

Also add an isCapturing property, which we will use later in the UI to toggle capturing.

actor ARManager: NSObject, ARSessionDelegate, ObservableObject {
    //...

    @MainActor private var isProcessing = false
    @MainActor @Published var isCapturing = false

    // ARSessionDelegate callback that delivers each new ARFrame
    nonisolated func session(_ session: ARSession, didUpdate frame: ARFrame) {
        Task { await process(frame: frame) }
    }

    // process a frame, skipping frames that arrive while one is in progress
    @MainActor
    private func process(frame: ARFrame) async {
        guard !isProcessing else { return }
        isProcessing = true

        // processing code here

        isProcessing = false
    }

    //...
}
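The skip-while-busy policy can be illustrated without ARKit. Below is a minimal sketch using a hypothetical `FrameGate` type (not part of the article’s code) that counts processed and dropped frames. It is deliberately single-threaded; in the app, main-actor isolation of `isProcessing` provides the serialization this sketch takes for granted.

```swift
/// Hypothetical helper illustrating the "drop frames while busy" policy.
/// Not thread-safe by itself: it assumes all calls come from one actor/thread,
/// as they do when the flag is isolated to the main actor.
final class FrameGate {
    private(set) var isProcessing = false
    private(set) var processedCount = 0
    private(set) var droppedCount = 0

    /// Returns true if the caller may start processing a new frame.
    func tryBegin() -> Bool {
        guard !isProcessing else {
            droppedCount += 1
            return false
        }
        isProcessing = true
        return true
    }

    /// Marks the current frame as finished and reopens the gate.
    func end() {
        isProcessing = false
        processedCount += 1
    }
}

// Simulate frames arriving while an earlier one is still being processed.
let gate = FrameGate()
let first = gate.tryBegin()   // starts processing
let second = gate.tryBegin()  // dropped
let third = gate.tryBegin()   // dropped
gate.end()
let fourth = gate.tryBegin()  // the gate is open again
gate.end()
print(first, second, third, fourth, gate.processedCount, gate.droppedCount)
// prints "true false false true 2 2"
```

Dropping frames rather than queueing them keeps latency bounded: the next processed frame is always a recent one.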

Because both process(frame:) and isProcessing are isolated to the main actor, all reads and writes of the flag are serialized, so we don’t need any additional synchronization between threads.

Now let’s create PointCloud.swift with an actor that processes each ARFrame.

An ARFrame gives us three CVPixelBuffers, each with a different format. The depth and confidence maps come from the frame’s ARDepthData (sceneDepth or smoothedSceneDepth), while capturedImage is the camera image itself:

- depthMap: a Float32 buffer
- confidenceMap: a UInt8 buffer
- capturedImage: a pixel buffer in YCbCr format

You can think of the depth map as a LiDAR-captured photo in which each pixel contains the distance (in meters) from the camera to a surface. It covers the same view as the captured camera image, only at a lower resolution. Our goal is to take the color from the captured image and assign it to the corresponding depth-map pixel.

The confidence map has the same resolution as the depth map and stores, for each pixel, the confidence level of its depth measurement: 0, 1, or 2, matching ARConfidenceLevel.low, .medium, and .high.

import ARKit

actor PointCloud {
    func process(frame: ARFrame) async {
        if let depth = (frame.smoothedSceneDepth ?? frame.sceneDepth),
           let depthBuffer = PixelBuffer<Float32>(pixelBuffer: depth.depthMap),
           let confidenceMap = depth.confidenceMap,
           let confidenceBuffer = PixelBuffer<UInt8>(pixelBuffer: confidenceMap),
           let imageBuffer = YCBCRBuffer(pixelBuffer: frame.capturedImage) {
            // process buffers
        }
    }
}

Accessing pixel data from CVPixelBuffer

To read pixel data from a CVPixelBuffer, we’ll create a small wrapper class per buffer format. The depth and confidence maps share the same simple structure, a single plane of fixed-size values, so one generic class can handle both; the color image gets its own class.

Depth and confidence buffers

The core idea behind reading from a CVPixelBuffer is simple: we lock its base address so the CPU can safely access the pixel memory (read-only in our case), and then read the memory directly by computing the byte offset of the pixel we want. Because rows can be padded, the row stride must be taken from bytesPerRow rather than computed from the width:

==byteOffset = y * bytesPerRow + x * bytesPerPixel==
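To see why bytesPerRow matters, here is a small pure-Swift sketch that needs no CoreVideo: it fakes a row-padded Float32 buffer with an array and reads one pixel with the byte-offset formula. `readPixel` and the 16-byte row padding are illustrative assumptions; a real CVPixelBuffer reports its own bytesPerRow.

```swift
/// Hypothetical helper: reads a value of type T at pixel (x, y) from a raw buffer
/// whose rows are `bytesPerRow` bytes apart (rows may be padded past width * stride).
func readPixel<T>(_ base: UnsafeRawPointer, x: Int, y: Int,
                  bytesPerRow: Int, as type: T.Type) -> T {
    let byteOffset = y * bytesPerRow + x * MemoryLayout<T>.stride
    return base.load(fromByteOffset: byteOffset, as: T.self)
}

// Simulate a 3 x 2 Float32 image whose rows are padded to 16 bytes (4 floats).
let width = 3, height = 2, bytesPerRow = 16
let floatsPerRow = bytesPerRow / MemoryLayout<Float32>.stride
var storage = [Float32](repeating: -1, count: floatsPerRow * height)  // -1 marks padding
for y in 0..<height {
    for x in 0..<width {
        storage[y * floatsPerRow + x] = Float32(y * 10 + x)
    }
}

let value = storage.withUnsafeBytes { raw in
    readPixel(raw.baseAddress!, x: 2, y: 1, bytesPerRow: bytesPerRow, as: Float32.self)
}
print(value) // prints "12.0"
```

Computing the offset as `y * width + x` instead would land on the padding element of row 0 here, which is exactly the kind of bug bytesPerRow avoids.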

import CoreVideo
import simd

// struct for storing a CVPixelBuffer's resolution
struct Size {
    let width: Int
    let height: Int

    var asFloat: simd_float2 {
        simd_float2(Float(width), Float(height))
    }
}

final class PixelBuffer<T> {
    let size: Size
    let bytesPerRow: Int

    private let pixelBuffer: CVPixelBuffer
    private let baseAddress: UnsafeMutableRawPointer

    init?(pixelBuffer: CVPixelBuffer) {
        self.pixelBuffer = pixelBuffer

        // lock the buffer while we are reading its values (unlocked in deinit)
        CVPixelBufferLockBaseAddress(pixelBuffer, .readOnly)

        // depth and confidence maps are non-planar buffers, so use the plain
        // base address; the per-plane variant returns nil for non-planar buffers
        guard let baseAddress = CVPixelBufferGetBaseAddress(pixelBuffer) else {
            CVPixelBufferUnlockBaseAddress(pixelBuffer, .readOnly)
            return nil
        }
        self.baseAddress = baseAddress

        size = .init(width: CVPixelBufferGetWidth(pixelBuffer),
                     height: CVPixelBufferGetHeight(pixelBuffer))
        bytesPerRow = CVPixelBufferGetBytesPerRow(pixelBuffer)
    }

    // obtain the value at the specified pixel coordinates
    func value(x: Int, y: Int) -> T {
        // jump to the start of row y, then index x elements of type T into it
        let rowPtr = baseAddress.advanced(by: y * bytesPerRow)
        return rowPtr.assumingMemoryBound(to: T.self)[x]
    }

    deinit {
        CVPixelBufferUnlockBaseAddress(pixelBuffer, .readOnly)
    }
}

YCbCr captured image buffer

Extracting color values from a pixel buffer in YCbCr format requires a bit more effort compared to working with typical RGB buffers. The YCbCr color space separates luminance (Y) from chrominance (Cb and Cr), which means we must convert these components into the more familiar RGB format.

To achieve this, we first access the two planes of the pixel buffer. The buffer is biplanar 4:2:0: plane 0 holds one Y (luma) byte per pixel, while plane 1 holds interleaved Cb/Cr byte pairs at half the horizontal and vertical resolution, so each Cb/Cr pair is shared by a 2 × 2 block of pixels. Once we have the values from their respective planes, we convert them to RGB using the standard BT.601 equations: the chroma values are centered by subtracting 128, and the components are then combined with fixed coefficients to produce the final red, green, and blue values.

final class YCBCRBuffer {
    let size: Size

    private let pixelBuffer: CVPixelBuffer
    private let yPlane: UnsafeMutableRawPointer
    private let cbCrPlane: UnsafeMutableRawPointer
    private let yBytesPerRow: Int
    private let cbCrBytesPerRow: Int

    init?(pixelBuffer: CVPixelBuffer) {
        self.pixelBuffer = pixelBuffer

        CVPixelBufferLockBaseAddress(pixelBuffer, .readOnly)

        // plane 0: luma (Y), plane 1: interleaved chroma (Cb, Cr)
        guard let yPlane = CVPixelBufferGetBaseAddressOfPlane(pixelBuffer, 0),
              let cbCrPlane = CVPixelBufferGetBaseAddressOfPlane(pixelBuffer, 1) else {
            CVPixelBufferUnlockBaseAddress(pixelBuffer, .readOnly)
            return nil
        }
        self.yPlane = yPlane
        self.cbCrPlane = cbCrPlane

        size = .init(width: CVPixelBufferGetWidth(pixelBuffer),
                     height: CVPixelBufferGetHeight(pixelBuffer))

        // cache the row strides instead of querying them for every pixel
        yBytesPerRow = CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, 0)
        cbCrBytesPerRow = CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, 1)
    }

    func color(x: Int, y: Int) -> simd_float4 {
        // one Y byte per pixel; one Cb/Cr byte pair per 2 x 2 block of pixels
        let yIndex = y * yBytesPerRow + x
        let uvIndex = y / 2 * cbCrBytesPerRow + x / 2 * 2

        // extract the Y, Cb, and Cr values
        let yValue = yPlane.load(fromByteOffset: yIndex, as: UInt8.self)
        let cbValue = cbCrPlane.load(fromByteOffset: uvIndex, as: UInt8.self)
        let crValue = cbCrPlane.load(fromByteOffset: uvIndex + 1, as: UInt8.self)

        // convert full-range BT.601 YCbCr to RGB (ARKit's capturedImage
        // uses kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
        let luma = Float(yValue)
        let cb = Float(cbValue) - 128
        let cr = Float(crValue) - 128

        let r = luma + 1.402 * cr
        let g = luma - 0.344136 * cb - 0.714136 * cr
        let b = luma + 1.772 * cb

        // clamp to [0, 255] and normalize the RGB components to [0, 1]
        return simd_float4(max(0, min(255, r)) / 255.0,
                           max(0, min(255, g)) / 255.0,
                           max(0, min(255, b)) / 255.0, 1.0)
    }

    deinit {
        CVPixelBufferUnlockBaseAddress(pixelBuffer, .readOnly)
    }
}
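The chroma indexing in color(x:y:) (`y / 2` and `x / 2 * 2`) follows directly from the 4:2:0 layout. This small pure-Swift sketch makes the sharing visible; `ycbcrOffsets` is a hypothetical helper, and the 1920-byte row sizes are assumptions for a 1920-pixel-wide image that ignore any row padding.

```swift
/// Hypothetical helper: byte offsets of the Y, Cb, and Cr samples for pixel (x, y)
/// in a 4:2:0 biplanar buffer. Plane 0 has one Y byte per pixel; plane 1 has
/// interleaved Cb, Cr byte pairs, one pair per 2 x 2 block of pixels.
func ycbcrOffsets(x: Int, y: Int, yBytesPerRow: Int, cbCrBytesPerRow: Int)
    -> (y: Int, cb: Int, cr: Int) {
    let yIndex = y * yBytesPerRow + x
    let cbIndex = (y / 2) * cbCrBytesPerRow + (x / 2) * 2
    return (yIndex, cbIndex, cbIndex + 1)
}

// Assumed 1920-pixel-wide image: 1920 Y bytes per row, and 960 Cb/Cr pairs
// (1920 bytes) per chroma row.
let topLeft = ycbcrOffsets(x: 0, y: 0, yBytesPerRow: 1920, cbCrBytesPerRow: 1920)
let diagonal = ycbcrOffsets(x: 1, y: 1, yBytesPerRow: 1920, cbCrBytesPerRow: 1920)
let nextBlock = ycbcrOffsets(x: 2, y: 0, yBytesPerRow: 1920, cbCrBytesPerRow: 1920)

// (0, 0) and (1, 1) have different Y offsets but share one Cb/Cr pair;
// (2, 0) starts the next 2 x 2 block, so it reads the next pair.
print(topLeft, diagonal, nextBlock)
```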

Reading depth and color

Now that we've set up the necessary buffers, we can return to our core processing function within the PointCloud actor. The next step is to make a structure for our vertex data, which will include both the 3D position and color for each point.

struct Vertex {
    let position: SCNVector3
    let color: simd_float4
}

Next, we iterate over every pixel in the depth map and read its confidence value and color.

We keep only points with high confidence that lie within 2 meters of the camera, since points captured at greater distances tend to be less accurate due to the nature of the depth-sensing technology.
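The filter rule can be exercised without a device. This sketch uses a hypothetical `Confidence` enum that mirrors the raw values of ARKit’s ARConfidenceLevel (low = 0, medium = 1, high = 2) and a hypothetical `shouldKeep` predicate; the sample pairs are made up. Each rejected pixel is skipped with `continue`, so the remaining pixels are still visited.

```swift
/// Hypothetical stand-in mirroring ARConfidenceLevel's raw values.
enum Confidence: Int {
    case low = 0, medium = 1, high = 2
}

/// Hypothetical predicate: keep a point only if its confidence is high
/// and it lies within `maxDepth` meters of the camera.
func shouldKeep(confidenceRaw: UInt8, depth: Float, maxDepth: Float = 2) -> Bool {
    guard let confidence = Confidence(rawValue: Int(confidenceRaw)) else { return false }
    return confidence == .high && depth <= maxDepth
}

// Made-up (confidence, depth in meters) samples for five pixels.
let samples: [(UInt8, Float)] = [(2, 0.8), (1, 1.0), (2, 3.5), (2, 1.9), (0, 0.5)]
var kept = 0
for (confidence, depth) in samples {
    // a rejected pixel only skips itself; the loop continues with the next one
    guard shouldKeep(confidenceRaw: confidence, depth: depth) else { continue }
    kept += 1
}
print(kept) // prints "2"
```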

The depth map and the captured image have different resolutions, so to find the color for a depth pixel we first normalize its coordinates to the [0, 1) range and then scale them by the captured image size.
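Here is a pure-Swift sketch of the same normalize-then-scale mapping, with clamping added as a safety net against rounding past the last row or column. `imagePixel` is a hypothetical helper, and the 256 × 192 depth map and 1920 × 1440 camera image are commonly reported sizes assumed for illustration; in the app, read the real sizes from the buffers.

```swift
/// Hypothetical helper: maps a depth-map pixel to the matching camera-image pixel
/// by normalizing to [0, 1) and scaling, then clamping to the image bounds.
func imagePixel(depthX: Int, depthY: Int,
                depthSize: (width: Int, height: Int),
                imageSize: (width: Int, height: Int)) -> (x: Int, y: Int) {
    let nx = Double(depthX) / Double(depthSize.width)
    let ny = Double(depthY) / Double(depthSize.height)
    let x = Int((nx * Double(imageSize.width)).rounded())
    let y = Int((ny * Double(imageSize.height)).rounded())
    return (min(x, imageSize.width - 1), min(y, imageSize.height - 1))
}

// Assumed sizes: 256 x 192 depth map, 1920 x 1440 captured image.
let depthSize = (width: 256, height: 192)
let imageSize = (width: 1920, height: 1440)
let centerPixel = imagePixel(depthX: 128, depthY: 96, depthSize: depthSize, imageSize: imageSize)
print(centerPixel) // prints "(x: 960, y: 720)"
```

With these sizes each depth pixel covers a 7.5 × 7.5 block of image pixels, so sampling a single color pixel per depth pixel is a simplification.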

func process(frame: ARFrame) async {
    guard let depthData = (frame.smoothedSceneDepth ?? frame.sceneDepth),
          let depthBuffer = PixelBuffer<Float32>(pixelBuffer: depthData.depthMap),
          let confidenceMap = depthData.confidenceMap,
          let confidenceBuffer = PixelBuffer<UInt8>(pixelBuffer: confidenceMap),
          let imageBuffer = YCBCRBuffer(pixelBuffer: frame.capturedImage) else { return }

    let imageSize = imageBuffer.size.asFloat

    // iterate through the pixels of the depth buffer
    for row in 0..<depthBuffer.size.height {
        for col in 0..<depthBuffer.size.width {
            // get the confidence value
            let confidenceRawValue = Int(confidenceBuffer.value(x: col, y: row))
            guard let confidence = ARConfidenceLevel(rawValue: confidenceRawValue) else {
                continue
            }

            // keep only high-confidence points
            if confidence != .high { continue }

            // get the distance (in meters) for this pixel
            let depth = depthBuffer.value(x: col, y: row)

            // skip points farther than 2 m and move on to the next pixel
            if depth > 2 { continue }

            // map the depth pixel to the corresponding captured-image pixel
            let normalizedCoord = simd_float2(Float(col) / Float(depthBuffer.size.width),
                                              Float(row) / Float(depthBuffer.size.height))
            let pixelRow = min(Int(round(normalizedCoord.y * imageSize.y)), imageBuffer.size.height - 1)
            let pixelColumn = min(Int(round(normalizedCoord.x * imageSize.x)), imageBuffer.size.width - 1)

            // the color is combined with the point's 3D position in the next step
            let color = imageBuffer.color(x: pixelColumn, y: pixelRow)
        }
    }
}

Converting point to 3D scene coordinates

We start by computing the point’s 2D pixel coordinates in the captured image, as a homogeneous vector with z = 1:

let screenPoint = simd_float3(normalizedCoord * imageSize, 1)

Multiplying this vector by the inverse of the camera intrinsics matrix and then by the depth value converts it into a 3D point in the camera’s local coordinate space:

let localPoint = simd_inverse(frame.camera.intrinsics) * screenPoint * depth
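Expanded by hand, the inverse-intrinsics multiplication is the pinhole camera model. This sketch assumes the standard intrinsics layout (focal lengths fx, fy and principal point cx, cy, in pixels of the captured image) and uses made-up values; `Intrinsics` and `unproject` are hypothetical names, not ARKit API. The resulting point follows the image convention (+X right, +Y down, +Z into the scene).

```swift
/// Hypothetical container for the entries of K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]].
struct Intrinsics {
    let fx: Float, fy: Float, cx: Float, cy: Float
}

/// Hypothetical helper equivalent to inverse(K) * (u, v, 1) * depth.
func unproject(u: Float, v: Float, depth: Float, k: Intrinsics) -> SIMD3<Float> {
    SIMD3((u - k.cx) / k.fx * depth,
          (v - k.cy) / k.fy * depth,
          depth)
}

// Made-up intrinsics for a 1920 x 1440 image (not real device values).
let intrinsics = Intrinsics(fx: 1500, fy: 1500, cx: 960, cy: 720)
let onAxis = unproject(u: 960, v: 720, depth: 2, k: intrinsics)      // at the principal point
let offCenter = unproject(u: 1260, v: 720, depth: 2, k: intrinsics)  // 300 px to the right
print(onAxis, offCenter)
```

A pixel at the principal point lands on the optical axis, and a pixel 300 px to the right at 2 m lands 300 / 1500 × 2 = 0.4 m to the side.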

The iPhone camera sensor is not aligned with the phone’s display: when you hold an iPhone in portrait, the camera still delivers an image in landscape-right orientation, so points have to be rotated to match the interface orientation. In addition, the point we just computed follows the image convention (+Y down, +Z pointing into the scene), whereas ARKit’s camera space has +Y up and the camera looking along -Z, so we also need to flip the Y and Z axes before converting the point to world coordinates.

Let’s build a transformation matrix for this.

func makeRotateToARCameraMatrix(orientation: UIInterfaceOrientation) -> matrix_float4x4 {
    // flip Y and Z axes to align with ARKit's camera coordinate system
    let flipYZ = matrix_float4x4(
        [1, 0, 0, 0],
        [0, -1, 0, 0],
        [0, 0, -1, 0],
        [0, 0, 0, 1]
    )

    // get the rotation angle in radians based on the display orientation
    let rotationAngle: Float = switch orientation {
    case .landscapeLeft: .pi
    case .portrait: .pi / 2
    case .portraitUpsideDown: -.pi / 2
    default: 0
    }

    // create a rotation matrix around the Z axis
    let quaternion = simd_quaternion(rotationAngle, simd_float3(0, 0, 1))
    let rotationMatrix = matrix_float4x4(quaternion)

    // combine the flip and rotation matrices
    return flipYZ * rotationMatrix
}

let rotateToARCamera = makeRotateToARCameraMatrix(orientation: .portrait)

// the resulting transformation matrix for converting a point from local camera coordinates to world coordinates
let cameraTransform = frame.camera.viewMatrix(for: .portrait).inverse * rotateToARCamera
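To see what rotateToARCamera does to a single point, here is a dependency-light sketch that uses the standard library’s SIMD3 and explicit formulas instead of simd matrices; the helper names are hypothetical. It applies the rotation first and the Y/Z flip second, which is the order `flipYZ * rotationMatrix` implies when the matrix multiplies a column vector.

```swift
import Foundation

/// Hypothetical helper: rotates a point counter-clockwise around the Z axis by `angle` radians.
func rotateAroundZ(_ p: SIMD3<Float>, by angle: Float) -> SIMD3<Float> {
    let c = cos(angle), s = sin(angle)
    return SIMD3(c * p.x - s * p.y, s * p.x + c * p.y, p.z)
}

/// Hypothetical helper: negates Y and Z, like the flipYZ matrix.
func flipYZ(_ p: SIMD3<Float>) -> SIMD3<Float> {
    SIMD3(p.x, -p.y, -p.z)
}

/// Same order as `flipYZ * rotationMatrix`: rotate first, then flip.
func rotateToARCamera(_ p: SIMD3<Float>, angle: Float) -> SIMD3<Float> {
    flipYZ(rotateAroundZ(p, by: angle))
}

// A point 2 m straight ahead in the image convention (+Z forward)...
let ahead = rotateToARCamera(SIMD3(0, 0, 2), angle: .pi / 2)
// ...ends up on -Z, the direction an ARKit camera looks.
let offAxis = rotateToARCamera(SIMD3(1, 0, 1), angle: .pi / 2)
print(ahead, offAxis)
```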

Finally, we get the world-space point by multiplying the local point, extended to homogeneous coordinates with w = 1, by this transformation matrix and then dividing by w.

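// convert the local camera-space 3D point into world space using the camera's transformation matrix
let worldPoint = cameraTransform * simd_float4(localPoint, 1)
let resultPosition = worldPoint / worldPoint.w

// combine the world position with the color sampled earlier
let vertex = Vertex(position: SCNVector3(x: resultPosition.x, y: resultPosition.y, z: resultPosition.z),
                    color: color)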
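This last step is an ordinary homogeneous transform. A minimal pure-Swift sketch, assuming column-major storage like simd_float4x4 and a made-up camera pose (`Matrix4` and the 1.5 m camera height are illustrative): the point gets w = 1, is multiplied by the matrix, and is divided by w. For a rigid camera pose, w stays 1, so the division is a safeguard rather than a necessity.

```swift
/// Hypothetical 4 x 4 matrix stored as four columns, like simd_float4x4.
struct Matrix4 {
    var columns: [SIMD4<Float>]

    /// Column-major matrix-vector product: the sum of column_i * v[i].
    static func * (m: Matrix4, v: SIMD4<Float>) -> SIMD4<Float> {
        var result = m.columns[0] * v.x
        result += m.columns[1] * v.y
        result += m.columns[2] * v.z
        result += m.columns[3] * v.w
        return result
    }
}

// Made-up camera-to-world transform: camera 1.5 m above the world origin, no rotation.
let cameraToWorld = Matrix4(columns: [
    SIMD4<Float>(1, 0, 0, 0),
    SIMD4<Float>(0, 1, 0, 0),
    SIMD4<Float>(0, 0, 1, 0),
    SIMD4<Float>(0, 1.5, 0, 1),  // the translation lives in the last column
])

let localPoint = SIMD3<Float>(0.2, 0, -2)                 // 2 m in front of the camera (-Z)
let world = cameraToWorld * SIMD4<Float>(localPoint, 1)  // w = 1 marks a position
let position = SIMD3(world.x, world.y, world.z) / world.w
print(position)
```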

Conclusion

In the first part, we established the foundation for creating a point cloud using ARKit and LiDAR. We explored how to obtain depth data from the LiDAR sensor alongside corresponding images, transforming each pixel into a colored point in 3D space. We also filtered points based on confidence levels to ensure data accuracy.

In the second part, we will examine how to merge the captured points into a unified point cloud, visualize it in our AR view, and export it to the .PLY file format for further use.


Ilia Kuznetsov | Sciencx (2024-10-29T13:09:57+00:00) ARKit & LiDAR: Building Point Clouds in Swift (part 1). Retrieved from https://www.scien.cx/2024/10/29/arkit-lidar-building-point-clouds-in-swift-part-1/
