This content originally appeared on HackerNoon and was authored by Economic Hedging Technology


Methodology

Successfully implementing a DRL agent for the American put option hedging task requires three main steps: designing the DRL agent, setting up the training procedure, and setting up the testing procedure. Designing the agent involves constructing the neural networks, choosing the hyperparameters, and formulating the state-space, the action-space, and the reward function. The training procedure involves the data-generation processes that supply the agent with adequate state and reward information. Finally, forming testing scenarios requires acquiring data and developing a benchmark comparator for the DRL agent, such as the Delta hedging method. This section first details the DRL agent design and then the training and testing procedures for all experiments.
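This section does not spell out how the Delta hedging benchmark is computed. As a point of reference, the sketch below assumes it uses the standard Black-Scholes (BS) Delta of a European put, $\Delta_{\text{put}} = N(d_1) - 1$. The function names and example parameters are illustrative only and are not taken from the paper. For a put, this Delta lies in $[-1, 0]$, a short share position, which is the same range covered by the agent's scaled actor output.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_put_delta(spot: float, strike: float, tau: float, rate: float, vol: float) -> float:
    """Black-Scholes Delta of a European put (assumed benchmark, not the paper's code).

    spot: current asset price, strike: strike price, tau: time-to-maturity in years,
    rate: continuously compounded risk-free rate, vol: annualised volatility.
    """
    if tau <= 0.0:
        # At expiry the put Delta is -1 if in the money and 0 otherwise.
        return -1.0 if spot < strike else 0.0
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * tau) / (vol * math.sqrt(tau))
    return norm_cdf(d1) - 1.0
```

Because this Delta comes from a European pricing model, it is only an approximation when applied to an American put. That gap is what a DRL hedger trained directly on American option dynamics aims to close.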

DRL AGENT DESIGN

Because policy-based DRL methods allow for continuous action-spaces, this study employs deep deterministic policy gradient (DDPG). The actor and critic networks are both fully connected NNs with two hidden layers of 64 nodes each, and in both networks the hidden layers use the rectified linear unit (ReLU) as the non-linear activation function. The actor network uses a sigmoid output function to map actions to the range [0, 1], while the critic network uses a linear output. The actor output is then multiplied by −1, because this study hedges American put options, which require shares to be shorted. The resulting hedge position therefore lies in [−1, 0].
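The following dependency-free Python sketch shows the forward passes of the architecture described above. Both networks have two fully connected hidden layers of 64 ReLU units. The actor ends in a sigmoid whose output is multiplied by −1, and the critic ends in a linear output. Several details are assumptions rather than the paper's choices: the initialisation scheme, feeding the action to the critic by concatenating it with the state at the input layer, and the class names `MLP`, `Actor`, and `Critic`. Training, replay buffers, and target networks are omitted.

```python
import math
import random

HIDDEN = 64  # two hidden layers of 64 nodes each, as described in the text


def init_layer(n_in, n_out, rng):
    """Hypothetical uniform initialisation; the paper's scheme is not stated here."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Affine map W x + b for one fully connected layer."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, z) for z in v]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class MLP:
    """input -> 64 (ReLU) -> 64 (ReLU) -> n_out (linear)."""

    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        self.layers = [
            init_layer(n_in, HIDDEN, rng),
            init_layer(HIDDEN, HIDDEN, rng),
            init_layer(HIDDEN, n_out, rng),
        ]

    def forward(self, x):
        h = relu(dense(x, self.layers[0]))
        h = relu(dense(h, self.layers[1]))
        return dense(h, self.layers[2])


class Actor:
    def __init__(self, state_dim, seed=0):
        self.net = MLP(state_dim, 1, seed)

    def act(self, state):
        # Sigmoid maps to [0, 1]; multiplying by -1 yields a short hedge in [-1, 0].
        return -sigmoid(self.net.forward(state)[0])


class Critic:
    def __init__(self, state_dim, seed=1):
        # Assumption: the action is concatenated with the state at the input layer.
        self.net = MLP(state_dim + 1, 1, seed)

    def q_value(self, state, action):
        return self.net.forward(list(state) + [action])[0]  # linear output
```

For example, `Actor(3).act([1.0, 0.5, 0.0])` returns a holding in [−1, 0] for a three-dimensional state. Passing that holding to `Critic(3).q_value(...)` returns an unbounded scalar Q-value estimate.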


The state-space for the DRL agent in this study comprises the current asset price, the time-to-maturity, and the current holding (i.e., the previous action). This aligns with the state-spaces used in Kolm and Ritter (2019), Cao et al. (2021), Xiao, Yao, and Zhou (2021), and Assa, Kenyon, and Zhang (2021). This study does not include the BS Delta in the state-space. In this it agrees with Cao et al. (2021), Kolm and Ritter (2019), and Du et al. (2020) that adding the BS Delta is an unnecessary augmentation of the state. Moreover, including the BS Delta in the state may hinder the effectiveness of a DRL hedger for American options, as the BS model is derived for European options.
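The three-component state can be assembled along a single price path as sketched below. This is an illustration under assumptions: rebalancing dates are equally spaced, time is measured in years, the hedge starts with zero shares, and the names `HedgingState` and `episode_states` are hypothetical. The key point from the text is that the holding in each state is simply the action chosen at the previous step, and that no BS Delta is appended.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HedgingState:
    price: float             # current underlying asset price S_t
    time_to_maturity: float  # T - t (years, assumed unit)
    holding: float           # current hedge position = previous action

    def as_vector(self) -> List[float]:
        # Three inputs only; the BS Delta is deliberately not included.
        return [self.price, self.time_to_maturity, self.holding]


def episode_states(prices: List[float], maturity: float, actions: List[float]) -> List[HedgingState]:
    """Build the state observed at each (assumed equally spaced) rebalancing date."""
    dt = maturity / len(prices)
    holding = 0.0  # assumption: the hedge starts with no shares
    states = []
    for i, (price, action) in enumerate(zip(prices, actions)):
        states.append(HedgingState(price, maturity - i * dt, holding))
        holding = action  # the chosen action becomes the next step's holding
    return states
```

For instance, with actions `[-0.4, -0.55, -0.3]`, the second and third states carry holdings of −0.4 and −0.55, respectively.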

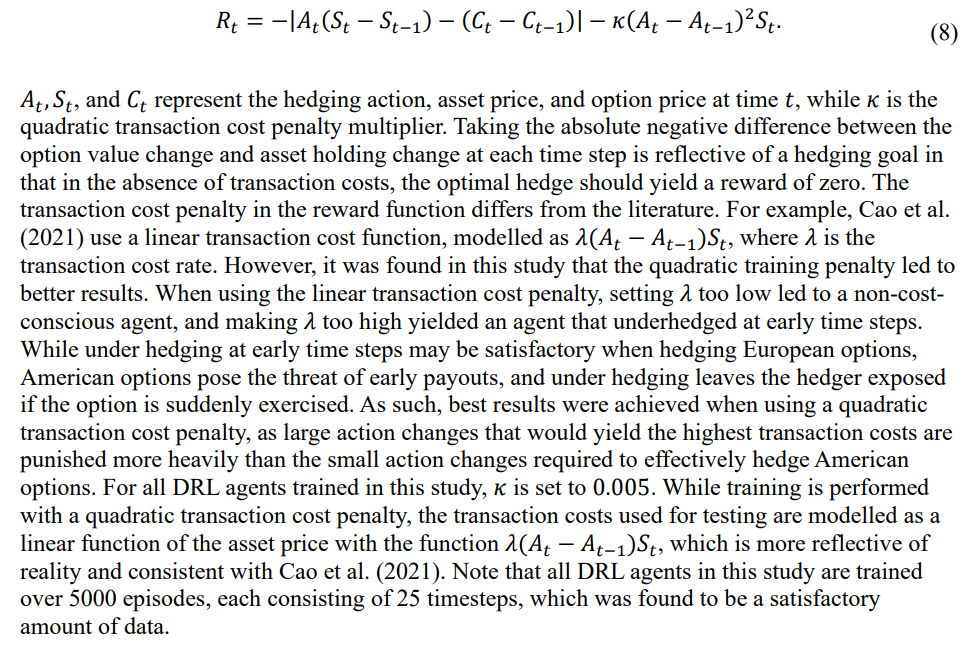

The reward formulation used in this study is given by

:::info Authors:

(1) Reilly Pickard, Department of Mechanical and Industrial Engineering, University of Toronto, Toronto, ON M5S 3G8, Canada (reilly.pickard@mail.utoronto.ca);

(2) Finn Wredenhagen, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(3) Julio DeJesus, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(4) Mario Schlener, Ernst & Young LLP, Toronto, ON, M5H 0B3, Canada;

(5) Yuri Lawryshyn, Department of Chemical Engineering and Applied Chemistry, University of Toronto, Toronto, ON M5S 3E5, Canada.

:::

:::info This paper is available on arXiv under the CC BY-NC-SA 4.0 Deed (Attribution-NonCommercial-ShareAlike 4.0 International) license.

:::


Economic Hedging Technology | Sciencx (2024-10-29T22:45:46+00:00) How to Design a Deep Reinforcement Learning Agent for American Put Option Hedging. Retrieved from https://www.scien.cx/2024/10/29/how-to-design-a-deep-reinforcement-learning-agent-for-american-put-option-hedging/
